Apr. 16, 2024

There is an expectation that implementing new and emerging Generative AI (GenAI) tools enhances the effectiveness and competitiveness of organizations. This belief is evidenced by current and planned investments in GenAI tools, especially by firms in knowledge-intensive industries such as finance, healthcare, and entertainment, among others. According to forecasts, enterprise spending on GenAI will double in 2024 and grow to $151.1 billion by 2027.

However, the path to realizing return on these investments remains somewhat ambiguous. While there is a history of efficiency and productivity gains from using computers to automate large-scale routine and structured tasks across various industries, knowledge and professional jobs have largely resisted automation. This stems from the nature of knowledge work, which often involves tasks that are unstructured and ill-defined. The specific input information, desired outputs, and/or the processes of converting inputs to outputs in such tasks are not known a priori, which consequently has limited computer applications in core knowledge tasks.

GenAI tools are changing the business landscape by expanding the range of tasks that can be performed and supported by computers, including idea generation, software development, and creative writing and content production. With their advanced human-like generative abilities, GenAI tools have the potential to significantly enhance the productivity and creativity of knowledge workers. However, the question of how to integrate GenAI into knowledge work to successfully harness these advantages remains a challenge. Dictating the parameters for GenAI usage via a top-down approach, such as through formal job designs or redesigns, is difficult, as it has been observed that individuals tend to adopt new digital tools in ways that are not fully predictable. This unpredictability is especially pertinent to the use of GenAI in supporting knowledge work for the following reasons.

Continue reading: How Different Fields Are Using GenAI to Redefine Roles

Reprinted from the Harvard Business Review, March 25, 2024

Maryam Alavi is the Elizabeth D. & Thomas M. Holder Chair & Professor of IT Management, Scheller College of Business, Georgia Institute of Technology.

News Contact

Lorrie Burroughs

Apr. 11, 2024

Georgia Tech’s College of Engineering has established an artificial intelligence supercomputer hub dedicated exclusively to teaching students. The initiative, the AI Makerspace, was launched in collaboration with NVIDIA. College leaders call it a digital sandbox for students to understand and use AI in the classroom.

Initially focusing on undergraduate students, the AI Makerspace aims to democratize access to computing resources typically reserved for researchers or technology companies. Students will access the cluster online as part of their coursework, deepening their AI skills through hands-on experience. The Makerspace will also better position students after graduation as they work with AI professionals and help shape the technology’s future applications.

“The launch of the AI Makerspace represents another milestone in Georgia Tech’s legacy of innovation and leadership in education,” said Raheem Beyah, dean of the College and Southern Company Chair. “Thanks to NVIDIA’s advanced technology and expertise, our students at all levels have a path to make significant contributions and lead in the rapidly evolving field of AI.”

News Contact

Jason Maderer, College of Engineering

Mar. 28, 2024

The Association for the Advancement of Artificial Intelligence released its Spring 2024 special issue of AI Magazine (Volume 45, Issue 1). This issue highlights research areas, applications, education initiatives, and public engagement led by the National Science Foundation (NSF) and USDA-NIFA-funded AI Research Institutes. It also delves into the background of the NSF’s National AI Research Institutes program, its role in shaping U.S. AI research strategy, and its future direction. Titled “Beneficial AI,” this issue showcases various AI research domains, all geared toward implementing AI for societal good.

The magazine, available as open access at https://onlinelibrary.wiley.com/toc/23719621/2024/45/1, was a one-year effort spearheaded and co-edited by Ashok Goel, director of the National AI-ALOE Institute and professor of computer science and human-centered computing at Georgia Tech, along with Chaohua Ou, AI-ALOE’s managing director and assistant director, Special Projects and Educational Initiatives Center for Teaching and Learning (CTL) at Georgia Tech, and Jim Donlon, the NSF's AI Institutes program director.

In this issue, insights into the future of AI and its societal impact are presented by the three NSF AI Institutes headquartered at Georgia Tech:

- AI-ALOE: National AI Institute for Adult Learning and Online Education

  - Introduction to the Special Issue by Ashok Goel and Chaohua Ou.

  - The magazine features AI-ALOE’s work in reskilling, upskilling, and workforce development, showcasing how AI is reshaping adult learning and online education to prepare our workforce for the future.

- AI4OPT: AI Institute for Advances in Optimization

  - An overview of AI4OPT’s efforts in combining AI and optimization to tackle societal challenges in various sectors. The institute aims to create AI-assisted optimization systems for efficiency improvements, uncertainty quantification, and sustainability challenges, while also offering educational pathways in AI for engineering.

- AI-CARING: National AI Institute for Collaborative Assistance and Responsive Interaction for Networked Groups

  - AI-CARING’s section in the magazine details its comprehensive approach to using AI technologies to address the complex needs of aging adults, while navigating ethical considerations and promoting education in the field.

  - Co-authors contributing to AI-CARING's section include Sonia Chernova, associate professor at Georgia Tech and director of AI-CARING, along with members Elizabeth Mynatt, Agata Rozga, Reid Simmons, and Holly Yanco, all involved in AI-CARING research and education.

The magazine provides a comprehensive overview of how each of the 25 institutes is shaping the future of AI research.

About 'AI Magazine'

AI Magazine is an artificial intelligence magazine by the Association for the Advancement of Artificial Intelligence (AAAI). It is published four times each year, and is sent to all AAAI members and subscribed to by most research libraries. Back issues are available online (issues less than 18 months old are only available to AAAI members).

The purpose of AI Magazine is to disseminate timely and informative articles that represent the current state of the art in AI and to keep its readers posted on AAAI-related matters. The articles are selected to appeal to readers engaged in research and applications across the broad spectrum of AI. Although some level of technical understanding is assumed by the authors, articles should be clear enough to inform readers who work outside the particular subject area.


News Contact

Breon Martin

AI Research Communications Manager

Georgia Tech

Mar. 19, 2024

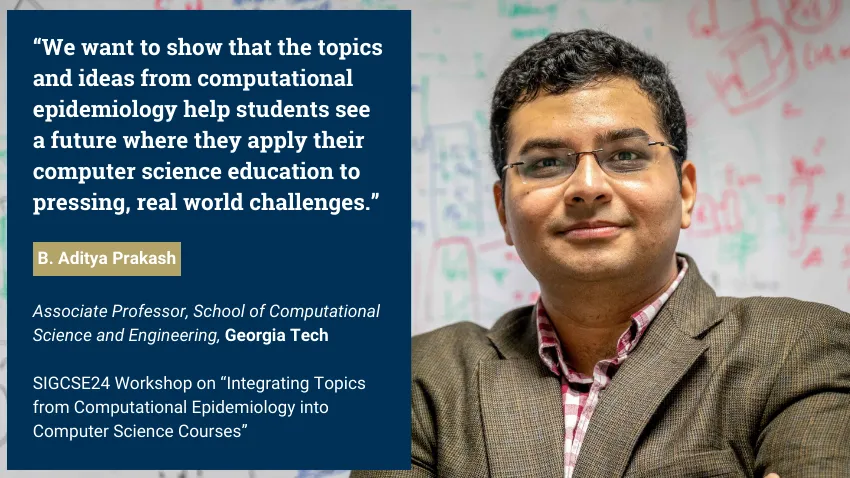

Computer science educators will soon gain valuable insights from computational epidemiology courses, like one offered at Georgia Tech.

B. Aditya Prakash is part of a research group that will host a workshop on how topics from computational epidemiology can enhance computer science classes.

These lessons would produce computer science graduates with improved skills in data science, modeling, simulation, artificial intelligence (AI), and machine learning (ML).

Because epidemics transcend the sphere of public health, these topics would groom computer scientists versed in issues from social, financial, and political domains.

The group’s virtual workshop takes place on March 20 at the technical symposium for the Special Interest Group on Computer Science Education (SIGCSE). SIGCSE is one of 38 special interest groups of the Association for Computing Machinery (ACM). ACM is the world’s largest scientific and educational computing society.

“We decided to do a tutorial at SIGCSE because we believe that computational epidemiology concepts would be very useful in general computer science courses,” said Prakash, an associate professor in the School of Computational Science and Engineering (CSE).

“We want to give an introduction to concepts, like what computational epidemiology is, and how topics, such as algorithms and simulations, can be integrated into computer science courses.”

Prakash kicks off the workshop with an overview of computational epidemiology. He will use examples from his CSE 8803: Data Science for Epidemiology course to introduce basic concepts.

This overview includes a survey of models used to describe behavior of diseases. Models serve as foundations that run simulations, ultimately testing hypotheses and making predictions regarding disease spread and impact.

Prakash will explain the different kinds of models used in epidemiology, such as traditional mechanistic models and more recent ML and AI based models.
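As a rough illustration of what a traditional mechanistic model can look like, the sketch below implements a basic SIR (susceptible, infected, recovered) compartmental model with simple forward Euler steps. The function name, parameter names, and parameter values are illustrative assumptions and are not taken from the workshop or from the CSE 8803 course.

```python
def simulate_sir(population, initial_infected, beta, gamma, days, dt=0.1):
    """Integrate a basic SIR model with forward Euler steps.

    beta  : assumed transmission rate per day (hypothetical value)
    gamma : assumed recovery rate per day (hypothetical value)
    Returns a list of (S, I, R) tuples, one per day, including day 0.
    """
    s = float(population - initial_infected)
    i = float(initial_infected)
    r = 0.0
    steps_per_day = int(round(1 / dt))
    history = [(s, i, r)]
    for _ in range(days):
        for _ in range(steps_per_day):
            # People move from S to I through contact, and from I to R by recovery.
            new_infections = beta * s * i / population * dt
            new_recoveries = gamma * i * dt
            s -= new_infections
            i += new_infections - new_recoveries
            r += new_recoveries
        history.append((s, i, r))
    return history


if __name__ == "__main__":
    trajectory = simulate_sir(population=1000, initial_infected=1,
                              beta=0.3, gamma=0.1, days=160)
    # Find the day on which the infected compartment is largest.
    peak_day = max(range(len(trajectory)), key=lambda d: trajectory[d][1])
    print(f"Peak infections on day {peak_day}: {trajectory[peak_day][1]:.0f}")
```

Running a simulation like this with different assumed rates is one simple way to test a hypothesis about how quickly a disease might spread; ML- and AI-based models typically learn such dynamics from data instead.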

Prakash’s discussion includes modeling used in recent epidemics like Covid-19, Zika, H1N1 influenza, and Ebola. He will also cover examples from the 19th and 20th centuries to illustrate how epidemiology has advanced using data science and computation.

“I strongly believe that data and computation have a very important role to play and that the future of epidemiology and public health is computational,” Prakash said.

“My course and these workshops give that viewpoint, and provide a broad framework of data science and computational thinking that can be useful.”

While humankind has studied disease transmission for millennia, computational epidemiology is a new approach to understanding how diseases can spread throughout communities.

The Covid-19 pandemic helped bring computational epidemiology to the forefront of public awareness. This exposure has led to greater demand for its application in computer science education.

Prakash joins Baltazar Espinoza and Natarajan Meghanathan in the workshop presentation. Espinoza is a research assistant professor at the University of Virginia. Meghanathan is a professor at Jackson State University.

The group is connected through Global Pervasive Computational Epidemiology (GPCE). GPCE is a partnership of 13 institutions aimed at advancing computational foundations, engineering principles, and technologies of computational epidemiology.

The National Science Foundation (NSF) supports GPCE through the Expeditions in Computing program. Prakash himself is principal investigator of other NSF-funded grants, and material from these projects appears in his workshop presentation.

[Related: Researchers to Lead Paradigm Shift in Pandemic Prevention with NSF Grant]

Outreach and broadening participation in computing are tenets of Prakash and GPCE because of how widely epidemics can reach. The SIGCSE workshop is one way that the group employs educational programs to train the next generation of scientists around the globe.

“Algorithms, machine learning, and other topics are fundamental graduate and undergraduate computer science courses nowadays,” Prakash said.

“Using examples like projects, homework questions, and data sets, we want to show that the topics and ideas from computational epidemiology help students see a future where they apply their computer science education to pressing, real world challenges.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Mar. 14, 2024

Schmidt Sciences has selected Kai Wang as one of 19 researchers to receive this year’s AI2050 Early Career Fellowship. In doing so, Wang becomes the first AI2050 fellow to represent Georgia Tech.

“I am excited about this fellowship because there are so many people at Georgia Tech using AI to create social impact,” said Wang, an assistant professor in the School of Computational Science and Engineering (CSE).

“I feel so fortunate to be part of this community and to help Georgia Tech bring more impact on society.”

AI2050 has allocated up to $5.5 million to support the cohort. Fellows receive up to $300,000 over two years and will join the Schmidt Sciences network of experts to advance their research in artificial intelligence (AI).

Wang’s AI2050 project centers on leveraging decision-focused AI to address challenges facing health and environmental sustainability. His goal is to strengthen and deploy decision-focused AI in collaboration with stakeholders to solve broad societal problems.

Wang’s approach to decision-focused AI integrates machine learning with optimization to train models based on decision quality. These models borrow knowledge from decision-making processes in high-stakes domains to improve overall performance.

Part of Wang’s approach is to work closely with non-profit and non-governmental organizations. This collaboration helps Wang better understand problems at the point-of-need and gain knowledge from domain experts to custom-build AI models.

“It is very important to me to see my research impacting human lives and society,” Wang said. “That reinforces my interest and motivation in using AI for social impact.”

[Related: Wang, New Faculty Bolster School’s Machine Learning Expertise]

This year’s cohort is only the second in the fellowship’s history. Wang joins a class that spans four countries, six disciplines, and seventeen institutions.

AI2050 commits $125 million over five years to identify and support talented individuals seeking solutions to ensure society benefits from AI. Last year’s AI2050 inaugural class of 15 early career fellows received $4 million.

The name AI2050 comes from the central motivating question that fellows answer through their projects:

It’s 2050. AI has turned out to be hugely beneficial to society. What happened? What are the most important problems we solved and the opportunities and possibilities we realized to ensure this outcome?

AI2050 encourages young researchers to pursue bold and ambitious work on difficult challenges and promising opportunities in AI. These projects involve research that is multidisciplinary, risky, and hard to fund through traditional means.

Schmidt Sciences, LLC is a 501(c)(3) non-profit organization supported by philanthropists Eric and Wendy Schmidt. Schmidt Sciences aims to accelerate and deepen understanding of the natural world and develop solutions to real-world challenges for public benefit.

Schmidt Sciences identifies under-supported or unconventional areas of exploration and discovery with potential for high impact. Focus areas include AI and advanced computing, astrophysics and space, biosciences, climate, and cross-science.

“I am most grateful for the advice from my mentors, colleagues, and collaborators, and of course AI2050 for choosing me for this prestigious fellowship,” Wang said. “The School of CSE has given me so much support, including career advice from junior and senior level faculty.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Mar. 05, 2024

Computer graphic simulations can represent natural phenomena such as tornados, underwater vortices, and liquid foams more accurately thanks to an advancement in creating artificial intelligence (AI) neural networks.

Working with a multi-institutional team of researchers, Georgia Tech Assistant Professor Bo Zhu combined computer graphic simulations with machine learning models to create enhanced simulations of known phenomena. The new benchmark could lead to researchers constructing representations of other phenomena that have yet to be simulated.

Zhu co-authored the paper “Fluid Simulation on Neural Flow Maps.” The Association for Computing Machinery’s Special Interest Group on Computer Graphics and Interactive Techniques (SIGGRAPH) gave it a best paper award in December at the SIGGRAPH Asia conference in Sydney, Australia.

The authors say the advancement could be as significant to computer graphic simulations as the introduction of neural radiance fields (NeRFs) was to computer vision in 2020. Introduced by researchers at the University of California-Berkeley, University of California-San Diego, and Google, NeRFs are neural networks that easily convert 2D images into 3D navigable scenes.

NeRFs have become a benchmark among computer vision researchers. Zhu and his collaborators hope their creation, neural flow maps, can do the same for simulation researchers in computer graphics.

“A natural question to ask is, can AI fundamentally overcome the traditional method’s shortcomings and bring generational leaps to simulation as it has done to natural language processing and computer vision?” Zhu said. “Simulation accuracy has been a significant challenge to computer graphics researchers. No existing work has combined AI with physics to yield high-end simulation results that outperform traditional schemes in accuracy.”

In computer graphics, simulation pipelines are the equivalent of neural networks and allow simulations to take shape. They are traditionally constructed through mathematical equations and numerical schemes.

Zhu said researchers have tried to design simulation pipelines with neural representations to construct more robust simulations. However, efforts to achieve higher physical accuracy have fallen short.

Zhu attributes the problem to the difficulty of matching the capacities of AI algorithms with the structures of traditional simulation pipelines. To solve the problem and allow machine learning to have influence, Zhu and his collaborators proposed a new framework that redesigns the simulation pipeline.

They named these new pipelines neural flow maps. The maps use machine learning models to store spatiotemporal data more efficiently. The researchers then align these models with their mathematical framework to achieve a higher accuracy than previous pipeline simulations.

Zhu said he does not believe machine learning should be used to replace traditional numerical equations. Rather, it should complement them to unlock new advantageous paradigms.

“Instead of trying to deploy modern AI techniques to replace components inside traditional pipelines, we co-designed the simulation algorithm and machine learning technique in tandem,” Zhu said.

“Numerical methods are not optimal because of their limited computational capacity. Recent AI-driven capacities have uplifted many of these limitations. Our task is redesigning existing simulation pipelines to take full advantage of these new AI capacities.”

In the paper, the authors state that these once-unattainable algorithmic designs could unlock new research possibilities in computer graphics.

Neural flow maps offer “a new perspective on the incorporation of machine learning in numerical simulation research for computer graphics and computational sciences alike,” the paper states.

“The success of Neural Flow Maps is inspiring for how physics and machine learning are best combined,” Zhu added.

News Contact

Nathan Deen, Communications Officer

Georgia Tech School of Interactive Computing

nathan.deen@cc.gatech.edu

Mar. 05, 2024

Georgia Tech is developing a new artificial intelligence (AI) based method to automatically find and stop threats to renewable energy and local generators for energy customers across the nation’s power grid.

The research will concentrate on protecting distributed energy resources (DER), which are most often used on low-voltage portions of the power grid. They can include rooftop solar panels, controllable electric vehicle chargers, and battery storage systems.

The cybersecurity concern is that an attacker could compromise these systems and use them to cause problems across the electrical grid, such as overloaded components and voltage fluctuations. These issues are a national security risk and could cause massive customer disruptions through blackouts and equipment damage.

“Cyber-physical critical infrastructures provide us with core societal functionalities and services such as electricity,” said Saman Zonouz, Georgia Tech associate professor and lead researcher for the project.

“Our multi-disciplinary solution, DerGuard, will leverage device-level cybersecurity, system-wide analysis, and AI techniques for automated vulnerability assessment, discovery, and mitigation in power grids with emerging renewable energy resources.”

The project’s long-term outcome will be a secure, AI-enabled power grid solution that can search for and protect the DERs on its network from cyberattacks.

“First, we will identify sets of critical DERs that, if compromised, would allow the attacker to cause the most trouble for the power grid,” said Daniel Molzahn, assistant professor at Georgia Tech.

“These DERs would then be prioritized for analysis and patching any identified cyber problems. Identifying the critical sets of DERs would require information about the DERs themselves, like size or location, and the power grid. This way, the utility company or other aggregator would be in the best position to use this tool.”

Additionally, the team will establish a testbed with industry partners. They will then develop and evaluate technology applications to better understand the behavior between people, devices, and network performance.

Along with Zonouz and Molzahn, Georgia Tech faculty member Wenke Lee, professor and John P. Imlay Jr. Chair in Software, will also lead the team of researchers from across the country.

The researchers are collaborating with the University of Illinois at Urbana-Champaign, the Department of Energy’s National Renewable Energy Laboratory, Idaho National Laboratory, the National Rural Electric Cooperative Association, and Fortiphyd Logic. Industry partners Network Perception, Siemens, and PSE&G will advise the researchers.

The work will be carried out at Georgia Tech’s Cyber-Physical Security Lab (CPSec) within the School of Cybersecurity and Privacy (SCP) and the School of Electrical and Computer Engineering (ECE).

The U.S. Department of Energy (DOE) announced a $45 million investment at the end of February for 16 cybersecurity initiatives. The projects will identify new cybersecurity tools and technologies designed to reduce cyber risks for energy infrastructure followed by tech-transfer initiatives. The DOE’s Office of Cybersecurity, Energy Security, and Emergency Response (CESER) awarded $4.2 million for the Institute’s DerGuard project.

News Contact

JP Popham, Communications Officer II

Georgia Tech School of Cybersecurity & Privacy

john.popham@cc.gatech.edu

Feb. 29, 2024

One of the hallmarks of humanity is language, but now, powerful new artificial intelligence tools also compose poetry, write songs, and have extensive conversations with human users. Tools like ChatGPT and Gemini are widely available at the tap of a button — but just how smart are these AIs?

A new multidisciplinary research effort co-led by Anna (Anya) Ivanova, assistant professor in the School of Psychology at Georgia Tech, alongside Kyle Mahowald, an assistant professor in the Department of Linguistics at the University of Texas at Austin, is working to uncover just that.

Their results could lead to innovative AIs that are more similar to the human brain than ever before — and also help neuroscientists and psychologists who are unearthing the secrets of our own minds.

The study, “Dissociating Language and Thought in Large Language Models,” is published this week in the scientific journal Trends in Cognitive Sciences. The work is already making waves in the scientific community: an earlier preprint of the paper, released in January 2023, has already been cited more than 150 times by fellow researchers. The research team has continued to refine the research for this final journal publication.

“ChatGPT became available while we were finalizing the preprint,” Ivanova explains. “Over the past year, we've had an opportunity to update our arguments in light of this newer generation of models, now including ChatGPT.”

Form versus function

The study focuses on large language models (LLMs), which include AIs like ChatGPT. LLMs are text prediction models: they create writing by predicting which word comes next in a sentence, much as a cell phone or an email service like Gmail might suggest the next word you want to write. However, while this type of language learning is extremely effective at creating coherent sentences, that doesn’t necessarily signify intelligence.
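To make the idea of text prediction concrete, here is a toy sketch that suggests the next word from simple word-pair (bigram) counts. It is only an analogy: real LLMs use large neural networks trained on vast amounts of text, not count tables, and the tiny corpus and function names below are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text, chosen only to keep the example small.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # prints "cat"
```

A predictor like this can produce plausible-looking word sequences without any understanding of cats or mats, which is the gap between producing fluent text and thinking that the study examines.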

Ivanova’s team argues that formal competence — creating a well-structured, grammatically correct sentence — should be differentiated from functional competence — answering the right question, communicating the correct information, or appropriately communicating. They also found that while LLMs trained on text prediction are often very good at formal skills, they still struggle with functional skills.

“We humans have the tendency to conflate language and thought,” Ivanova says. “I think that’s an important thing to keep in mind as we're trying to figure out what these models are capable of, because using that ability to be good at language, to be good at formal competence, leads many people to assume that AIs are also good at thinking — even when that's not the case.

“It's a heuristic that we developed when interacting with other humans over thousands of years of evolution, but now in some respects, that heuristic is broken,” Ivanova explains.

The distinction between formal and functional competence is also vital in rigorously testing an AI’s capabilities, Ivanova adds. Evaluations often don’t distinguish formal and functional competence, making it difficult to assess what factors are determining a model’s success or failure. The need to develop distinct tests is one of the team’s more widely accepted findings, and one that some researchers in the field have already begun to implement.

Creating a modular system

While the human tendency to conflate functional and formal competence may have hindered understanding of LLMs in the past, our human brains could also be the key to unlocking more powerful AIs.

Leveraging the tools of cognitive neuroscience while a postdoctoral associate at Massachusetts Institute of Technology (MIT), Ivanova and her team studied brain activity in neurotypical individuals via fMRI, and used behavioral assessments of individuals with brain damage to test the causal role of brain regions in language and cognition — both conducting new research and drawing on previous studies. The team’s results showed that human brains use different regions for functional and formal competence, further supporting this distinction in AIs.

“Our research shows that in the brain, there is a language processing module and separate modules for reasoning,” Ivanova says. This modularity could also serve as a blueprint for how to develop future AIs.

“Building on insights from human brains — where the language processing system is sharply distinct from the systems that support our ability to think — we argue that the language-thought distinction is conceptually important for thinking about, evaluating, and improving large language models, especially given recent efforts to imbue these models with human-like intelligence,” says Ivanova’s former advisor and study co-author Evelina Fedorenko, a professor of brain and cognitive sciences at MIT and a member of the McGovern Institute for Brain Research.

Developing AIs in the pattern of the human brain could help create more powerful systems — while also helping them dovetail more naturally with human users. “Generally, differences in a mechanism’s internal structure affect behavior,” Ivanova says. “Building a system that has a broad macroscopic organization similar to that of the human brain could help ensure that it might be more aligned with humans down the road.”

In the rapidly developing world of AI, these systems are ripe for experimentation. After the team’s preprint was published, OpenAI announced their intention to add plug-ins to their GPT models.

“That plug-in system is actually very similar to what we suggest,” Ivanova adds. “It takes a modularity approach where the language model can be an interface to another specialized module within a system.”

While the OpenAI plug-in system will include features like booking flights and ordering food, rather than cognitively inspired features, it demonstrates that “the approach has a lot of potential,” Ivanova says.

The future of AI — and what it can tell us about ourselves

While our own brains might be the key to unlocking better, more powerful AIs, these AIs might also help us better understand ourselves. “When researchers try to study the brain and cognition, it's often useful to have some smaller system where you can actually go in and poke around and see what's going on before you get to the immense complexity,” Ivanova explains.

However, since human language is unique, model or animal systems are more difficult to relate. That's where LLMs come in.

“There are lots of surprising similarities between how one would approach the study of the brain and the study of an artificial neural network” like a large language model, she adds. “They are both information processing systems that have biological or artificial neurons to perform computations.”

In many ways, the human brain is still a black box, but openly available AIs offer a unique opportunity to see the synthetic system's inner workings and modify variables, and explore these corresponding systems like never before.

“It's a really wonderful model that we have a lot of control over,” Ivanova says. “Neural networks — they are amazing.”

Along with Anna (Anya) Ivanova, Kyle Mahowald, and Evelina Fedorenko, the research team also includes Idan Blank (University of California, Los Angeles), as well as Nancy Kanwisher and Joshua Tenenbaum (Massachusetts Institute of Technology).

DOI: https://doi.org/10.1016/j.tics.2024.01.011

Researcher Acknowledgements

For helpful conversations, we thank Jacob Andreas, Alex Warstadt, Dan Roberts, Kanishka Misra, students in the 2023 UT Austin Linguistics 393 seminar, the attendees of the Harvard LangCog journal club, the attendees of the UT Austin Department of Linguistics SynSem seminar, Gary Lupyan, John Krakauer, members of the Intel Deep Learning group, Yejin Choi and her group members, Allyson Ettinger, Nathan Schneider and his group members, the UT NLL Group, attendees of the KUIS AI Talk Series at Koç University in Istanbul, Tom McCoy, attendees of the NYU Philosophy of Deep Learning conference and his group members, Sydney Levine, organizers and attendees of the ILFC seminar, and others who have engaged with our ideas. We also thank Aalok Sathe for help with document formatting and references.

Funding sources

Anna (Anya) Ivanova was supported by funds from the Quest Initiative for Intelligence. Kyle Mahowald acknowledges funding from NSF Grant 2104995. Evelina Fedorenko was supported by NIH awards R01-DC016607, R01-DC016950, and U01-NS121471 and by research funds from the Brain and Cognitive Sciences Department, McGovern Institute for Brain Research, and the Simons Foundation through the Simons Center for the Social Brain.

News Contact

Written by Selena Langner

Editor and Press Contact:

Jess Hunt-Ralston

Director of Communications

College of Sciences

Georgia Tech

Feb. 15, 2024

Artificial intelligence is starting to have the capability to improve both financial reporting and auditing. However, both companies and audit firms will only realize the benefits of AI if their people are open to the information generated by the technology. A new study forthcoming in Review of Accounting Studies attempts to understand how financial executives perceive and respond to the use of AI in both financial reporting and auditing.

In “How do Financial Executives Respond to the Use of Artificial Intelligence in Financial Reporting and Auditing?,” researchers surveyed financial executives (e.g., CFOs, controllers) to assess their perceptions of AI use in their companies’ financial reporting process, as well as the use of AI by their financial statement auditor. The study is authored by Nikki MacKenzie of the Georgia Tech Scheller College of Business, Cassandra Estep from Emory University, and Emily Griffith of the University of Wisconsin.

“We were curious about how financial executives would respond to AI-generated information as we often hear how the financial statements are a joint product of the company and their auditors. While we find that financial executives are rightfully cautious about the use of AI, we do not find that they are averse to its use as has been previously reported. In fact, a number of our survey respondents were excited about AI and see the significant benefits for their companies’ financial reporting process,” says MacKenzie.

Continue reading: The Use of AI by Financial Executives and Their Auditors

Reprinted from Forbes

News Contact

Lorrie Burroughs, Scheller College of Business

Feb. 12, 2024

If you’ve spent even an hour or two on ChatGPT or another generative AI model, you know that getting it to generate the content you want can be challenging and even downright frustrating.

Prompt engineering is the process of crafting and refining a specific, detailed prompt — one that will get you the response you need from a generative AI model. This kind of “coding in English” is a complex and tricky process. Fortunately, our faculty at the Ivan Allen College of Liberal Arts at Georgia Tech are engaged in teaching and research in this exciting emerging field.

I met with Assistant Professor Yeqing Kong in the School of Literature, Media, and Communication to talk about prompt engineering. She shared three approaches to crafting a prompt that she has collected from leading experts, and invited me to try them out on a prompt. Read the full story.

News Contact

Stephanie N. Kadel

Ivan Allen College of Liberal Arts
