Feb. 05, 2024

Scientists are always looking for better computer models that simulate the complex systems that define our world. To meet this need, a Georgia Tech workshop held Jan. 16 illustrated how new artificial intelligence (AI) research could usher in the next generation of scientific computing.

The workshop focused on applying AI technology to the optimization of complex systems. Presentations of climatological and electromagnetic simulations showed that these techniques resulted in more efficient and accurate computer modeling. The workshop also advanced AI research itself, since AI models typically are not well suited to optimization tasks.

The School of Computational Science and Engineering (CSE) and Institute for Data Engineering and Science jointly sponsored the workshop.

School of CSE Assistant Professors Peng Chen and Raphaël Pestourie led the workshop’s organizing committee and moderated the workshop’s two panel discussions. The duo also pitched their own research, highlighting the potential of scientific AI.

Chen shared his work on derivative-informed neural operators (DINOs). DINOs are a class of neural networks that use derivative information to approximate solutions of partial differential equations. The derivative enhancement results in neural operators that are more accurate and efficient.
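
The value of derivative information can be illustrated at toy scale. The sketch below is a heavily simplified stand-in, not a DINO: it fits a least-squares polynomial surrogate instead of training a neural operator, and the "expensive model" `target` with its derivative `target_grad` are made-up placeholders. It compares a fit to function values alone against a fit whose objective also penalizes derivative mismatch, using only three training samples.

```python
import numpy as np

def target(x):
    # Hypothetical stand-in for an expensive simulator output.
    return np.sin(2.0 * x)

def target_grad(x):
    # Its exact derivative, playing the role of derivative information.
    return 2.0 * np.cos(2.0 * x)

def design_rows(x, degree):
    powers = np.arange(degree + 1)
    values = x[:, None] ** powers                                  # x^k
    derivs = powers * x[:, None] ** np.clip(powers - 1, 0, None)   # k * x^(k-1)
    return values, derivs

def fit_surrogate(x, y, dy=None, degree=5, deriv_weight=1.0):
    """Least-squares polynomial surrogate. When dy is given, derivative
    residuals are added to the objective (the derivative-informed part)."""
    values, derivs = design_rows(x, degree)
    A, b = values, y
    if dy is not None:
        w = np.sqrt(deriv_weight)
        A = np.vstack([values, w * derivs])
        b = np.concatenate([y, w * dy])
    coef, *_ = np.linalg.lstsq(A, b, rcond=None)
    return coef

def predict(coef, x):
    values, _ = design_rows(x, len(coef) - 1)
    return values @ coef

x_train = np.array([-1.0, 0.0, 1.0])      # only three "expensive" samples
x_test = np.linspace(-1.0, 1.0, 201)

coef_plain = fit_surrogate(x_train, target(x_train))
coef_dino = fit_surrogate(x_train, target(x_train), dy=target_grad(x_train))

rmse_plain = np.sqrt(np.mean((predict(coef_plain, x_test) - target(x_test)) ** 2))
rmse_dino = np.sqrt(np.mean((predict(coef_dino, x_test) - target(x_test)) ** 2))
print(f"values only: {rmse_plain:.3f}, values + derivatives: {rmse_dino:.3f}")
```

With the same three sample locations, the derivative-informed fit has twice as many constraints and tracks the target far more closely. This mirrors, in miniature, why derivative information can reduce the amount of training data a surrogate needs.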

During his talk, Chen showed how DINOs make better predictions with reliable derivatives. These have the potential to help solve data assimilation problems in weather and flood prediction. Other applications include allocating sensors for early tsunami warnings and designing new self-assembly materials.

All these models contain elements of uncertainty where data is unknown, noisy, or changes over time. Not only are DINOs a powerful tool for quantifying uncertainty, but they also require little training data to become functional.

“Recent advances in AI tools have become critical in enhancing societal resilience and quality, particularly through their scientific uses in environmental, climatic, material, and energy domains,” Chen said.

“These tools are instrumental in driving innovation and efficiency in these and many other vital sectors.”

One challenge in studying complex systems is that they require many simulations to generate enough data to learn from and make better predictions. But with limited data on hand, running enough simulations to produce new data is costly.

At the workshop, Pestourie presented his physics-enhanced deep surrogates (PEDS) as a solution to this optimization problem.

PEDS employs scientific AI to make efficient use of available data while demanding fewer computational resources. In tests, PEDS proved up to three times more accurate than models using neural networks alone, while needing at least 100 times less training data.

PEDS yielded these results in tests on diffusion, reaction-diffusion, and electromagnetic scattering models. PEDS performed well in these experiments geared toward physics-based applications because it combines a physics simulator with a neural network generator.
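
The structure described above, a neural generator feeding a cheap physics simulator, can be sketched as a forward pass. This is an illustrative sketch under stated assumptions rather than Pestourie's implementation. The design parameters `p`, the sine-mode conductivity field, the untrained one-layer `generator`, and the mixing weight `w` are all hypothetical. The generated coarse input is blended with a downsampled version of the high-fidelity input, and the result is passed to a low-fidelity 1D diffusion solver.

```python
import numpy as np

N_FINE, N_COARSE, N_PARAMS = 64, 8, 4
rng = np.random.default_rng(0)

def coarse_diffusion_solve(k, f=1.0, u_left=0.0, u_right=0.0):
    """Cheap low-fidelity solver: -(k u')' = f on [0, 1] with Dirichlet ends,
    finite differences with one conductivity value per coarse cell."""
    n = len(k)
    h = 1.0 / n
    m = n - 1                                   # number of interior nodes
    A = np.zeros((m, m))
    b = np.full(m, f * h * h)
    for i in range(m):
        A[i, i] = k[i] + k[i + 1]               # cells left and right of node i + 1
        if i > 0:
            A[i, i - 1] = -k[i]
        if i < m - 1:
            A[i, i + 1] = -k[i + 1]
    b[0] += k[0] * u_left                       # move boundary values to the RHS
    b[-1] += k[-1] * u_right
    return np.concatenate([[u_left], np.linalg.solve(A, b), [u_right]])

def fine_conductivity(p):
    # Hypothetical high-fidelity input: conductivity built from sine modes.
    x = (np.arange(N_FINE) + 0.5) / N_FINE
    modes = np.sin(np.pi * np.outer(x, np.arange(1, N_PARAMS + 1)))
    return 1.0 + 0.2 * modes @ p                # stays positive for |p_j| <= 1

def downsample(k_fine):
    return k_fine.reshape(N_COARSE, -1).mean(axis=1)

# Hypothetical generator: one untrained linear layer with a softplus output.
W = rng.normal(scale=0.1, size=(N_COARSE, N_PARAMS))

def generator(p):
    return np.log1p(np.exp(W @ p))              # softplus keeps conductivity > 0

def peds_forward(p, w=0.5):
    """Blend generated and downsampled coarse inputs, then run the cheap solver."""
    k_coarse = w * generator(p) + (1.0 - w) * downsample(fine_conductivity(p))
    return coarse_diffusion_solve(k_coarse)

p = rng.uniform(-1.0, 1.0, size=N_PARAMS)
u = peds_forward(p)
print(u)
```

In a real surrogate, the generator weights would be trained so that `peds_forward` matches high-fidelity simulations. That requires differentiating through the solver, typically with an automatic-differentiation framework, and is omitted here. Keeping a physics solver in the loop is what lets the network do less of the work.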

“Scientific AI makes it possible to systematically leverage models and data simultaneously,” Pestourie said. “The more adoption of scientific AI there will be by domain scientists, the more knowledge will be created for society.”

Studying and developing AI applications at these scales requires the most powerful computers available. The workshop invited speakers from national laboratories who showcased supercomputing capabilities available at their facilities. These included Oak Ridge National Laboratory, Sandia National Laboratories, and Pacific Northwest National Laboratory.

The workshop hosted Georgia Tech faculty who represented the Colleges of Computing, Design, Engineering, and Sciences. Among these were workshop co-organizers Yan Wang and Ebeneser Fanijo. Wang is a professor in the George W. Woodruff School of Mechanical Engineering, and Fanijo is an assistant professor in the School of Building Construction.

The workshop welcomed academics outside of Georgia Tech to share research occurring at their institutions. These speakers hailed from Emory University, Clemson University, and the University of California, Berkeley.

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Jan. 29, 2024

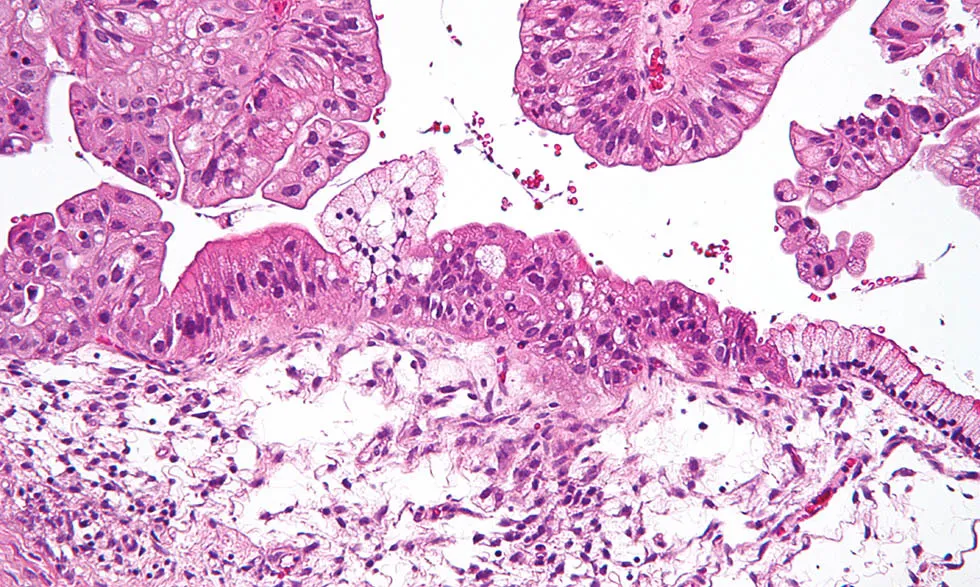

For over three decades, a highly accurate early diagnostic test for ovarian cancer has eluded physicians. Now, scientists in the Georgia Tech Integrated Cancer Research Center (ICRC) have combined machine learning with information on blood metabolites to develop a new test able to detect ovarian cancer with 93 percent accuracy among samples from the team’s study group.

John McDonald, professor emeritus in the School of Biological Sciences, founding director of the ICRC, and the study’s corresponding author, explains that the new test detects ovarian cancer more accurately than existing tests for women clinically classified as normal, with a particular improvement in detecting early-stage ovarian disease in that cohort.

The team’s results and methodologies are detailed in a new paper, “A Personalized Probabilistic Approach to Ovarian Cancer Diagnostics,” published in the March 2024 online issue of the medical journal Gynecologic Oncology. Based on their computer models, the researchers have developed what they believe will be a more clinically useful approach to ovarian cancer diagnosis — whereby a patient’s individual metabolic profile can be used to assign a more accurate probability of the presence or absence of the disease.

“This personalized, probabilistic approach to cancer diagnostics is more clinically informative and accurate than traditional binary (yes/no) tests,” McDonald says. “It represents a promising new direction in the early detection of ovarian cancer, and perhaps other cancers as well.”

The study co-authors also include Dongjo Ban, a Bioinformatics Ph.D. student in McDonald’s lab; Research Scientists Stephen N. Housley, Lilya V. Matyunina, and L. DeEtte (Walker) McDonald; Regents’ Professor Jeffrey Skolnick, who also serves as Mary and Maisie Gibson Chair in the School of Biological Sciences and Georgia Research Alliance Eminent Scholar in Computational Systems Biology; and two collaborating physicians: University of North Carolina Professor Victoria L. Bae-Jump and Ovarian Cancer Institute of Atlanta Founder and Chief Executive Officer Benedict B. Benigno. Members of the research team are forming a startup to transfer and commercialize the technology, and plan to seek requisite trials and FDA approval for the test.

Silent killer

Ovarian cancer is often referred to as the silent killer because the disease is typically asymptomatic when it first arises — and is usually not detected until later stages of development, when it is difficult to treat.

McDonald explains that the average five-year survival rate for late-stage ovarian cancer patients, even after treatment, is around 31 percent, but that if ovarian cancer is detected and treated early, the average five-year survival rate is more than 90 percent.

“Clearly, there is a tremendous need for an accurate early diagnostic test for this insidious disease,” McDonald says.

Although an early detection test for ovarian cancer has been vigorously pursued for more than three decades, an accurate one has proven elusive. Because cancer begins on the molecular level, McDonald explains, multiple possible pathways can lead to even the same cancer type.

“Because of this high-level molecular heterogeneity among patients, the identification of a single universal diagnostic biomarker of ovarian cancer has not been possible,” McDonald says. “For this reason, we opted to use a branch of artificial intelligence — machine learning — to develop an alternative probabilistic approach to the challenge of ovarian cancer diagnostics.”

Metabolic profiles

Georgia Tech co-author Dongjo Ban, whose thesis research contributed to the study, explains that “because end-point changes on the metabolic level are known to be reflective of underlying changes operating collectively on multiple molecular levels, we chose metabolic profiles as the backbone of our analysis.”

“The set of human metabolites is a collective measure of the health of cells,” adds coauthor Jeffrey Skolnick, “and by not arbitrarily choosing any subset in advance, one lets the artificial intelligence figure out which are the key players for a given individual.”

Mass spectrometry can identify the presence of metabolites in the blood by detecting their mass and charge signatures. However, Ban says, determining the precise chemical makeup of a metabolite requires much more extensive characterization.

Ban explains that because fewer than seven percent of the metabolites circulating in human blood have so far been chemically characterized, it is currently impossible to accurately pinpoint the specific molecular processes contributing to an individual's metabolic profile.

However, the research team recognized that, even without knowing the precise chemical make-up of each individual metabolite, the mere presence of different metabolites in the blood of different individuals, as detected by mass spectrometry, can be incorporated as features in the building of accurate machine learning-based predictive models (similar to the use of individual facial features in the building of facial pattern recognition algorithms).
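
The idea of using detected-or-not metabolite signals as model features can be sketched with a plain logistic regression that outputs a probability instead of a yes/no answer. Everything here is an assumption made for illustration. The data are synthetic: random 0/1 detection flags with a made-up link to the label. Logistic regression trained by gradient descent is a stand-in, since the article does not say which classifiers the team used.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic, made-up data: rows are blood samples, columns are mass-spec features
# (1 = metabolite signal detected, 0 = not detected). Label 1 = cancer, 0 = no cancer.
n_samples, n_features = 200, 30
X = rng.integers(0, 2, size=(n_samples, n_features)).astype(float)
true_w = np.zeros(n_features)
true_w[:5] = [2.0, -1.5, 1.0, 1.5, -2.0]        # only a few features matter (hypothetical)
logits = X @ true_w - 0.5
y = (rng.random(n_samples) < 1.0 / (1.0 + np.exp(-logits))).astype(float)

def predict_proba(w, b, X):
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))

def train_logistic(X, y, lr=0.1, epochs=2000, l2=1e-3):
    """Batch gradient descent on L2-regularized logistic loss."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        p = predict_proba(w, b, X)
        w -= lr * (X.T @ (p - y) / len(y) + l2 * w)
        b -= lr * np.mean(p - y)
    return w, b

def log_loss(p, y, eps=1e-12):
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))

w, b = train_logistic(X, y)
probs = predict_proba(w, b, X)                   # per-sample probability of cancer
accuracy = np.mean((probs >= 0.5) == (y == 1))
print(f"training log-loss {log_loss(probs, y):.3f}, accuracy {accuracy:.2f}")
```

As with facial features in recognition systems, the model never needs to know what each feature is chemically. It only needs to learn how much each detection flag shifts the probability.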

“Thousands of metabolites are known to be circulating in the human bloodstream, and they can be readily and accurately detected by mass spectrometry and combined with machine learning to establish an accurate ovarian cancer diagnostic,” Ban says.

A new probabilistic approach

The researchers developed their integrative approach by combining metabolomic profiles and machine learning-based classifiers to establish a diagnostic test with 93 percent accuracy when tested on 564 women from Georgia, North Carolina, Philadelphia, and Western Canada. Of the study participants, 431 were active ovarian cancer patients, while the remaining 133 did not have ovarian cancer.

Further studies have been initiated to determine whether the test can detect very early-stage disease in women displaying no clinical symptoms, McDonald says.

McDonald anticipates a clinical future where a person with a metabolic profile that falls within a score range that makes cancer highly unlikely would only require yearly monitoring. But someone with a metabolic score that lies in a range where a majority (say, 90%) have previously been diagnosed with ovarian cancer would likely be monitored more frequently — or perhaps immediately referred for advanced screening.
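
The score-range idea can be sketched as an empirical lookup. Reference patients are grouped by score range, and the fraction previously diagnosed in a patient's range becomes that patient's probability. The bin edges, the tiny reference cohort, and the 5 percent "yearly monitoring" cutoff are all hypothetical. Only the 90 percent figure echoes McDonald's example, and the functions `bin_rates` and `recommend` are illustrative names.

```python
import numpy as np

def bin_rates(ref_scores, ref_labels, edges):
    """Fraction of previously diagnosed patients (label 1) in each score range."""
    idx = np.digitize(ref_scores, edges[1:-1])      # bin index 0 .. len(edges) - 2
    rates = np.full(len(edges) - 1, np.nan)
    for k in range(len(edges) - 1):
        mask = idx == k
        if mask.any():
            rates[k] = ref_labels[mask].mean()
    return rates

def recommend(score, edges, rates, low=0.05, high=0.90):
    """Map a new patient's score to (probability, hypothetical follow-up)."""
    r = rates[int(np.digitize(score, edges[1:-1]))]
    if np.isnan(r):
        return r, "insufficient reference data"
    if r >= high:
        return r, "refer for advanced screening"
    if r <= low:
        return r, "yearly monitoring"
    return r, "more frequent monitoring"

# Toy, made-up reference cohort (scores in [0, 1], 1 = diagnosed with cancer)
edges = np.array([0.0, 0.2, 0.4, 0.6, 0.8, 1.0])
ref_scores = np.array([0.05, 0.1, 0.15, 0.3, 0.35, 0.5, 0.55, 0.7, 0.85, 0.9, 0.95])
ref_labels = np.array([0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 1])

rates = bin_rates(ref_scores, ref_labels, edges)
for s in (0.1, 0.45, 0.9):
    print(s, recommend(s, edges, rates))
```

With a large reference cohort, these per-range rates become the "personalized probability" the article describes, and clinicians could set the action thresholds.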

Citation: https://doi.org/10.1016/j.ygyno.2023.12.030

Funding

This research was funded by the Ovarian Cancer Institute (Atlanta), the Laura Crandall Brown Foundation, the Deborah Nash Endowment Fund, Northside Hospital (Atlanta), and the Mark Light Integrated Cancer Research Student Fellowship.

Disclosure

Study co-authors John McDonald, Stephen N. Housley, Jeffrey Skolnick, and Benedict B. Benigno are the co-founders of MyOncoDx, Inc., formed to support further research, technology transfer, and commercialization for the team’s new clinical tool for the diagnosis of ovarian cancer.

News Contact

Writer: Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209

Editor: Jess Hunt-Ralston

Jan. 16, 2024

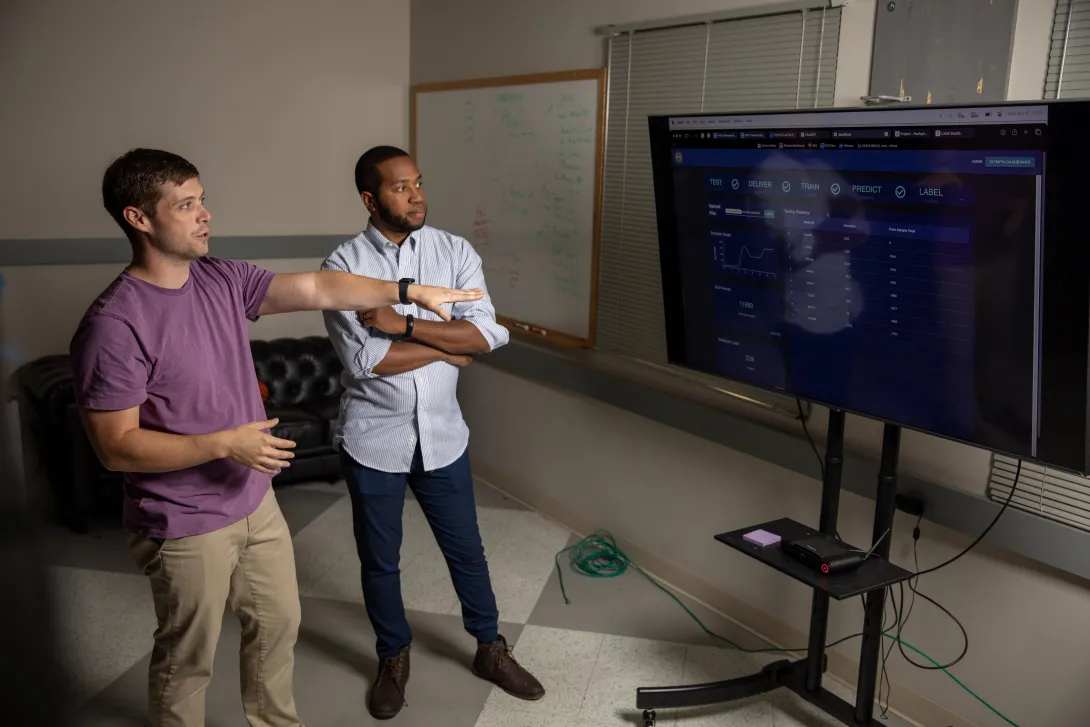

Machine learning (ML) has transformed the digital landscape with its unprecedented ability to automate complex tasks and improve decision-making processes. However, many organizations, including the U.S. Department of Defense (DoD), still rely on time-consuming methods for developing and testing machine learning models, which can create strategic vulnerabilities in today’s fast-changing environment.

The Georgia Tech Research Institute (GTRI) is addressing this challenge by developing a Machine Learning Operations (MLOps) platform that standardizes the development and testing of artificial intelligence (AI) and ML models to enhance the speed and efficiency with which these models are utilized during real-time decision-making situations.

“It’s been difficult for organizations to transition these models from a research environment and turn them into fully-functional products that can be used in real-time,” said Austin Ruth, a GTRI research engineer who is leading this project. “Our goal is to bring AI/ML to the tactical edge where it could be used during active threat situations to heighten the survivability of our warfighters.”

Rather than treating ML development in isolation, GTRI’s MLOps platform would bridge the gap between data scientists and field operations so that organizations can oversee the entire lifecycle of ML projects from development to deployment at the tactical edge.

The tactical edge refers to the immediate operational space where decisions are made and actions take place. Bringing AI and ML capabilities closer to the point of action would enhance the speed, efficiency and effectiveness of decision-making processes and contribute to more agile and adaptive responses to threats.

“We want to develop a system where fighter jets or warships don’t have to do any data transfers but could train and label the data right where they are and have the AI/ML models improve in real-time as they’re actively going up against threats,” said Ruth.

For example, a model could monitor a plane’s altitude and speed, immediately spot potential wing drag issues and alert the pilot about it. In an electronic warfare (EW) situation when facing enemy aircraft or missiles, the models could process vast amounts of incoming data to more quickly identify threats and recommend effective countermeasures in real time.

AI/ML models need to be trained and tested to ensure they can adapt effectively to new, unseen data. Without a standardized process, however, training and testing are done in a fragmented manner, which poses several risks: overfitting, where a model performs well on its training data but fails to generalize to unseen data and makes inaccurate predictions or decisions in real-world situations; security vulnerabilities, where bad actors exploit weaknesses in the models; and a general lack of robustness and inefficient use of resources.
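
Overfitting can be shown with a few lines of curve fitting. The setup is a made-up illustration: ten noisy points drawn from a straight line, fitted once with a line and once with a degree-9 polynomial. The flexible model nearly memorizes the training points but does worse on inputs it has not seen.

```python
import numpy as np

rng = np.random.default_rng(0)
x_train = np.linspace(0.0, 1.0, 10)
y_train = 2.0 * x_train + 1.0 + rng.normal(scale=0.2, size=x_train.size)
x_test = np.linspace(0.03, 0.97, 50)       # unseen inputs
y_test = 2.0 * x_test + 1.0                # noise-free truth for evaluation

def mse(coef, x, y):
    return float(np.mean((np.polyval(coef, x) - y) ** 2))

results = {}
for degree in (1, 9):
    coef = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coef, x_train, y_train), mse(coef, x_test, y_test))
    print(f"degree {degree}: train MSE {results[degree][0]:.4f}, "
          f"test MSE {results[degree][1]:.4f}")
```

A held-out test set is what exposes the problem. Judged on training data alone, the degree-9 model looks better.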

“Throughout this project, we noticed that training and testing are often done in a piecemeal fashion and thus aren’t repeatable,” said Jovan Munroe, a GTRI senior research engineer who is also leading this project. “Our MLOps platform makes the training and testing process more consistent and well-defined so that these models are better equipped to identify and address unknown variables in the battle space.”
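
One common way to make training and testing repeatable is to derive every random choice from a seed stored in the run's configuration, and to fingerprint the configuration together with its results so that runs can be compared. The sketch below shows that pattern on a toy regression task. The config keys, the `run_experiment` function, and the synthetic data are hypothetical, and this is not GTRI's platform.

```python
import hashlib
import json
import numpy as np

def run_experiment(config):
    """Hypothetical repeatable train/test run: all randomness flows from config['seed']."""
    rng = np.random.default_rng(config["seed"])
    n = config["n_samples"]
    X = rng.normal(size=(n, 3))
    y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(scale=config["noise"], size=n)

    perm = rng.permutation(n)                           # seeded train/test split
    n_test = int(n * config["test_fraction"])
    test_idx, train_idx = perm[:n_test], perm[n_test:]

    w, *_ = np.linalg.lstsq(X[train_idx], y[train_idx], rcond=None)
    test_mse = float(np.mean((X[test_idx] @ w - y[test_idx]) ** 2))

    record = {"config": config, "test_mse": round(test_mse, 10)}
    fingerprint = hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()
    return record, fingerprint

config = {"seed": 7, "n_samples": 500, "noise": 0.1, "test_fraction": 0.2}
rec_a, fp_a = run_experiment(config)
rec_b, fp_b = run_experiment(config)                   # rerun: same config, same result
rec_c, fp_c = run_experiment({**config, "seed": 8})    # changed config, new fingerprint
print(fp_a == fp_b, fp_a == fp_c)
```

Because the split, the data, and the evaluation are all pinned to the config, two teams running the same config should obtain the same fingerprint on the same software stack. Any difference then points to a change worth investigating.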

This project has been supported by GTRI’s Independent Research and Development (IRAD) Program, winning an IRAD of the Year award in fiscal year 2023. In fiscal year 2024, the project received funding from a U.S. government sponsor.

Writer: Anna Akins

Photos: Sean McNeil

GTRI Communications

Georgia Tech Research Institute

Atlanta, Georgia

The Georgia Tech Research Institute (GTRI) is the nonprofit, applied research division of the Georgia Institute of Technology (Georgia Tech). Founded in 1934 as the Engineering Experiment Station, GTRI has grown to more than 2,900 employees, supporting eight laboratories in over 20 locations around the country and performing more than $940 million of problem-solving research annually for government and industry. GTRI's renowned researchers combine science, engineering, economics, policy, and technical expertise to solve complex problems for the U.S. federal government, state, and industry.

News Contact

(Interim) Director of Communications

Michelle Gowdy

Michelle.Gowdy@gtri.gatech.edu

404-407-8060

Jan. 04, 2024

While increasing numbers of people are seeking mental health care, mental health providers are facing critical shortages. Now, an interdisciplinary team of investigators at Georgia Tech, Emory University, and Penn State aims to develop an interactive AI system that can provide key insights and feedback to help these professionals improve and deliver higher-quality care, while satisfying the increasing demand for highly trained, effective mental health professionals.

A new $2,000,000 grant from the National Science Foundation (NSF) will support the research.

The research builds on previous collaboration between Rosa Arriaga, an associate professor in the College of Computing, and Andrew Sherrill, an assistant professor in the Department of Psychiatry and Behavioral Sciences at Emory University, who worked together on a computational system for PTSD therapy.

Arriaga and Christopher Wiese, an assistant professor in the School of Psychology, will lead the Georgia Tech team; Saeed Abdullah, an assistant professor in the College of Information Sciences and Technology, will lead the Penn State team; and Sherrill will serve as overall project lead and Emory team lead.

The grant, for “Understanding the Ethics, Development, Design, and Integration of Interactive Artificial Intelligence Teammates in Future Mental Health Work,” will allocate $801,660 of support to the Georgia Tech team, supporting four years of research.

“The initial three years of our project are dedicated to understanding and defining what functionalities and characteristics make an AI system a 'teammate' rather than just a tool,” Wiese says. “This involves extensive research and interaction with mental health professionals to identify their specific needs and challenges. We aim to understand the nuances of their work, their decision-making processes, and the areas where AI can provide meaningful support. In the final year, we plan to implement a trial run of this AI teammate philosophy with mental health professionals.”

While the project focuses on mental health workers, the impacts of the project range far beyond. “AI is going to fundamentally change the nature of work and workers,” Arriaga says. “And, as such, there’s a significant need for research to develop best practices for integrating worker, work, and future technology.”

The team underscores that sectors like business, education, and customer service could easily apply this research. The ethics protocol the team will develop will also provide a critical framework for best practices. The team also hopes that their findings could inform policymakers and stakeholders making key decisions regarding AI.

“The knowledge and strategies we develop have the potential to revolutionize how AI is integrated into the broader workforce,” Wiese adds. “We are not just exploring the intersection of human and synthetic intelligence in the mental health profession; we are laying the groundwork for a future where AI and humans collaborate effectively across all areas of work.”

Collaborative project

The project aims to develop an AI coworker called TEAMMAIT (short for “the Trustworthy, Explainable, and Adaptive Monitoring Machine for AI Team”). Rather than functioning as a tool, as many AI systems currently do, TEAMMAIT will act more as a human teammate would, providing constructive feedback and helping mental healthcare workers develop and learn new skills.

“Unlike conventional AI tools that function as mere utilities, an AI teammate is designed to work collaboratively with humans, adapting to their needs and augmenting their capabilities,” Wiese explains. “Our approach is distinctively human-centric, prioritizing the needs and perspectives of mental health professionals… it’s important to recognize that this is a complex domain and interdisciplinary collaboration is necessary to create the most optimal outcomes when it comes to integrating AI into our lives.”

With both technical and human health aspects to the research, the project will leverage an interdisciplinary team of experts spanning clinical psychology, industrial-organizational psychology, human-computer interaction, and information science.

“We need to work closely together to make sure that the system, TEAMMAIT, is useful and usable,” adds Arriaga. “Chris (Wiese) and I are looking at two types of challenges: those associated with the organization, as Chris is an industrial organizational psychology expert — and those associated with the interface, as I am a computer scientist that specializes in human computer interaction.”

Long-term timeline

The project’s long-term timeline reflects the unique challenges that it faces.

“A key challenge is in the development and design of the AI tools themselves,” Wiese says. “They need to be user-friendly, adaptable, and efficient, enhancing the capabilities of mental health workers without adding undue complexity or stress. This involves continuous iteration and feedback from end-users to refine the AI tools, ensuring they meet the real-world needs of mental health professionals.”

The team plans to deploy TEAMMAIT in diverse settings in the fourth year of development, and incorporate data from these early users to create development guidelines for Worker-AI teammates in mental health work, and to create ethical guidelines for developing and using this type of system.

“This will be a crucial phase where we test the efficacy and integration of the AI in real-world scenarios,” Wiese says. “We will assess not just the functional aspects of the AI, such as how well it performs specific tasks, but also how it impacts the work environment, the well-being of the mental health workers, and ultimately, the quality of care provided to patients.”

Assessing the psychological impacts on workers, including how TEAMMAIT affects their day-to-day work, will be crucial to ensuring TEAMMAIT has a positive impact on healthcare workers’ skills and well-being.

“We’re interested in understanding how mental health clinicians interact with TEAMMAIT and the subsequent impact on their work,” Wiese adds. “How long does it take for clinicians to become comfortable and proficient with TEAMMAIT? How does their engagement with TEAMMAIT change over the year? Do they feel like they are more effective when using TEAMMAIT? We’re really excited to begin answering these questions.”

News Contact

Written by Selena Langner

Contact: Jess Hunt-Ralston

Dec. 20, 2023

A new machine learning method could help engineers detect leaks in underground reservoirs earlier, mitigating risks associated with geological carbon storage (GCS). Further study could advance machine learning capabilities while improving safety and efficiency of GCS.

The feasibility study by Georgia Tech researchers explores using conditional normalizing flows (CNFs) to convert seismic data points into usable information and observable images. This capability could make monitoring underground storage sites more practical and studying the behavior of carbon dioxide plumes easier.

The 2023 Conference on Neural Information Processing Systems (NeurIPS 2023) accepted the group’s paper for presentation. They presented their study on Dec. 16 at the conference’s workshop on Tackling Climate Change with Machine Learning.

“One area where our group excels is that we care about realism in our simulations,” said Professor Felix Herrmann. “We worked on a real-sized setting with the complexities one would experience when working in real-life scenarios to understand the dynamics of carbon dioxide plumes.”

CNFs are generative models that use data to produce images. They can also fill in the blanks by making predictions to complete an image despite missing or noisy data. This functionality is ideal for this application because data streaming from GCS reservoirs are often noisy, meaning incomplete, outdated, or unstructured.
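
The core mechanics of a conditional normalizing flow can be shown with a single conditional affine layer. An invertible map turns standard-normal noise `z` into a sample `x`, and the map's parameters depend on the observation `y`. The exact density follows from the change-of-variables formula. This is a minimal sketch under assumptions: the conditioner is a hypothetical random linear map, and the dimensions are tiny. The CNFs in the study stack many learned, far more expressive layers conditioned on seismic data.

```python
import numpy as np

D, C = 4, 3                      # dims of x (e.g. image pixels) and y (observed data)
rng = np.random.default_rng(1)
# Hypothetical conditioner weights; in a real CNF these are learned networks.
W_mu = rng.normal(size=(D, C))
W_s = 0.1 * rng.normal(size=(D, C))

def conditioner(y):
    """Shift mu(y) and log-scale s(y) of the affine transform."""
    return W_mu @ y, W_s @ y

def forward(z, y):
    """Push base noise z to a sample x, given the observation y."""
    mu, s = conditioner(y)
    return mu + np.exp(s) * z

def inverse(x, y):
    mu, s = conditioner(y)
    return (x - mu) * np.exp(-s)

def log_prob(x, y):
    """log p(x | y) = log N(z; 0, I) + log |dz/dx| = log N(z; 0, I) - sum(s(y))."""
    z = inverse(x, y)
    _, s = conditioner(y)
    log_base = -0.5 * np.sum(z ** 2) - 0.5 * D * np.log(2.0 * np.pi)
    return log_base - np.sum(s)

def sample_posterior(y, n):
    """Draw n samples of x given y; their spread expresses uncertainty."""
    return forward(rng.normal(size=(n, D)), y)

y_obs = np.array([0.5, -1.0, 2.0])
samples = sample_posterior(y_obs, 1000)
print(samples.mean(axis=0), samples.std(axis=0))
```

Drawing many samples for the same observation and looking at their spread is how a flow of this kind expresses uncertainty about what lies underground.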

In 36 test samples, the group found that CNFs could infer scenarios with and without leakage using seismic data. In simulations with leakage, the models generated images that were 96% similar to ground truths. In cases with no leakage, CNFs produced images 97% similar to ground truths.

This CNF-based method also improves on current techniques, which struggle to provide accurate information on the spatial extent of leakage. Conditioning CNFs on samples that change over time allows them to describe and predict the behavior of carbon dioxide plumes.

This study is part of the group’s broader effort to produce digital twins for seismic monitoring of underground storage. A digital twin is a virtual model of a physical object. Digital twins are commonplace in manufacturing, healthcare, environmental monitoring, and other industries.

“There are very few digital twins in earth sciences, especially based on machine learning,” Herrmann explained. “This paper is just a prelude to building an uncertainty aware digital twin for geological carbon storage.”

Herrmann holds joint appointments in the Schools of Earth and Atmospheric Sciences (EAS), Electrical and Computer Engineering, and Computational Science and Engineering (CSE).

School of EAS Ph.D. student Abhinov Prakash Gahlot is the paper’s first author. Ting-Ying (Rosen) Yu (B.S. ECE 2023) started the research as an undergraduate group member. School of CSE Ph.D. students Huseyin Tuna Erdinc, Rafael Orozco, and Ziyi (Francis) Yin co-authored with Gahlot and Herrmann.

NeurIPS 2023 took place Dec. 10-16 in New Orleans. Occurring annually, it is one of the largest conferences in the world dedicated to machine learning.

Over 130 Georgia Tech researchers presented more than 60 papers and posters at NeurIPS 2023. One-third of CSE’s faculty represented the School at the conference. Along with Herrmann, these faculty included Ümit Çatalyürek, Polo Chau, Bo Dai, Srijan Kumar, Yunan Luo, Anqi Wu, and Chao Zhang.

“In the field of geophysics, inverse problems and statistical solutions of these problems are known, but no one has been able to characterize these statistics in a realistic way,” Herrmann said.

“That’s where these machine learning techniques come into play, and we can do things now that you could never do before.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Nov. 29, 2023

The National Institutes of Health (NIH) has awarded Yunan Luo a grant of more than $1.8 million to use artificial intelligence (AI) to advance protein research.

New AI models produced through the grant will lead to new methods for the design and discovery of functional proteins. This could yield novel drugs and vaccines, personalized treatments against diseases, and other advances in biomedicine.

“This project provides a new paradigm to analyze proteins’ sequence-structure-function relationships using machine learning approaches,” said Luo, an assistant professor in Georgia Tech’s School of Computational Science and Engineering (CSE).

“We will develop new, ready-to-use computational models for domain scientists, like biologists and chemists. They can use our machine learning tools to guide scientific discovery in their research.”

Luo’s proposal builds on datasets made possible by AlphaFold and other recent breakthroughs. His AI algorithms would integrate these datasets and craft new models for practical application.

One of Luo’s goals is to develop machine learning methods that learn statistical representations from the data. These representations would reveal relationships between proteins’ sequence, structure, and function, allowing scientists to characterize how sequence and structure determine the function of a protein.
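
One of the simplest statistical representations of a protein family is a per-position amino-acid frequency profile computed from aligned sequences. The learned representations in this project would go far beyond this, for example deep models that also use structure. The sketch below only illustrates what "a statistical representation of sequence data" can mean. The four aligned sequences are made up.

```python
import numpy as np

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"            # the 20 standard residues
AA_INDEX = {aa: i for i, aa in enumerate(AMINO_ACIDS)}

def frequency_profile(aligned_seqs, pseudocount=0.0):
    """Position-by-residue frequency matrix from equal-length aligned sequences."""
    length = len(aligned_seqs[0])
    counts = np.full((length, len(AMINO_ACIDS)), pseudocount)
    for seq in aligned_seqs:
        if len(seq) != length:
            raise ValueError("sequences must be aligned to the same length")
        for pos, aa in enumerate(seq):
            counts[pos, AA_INDEX[aa]] += 1
    return counts / counts.sum(axis=1, keepdims=True)

family = ["MKTAY", "MKSAY", "MRTAF", "MKTGY"]   # toy, made-up aligned family
profile = frequency_profile(family)
print(profile[1, AA_INDEX["K"]], profile[1, AA_INDEX["R"]])
```

Highly conserved positions (column 0 here is always M) often hint at functional importance. This is the kind of sequence-function signal that richer learned representations aim to capture.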

Next, Luo wants to make accurate and interpretable predictions about protein functions. His plan is to create biology-informed deep learning frameworks that could predict a protein’s function from knowledge of its sequence and structure. These frameworks could also account for variables such as mutations.
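
At the simplest level, a sequence-based predictor can account for mutations by scoring the mutated sequence and comparing it with the wild type. The sketch below uses one-hot encoding and an additive per-position score. The random `weights` stand in for learned parameters, and the wild-type sequence is made up. Biology-informed deep learning frameworks would be nonlinear and would use structure, so this only shows the bookkeeping.

```python
import numpy as np

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
AA_INDEX = {aa: i for i, aa in enumerate(AMINO_ACIDS)}

def one_hot(seq):
    x = np.zeros((len(seq), len(AMINO_ACIDS)))
    x[np.arange(len(seq)), [AA_INDEX[aa] for aa in seq]] = 1.0
    return x

def score(seq, weights):
    """Additive function score: sum of the weight for each residue at each position."""
    return float(np.sum(one_hot(seq) * weights))

def mutate(seq, pos, new_aa):
    return seq[:pos] + new_aa + seq[pos + 1:]

rng = np.random.default_rng(3)
wild_type = "MKTAYIAKQR"                                         # made-up sequence
weights = rng.normal(size=(len(wild_type), len(AMINO_ACIDS)))   # hypothetical parameters

mutant = mutate(wild_type, 3, "G")              # A4G in 1-based notation
delta = score(mutant, weights) - score(wild_type, weights)
print(mutant, round(delta, 3))
```

In an additive model, a point mutation's predicted effect is just the difference of two weights at that position. Deep models replace this with context-dependent effects, which is where interpretability becomes a challenge.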

In the end, Luo would have the data and tools to assist in the discovery of functional proteins. He will use these to build a computational platform of AI models, algorithms, and frameworks that ‘invent’ proteins. The platform would determine the sequence and structure needed to achieve a designed protein’s desired functions and characteristics.

“My students play a very important part in this research because they are the driving force behind various aspects of this project at the intersection of computational science and protein biology,” Luo said.

“I think this project provides a unique opportunity to train our students in CSE to learn the real-world challenges facing scientific and engineering problems, and how to integrate computational methods to solve those problems.”

The $1.8 million grant is funded through the Maximizing Investigators’ Research Award (MIRA). The National Institute of General Medical Sciences (NIGMS) manages the MIRA program. NIGMS is one of 27 institutes and centers under NIH.

MIRA is oriented toward launching the research endeavors of early-career faculty. The grant provides researchers with more stability and flexibility through five years of funding. This enhances scientific productivity and improves the chances for important breakthroughs.

Luo is the second School of CSE faculty member to receive the MIRA grant. NIH awarded the grant to Xiuwei Zhang in 2021. Zhang is the J.Z. Liang Early-Career Assistant Professor in the School of CSE.

“After NIH, of course, I first thanked my students because they laid the groundwork for what we seek to achieve in our grant proposal,” said Luo.

“I would like to thank my colleague, Xiuwei Zhang, for her mentorship in preparing the proposal. I also thank our school chair, Haesun Park, for her help and support while starting my career.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Nov. 14, 2023

Georgia Tech researchers have created a machine learning (ML) visualization tool that must be seen to be believed.

Ph.D. student Alec Helbling is the creator of ManimML, a tool that renders common ML concepts into animation. This development will enable new ML technologies by allowing designers to see and share their work in action.

Helbling presented ManimML at IEEE VIS, the world’s highest-rated conference for visualization research and second-highest rated for computer graphics. It received so much praise at the conference that it won the venue’s prize for best poster.

“I was quite surprised and honored to have received this award,” said Helbling, who is advised by School of Computational Science and Engineering Associate Professor Polo Chau.

“I didn't start ManimML with the intention of it becoming a research project, but because I felt like a tool for communicating ML architectures through animation needed to exist.”

ManimML uses animation to show ML developers how their algorithms work. Not only does the tool allow designers to watch their projects come to life, but they can also explain existing and new ML techniques to broad audiences, including non-experts.

ManimML is an extension of the Manim Community library, a Python tool for animating mathematical concepts. ManimML connects to the library to offer a new capability that animates ML algorithms and architectures.

Helbling chose familiar platforms like Python and Manim to make the tool accessible to large swaths of users varying in skill and experience. Given today’s widespread interest in ML and its many applications, enthusiasts and experts alike can find practical use in ManimML.

“We know that animation is an effective means of instruction and learning,” Helbling said. “ManimML offers that ability for ML practitioners to easily communicate how their systems work, improving public trust and awareness of machine learning.”

ManimML tackles what has long been an elusive goal: a general approach to visualizing ML algorithms. Current techniques require developers to create custom animations for each specific algorithm, often with specialized software and experience.

ManimML streamlines this by producing animations of common ML architectures coded in Python, like neural networks.

A user only needs to specify a sequence of neural network layers and their respective hyperparameters. ManimML then constructs an animation of the entire network.

“To use ManimML, you simply need to specify an ML architecture in code, using a syntax familiar to most ML professionals,” Helbling said. “Then it will automatically generate an animation that communicates how the system works.”
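To make the workflow described above concrete, here is a toy Python sketch of the idea: a user lists layers with their hyperparameters, and a function turns that list into an ordered animation plan (draw each layer, connect neighbors, then highlight a forward pass). The `Layer` class and `plan_animation` function are hypothetical names invented for illustration; this is not ManimML’s actual API or internals, which are documented in the project’s GitHub repository.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Layer:
    """Hypothetical layer spec: a layer type plus one hyperparameter (its size)."""
    kind: str
    size: int


def plan_animation(layers: List[Layer]) -> List[str]:
    """Turn a layer sequence into an ordered list of animation steps.

    Toy illustration only (not ManimML's real implementation):
    draw every layer, connect each layer to the next, then
    animate a forward pass from input to output.
    """
    if not layers:
        raise ValueError("need at least one layer")
    # Step 1: draw each layer from its spec.
    steps = [f"draw {layer.kind}({layer.size})" for layer in layers]
    # Step 2: connect each layer to its successor.
    for i in range(len(layers) - 1):
        steps.append(f"connect {i}->{i + 1}")
    # Step 3: a forward pass highlights the layers in order.
    steps += [f"highlight {i}" for i in range(len(layers))]
    return steps


# The user only specifies the sequence of layers and their sizes.
net = [Layer("FeedForward", 3), Layer("FeedForward", 5), Layer("FeedForward", 3)]
for step in plan_animation(net):
    print(step)
```

The point of the design is that the user describes *what* the network is, and the tool derives *how* to animate it, so no per-architecture animation code has to be written by hand.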

ManimML was named best poster from a field of 49 presentations. IEEE VIS 2023 took place Oct. 22-27 in Melbourne, Australia, marking the first time IEEE held the conference in the Southern Hemisphere.

ManimML has more than 23,000 downloads, and a demonstration on social media has drawn hundreds of thousands of views.

ManimML is open source and available at: https://github.com/helblazer811/ManimML

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Nov. 09, 2023

Generative AI tools have taken the world by storm. ChatGPT reached 100 million monthly users faster than any internet application in history. The potential benefits of efficiency and productivity gains for knowledge-intensive firms are clear, and companies in industries such as professional services, health care, and finance are investing billions in adopting the technologies.

But the benefits for individual knowledge workers can be less clear. When technology can do many tasks that only humans could do in the past, what does it mean for knowledge workers? Generative AI can and will automate some of the tasks of knowledge workers, but that doesn’t necessarily mean it will replace all of them. Generative AI can also help knowledge workers find more time to do meaningful work, and improve performance and productivity. The difference is in how you use the tools.

In this article, we aim to explain how to do that well: first, by helping employees and managers understand ways that generative AI can support knowledge work, and second, by identifying steps that managers can take to help employees realize the potential benefits.

What Is Knowledge Work?

Knowledge work primarily involves cognitive processing of information to generate value-added outputs. It differs from manual labor in the materials used and the types of conversion processes involved. Knowledge work is typically dependent on advanced training and specialization in specific domains, gained over time through learning and experience. It includes both structured and unstructured tasks. Structured tasks are those with well-defined and well-understood inputs and outputs, as well as prespecified steps for converting inputs to outputs. Examples include payroll processing or scheduling meetings. Unstructured tasks are those where inputs, conversion procedures, or outputs are mostly ill-defined, underspecified, or unknown a priori. Examples include resolving interpersonal conflict, designing a product, or negotiating a salary.

Very few jobs are purely one or the other. Jobs consist of many tasks, some of which are structured and others of which are unstructured. Some tasks are necessary but repetitive. Some are more creative or interesting. Some can be done alone, while others require working with other people. Some are common to everything the worker does, while others happen only for exceptions. As a knowledge worker, your job, then, is to manage this complex set of tasks to achieve your goals.

Computers have traditionally been good at performing structured tasks, but there are many tasks that only humans can do. Generative AI is changing the game, moving the boundaries of what computers can do and shrinking the sphere of tasks that remain as purely human activity. While it can be worrisome to think about generative AI encroaching on knowledge work, we believe that the benefits can far outweigh the costs for most knowledge workers. But realizing the benefits requires taking action now to learn how to leverage generative AI in support of knowledge work.

Continue reading: How Generative AI Will Transform Knowledge Work

Reprinted from the Harvard Business Review, November 7, 2023.

- Maryam Alavi is the Elizabeth D. & Thomas M. Holder Chair & Professor of IT Management, Scheller College of Business, Georgia Institute of Technology.

- George Westerman is a Senior Lecturer at MIT Sloan School of Management and founder of the Global Opportunity Forum in MIT’s Office of Open Learning.

News Contact

Lorrie Burroughs

Nov. 01, 2023

The School of Physics’ new initiative to catalyze research using artificial intelligence (AI) and machine learning (ML) began October 16 with a conference at the Global Learning Center titled Revolutionizing Physics — Exploring Connections Between Physics and Machine Learning.

AI and ML have the spotlight right now in science, and the conference promises to be the first of many, says Feryal Özel, Professor and Chair of the School of Physics.

“We were delighted to host the AI/ML in Physics conference and see the exciting rapid developments in this field,” Özel says. “The conference was a prominent launching point for the new AI/ML initiative we are starting in the School of Physics.”

That initiative includes hiring two tenure-track faculty members, who will benefit from substantial expertise and resources in artificial intelligence and machine learning that already exist in the Colleges of Sciences, Engineering, and Computing.

Conference attendees heard from colleagues about how the technologies were helping with research involving exoplanet searches, plasma physics experiments, and culling through terabytes of data. They also learned that a rough keyword search of titles by Andreas Berlind, director of the National Science Foundation’s Division of Astronomical Sciences, showed that about a fifth of all current NSF grant proposals include components involving artificial intelligence and machine learning.

“That’s a lot,” Berlind told the audience. “It’s doubled in the last four years. It’s rapidly increasing.”

Berlind was one of three program officers from the NSF and NASA invited to the conference to give presentations on the funding landscape for AI/ML research in the physical sciences.

“It’s tool development, the oldest story in human history,” said Germano Iannacchione, director of the NSF’s Division of Materials Research, who added that AI/ML tools “help us navigate very complex spaces — to augment and enhance our reasoning capabilities, and our pattern recognition capabilities.”

That sentiment was echoed by Dimitrios Psaltis, School of Physics professor and a co-organizer of the conference.

“They usually say if you have a hammer, you see everything as a nail,” Psaltis said. “Just because we have a tool doesn't mean we're going to solve all the problems. So we're in the exploratory phase because we don't know yet which problems in physics machine learning will help us solve. Clearly it will help us solve some problems, because it's a brand new tool, and there are other instances when it will make zero contribution. And until we find out what those problems are, we're going to just explore everything.”

That means trying to find out if there is a place for the technologies in classical and modern physics, quantum mechanics, thermodynamics, optics, geophysics, cosmology, particle physics, and astrophysics, to name just a few branches of study.

Sanaz Vahidinia of NASA’s Astronomy and Astrophysics Research Grants told the attendees that her division was an early and enthusiastic adopter of AI and machine learning. She listed examples of the technologies assisting with gamma-ray astronomy and analyzing data from the Hubble and Kepler space telescopes. “AI and deep learning were very good at identifying patterns in Kepler data,” Vahidinia said.

Several presentations by physicists at the conference highlighted the pattern recognition capabilities and other applications of AI and ML:

- Cassandra Hall, assistant professor of Computational Astrophysics at the University of Georgia, illustrated how machine learning helped in the search for hidden, still-forming exoplanets.

- Christopher J. Rozell, Julian T. Hightower Chair and Professor in the School of Electrical and Computer Engineering, spoke of his experiments using “explainable AI” (AI that conveys in human terms how it reaches its decisions) to track depression recovery with deep brain stimulation.

- Paulo Alves, assistant professor of physics at UCLA College of Physical Sciences Space Institute, presented on AI/ML as tools of scientific discovery in plasma physics.

Alves’s presentation inspired another physicist attending the conference, Psaltis said. “One of our local colleagues, who's doing magnetic materials research, said, ‘Hey, I can apply the exact same thing in my field,’ which he had never thought about before. So we not only have cross-fertilization (of ideas) at the conference, but we’re also learning what works and what doesn't.”

More information on funding and grants at the National Science Foundation can be found here. Information on NASA grants is found here.

News Contact

Writer: Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209

Editor: Jess Hunt-Ralston

Oct. 27, 2023

AI solutions have the power to become our silent partners in ways that could drastically improve our daily lives — and are already doing it. Yet, in a world where algorithms can sift through data with a precision no human can match, uneasiness stirs.

Georgia Tech researchers are confronting the paradoxes, pitfalls, and potential of artificial intelligence. Here, some of them shed light on the emerging role of AI in our lives — and answer questions about how humans and machines will coexist in the future.

We asked Georgia Tech AI experts key questions about the technology, its use and misuse, and how it might shape our shared future. Here’s what they had to say.

Click here to read the story.
