May 22, 2024

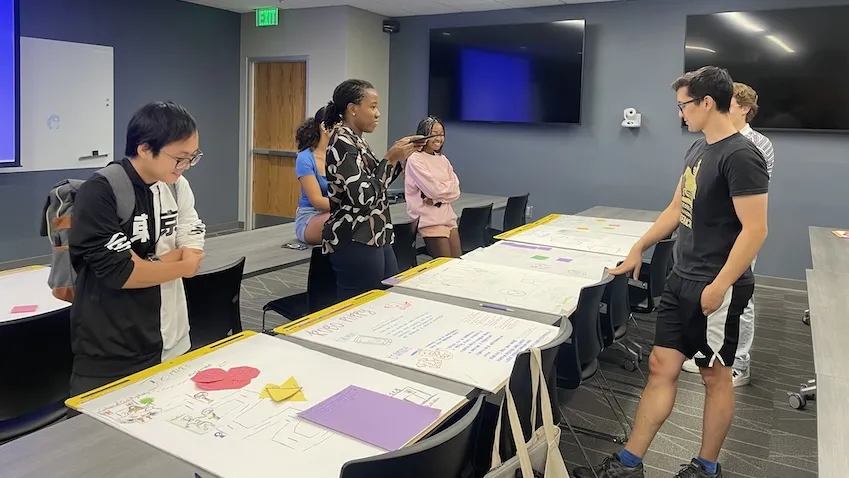

Working on a multi-institutional team of investigators, Georgia Tech researchers have helped the state of Georgia become the national epicenter for developing K-12 AI curricula.

The new curriculum introduced by Artificial Intelligence for Georgia (AI4GA) has taught middle school students to use and understand AI. It’s also equipped middle school teachers to teach the foundations of AI.

AI4GA is a branch of a larger initiative, Artificial Intelligence for K-12 (AI4K12). Funded by the National Science Foundation and led by researchers from Carnegie Mellon University and the University of Florida, AI4K12 is developing national K-12 guidelines for AI education.

Bryan Cox, the Kapor research fellow in Georgia Tech’s Constellation Center for Equity in Computing, led a transformative computer science education initiative while working at the Georgia Department of Education. Though he is no longer with the department, he persuaded the principal investigators of AI4K12 to use Georgia as their testing ground, and he became a lead principal investigator for AI4GA.

“We’re using AI4GA as a springboard to contextualize the need for AI literacy in populations that have the potential to be negatively impacted by AI agents,” Cox said.

Judith Uchidiuno, an assistant professor in Georgia Tech’s School of Interactive Computing, began working on the AI4K12 project as a postdoctoral researcher at Carnegie Mellon under lead PI Dave Touretzky. Joining the faculty at Georgia Tech enabled her to be an in-the-classroom researcher for AI4GA. She started her Play and Learn Lab at Georgia Tech and hired two research assistants devoted to AI4GA.

Uchidiuno said her team, focusing on students from underprivileged backgrounds in urban, suburban, and rural communities, has worked with more than a dozen Atlanta-based schools to develop an AI curriculum. The results have been promising.

“Over the past three years, over 1,500 students have learned AI due to the work we’re doing with teachers,” Uchidiuno said. “We are empowering teachers through AI. They now know they have the expertise to teach this curriculum.”

AI4GA is in its final semester of NSF funding, and the researchers have made their curriculum and teacher training publicly available. The principal investigators from Carnegie Mellon and the University of Florida will use the curriculum as a baseline for AI4K12.

STARTING STUDENTS YOUNG

Though AI is a complex subject, the researchers argue middle schoolers aren’t too young to learn about how it works and the social implications that come with it.

“Kids are interacting with it whether people like it or not,” Uchidiuno said. “Many of them already have smart devices. Some children have parents with smart cars. More and more students are using ChatGPT.

“They don’t have much understanding of the impact or the implications of using AI, especially data and privacy. If we want to prepare students who will one day build these technologies, we need to start them young and at least give them some critical thinking skills.”

Will Gelder, a master’s student in Uchidiuno’s lab, helped analyze data on the benefits of co-designing the curriculum with teachers, drawing on months of work with students to learn how they understand AI. Rebecca Yu, a research scientist in Uchidiuno’s lab, collected data to determine which parts of the curriculum were effective and which were not.

Through the BridgeUP STEM Program at Georgia Tech, Uchidiuno worked with high school students to design video games that demonstrate their knowledge of AI based on the AI4GA curriculum. The students designed the games in 2D and 3D using a range of maker materials, and student developers at the Play and Learn Lab are now building them; the games are at various stages of development.

“The students love creative projects that let them express their creative thoughts,” Gelder said. “Students love the opportunity to break out markers or crayons and design their dream robot and whatever functions they can think of.”

Yu said her research shows that many students demonstrate the ability to understand advanced concepts of AI through these creative projects.

“To teach the concept of algorithms, we have students use crayons to draw different colors to mimic all the possibilities a computer is considering in its decision-making,” Yu said.

“Many other curricula like ours don’t go in-depth about the technical concepts, but AI4GA does. We show that with appropriate levels of scaffolding and instructions, they can learn them even without mathematical or programming backgrounds.”

EMPOWERING TEACHERS

Cox cast a wide net to recruit middle school teachers with diverse student groups. A former student of his answered the call.

Amber Jones, a Georgia Tech alumna, taught at a school whose students were primarily Black and Latinx. Her computer science course covered building websites, using Excel, and basic coding.

Jones said many students didn’t understand the value and applications of what her course was teaching until she transitioned to the AI4GA curriculum.

“AI for Georgia curriculum felt like every other lesson tied right back to the general academics,” Jones said. “I could say, ‘Remember how you said you weren’t going to ever use y equals mx plus b? Well, every time you use Siri, she's running y equals mx plus b.’ I saw them drawing the connections and not only drawing them but looking for them.”

Connecting AI back to their other classes, favorite social media platforms, and digital devices helped students understand the concepts and fostered interest in the curriculum.

Jones’s participation in the program also propelled her career forward. She now works as a consultant teaching AI to middle school students.

“I’m kind of niche in my experiences,” Jones said. “So, when someone says, ‘Hey, we also want to do something with a young population that involves computer science,’ I’m in a small pool of people that can be looked to for guidance.”

AI4GA cultivated a new group of experts in a short time.

“They’ve made their classes their own,” Cox said. “They add their own tweaks. Over the course of the project, the teachers were engaged in cultivating the lessons for their experience and their context based on the identity of their students.”

News Contact

Nathan Deen

Communications Officer

School of Interactive Computing

May 15, 2024

Georgia Tech researchers say non-English speakers shouldn’t rely on chatbots like ChatGPT for healthcare advice.

A team of researchers from the College of Computing at Georgia Tech has developed a framework for assessing how well large language models (LLMs) answer healthcare questions across languages.

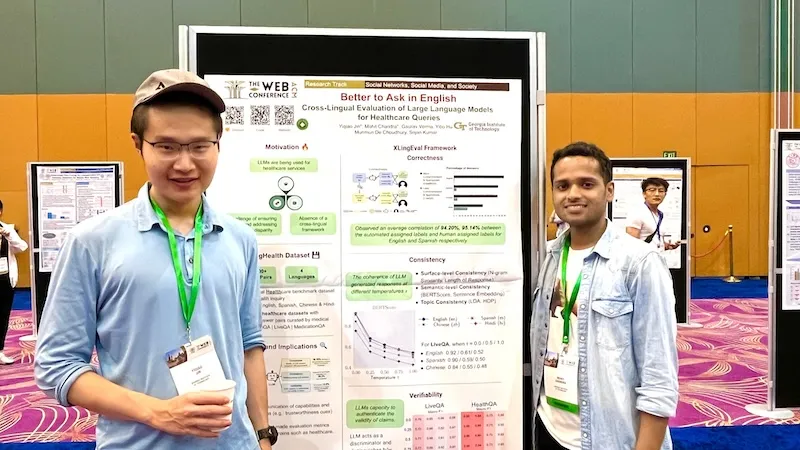

Ph.D. students Mohit Chandra and Yiqiao (Ahren) Jin are the co-lead authors of the paper Better to Ask in English: Cross-Lingual Evaluation of Large Language Models for Healthcare Queries.

Their paper’s findings reveal gaps in LLMs’ ability to answer health-related questions in languages other than English. Chandra and Jin point out the limitations of LLMs for users and developers but also highlight their potential.

Their XLingEval framework cautions non-English speakers against using chatbots as alternatives to doctors for advice. However, models can improve if their data pool is deepened with multilingual source material, such as the team’s proposed XLingHealth benchmark.

“For users, our research supports what ChatGPT’s website already states: chatbots make a lot of mistakes, so we should not rely on them for critical decision-making or for information that requires high accuracy,” Jin said.

“Since we observed this language disparity in their performance, LLM developers should focus on improving accuracy, correctness, consistency, and reliability in other languages,” Jin said.

Using XLingEval, the researchers found chatbots are less accurate in Spanish, Chinese, and Hindi compared to English. By focusing on correctness, consistency, and verifiability, they discovered:

- Correctness decreased by 18% when the same questions were asked in Spanish, Chinese, and Hindi.

- Answers in non-English languages were 29% less consistent than their English counterparts.

- Non-English responses were 13% less verifiable overall.

XLingHealth contains question-answer pairs that chatbots can reference; the group hopes the benchmark will spur improvements in LLMs.

The HealthQA dataset uses specialized healthcare articles from the popular healthcare website Patient. It includes 1,134 health-related question-answer pairs drawn as excerpts from the original articles.

LiveQA is a second dataset containing 246 question-answer pairs constructed from frequently asked question (FAQ) platforms associated with the U.S. National Institutes of Health (NIH).

For drug-related questions, the group built a MedicationQA component. This dataset contains 690 questions extracted from anonymous consumer queries submitted to MedlinePlus. The answers are sourced from medical references, such as MedlinePlus and DailyMed.

In their tests, the researchers posed more than 2,000 medical questions to ChatGPT-3.5 and MedAlpaca. MedAlpaca is a healthcare question-answer chatbot trained on medical literature. Yet more than 67% of its responses to non-English questions were irrelevant or contradictory.

“We see far worse performance in the case of MedAlpaca than ChatGPT,” Chandra said.

“The majority of the data for MedAlpaca is in English, so it struggled to answer queries in non-English languages. GPT also struggled, but it performed much better than MedAlpaca because it had some sort of training data in other languages.”

Ph.D. student Gaurav Verma and postdoctoral researcher Yibo Hu co-authored the paper.

Jin and Verma study under Srijan Kumar, an assistant professor in the School of Computational Science and Engineering, and Hu is a postdoc in Kumar’s lab. Chandra is advised by Munmun De Choudhury, an associate professor in the School of Interactive Computing.

The team will present their paper at The Web Conference, held May 13-17 in Singapore. The annual conference focuses on the future direction of the internet. Given the conference’s location, the group’s presentation is a complementary match.

English and Chinese are the most common languages in Singapore. The group tested Spanish, Chinese, and Hindi because they are the world’s most spoken languages after English. Personal curiosity and background played a part in inspiring the study.

“ChatGPT was very popular when it launched in 2022, especially for us computer science students who are always exploring new technology,” said Jin. “Non-native English speakers, like Mohit and I, noticed early on that chatbots underperformed in our native languages.”

School of Interactive Computing communications officer Nathan Deen and School of Computational Science and Engineering communications officer Bryant Wine contributed to this report.

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Nathan Deen, Communications Officer

ndeen6@cc.gatech.edu

May 10, 2024

Faculty from the George W. Woodruff School of Mechanical Engineering, including Associate Professors Gregory Sawicki and Aaron Young, have been awarded a five-year, $2.6 million Research Project Grant (R01) from the National Institutes of Health (NIH).

“We are grateful to our NIH sponsor for this award to improve treatment of post-stroke individuals using advanced robotic solutions,” said Young, who is also affiliated with Georgia Tech's Neuro Next Initiative.

The R01 will support a project focused on using optimization and artificial intelligence to personalize exoskeleton assistance for individuals with symptoms resulting from stroke. Sawicki and Young will collaborate with researchers from the Emory Rehabilitation Hospital including Associate Professor Trisha Kesar.

“As a stroke researcher, I am eagerly looking forward to making progress on this project, and paving the way for leading-edge technologies and technology-driven treatment strategies that maximize functional independence and quality of life of people with neuro-pathologies," said Kesar.

The intervention for study participants will include a training therapy program that will use biofeedback to increase the efficiency of exosuits for wearers.

Kinsey Herrin, senior research scientist in the Woodruff School and Neuro Next Initiative affiliate, explained the study’s broader benefits, including the potential to improve safety for stroke survivors who are moving outdoors. “One aspect of this project is testing our technologies on stroke survivors as they're walking outside. Being outside is a small thing that many of us take for granted, but a devastating loss for many following a stroke.”

Sawicki, who also holds an associate professorship in the School of Biological Sciences and is core faculty in Georgia Tech's Institute for Robotics and Intelligent Machines, shares that enthusiasm. "This new project is truly a tour de force that leverages a highly talented interdisciplinary team of engineers, clinical scientists, and prosthetics/orthotics experts who all bring key elements needed to build assistive technology that can work in real-world scenarios."

News Contact

Chloe Arrington

Communications Officer II

George W. Woodruff School of Mechanical Engineering

May 6, 2024

Cardiologists and surgeons could soon have a new mobile augmented reality (AR) tool to improve collaboration in surgical planning.

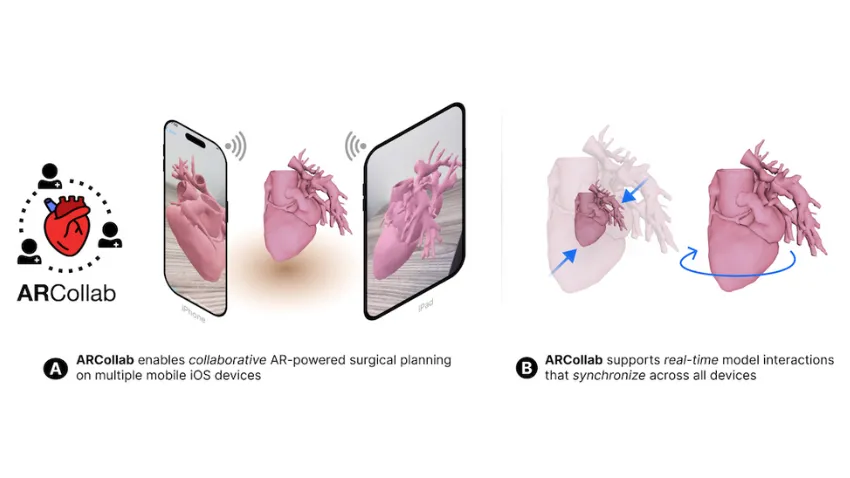

ARCollab is an iOS AR application designed for doctors to interact with patient-specific 3D heart models in a shared environment. It is the first surgical planning tool that uses multi-user mobile AR in iOS.

The application’s collaborative feature overcomes limitations in traditional surgical modeling and planning methods, which could offer patients better, more personalized care from doctors who plan and collaborate with the tool.

Georgia Tech researchers partnered with Children’s Healthcare of Atlanta (CHOA) in ARCollab’s development. Pratham Mehta, a computer science major, led the group’s research.

“We have conducted two trips to CHOA for usability evaluations with cardiologists and surgeons. The overall feedback from ARCollab users has been positive,” Mehta said.

“They all enjoyed experimenting with it and collaborating with other users. They also felt like it had the potential to be useful in surgical planning.”

ARCollab’s collaborative environment is the tool’s most novel feature. It allows surgical teams to study and plan together in a virtual workspace, regardless of location.

ARCollab supports a toolbox of features for doctors to inspect and interact with their patients' AR heart models. With a few finger gestures, users can scale and rotate, “slice” into the model, and modify a slicing plane to view omnidirectional cross-sections of the heart.

Developing ARCollab on iOS has two benefits. First, it streamlines deployment and accessibility by making the app available through the iOS App Store on Apple devices. Second, building ARCollab on Apple’s peer-to-peer networking framework ensures the functionality of the AR components and lessens the learning curve, especially for experienced AR users.

ARCollab addresses the shortcomings of the traditional surgical planning practice of using physical heart models. Compared to digital models, physical models are time-consuming and resource-intensive to produce, and they are irreversible. It is also difficult for surgical teams to plan together since they are limited to studying a single physical model.

Digital and AR modeling is growing as an alternative to physical models. CardiacAR is one such tool the group has already created.

However, existing digital platforms lack the multi-user features surgical teams need to collaborate during planning. ARCollab’s multi-user workspace advances the technology’s potential as a widespread replacement for physical modeling.

“Over the past year and a half, we have been working on incorporating collaboration into our prior work with CardiacAR,” Mehta said.

“This involved completely changing the codebase, rebuilding the entire app and its features from the ground up in a newer AR framework that was better suited for collaboration and future development.”

ARCollab’s interactive and visualization features, along with its novelty, led the Conference on Human Factors in Computing Systems (CHI 2024) to accept it for presentation. The conference takes place May 11-16 in Honolulu.

CHI is considered the most prestigious conference for human-computer interaction and one of the top-ranked conferences in computer science.

M.S. student Harsha Karanth and alumnus Alex Yang (CS 2022, M.S. CS 2023) co-authored the paper with Mehta. They study under Polo Chau, an associate professor in the School of Computational Science and Engineering.

The Georgia Tech group partnered with Timothy Slesnick and Fawwaz Shaw from CHOA on ARCollab’s development.

“Working with the doctors and having them test out versions of our application and give us feedback has been the most important part of the collaboration with CHOA,” Mehta said.

“These medical professionals are experts in their field. We want to make sure to have features that they want and need, and that would make their job easier.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

May 6, 2024

Thanks to a Georgia Tech researcher's new tool, application developers can now see potentially harmful attributes in their prototypes.

Farsight is a tool designed for developers who use large language models (LLMs) to create applications powered by artificial intelligence (AI). Farsight alerts prototypers when they write LLM prompts that could be harmful or misused.

Downstream users can expect to benefit from better quality and safer products made with Farsight’s assistance. The tool’s lasting impact, though, is that it fosters responsible AI awareness by coaching developers on the proper use of LLMs.

Machine Learning Ph.D. candidate Zijie (Jay) Wang is Farsight’s lead architect. He will present the paper at the upcoming Conference on Human Factors in Computing Systems (CHI 2024). Farsight ranked in the top 5% of papers accepted to CHI 2024, earning it an honorable mention for the conference’s best paper award.

“LLMs have empowered millions of people with diverse backgrounds, including writers, doctors, and educators, to build and prototype powerful AI apps through prompting. However, many of these AI prototypers don’t have training in computer science, let alone responsible AI practices,” said Wang.

“With a growing number of AI incidents related to LLMs, it is critical to make developers aware of the potential harms associated with their AI applications.”

Wang pointed to a case in which two lawyers used ChatGPT to write a legal brief. A U.S. judge sanctioned the lawyers because their brief contained six fictitious case citations that the LLM had fabricated.

With Farsight, the group aims to improve developers’ awareness of responsible AI use. It achieves this by highlighting potential use cases, affected stakeholders, and possible harm associated with an application in the early prototyping stage.

A user study involving 42 prototypers showed that developers could better identify potential harms associated with their prompts after using Farsight. The users also found the tool more helpful and usable than existing resources.

Feedback from the study showed Farsight encouraged developers to focus on end-users and think beyond immediate harmful outcomes.

“While resources, like workshops and online videos, exist to help AI prototypers, they are often seen as tedious, and most people lack the incentive and time to use them,” said Wang.

“Our approach was to consolidate and display responsible AI resources in the same space where AI prototypers write prompts. In addition, we leverage AI to highlight relevant real-life incidents and guide users to potential harms based on their prompts.”

Farsight employs an in-situ user interface to show developers the potential negative consequences of their applications during prototyping.

Alert symbols for “neutral,” “caution,” and “warning” notify users when prompts require more attention. When a user clicks the alert symbol, an awareness sidebar expands from one side of the screen.

The sidebar shows an incident panel with actual news headlines from incidents relevant to the harmful prompt. The sidebar also has a use-case panel that helps developers imagine how different groups of people can use their applications in varying contexts.

Another key feature is the harm envisioner. This functionality takes a user’s prompt as input and assists them in envisioning potential harmful outcomes. The prompt branches into an interactive node tree that lists use cases, stakeholders, and harms, like “societal harm,” “allocative harm,” “interpersonal harm,” and more.

The novel design and insightful findings from the user study resulted in Farsight’s acceptance for presentation at CHI 2024.

CHI is considered the most prestigious conference for human-computer interaction and one of the top-ranked conferences in computer science.

CHI is affiliated with the Association for Computing Machinery. The conference takes place May 11-16 in Honolulu.

Wang worked on Farsight in summer 2023 while interning at Google’s People + AI Research (PAIR) group.

Farsight’s co-authors from Google PAIR include Chinmay Kulkarni, Lauren Wilcox, Michael Terry, and Michael Madaio. The group’s ties to Georgia Tech extend beyond Wang.

Terry, the current co-leader of Google PAIR, earned his Ph.D. in human-computer interaction from Georgia Tech in 2005. Madaio graduated from Tech in 2015 with an M.S. in digital media. Wilcox was a full-time faculty member in the School of Interactive Computing from 2013 to 2021 and serves in an adjunct capacity today.

Though not an author, one of Wang’s influences is his advisor, Polo Chau. Chau is an associate professor in the School of Computational Science and Engineering. His group specializes in data science, human-centered AI, and visualization research for social good.

“I think what makes Farsight interesting is its unique in-workflow and human-AI collaborative approach,” said Wang.

“Furthermore, Farsight leverages LLMs to expand prototypers’ creativity and brainstorm a wide range of use cases, stakeholders, and potential harms.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

May 2, 2024

Two children are playing with a set of toys, each playing alone. That kind of play involves a somewhat limited set of interactions between the child and the toy. But what happens when the two children play together using the same toys?

“The actions are similar, but the choices and outcomes are very different because of the dynamic changes they’re making with the other person,” says Brian Magerko, Regents’ Professor in Georgia Tech’s School of Literature, Media, and Communication. “It’s a thing that humans do all the time, and computers don’t do with us at all.”

Welcome to the next frontier of artificial intelligence (AI): not just generating, but collaborating in real time.

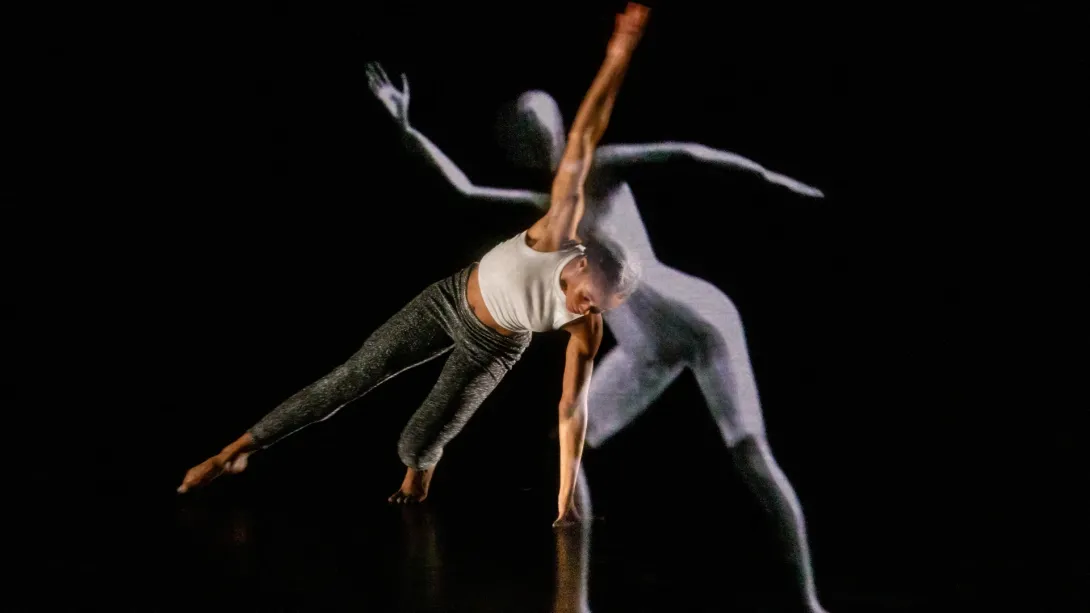

Magerko and his colleagues, Georgia Tech research scientist Milka Trajkova and Kennesaw State University Associate Professor of Dance Andrea Knowlton, are putting a collaborative AI system they’ve developed to the ultimate test: the world’s first collaborative AI dance performance.

Dance Partner

LuminAI is an interactive system that allows participants to engage in collaborative movement improvisation with an AI virtual dance partner projected on a nearby screen or wall. LuminAI analyzes participant movements and improvises responses informed by memories of past interactions with people. In other words, LuminAI learns how to dance by dancing with us.

The National Science Foundation-supported project began about 12 years ago in a lab before becoming an art installation and public demo. LuminAI has since moved into a different phase as a creative collaborator and education tool in a dance studio.

“We’re looking at the role LuminAI can play in dance education. As far as we’re aware, this is the first implemented version of an AI dancer in a dance studio,” says Trajkova, who was a professional ballet dancer before becoming a research scientist on the project.

To prepare LuminAI to collaborate with dancers, the research team started by studying pairs of improvisational dancers.

“We’re trying to understand how non-verbal, collaborative creativity occurs,” Knowlton says. “We start by trying to understand influencing factors that are perceived as contributing to improvisational success between two artists. Through that understanding, we applied those criteria to an AI system so it can have a similar experience with co-creative success.”

“We’re working on a creative arc,” adds Trajkova. “So instead of the AI agent just generating movements in response to the last thing that happened, we’re working to track and understand the dynamics of creative ideas across time as a continuous flow, rather than isolated instances of reaction.”

Students from Knowlton’s improvisational dance class at Kennesaw State spent two months of their spring semester working routinely with the LuminAI dancer and recording their impressions and experiences. One use the team discovered is that LuminAI serves as a third view for dancers, allowing them to try out ideas with the system before trying them with a partner.

The classroom experiment will culminate in a public performance on May 3 at Kennesaw State’s Marietta Dance Theater featuring the students performing with the LuminAI dancer. As far as the research team is aware, the event is the world’s first collaborative AI dance performance.

While not all the dancers embraced having an AI collaborator, some of those who were skeptical at first left the experience more open to the possibility of collaborating with AI, Knowlton says. Regardless of their feelings toward working with AI, Knowlton says she believes the dancers gained valuable skills in working with specialized technology, especially as dance performances evolve to include more interactive media.

Refined Movement

So, what’s next for LuminAI? The project’s findings point to at least two possible paths. The first is continued exploration of how AI systems can be taught to cooperate and collaborate more like humans.

“With the advent of generative AI these past few years, it’s been really clear how great a need there is for this sort of social cognition,” says Magerko. “One of the things we’re going to be getting off the ground is sense-making with large language models. How do you collaborate with an AI system – rather than just making text or images, they’ll be able to make with us.”

The second involves the body movements LuminAI has been cataloging and analyzing over the years. Dance exemplifies highly refined motor skills, often exhibiting a level of detail surpassing that found in various athletic disciplines or physical therapy. While the tools designed to capture these intricate movements—through cameras and AI—are still nascent, the potential for harnessing this granular data is significant, Trajkova says.

That exploration begins on May 30 with a two-day summit at Georgia Tech on how this movement data could transform performance athletics. The summit will bring together interdisciplinary participants in dance, computer vision, biomechanics, psychology, and human-computer interaction from Georgia Tech, Emory, Kennesaw State, Harvard, the Royal Ballet in London, and the Australian Ballet.

“It’s about understanding AI's role in augmenting training, promoting wellness as well as diving deep in decoding the artistry of human movements. How can we extract insights about the quality of athlete’s movements so we can help develop and enhance their own unique nuances?” Trajkova says.

News Contact

Megan McRainey

megan.mcrainey@gatech.edu

May 1, 2024

Georgia Tech’s AI Hub will be directed by Pascal Van Hentenryck, announced Chaouki Abdallah, executive vice president for Research. Van Hentenryck, A. Russell Chandler III Chair and professor in the H. Milton Stewart School of Industrial and Systems Engineering, also directs the NSF Artificial Intelligence Institute for Advances in Optimization (AI4OPT).

Georgia Tech has been actively engaged in artificial intelligence (AI) research and education for decades. Formed in 2023, the AI Hub is a thriving network that brings together more than 1,000 faculty and students who work on fundamental and applied AI-related research across the entire Institute.

“Pascal Van Hentenryck will drive innovation and excellence at the helm of Georgia Tech’s AI Hub,” said Abdallah. “His leadership of one of our three AI institutes has already shown his dedication to fostering impactful partnerships and cultivating a dynamic ecosystem for AI progress at Georgia Tech and beyond.”

The AI Hub aims to advance AI through discovery, interdisciplinary research, responsible deployment, and education to build the next generation of the AI workforce, as well as a sustainable future. Thanks to Tech’s applied, solutions-focused approach, the AI Hub is well-positioned to provide decision makers and stakeholders with access to world-class resources for commercializing and deploying AI.

“A fundamental question people are asking about AI now is, ‘Can we trust it?’” said Van Hentenryck. “As such, the AI Hub’s focus will be on developing trustworthy AI for social impact — in science, engineering, and education.”

U.S. News & World Report has ranked Georgia Tech among the five best universities for artificial intelligence programs. Van Hentenryck intends for the AI Hub to leverage the Institute’s strategic advantage in AI engineering to create powerful collaborations. These could include partnerships with the Georgia Tech Research Institute to maximize societal impact, and with Tech’s 10 interdisciplinary research centers and three NSF-funded AI institutes to augment academic and policy impact.

“The AI Hub will empower all AI-related activities, from foundational research to applied AI projects, joint AI labs, AI incubators, and AI workforce development; it will also help shape AI policies and improve understanding of the social implications of AI technologies,” Van Hentenryck explained. “A key aspect will be to scale many of AI4OPT’s initiatives to Georgia Tech’s AI ecosystem more generally — in particular, its industrial partner and workforce development programs, in order to magnify societal impact and democratize access to AI and the AI workforce.”

Van Hentenryck is also thinking about AI’s technological implications. “AI is a unifying technology — it brings together computing, engineering, and the social sciences. Keeping humans at the center of AI applications and ensuring that AI systems are trustworthy and ethical by design is critical,” he added.

In its first year, the AI Hub will focus on building an agile and nimble organization to accomplish the following goals:

- facilitate, promote, and nurture use-inspired research and innovative industrial partnerships;

- translate AI research into impact through AI engineering and entrepreneurship programs; and

- develop sustainable AI workforce development programs.

Additionally, the AI Hub will support new events, including AI-Tech Fest, a fall kickoff for the center. This event will bring together Georgia Tech faculty, as well as external and potential partners, to discuss recent AI developments and the opportunities and challenges this rapidly proliferating technology presents, and to build a nexus of collaboration and innovation.

News Contact

Shelley Wunder-Smith

Director of Research Communications

Apr. 23, 2024

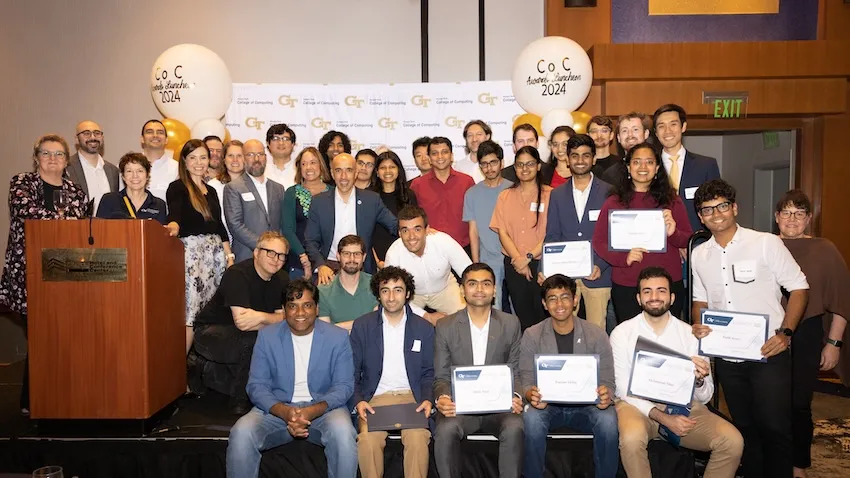

The College of Computing’s countdown to commencement began on April 11 when students, faculty, and staff converged at the 33rd Annual Awards Celebration.

The banquet celebrated the college community for an exemplary academic year and recognized the most distinguished individuals of 2023-2024. For Alex Orso, the reception was a high-water mark in his role as interim dean.

“I always say that the best part about my job is to brag about the achievements and accolades of my colleagues,” said Orso.

“It is my distinct honor and privilege to recognize these award winners and the collective success of the College of Computing.”

Orso’s colleagues from the School of Computational Science and Engineering (CSE) were among the celebration’s honorees. School of CSE students, faculty, and alumni earning awards this year include:

- Grace Driskill, M.S. CSE student - The Donald V. Jackson Fellowship

- Harshvardhan Baldwa, M.S. CSE student - The Marshal D. Williamson Fellowship

- Mansi Phute, M.S. CS student - The Marshal D. Williamson Fellowship

- Assistant Professor Chao Zhang - Outstanding Junior Faculty Research Award

- Nazanin Tabatbaei, teaching assistant in Associate Professor Polo Chau’s CSE 6242 Data & Visual Analytics course - Outstanding Instructional Associate Teaching Award

- Rodrigo Borela (Ph.D. CSE-CEE 2021), School of Computing Instruction Lecturer and CSE program alumnus - William D. "Bill" Leahy Jr. Outstanding Instructor Award

- Pratham Metha, undergraduate student in Chau’s research group - Outstanding Legacy Leadership Award

- Alexander Rodriguez (Ph.D. CS 2023), School of CSE alumnus - Outstanding Doctoral Dissertation Award

At the Institute level, Georgia Tech recognized Driskill, Baldwa, and Phute for their awards on April 10 at the annual Student Honors Celebration.

Driskill’s classroom achievement earned her a spot on the 2024 All-ACC Indoor Track and Field Academic Team. This follows her selection for the 2023 All-ACC Academic Team for cross country.

In summer 2023, Georgia Tech’s Center for Teaching and Learning released the Class of 1934 Honor Roll for spring semester courses. School of CSE awardees included Assistant Professor Srijan Kumar (CSE 6240: Web Search & Text Mining), Lecturer Max Mahdi Roozbahani (CS 4641: Machine Learning), and alumnus Mengmeng Liu (CSE 6242: Data & Visual Analytics).

School of CSE researchers also earned recognition off campus throughout 2023-2024, a testament to the reach and impact of their work.

School of CSE Ph.D. student Gaurav Verma kicked off the year by receiving the J.P. Morgan Chase AI Research Ph.D. Fellowship. Verma was one of only 13 awardees from around the world selected for the 2023 class.

Along with seeing many of his students receive awards this year, Polo Chau earned a 2023 Google Award for Inclusion Research. Later in the year, the Institute promoted Chau to professor, effective in the 2024-2025 academic year.

Schmidt Sciences selected School of CSE Assistant Professor Kai Wang as an AI2050 Early Career Fellow to advance artificial intelligence research for social good. As a member of the fellowship’s second cohort, Wang is the first Georgia Tech faculty member to receive the award.

School of CSE Assistant Professor Yunan Luo received two significant awards to advance his work in computational biology. First, Luo received the Maximizing Investigator’s Research Award (MIRA) from the National Institutes of Health, which provides $1.8 million in funding for five years. Next, he received the 2023 Molecule Make Lab Institute (MMLI) seed grant.

Regents’ Professor Surya Kalidindi, jointly appointed with the George W. Woodruff School of Mechanical Engineering and School of CSE, was named a fellow to the 2023 class of the Department of Defense’s Laboratory-University Collaboration Initiative (LUCI).

2023-2024 was a monumental year for Assistant Professor Elizabeth Qian, jointly appointed with the Daniel Guggenheim School of Aerospace Engineering and the School of CSE.

The Air Force Office of Scientific Research selected Qian for the 2024 class of its Young Investigator Program. Earlier in the year, she received a grant under the Department of Energy’s Energy Earthshots Initiative.

Qian began the year by joining 81 other early-career engineers at the National Academy of Engineering’s Grainger Foundation Frontiers of Engineering 2023 Symposium. She also received the Hans Fischer Fellowship from the Institute for Advanced Study at the Technical University of Munich.

It was a big academic year for Associate Professor Elizabeth Cherry. Cherry was reelected to a three-year term as a council member-at-large of the Society for Industrial and Applied Mathematics (SIAM). Cherry is also co-chair of the SIAM organizing committee for next year’s Conference on Computational Science and Engineering (CSE25).

Cherry continues to serve as the School of CSE’s associate chair for academic affairs. These leadership contributions led to her being named to the 2024 ACC Academic Leaders Network (ACC ALN) Fellows program.

School of CSE Professor and Associate Chair Edmond Chow was co-author of a paper that received the Test of Time Award at Supercomputing 2023 (SC23). Right before SC23, Chow’s Ph.D. student Hua Huang was selected as an honorable mention for the 2023 ACM-IEEE CS George Michael Memorial HPC Fellowship.

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Apr. 19, 2024

When U.S. Rep. Earl L. “Buddy” Carter from Georgia’s 1st District visited Atlanta recently, one of his top priorities was meeting with the experts at Georgia Tech’s 20,000-square-foot Advanced Manufacturing Pilot Facility (AMPF).

Carter was recently named chair of the House Energy and Commerce Committee’s Environment, Manufacturing, and Critical Materials Subcommittee, a group primarily concerned with soil, air, water, and noise contamination, as well as emergency environmental response, whether physical or cybersecurity-related.

Because AMPF’s focus dovetails with subcommittee interests, the facility was a fitting stop for Carter, who was welcomed for an afternoon tour and series of live demonstrations. Programs within Georgia Tech’s Enterprise Innovation Institute — specifically the Georgia Artificial Intelligence in Manufacturing (Georgia AIM) and Georgia Manufacturing Extension Partnership (GaMEP) — were well represented.

“Innovation is extremely important,” Carter said during his April 1 visit. “In order to handle some of our problems, we’ve got to have adaptation, mitigation, and innovation. I’ve always said that the greatest innovators, the greatest scientists in the world, are right here in the United States. I’m so proud of Georgia Tech and what they do for our state and for our nation.”

Carter’s AMPF visit began with an introduction by Thomas Kurfess, Regents' Professor and HUSCO/Ramirez Distinguished Chair in Fluid Power and Motion Control in the George W. Woodruff School of Mechanical Engineering and executive director of the Georgia Tech Manufacturing Institute; Steven Ferguson, principal research scientist and managing director at Georgia AIM; research engineer Kyle Saleeby; and Donna Ennis, the Enterprise Innovation Institute’s director of community engagement and program development, and co-director of Georgia AIM.

Ennis provided an overview of Georgia AIM, while Ferguson spoke on the Manufacturing 4.0 Consortium and Kurfess detailed the AMPF origin story, before introducing four live demonstrations.

The first of these featured Chuck Easley, Professor of the Practice in the Scheller College of Business, who elaborated on supply chain issues. Afterward, Alan Burl of EPICS: Enhanced Preparation for Intelligent Cybermanufacturing Systems and mechanical engineer Melissa Foley led a brief information session on hybrid turbine blade repair.

Finally, GaMEP project manager Michael Barker expounded on GaMEP’s cybersecurity services, and Deryk Stoops of Central Georgia Technical College detailed the Georgia AIM-sponsored AI robotics training program at the Georgia Veterans Education Career Transition Resource (VECTR) Center, which offers training and assistance to those making the transition from military to civilian life.

The topic of artificial intelligence, in all its subtlety and nuance, was of particular interest to Carter.

“AI is the buzz in Washington, D.C.,” he said. “Whether it be healthcare, energy, [or] science, we on the Energy and Commerce Committee look at it from a sense [that there’s] a very delicate balance, and we understand the responsibility. But we want to try to benefit from this as much as we can.”

“I heard something today I haven’t heard before,” Carter continued, “and that is instead of calling it artificial intelligence, we refer to it as ‘augmented intelligence.’ I think that’s a great term, and certainly something I’m going to take back to Washington with me.”

“It was a pleasure to host Rep. Carter for a firsthand look at AMPF,” shared Ennis, “which is uniquely positioned to offer businesses the opportunity to collaborate with Georgia Tech researchers and students and to hear about Georgia AIM.

“At Georgia AIM, we’re committed to making the state a leader in artificial intelligence-assisted manufacturing, and we’re grateful for Congressman Carter’s interest and support of our efforts.”

News Contact

Eve Tolpa

Senior Writer/Editor

Enterprise Innovation Institute (EI2)

Apr. 17, 2024

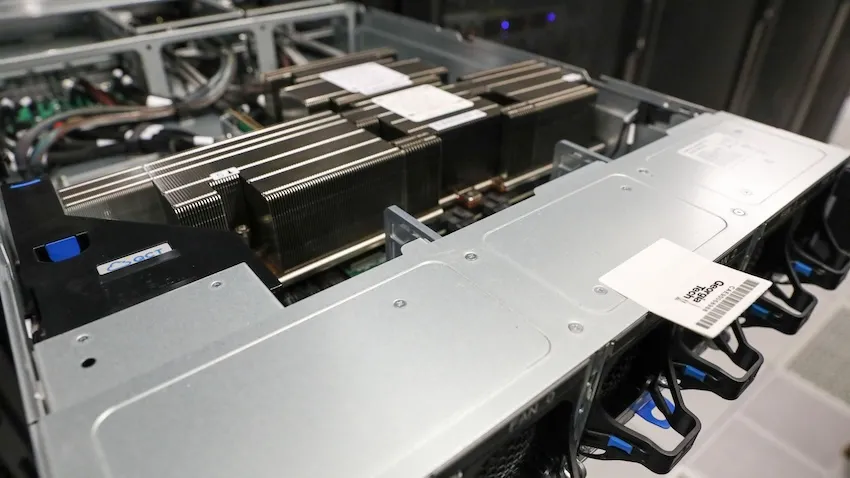

Computing research at Georgia Tech is getting faster thanks to a new state-of-the-art processing chip named after a female computer programming pioneer.

Tech is one of the first research universities in the country to receive the GH200 Grace Hopper Superchip from NVIDIA for testing, study, and research.

Designed for large-scale artificial intelligence (AI) and high-performance computing applications, the GH200 is intended for large language model (LLM) training, recommender systems, graph neural networks, and other tasks.

Alexey Tumanov and Tushar Krishna procured Georgia Tech’s first pair of Grace Hopper chips. Spencer Bryngelson obtained four more GH200s, which will arrive later this month.

“We are excited about this new design that puts everything onto one chip and accessible to both processors,” said Will Powell, a College of Computing research technologist.

“The Superchip’s design increases computation efficiency where data doesn’t have to move as much and all the memory is on the chip.”

A key feature of the new processing chip is that the central processing unit (CPU) and graphics processing unit (GPU) are on the same board.

NVIDIA’s NVLink Chip-2-Chip (C2C) interconnect joins the two units together. C2C delivers up to 900 gigabytes per second of total bandwidth, seven times that of the PCIe Gen5 connections used in newer accelerated systems.

As a result, the two components share memory and process data with more speed and better power efficiency. This feature is one that the Georgia Tech researchers want to explore most.
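The “seven times” comparison can be roughly checked with a little arithmetic. This is a sketch under an assumption the article does not state: that the baseline is a PCIe Gen5 x16 link, which carries about 64 GB/s in each direction, or roughly 128 GB/s in total.

$$
\frac{900\ \text{GB/s}}{2 \times 64\ \text{GB/s}} = \frac{900}{128} \approx 7.0
$$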

Tumanov, an assistant professor in the School of Computer Science, and his Ph.D. student Amey Agrawal are testing machine learning (ML) and LLM workloads on the chip. Their work with the GH200 could lead to more sustainable computing methods that keep up with the exponential growth of LLMs.

The advent of widely used LLMs, like ChatGPT and Gemini, pushes the limits of current GPU-based architectures. The chip’s design is intended to overcome known CPU-GPU bandwidth limitations, and Tumanov’s group will put that design to the test through their studies.

Krishna is an associate professor in the School of Electrical and Computer Engineering and associate director of the Center for Research into Novel Computing Hierarchies (CRNCH).

His research focuses on optimizing data movement in modern computing platforms, including AI/ML accelerator systems. Ph.D. student Hao Kang uses the GH200 to analyze LLMs exceeding 30 billion parameters. This study will enable labs to explore deep learning optimizations with the new chip.

Bryngelson, an assistant professor in the School of Computational Science and Engineering, will use the chip to compute and simulate fluid and solid mechanics phenomena. His lab can use the CPU to reorder memory and perform disk writes while the GPU does parallel work. This capability is expected to significantly reduce the computational burden for some applications.

“Traditional CPU to GPU communication is slower and introduces latency issues because data passes back and forth over a PCIe bus,” Powell said. “Since they can access each other’s memory and share in one hop, the Superchip’s architecture boosts speed and efficiency.”

Grace Hopper is the inspirational namesake for the chip. She pioneered many developments in computer science that formed the foundation of the field today.

Hopper is credited with developing the first compiler, a program that translates computer source code into a target language. She also helped develop early programming languages, including COBOL, which is still used today in data processing.

Hopper joined the U.S. Navy Reserve during World War II and was tasked with programming the Harvard Mark I computer. She retired as a rear admiral in August 1986 after 42 years of military service.

Georgia Tech researchers hope to honor Hopper’s legacy by using the technology that bears her name, along with her spirit of innovation, to make new discoveries.

“NVIDIA and other vendors show no sign of slowing down refinement of this kind of design, so it is important that our students understand how to get the most out of this architecture,” said Powell.

“Just having all these technologies isn’t enough. People must know how to build applications in their coding that actually benefit from these new architectures. That is the skill.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu
