Oct. 18, 2024

The U.S. Department of Energy (DOE) has awarded Georgia Tech researchers a $4.6 million grant to develop improved cybersecurity protection for renewable energy technologies.

Associate Professor Saman Zonouz will lead the project and leverage the latest artificial intelligence (AI) technology to create Phorensics. The new tool will anticipate cyberattacks on critical infrastructure and provide analysts with an accurate reading of what vulnerabilities were exploited.

“This grant enables us to tackle one of the crucial challenges facing national security today: our critical infrastructure resilience and post-incident diagnostics to restore normal operations in a timely manner,” said Zonouz.

“Together with our amazing team, we will focus on cyber-physical data recovery and post-mortem forensics analysis after cybersecurity incidents in emerging renewable energy systems.”

As the integration of renewable energy technology into national power grids increases, so does their vulnerability to cyberattacks. These threats put energy infrastructure at risk and pose a significant danger to public safety and economic stability. The AI behind Phorensics will allow analysts and technicians to scale security efforts to keep up with a growing power grid that is becoming more complex.

This effort is part of the Security of Engineering Systems (SES) initiative at Georgia Tech’s School of Cybersecurity and Privacy (SCP). SES has three pillars: research, education, and testbeds, with multiple ongoing large, sponsored efforts.

“We had a successful hiring season for SES last year and will continue filling several open tenure-track faculty positions this upcoming cycle,” said Zonouz.

“With top-notch cybersecurity and engineering schools at Georgia Tech, we have begun the SES journey with a dedicated passion to pursue building real-world solutions to protect our critical infrastructures, national security, and public safety.”

Zonouz is the director of the Cyber-Physical Systems Security Laboratory (CPSec) and is jointly appointed by Georgia Tech’s School of Cybersecurity and Privacy (SCP) and the School of Electrical and Computer Engineering (ECE).

The three Georgia Tech researchers joining him on this project are Brendan Saltaformaggio, associate professor in SCP and ECE; Taesoo Kim, jointly appointed professor in SCP and the School of Computer Science; and Animesh Chhotaray, research scientist in SCP.

Katherine Davis, associate professor at the Texas A&M University Department of Electrical and Computer Engineering, has partnered with the team to develop Phorensics. The team will also collaborate with the National Renewable Energy Laboratory (NREL) and industry partners on technology transfer and commercialization initiatives.

The Energy Department defines renewable energy as energy from unlimited, naturally replenished resources, such as the sun, tides, and wind. Renewable energy can be used for electricity generation, space and water heating and cooling, and transportation.

News Contact

John Popham

Communications Officer II

College of Computing | School of Cybersecurity and Privacy

Oct. 01, 2024

The Institute for Robotics and Intelligent Machines (IRIM) launched a new initiatives program, starting with several winning proposals, with corresponding initiative leads that will broaden the scope of IRIM’s research beyond its traditional core strengths. A major goal is to stimulate collaboration across areas not typically considered as technical robotics, such as policy, education, and the humanities, as well as open new inter-university and inter-agency collaboration routes. In addition to guiding their specific initiatives, these leads will serve as an informal internal advisory body for IRIM. Initiative leads will be announced annually, with existing initiative leaders considered for renewal based on their progress in achieving community building and research goals. We hope that initiative leads will act as the “faculty face” of IRIM and communicate IRIM’s vision and activities to audiences both within and outside of Georgia Tech.

Meet 2024 IRIM Initiative Leads

Stephen Balakirsky; Regents' Researcher, Georgia Tech Research Institute & Panagiotis Tsiotras; David & Andrew Lewis Endowed Chair, Daniel Guggenheim School of Aerospace Engineering | Proximity Operations for Autonomous Servicing

Why It Matters: Proximity operations in space refer to the intricate and precise maneuvers and activities that spacecraft or satellites perform when they are in close proximity to each other, such as docking, rendezvous, or station-keeping. These operations are essential for a variety of space missions, including crewed spaceflights, satellite servicing, space exploration, and maintaining satellite constellations. While this is a very broad field, this initiative will concentrate on robotic servicing and associated challenges. In this context, robotic servicing is composed of proximity operations that are used for servicing and repairing satellites in space. In robotic servicing, robotic arms and tools perform maintenance tasks such as refueling, replacing components, or providing operation enhancements to extend a satellite's operational life or increase a satellite’s capabilities.

Our Approach: By forming an initiative in this important area, IRIM will open opportunities within the rapidly evolving space community. This will allow us to create proposals for organizations ranging from NASA and the Defense Advanced Research Projects Agency to the U.S. Air Force and U.S. Space Force. This will also position us to become national leaders in this area. While several universities have a robust robotics program and quite a few have a strong space engineering program, there are only a handful of academic units with the breadth of expertise to tackle this problem. Also, even fewer universities have the benefit of an experienced applied research partner, such as the Georgia Tech Research Institute (GTRI), to undertake large-scale demonstrations. Georgia Tech, having world-renowned programs in aerospace engineering and robotics, is uniquely positioned to be a leader in this field. In addition, creating a workshop in proximity operations for autonomous servicing will allow the GTRI and Georgia Tech space robotics communities to come together and better understand strengths and opportunities for improvement in our abilities.

Matthew Gombolay; Assistant Professor, Interactive Computing | Human-Robot Society in 2125: IRIM Leading the Way

Why It Matters: The coming robot “apocalypse” and foundation models captured the zeitgeist in 2023, with “ChatGPT” becoming a topic at the dinner table and the probability of various scenarios of AI-driven technological doom being hotly debated on social media. Futuristic visions of ubiquitous embodied Artificial Intelligence (AI) and robotics have become tangible. The proliferation and effectiveness of first-person view drones in the Russo-Ukrainian War, autonomous taxi services along with their failures, and inexpensive robots (e.g., Tesla’s Optimus and Unitree’s G1) have made it seem like children alive today may have robots embedded in their everyday lives. Yet, there is a lack of trust in the public leadership bringing us into this future to ensure that robots are developed and deployed with beneficence.

Our Approach: This proposal seeks to assemble a team of bright, savvy operators across academia, government, media, nonprofits, industry, and community stakeholders to develop a roadmap for how IRIM can be the most trusted voice guiding the public through the next 100 years of innovation in robotics. We propose to carry out the activities necessary to develop that roadmap, Robots in 2125: Altruistic and Integrated Human-Robot Society. We also aim to build partnerships to promulgate these outcomes across Georgia Tech’s campus and internationally.

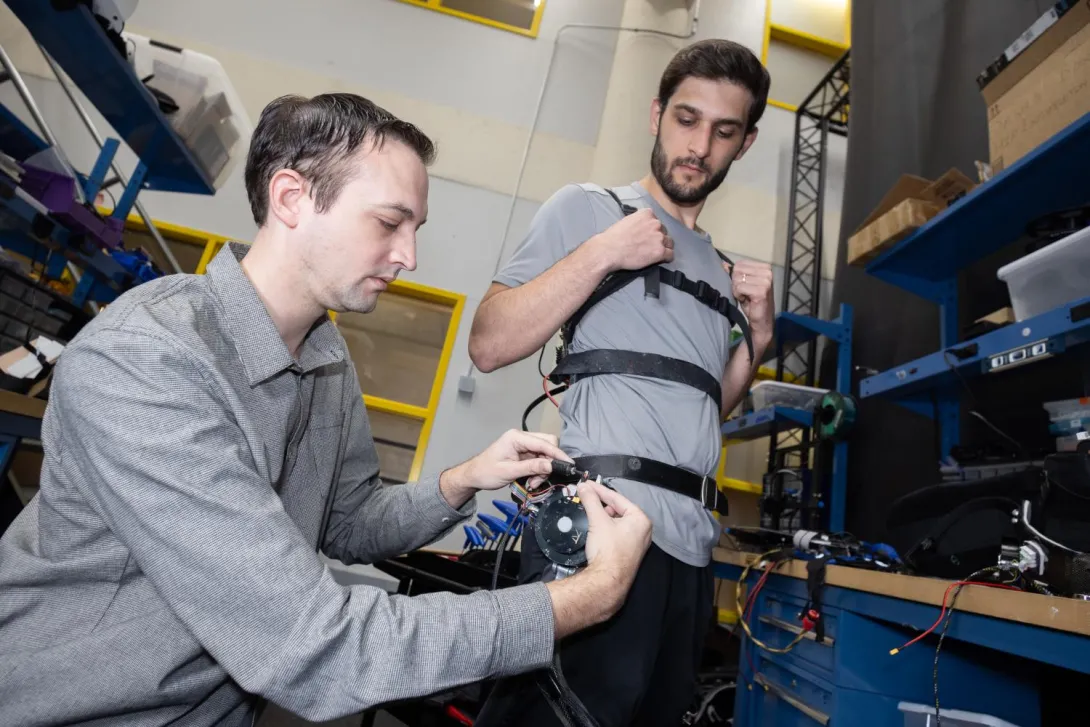

Gregory Sawicki; Joseph Anderer Faculty Fellow, School of Mechanical Engineering & Aaron Young; Associate Professor, Mechanical Engineering | Wearable Robotic Augmentation for Human Resilience

Why It Matters: The field of robotics continues to evolve beyond rigid, precision-controlled machines for amplifying production on manufacturing assembly lines toward soft, wearable systems that can mediate the interface between human users and their natural and built environments. Recent advances in materials science have made it possible to construct flexible garments with embedded sensors and actuators (e.g., exosuits). In parallel, computers continue to get smaller and more powerful, and state-of-the-art machine learning algorithms can extract useful information from more extensive volumes of input data in real time. Now is the time to embed lean, powerful, sensorimotor elements alongside high-speed and efficient data processing systems in a continuous wearable device.

Our Approach: The mission of the Wearable Robotic Augmentation for Human Resilience (WeRoAHR) initiative is to merge modern advances in sensing, actuation, and computing technology to imagine and create adaptive, wearable augmentation technology that can improve human resilience and longevity across the physiological spectrum — from behavioral to cellular scales. The near-term effort (~2-3 years) will draw on Georgia Tech’s existing ecosystem of basic scientists and engineers to develop WeRoAHR systems that will focus on key targets of opportunity to increase human resilience (e.g., improved balance, dexterity, and stamina). These initial efforts will establish seeds for growth intended to help launch larger-scale, center-level efforts (>5 years).

Panagiotis Tsiotras; David & Andrew Lewis Endowed Chair, Daniel Guggenheim School of Aerospace Engineering & Sam Coogan; Demetrius T. Paris Junior Professor, School of Electrical and Computer Engineering | Initiative on Reliable, Safe, and Secure Autonomous Robotics

Why It Matters: The design and operation of reliable systems is primarily an integration issue that involves not only each component (software, hardware) being safe and reliable but also the whole system being reliable (including the human operator). The necessity for reliable autonomous systems (including AI agents) is more pronounced for “safety-critical” applications, where the result of a wrong decision can be catastrophic. This is quite a different landscape from many other autonomous decision systems (e.g., recommender systems) where a wrong or imprecise decision is inconsequential.

Our Approach: This new initiative will investigate the development of protocols, techniques, methodologies, theories, and practices for designing, building, and operating safe and reliable AI and autonomous engineering systems. It will also contribute toward promoting a culture of safety and accountability, grounded in rigorous objective metrics and methodologies, among the designers and operators of AI, autonomous, and intelligent machines, so that such systems can be adopted widely and with confidence in safety-critical areas. The proposed new initiative aims to establish Georgia Tech as the leader in the design of autonomous, reliable engineering robotic systems and investigate the opportunity for a federally funded or industry-funded research center (National Science Foundation (NSF) Science and Technology Centers/Engineering Research Centers) in this area.

Colin Usher; Robotics Systems and Technology Branch Head, GTRI | Opportunities for Agricultural Robotics and New Collaborations

Why It Matters: The concepts for how robotics might be incorporated more broadly in agriculture vary widely, ranging from large-scale systems to teams of small systems operating in farms, enabling new possibilities. In addition, there are several application areas in agriculture, ranging from planting, weeding, crop scouting, and general growing through harvesting. Georgia Tech is not a land-grant university, making our ability to capture some of the opportunities in agricultural research more challenging. By partnering with a land-grant university such as the University of Georgia (UGA), we can leverage this relationship to go after these opportunities that, historically, were not available.

Our Approach: We plan to build collaborations first by leveraging relationships we have already formed within GTRI, Georgia Tech, and UGA. We will achieve this through a significant level of networking, supported by workshops and/or seminars with which to recruit faculty and form a roadmap for research within the respective universities. Our goal is to identify and pursue multiple opportunities for robotics-related research in both row-crop and animal-based agriculture. We believe that we have a strong opportunity, starting with formalizing a program with the partners we have worked with before, with the potential to improve and grow the research area by incorporating new faculty and staff with a unified vision of ubiquitous robotics systems in agriculture. We plan to achieve this through scheduled visits with interested faculty, attendance at relevant conferences, and ultimately hosting a workshop to formalize and define a research roadmap.

Ye Zhao; Assistant Professor, School of Mechanical Engineering | Safe, Social, & Scalable Human-Robot Teaming: Interaction, Synergy, & Augmentation

Why It Matters: Collaborative robots in unstructured environments such as construction and warehouse sites show great promise in working with humans on repetitive and dangerous tasks to improve efficiency and productivity. However, pre-programmed and nonflexible interaction behaviors of existing robots lower the naturalness and flexibility of the collaboration process. Therefore, it is crucial to improve physical interaction behaviors of the collaborative human-robot teaming.

Our Approach: This proposal will advance the understanding of the bi-directional influence and interaction of human-robot teaming for complex physical activities in dynamic environments by developing new methods to predict worker intention via multi-modal wearable sensing, reasoning about complex human-robot-workspace interaction, and adaptively planning the robot’s motion considering both human teaming dynamics and physiological and cognitive states. More importantly, our team plans to prioritize efforts to (i) broaden the scope of IRIM’s autonomy research by incorporating psychology, cognitive, and manufacturing research not typically considered as technical robotics research areas; (ii) initiate new IRIM education, training, and outreach programs through collaboration with team members from various Georgia Tech educational and outreach programs (including Project ENGAGES, VIP, and CEISMC) as well as the AUCC (the world’s largest consortium of African American private institutions of higher education), which comprises Clark Atlanta University, Morehouse College, and Spelman College; and (iii) aim for large governmental grants such as DOD MURI, NSF NRT, and NSF Future of Work programs.

-Christa M. Ernst

Sep. 19, 2024

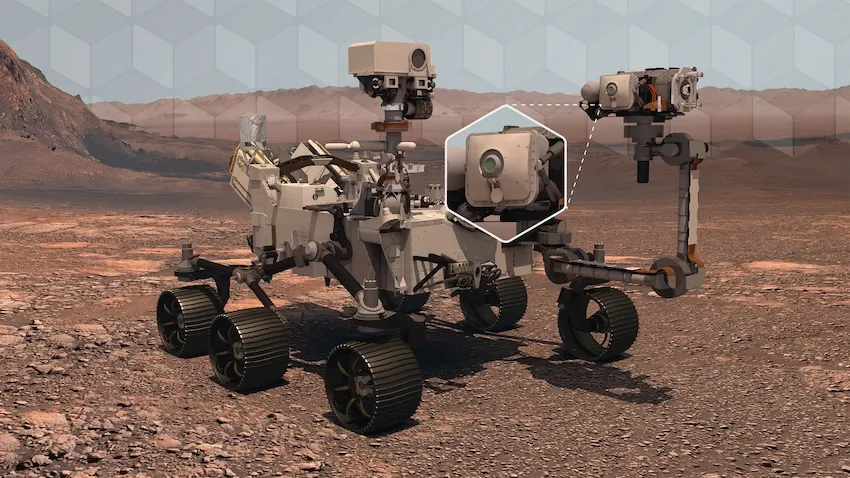

A new algorithm tested on NASA’s Perseverance Rover on Mars may lead to better forecasting of hurricanes, wildfires, and other extreme weather events that impact millions globally.

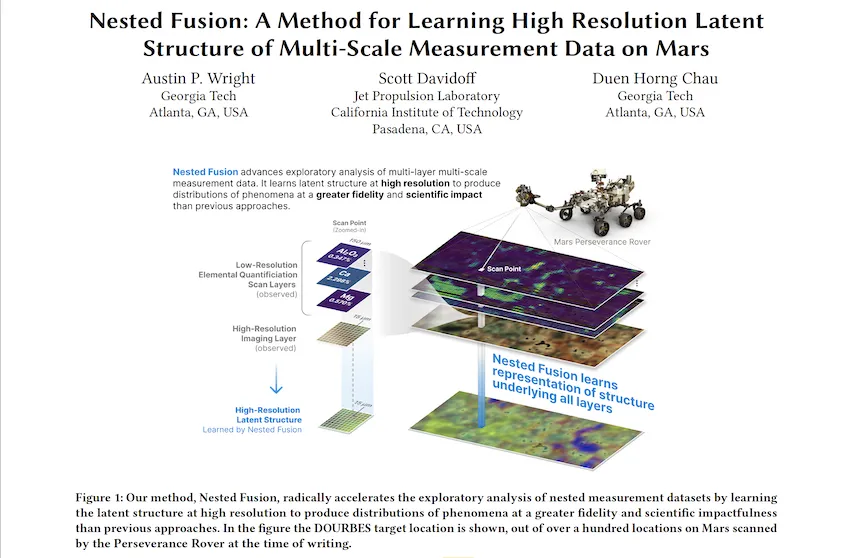

Georgia Tech Ph.D. student Austin P. Wright is first author of a paper that introduces Nested Fusion. The new algorithm improves scientists’ ability to search for past signs of life on the Martian surface.

In addition to supporting NASA’s Mars 2020 mission, Nested Fusion’s methods can be applied by scientists in other fields who work with large, overlapping datasets.

Wright presented Nested Fusion at the 2024 International Conference on Knowledge Discovery and Data Mining (KDD 2024) where it was a runner-up for the best paper award. KDD is widely considered the world's most prestigious conference for knowledge discovery and data mining research.

“Nested Fusion is really useful for researchers in many different domains, not just NASA scientists,” said Wright. “The method visualizes complex datasets that can be difficult to get an overall view of during the initial exploratory stages of analysis.”

Nested Fusion combines datasets with different resolutions to produce a single, high-resolution visual distribution. Using this method, NASA scientists can more easily analyze multiple datasets from various sources at the same time. This can lead to faster studies of Mars’ surface composition to find clues of previous life.

The algorithm demonstrates how data science impacts traditional scientific fields like chemistry, biology, and geology.

Even further, Wright is developing Nested Fusion applications to model shifting climate patterns, plant and animal life, and other concepts in the earth sciences. The same method can combine overlapping datasets from satellite imagery, biomarkers, and climate data.

“Users have extended Nested Fusion and similar algorithms toward earth science contexts, which we have received very positive feedback,” said Wright, who studies machine learning (ML) at Georgia Tech.

“Cross-correlational analysis takes a long time to do and is not done in the initial stages of research when patterns appear and form new hypotheses. Nested Fusion enables people to discover these patterns much earlier.”

Wright is the data science and ML lead for PIXLISE, the software that NASA JPL scientists use to study data from the Mars Perseverance Rover.

Perseverance uses its Planetary Instrument for X-ray Lithochemistry (PIXL) to collect data on mineral composition of Mars’ surface. PIXL’s two main tools that accomplish this are its X-ray Fluorescence (XRF) Spectrometer and Multi-Context Camera (MCC).

When PIXL scans a target area, it creates two co-aligned datasets from the components. XRF collects a sample's fine-scale elemental composition. MCC produces images of a sample to gather visual and physical details like size and shape.

A single XRF spectrum corresponds to approximately 100 MCC imaging pixels for every scan point. Each tool’s unique resolution makes mapping between overlapping data layers challenging. However, Wright and his collaborators designed Nested Fusion to overcome this hurdle.
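The resolution mismatch described above can be illustrated with a short sketch. This is not the Nested Fusion algorithm itself, only a toy picture of the one-to-many correspondence between a scan point and the image pixels nested beneath it. The square 10 x 10 patch, the function names, and the toy image are all assumptions made for illustration; the article states only that one spectrum corresponds to roughly 100 pixels.

```python
from statistics import mean

BLOCK = 10  # assumed: each scan point covers a 10 x 10 pixel patch (~100 pixels)


def pixels_for_scan_point(row, col, block=BLOCK):
    """Return the (y, x) image pixels nested under one scan point."""
    return [
        (row * block + dy, col * block + dx)
        for dy in range(block)
        for dx in range(block)
    ]


def pool_to_scan_resolution(image, block=BLOCK):
    """Average each block of pixels so the image matches the coarser scan grid."""
    n_rows = len(image) // block
    n_cols = len(image[0]) // block
    return [
        [
            mean(image[y][x] for y, x in pixels_for_scan_point(r, c, block))
            for c in range(n_cols)
        ]
        for r in range(n_rows)
    ]


# Toy 20 x 20 image in which every pixel's value is its row index.
image = [[float(y) for _ in range(20)] for y in range(20)]
pooled = pool_to_scan_resolution(image)  # 2 x 2 grid, one value per scan point
```

Averaging pixels down to the scan grid is the simplest way to line the two layers up, but it throws away the fine image detail. Keeping the nested structure instead, as the article describes Nested Fusion doing, is what allows a single high-resolution view.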

In addition to advancing data science, Nested Fusion improves NASA scientists' workflow. Using the method, a single scientist can form an initial estimate of a sample’s mineral composition in a matter of hours. Before Nested Fusion, the same task required days of collaboration between teams of experts on each different instrument.

“I think one of the biggest lessons I have taken from this work is that it is valuable to always ground my ML and data science problems in actual, concrete use cases of our collaborators,” Wright said.

“I learn from collaborators what parts of data analysis are important to them and the challenges they face. By understanding these issues, we can discover new ways of formalizing and framing problems in data science.”

Wright presented Nested Fusion at KDD 2024, held Aug. 25-29 in Barcelona, Spain. KDD is an official special interest group of the Association for Computing Machinery. The conference is one of the world’s leading forums for knowledge discovery and data mining research.

Nested Fusion won runner-up for the best paper in the applied data science track, which comprised over 150 papers. Hundreds of other papers were presented at the conference’s research track, workshops, and tutorials.

Wright’s mentors, Scott Davidoff and Polo Chau, co-authored the Nested Fusion paper. Davidoff is a principal research scientist at the NASA Jet Propulsion Laboratory. Chau is a professor at the Georgia Tech School of Computational Science and Engineering (CSE).

“I was extremely happy that this work was recognized with the best paper runner-up award,” Wright said. “This kind of applied work can sometimes be hard to find the right academic home, so finding communities that appreciate this work is very encouraging.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Aug. 30, 2024

The Cloud Hub, a key initiative of the Institute for Data Engineering and Science (IDEaS) at Georgia Tech, recently concluded a successful Call for Proposals focused on advancing the field of Generative Artificial Intelligence (GenAI). This initiative, made possible by generous gift funding from Microsoft, aims to push the boundaries of GenAI research by supporting projects that explore both foundational aspects and innovative applications of this cutting-edge technology.

Call for Proposals: A Gateway to Innovation

Launched in early 2024, the Call for Proposals invited researchers from across Georgia Tech to submit their innovative ideas on GenAI. The scope was broad, encouraging proposals that spanned foundational research, system advancements, and novel applications in various disciplines, including arts, sciences, business, and engineering. A special emphasis was placed on projects that addressed responsible and ethical AI use.

The response from the Georgia Tech research community was overwhelming, with 76 proposals submitted by teams eager to explore this transformative technology. After a rigorous selection process, eight projects were selected for support. Each awarded team will also benefit from access to Microsoft’s Azure cloud resources.

Recognizing Microsoft’s Generous Contribution

This successful initiative was made possible through the generous support of Microsoft, whose contribution of research resources has empowered Georgia Tech researchers to explore new frontiers in GenAI. By providing access to Azure’s advanced tools and services, Microsoft has played a pivotal role in accelerating GenAI research at Georgia Tech, enabling researchers to tackle some of the most pressing challenges and opportunities in this rapidly evolving field.

Looking Ahead: Pioneering the Future of GenAI

The awarded projects, set to commence in Fall 2024, represent a diverse array of research directions, from improving the capabilities of large language models to innovative applications in data management and interdisciplinary collaborations. These projects are expected to make significant contributions to the body of knowledge in GenAI and are poised to have a lasting impact on the industry and beyond.

IDEaS and the Cloud Hub are committed to supporting these teams as they embark on their research journeys. The outcomes of these projects will be shared through publications and highlighted on the Cloud Hub web portal, ensuring visibility for the groundbreaking work enabled by this initiative.

Congratulations to the Fall 2024 Winners

- Annalisa Bracco | EAS "Modeling the Dispersal and Connectivity of Marine Larvae with GenAI Agents" [proposal co-funded with support from the Brook Byers Institute for Sustainable Systems]

- Yunan Luo | CSE “Designing New and Diverse Proteins with Generative AI”

- Kartik Goyal | IC “Generative AI for Greco-Roman Architectural Reconstruction: From Partial Unstructured Archaeological Descriptions to Structured Architectural Plans”

- Victor Fung | CSE “Intelligent LLM Agents for Materials Design and Automated Experimentation”

- Noura Howell | LMC “Applying Generative AI for STEM Education: Supporting AI literacy and community engagement with marginalized youth”

- Neha Kumar | IC “Towards Responsible Integration of Generative AI in Creative Game Development”

- Maureen Linden | Design “Best Practices in Generative AI Used in the Creation of Accessible Alternative Formats for People with Disabilities”

- Surya Kalidindi | ME & MSE “Accelerating Materials Development Through Generative AI Based Dimensionality Expansion Techniques”

- Tuo Zhao | ISyE “Adaptive and Robust Alignment of LLMs with Complex Rewards”

News Contact

Christa M. Ernst - Research Communications Program Manager

christa.ernst@research.gatech.edu

Aug. 21, 2024

- Written by Benjamin Wright -

As Georgia Tech establishes itself as a national leader in AI research and education, some researchers on campus are putting AI to work to help meet sustainability goals in a range of areas including climate change adaptation and mitigation, urban farming, food distribution, and life cycle assessments while also focusing on ways to make sure AI is used ethically.

Josiah Hester, interim associate director for Community-Engaged Research in the Brook Byers Institute for Sustainable Systems (BBISS) and associate professor in the School of Interactive Computing, sees these projects as wins from both a research standpoint and for the local, national, and global communities they could affect.

“These faculty exemplify Georgia Tech's commitment to serving and partnering with communities in our research,” he says. “Sustainability is one of the most pressing issues of our time. AI gives us new tools to build more resilient communities, but the complexities and nuances in applying this emerging suite of technologies can only be solved by community members and researchers working closely together to bridge the gap. This approach to AI for sustainability strengthens the bonds between our university and our communities and makes lasting impacts due to community buy-in.”

Flood Monitoring and Carbon Storage

Peng Chen, assistant professor in the School of Computational Science and Engineering in the College of Computing, focuses on computational mathematics, data science, scientific machine learning, and parallel computing. Chen is combining these areas of expertise to develop algorithms to assist in practical applications such as flood monitoring and carbon dioxide capture and storage.

He is currently working on a National Science Foundation (NSF) project with colleagues in Georgia Tech’s School of City and Regional Planning and from the University of South Florida to develop flood models in the St. Petersburg, Florida area. As a low-lying state with more than 8,400 miles of coastline, Florida is one of the states most at risk from sea level rise and flooding caused by extreme weather events sparked by climate change.

Chen’s novel approach to flood monitoring takes existing high-resolution hydrological and hydrographical mapping and uses machine learning to incorporate real-time updates from social media users and existing traffic cameras to run rapid, low-cost simulations using deep neural networks. Current flood monitoring software is resource- and time-intensive. Chen’s goal is to produce live modeling that can be used to warn residents and allocate emergency response resources as conditions change. That information would be available to the general public through a portal his team is working on.

“This project focuses on one particular community in Florida,” Chen says, “but we hope this methodology will be transferable to other locations and situations affected by climate change.”

In addition to the flood-monitoring project in Florida, Chen and his colleagues are developing new methods to improve the reliability and cost-effectiveness of storing carbon dioxide in underground rock formations. The process is plagued with uncertainty about the porosity of the bedrock, the optimal distribution of monitoring wells, and the rate at which carbon dioxide can be injected without over-pressurizing the bedrock, leading to collapse. The new simulations are fast and inexpensive, and they minimize the risk of failure, which also decreases the cost of construction.

“Traditional high-fidelity simulation using supercomputers takes hours and lots of resources,” says Chen. “Now we can run these simulations in under one minute using AI models without sacrificing accuracy. Even when you factor in AI training costs, this is a huge savings in time and financial resources.”

Flood monitoring and carbon capture are passion projects for Chen, who sees an opportunity to use artificial intelligence to increase the pace and decrease the cost of problem-solving.

“I’m very excited about the possibility of solving grand challenges in the sustainability area with AI and machine learning models,” he says. “Engineering problems are full of uncertainty, but by using this technology, we can characterize the uncertainty in new ways and propagate it throughout our predictions to optimize designs and maximize performance.”
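To make the idea of propagating uncertainty through a fast model concrete, here is a minimal Monte Carlo sketch. It is not Chen's method or model. The `toy_surrogate` formula, the porosity range, and the pressure limit are hypothetical stand-ins chosen only so the example runs. The point is the pattern: because the fast model is cheap to evaluate, it can be run thousands of times over sampled inputs to estimate how likely an unsafe outcome is.

```python
import random

LIMIT = 8.0  # assumed safety threshold, arbitrary units


def toy_surrogate(porosity, injection_rate):
    """Hypothetical stand-in for a trained fast model; returns a pressure-like score."""
    return injection_rate / porosity


def propagate(injection_rate, n_samples=10_000, seed=0):
    """Sample the uncertain input and push every sample through the fast model."""
    rng = random.Random(seed)
    return [
        # Assumed uncertainty: porosity is uniform between 0.1 and 0.3.
        toy_surrogate(rng.uniform(0.1, 0.3), injection_rate)
        for _ in range(n_samples)
    ]


def exceedance_probability(outputs, limit=LIMIT):
    """Fraction of sampled outcomes that exceed the limit."""
    return sum(o > limit for o in outputs) / len(outputs)


low = propagate(injection_rate=1.0)
high = propagate(injection_rate=2.0)
print(exceedance_probability(low), exceedance_probability(high))
```

In this toy setup, doubling the injection rate sharply raises the estimated chance of exceeding the limit, which is the kind of trade-off a designer could explore before committing to a rate.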

Urban Farming and Optimization

Yongsheng Chen works at the intersection of food, energy, and water. As the Bonnie W. and Charles W. Moorman Professor in the School of Civil and Environmental Engineering and director of the Nutrients, Energy, and Water Center for Agriculture Technology, Chen is focused on making urban agriculture technologically feasible, financially viable, and, most importantly, sustainable. To do that he’s leveraging AI to speed up the design process and optimize farming and harvesting operations.

Chen’s closed-loop hydroponic system uses anaerobically treated wastewater for fertilization and irrigation by extracting and repurposing nutrients as fertilizer before filtering the water through polymeric membranes with nano-scale pores. Advancing filtration and purification processes depends on finding the right membrane materials to selectively separate contaminants, including antibiotics and per- and polyfluoroalkyl substances (PFAS). Chen and his team are using AI and machine learning to guide membrane material selection and fabrication to make contaminant separation as efficient as possible. Similarly, AI and machine learning are assisting in developing carbon capture materials such as ionic liquids that can retain carbon dioxide generated during wastewater treatment and redirect it to hydroponics systems, boosting food productivity.

“A fundamental angle of our research is that we do not see municipal wastewater as waste,” explains Chen. “It is a resource we can treat and recover components from to supply irrigation, fertilizer, and biogas, all while reducing the amount of energy used in conventional wastewater treatment methods.”

In addition to aiding in materials development, which reduces design time and production costs, Chen is using machine learning to optimize the growing cycle of produce, maximizing nutritional value. His USDA-funded vertical farm uses autonomous robots to measure critical cultivation parameters and take pictures without destroying plants. This data helps determine optimum environmental conditions, fertilizer supply, and harvest timing, resulting in a faster-growing, optimally nutritious plant with less fertilizer waste and lower emissions.

Chen’s work has received considerable federal funding. As the Urban Resilience and Sustainability Thrust Leader within the NSF-funded AI Institute for Advances in Optimization (AI4OPT), he has received additional funding to foster international collaboration in digital agriculture with colleagues across the United States and in Japan, Australia, and India.

Optimizing Food Distribution

At the other end of the agricultural spectrum is postdoc Rosemarie Santa González in the H. Milton Stewart School of Industrial and Systems Engineering, who is conducting her research under the supervision of Professor Chelsea White and Professor Pascal Van Hentenryck, the director of Georgia Tech’s AI Hub as well as the director of AI4OPT.

Santa González is working with the Wisconsin Food Hub Cooperative to help traditional farmers get their products into the hands of consumers as efficiently as possible to reduce hunger and food waste. Preventing food waste is a priority for both the EPA and USDA. Current estimates are that 30 to 40% of the food produced in the United States ends up in landfills. That wastes resources on the production end in the form of land, water, and chemical use, and again on the disposal end, not to mention the impact of the greenhouse gases released when wasted food decays.

To tackle this problem, Santa González and the Wisconsin Food Hub are helping small-scale farmers access refrigeration facilities and distribution chains. As part of her research, she is helping to develop AI tools that can optimize the logistics of the small-scale farmer supply chain while also making local consumers in underserved areas aware of what’s available so food doesn’t end up in landfills.

“This solution has to be accessible,” she says. “Not just in the sense that the food is accessible, but that the tools we are providing to them are accessible. The end users have to understand the tools and be able to use them. It has to be sustainable as a resource.”

Making AI accessible to people in the community is a core goal of the NSF’s AI Institute for Intelligent Cyberinfrastructure with Computational Learning in the Environment (ICICLE), one of the partners involved with the project.

“A large segment of the population we are working with, which includes historically marginalized communities, has a negative reaction to AI. They think of machines taking over, or data being stolen. Our goal is to democratize AI in these decision-support tools as we work toward the UN Sustainable Development Goal of Zero Hunger. There is so much power in these tools to solve complex problems that have very real results. More people will be fed and less food will spoil before it gets to people’s homes.”

Santa González hopes the tools they are building can be packaged and customized for food co-ops everywhere.

AI and Ethics

Like Santa González, Joe Bozeman III is also focused on the ethical and sustainable deployment of AI and machine learning, especially among marginalized communities. The assistant professor in the School of Civil and Environmental Engineering is an industrial ecologist committed to fostering ethical climate change adaptation and mitigation strategies. His SEEEL Lab works to make sure researchers understand the consequences of decisions before they move from academic concepts to policy decisions, particularly those that rely on data sets involving people and communities.

“With the administration of big data, there is a human tendency to assume that more data means everything is being captured, but that's not necessarily true,” he cautions. “More data could mean we're just capturing more of the data that already exists, while new research shows that we’re not including information from marginalized communities that have historically not been brought into the decision-making process. That includes underrepresented minorities, rural populations, people with disabilities, and neurodivergent people who may not interface with data collection tools.”

Bozeman is concerned that overlooking marginalized communities in data sets will result in decisions that at best ignore them and at worst cause them direct harm.

“Our lab doesn't wait for the negative harms to occur before we start talking about them,” explains Bozeman, who holds a courtesy appointment in the School of Public Policy. “Our lab forecasts what those harms will be so decision-makers and engineers can develop technologies that consider these things.”

He focuses on urbanization, the food-energy-water nexus, and the circular economy. He has found that much of the research in those areas is conducted in a vacuum without consideration for human engagement and the impact it could have when implemented.

Bozeman is lobbying for built-in tools and safeguards to mitigate the potential for harm from researchers using AI without appropriate consideration. He already sees a disconnect between the academic world and the public. Bridging that trust gap will require ethical uses of AI.

“We have to start rigorously including their voices in our decision-making to begin gaining trust with the public again. And with that trust, we can all start moving toward sustainable development. If we don't do that, I don't care how good our engineering solutions are, we're going to miss the boat entirely on bringing along the majority of the population.”

BBISS Support

Moving forward, Hester is excited about the impact the Brook Byers Institute for Sustainable Systems can have on AI and sustainability research through a variety of support mechanisms.

“BBISS continues to invest in faculty development and training in community-driven research strategies, including the Community Engagement Faculty Fellows Program (with the Center for Sustainable Communities Research and Education), while empowering multidisciplinary teams to work together to solve grand engineering challenges with AI by supporting the AI+Climate Faculty Interest Group, as well as partnering with and providing administrative support for community-driven research projects.”

News Contact

Brent Verrill, Research Communications Program Manager, BBISS

Jun. 04, 2024

Artificial intelligence and machine learning techniques are infused across the College of Engineering’s education and research.

From safer roads to new fuel cell technology, semiconductor designs to restoring bodily functions, Georgia Tech engineers are capitalizing on the power of AI to quickly make predictions or see danger ahead.

Explore some of the ways we are using AI to create a better future on the College's website.

This story was featured in the spring 2024 issue of Helluva Engineer magazine, produced biannually by the College of Engineering.

News Contact

Joshua Stewart

College of Engineering

Jun. 10, 2024

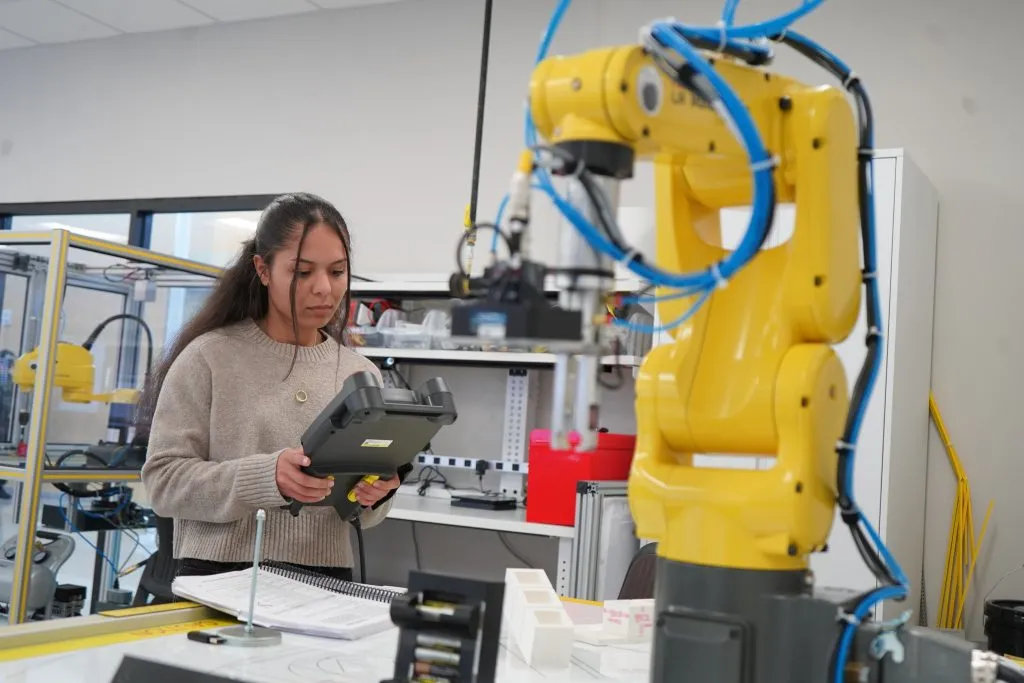

Naiya Salinas and her instructor, Deryk Stoops, looked back and forth between the large screen on the wall and a hand-held monitor.

Tracing between the lines of code, Salinas made a discovery: A character was missing.

The lesson was an important, real-world example of the problem-solving skills required when working in robotics. Salinas is one of a half-dozen students enrolled in the new AI-Enhanced Robotic Manufacturing program at the Georgia Veterans Education Career Transition Resource (VECTR) Center, which is setting a new standard for technology-focused careers.

The set-up of the lab was intentional, said Stoops, who designed the course modules and worked with local industry to determine their manufacturing needs. Then, with funding from the Georgia Tech Manufacturing Institute's (GTMI) Georgia Artificial Intelligence in Manufacturing (Georgia AIM) project, Stoops worked with administrators at Central Georgia Technical College to purchase robotics and other cutting-edge manufacturing tools.

As a result, the VECTR Center’s AI-Enhanced Robotic Manufacturing Studio trains veterans in industry-standard robotics, manufacturing modules, cameras, and network systems. This equipment gives students experience in a variety of robotics-based manufacturing applications. Graduates can also finish the 17-credit course with two certifications that carry some weight in the manufacturing world.

“After getting the Georgia AIM grant, we pulled together a roundtable with industry. And then we did site visits to see how they pulled AI and robotics into the space,” said Stoops. “All the equipment in here is the direct result of industry feedback.”

Statewide Strategic Effort

Funded by a $65 million grant from the federal Economic Development Administration, Georgia AIM is a network of projects across the state born out of GTMI and led by Georgia Tech’s Enterprise Innovation Institute. These projects work to connect the manufacturing community with smart technologies and a ready workforce. Central Georgia received around $4 million as part of the initiative to advance innovation, workforce development and STEM education in support of local manufacturing and Robins Air Force Base.

Georgia AIM pulls together a host of regional partners all working toward a common goal of increasing STEM education, expanding access to technology, and enhancing the use of AI among local manufacturers. This partnership includes Fort Valley State University, the Middle Georgia Innovation Project led by the Development Authority of Houston County, Central Georgia Technical College, which administers the VECTR Center, and the 21st Century Partnership.

“This grant will help us turn our vision for both the Middle Georgia Innovation Project and the Middle Georgia STEM Alliance, along with our partners, into reality, advancing this region and supporting the future of Robins AFB,” said Brig. Gen. John Kubinec, USAF (ret.), president and chief executive officer of the 21st Century Partnership.

Georgia AIM funding for Central Georgia Technical College and Fort Valley State focused on enhancing technology and purchasing new components to assist in education. At Fort Valley State, a mobile lab will launch later this year to take AI-enhanced technologies to underserved parts of the state, while Central Georgia Tech invested in an AI-enhanced robotics manufacturing lab at the VECTR Center.

“This funding will help bring emerging technology throughout our service area and beyond, to our students, economy, and Robins Air Force Base,” said Dr. Ivan Allen, president of Central Georgia Technical College. “Thanks to the power of this partnership, our faculty and students will have the opportunity to work directly with modern manufacturing technology, giving our students the experience and education needed to transition from the classroom to the workforce in an in-demand industry.”

New Gateway for Vets

The VECTR Center’s AI-Enhanced Robotics Manufacturing Studio includes FANUC robotic systems, Rockwell Automation programmable logic controllers, Cognex AI-enabled machine vision systems, smart sensor networks, and a MiR autonomous mobile robot.

The studio graduated its first cohort of students in February and celebrated its ribbon-cutting ceremony on April 17 with a host of local officials and dignitaries. It was also an opportunity to celebrate the students, who are transitioning from a military career to civilian life.

The new technologies at the VECTR Center lab are opening new doors to a growing, cutting-edge field.

“From being in this class, you really start to see how the world is going toward AI. Not just ChatGPT, but everything — the world is going toward AI for sure now,” said Jordan Leonard, who worked in logistics and as a vehicle mechanic in the U.S. Army. Now, he’s upskilling into robotics and looking forward to using his new skills in maintenance. “What I want to do is go to school for instrumentation and electrical technician. But since a lot of industrial plants are trying to get more robots, for me this will be a step up from my coworkers by knowing these things.”

News Contact

Kristen Morales

Marketing Strategist

Georgia AIM (Artificial Intelligence in Manufacturing)

Jun. 04, 2024

Whether it’s typing an email or guiding travel from one destination to the next, artificial intelligence (AI) already plays a role in simplifying daily tasks.

But what if it could also help people live more efficiently — that is, more sustainably, with less waste?

It’s a concept that often runs through the mind of Iesha Baldwin, the inaugural Georgia AIM Fellow with the Partnership for Inclusive Innovation (PIN) at the Georgia Institute of Technology’s Enterprise Innovation Institute. Born out of the Georgia Tech Manufacturing Institute, the Georgia AIM (Artificial Intelligence in Manufacturing) project works with PIN fellows to advance the project's mission of equitably developing and deploying talent and innovation in AI for manufacturing throughout the state of Georgia.

When she accepted the PIN Fellowship for 2023, she saw an opportunity to learn more about the nexus of artificial intelligence, manufacturing, waste, and education. With a background in environmental studies and science, Baldwin studied methods for waste reduction, environmental protection, and science education.

“I took an interest in AI technology because I wanted to learn how it can be harnessed to solve the waste problem and create better science education opportunities for K-12 and higher education students,” said Baldwin.

This type of unique problem-solving is what defines the PIN Fellowship programs. Every year, a cohort of recent college graduates is selected, and each is paired with an industry that aligns with their expertise and career goals — specifically, cleantech, AI manufacturing, supply chain and logistics, and cybersecurity/information technology. Fellowships are one year, with fellows spending six months with a private company and then six months with a public organization.

Through the experience, fellows expand their professional network and drive connections between the public and private sectors. They also use the opportunity to work on special projects that involve using new technologies in their area of interest.

With a focus on artificial intelligence in manufacturing, Baldwin led an inventory management project at the Georgia manufacturer Freudenberg-NOK, where the objective was to create an inventory management system that reduced manufacturing downtime and, as a result, increased efficiency and reduced waste.

She also worked in several capacities at Georgia Tech: supporting K-12 outreach programs at the Advanced Manufacturing Pilot Facility, assisting with energy research at the Marcus Nanotechnology Research Center, and auditing the infamous mechanical engineering course ME2110 to improve her design thinking and engineering skills.

“Learning about artificial intelligence is a process, and the knowledge gained was worth the academic adventure,” she said. “Because of the wonderful support at Georgia Tech, Freudenberg-NOK, PIN, and Georgia AIM, I feel confident about connecting environmental sustainability and technology in a way that makes communities more resilient and sustainable.”

Since leaving the PIN Fellowship, Baldwin has connected her love for education, science, and environmental sustainability through her new role as the inaugural sustainability coordinator for Spelman College, her alma mater. In this role, she is responsible for supporting campus sustainability initiatives.

News Contact

Kristen Morales

Marketing Strategist

Georgia Artificial Intelligence in Manufacturing

May 23, 2024

Yongsheng Chen, Bonnie W. and Charles W. Moorman IV Professor in Georgia Tech's School of Civil and Environmental Engineering, has been awarded a $300,000 National Science Foundation (NSF) grant to spearhead efforts to enhance sustainable agriculture practices using innovative AI solutions.

The collaborative project, named EAGER: AI4OPT-AG: Advancing Quad Collaboration via Digital Agriculture and Optimization, is a joint effort initiated by Georgia Tech in partnership with esteemed institutions in Japan, Australia, and India. The project aims to drive advancements in digital agriculture and optimization, ultimately supporting food security for future generations.

Chen, who also leads the Urban Sustainability and Resilience Thrust for the NSF Artificial Intelligence Research Institute for Advances in Optimization (AI4OPT), is excited about this new opportunity. "I am thrilled to lead this initiative, which marks a significant step forward in harnessing artificial intelligence (AI) to address pressing issues in sustainable agriculture," he said.

Highlighting the importance of AI in revolutionizing agriculture, Chen explained, "AI enables swift, accurate, and non-destructive assessments of plant productivity, optimizes nutritional content, and enhances fertilizer usage efficiency. These advancements are crucial for mitigating agriculture-related greenhouse gas emissions and solving climate change challenges."

News Contact

Breon Martin

AI Research Communications Manager

Georgia Tech

Apr. 19, 2024

When U.S. Rep. Earl L. “Buddy” Carter from Georgia’s 1st District visited Atlanta recently, one of his top priorities was meeting with the experts at Georgia Tech’s 20,000-square-foot Advanced Manufacturing Pilot Facility (AMPF).

Carter was recently named the House Energy and Commerce Committee’s chair of the Environment, Manufacturing, and Critical Materials Subcommittee, a group that concerns itself primarily with soil, air, noise, and water pollution, as well as emergency environmental response, whether physical or cyber-related.

Because AMPF’s focus dovetails with subcommittee interests, the facility was a fitting stop for Carter, who was welcomed for an afternoon tour and series of live demonstrations. Programs within Georgia Tech’s Enterprise Innovation Institute — specifically the Georgia Artificial Intelligence in Manufacturing (Georgia AIM) and Georgia Manufacturing Extension Partnership (GaMEP) — were well represented.

“Innovation is extremely important,” Carter said during his April 1 visit. “In order to handle some of our problems, we’ve got to have adaptation, mitigation, and innovation. I’ve always said that the greatest innovators, the greatest scientists in the world, are right here in the United States. I’m so proud of Georgia Tech and what they do for our state and for our nation.”

Carter’s AMPF visit began with an introduction by Thomas Kurfess, Regents' Professor and HUSCO/Ramirez Distinguished Chair in Fluid Power and Motion Control in the George W. Woodruff School of Mechanical Engineering and executive director of the Georgia Tech Manufacturing Institute; Steven Ferguson, principal research scientist and managing director at Georgia AIM; research engineer Kyle Saleeby; and Donna Ennis, the Enterprise Innovation Institute’s director of community engagement and program development, and co-director of Georgia AIM.

Ennis provided an overview of Georgia AIM, while Ferguson spoke on the Manufacturing 4.0 Consortium and Kurfess detailed the AMPF origin story, before introducing four live demonstrations.

The first of these featured Chuck Easley, Professor of the Practice in the Scheller College of Business, who elaborated on supply chain issues. Afterward, Alan Burl of EPICS: Enhanced Preparation for Intelligent Cybermanufacturing Systems and mechanical engineer Melissa Foley led a brief information session on hybrid turbine blade repair.

Finally, GaMEP project manager Michael Barker expounded on GaMEP’s cybersecurity services, and Deryk Stoops of Central Georgia Technical College detailed the Georgia AIM-sponsored AI robotics training program at the Georgia Veterans Education Career Transition Resource (VECTR) Center, which offers training and assistance to those making the transition from military to civilian life.

The topic of artificial intelligence, in all its subtlety and nuance, was of particular interest to Carter.

“AI is the buzz in Washington, D.C.,” he said. “Whether it be healthcare, energy, [or] science, we on the Energy and Commerce Committee look at it from a sense [that there’s] a very delicate balance, and we understand the responsibility. But we want to try to benefit from this as much as we can.”

“I heard something today I haven’t heard before,” Carter continued, “and that is instead of calling it artificial intelligence, we refer to it as ‘augmented intelligence.’ I think that’s a great term, and certainly something I’m going to take back to Washington with me.”

“It was a pleasure to host Rep. Carter for a firsthand look at AMPF,” shared Ennis, “which is uniquely positioned to offer businesses the opportunity to collaborate with Georgia Tech researchers and students and to hear about Georgia AIM.

“At Georgia AIM, we’re committed to making the state a leader in artificial intelligence-assisted manufacturing, and we’re grateful for Congressman Carter’s interest and support of our efforts.”

News Contact

Eve Tolpa

Senior Writer/Editor

Enterprise Innovation Institute (EI2)
