Jul. 10, 2025

In a bold step to advance AI across Latin America, Georgia Tech is helping Panama develop its first National Artificial Intelligence Strategy—leveraging world-class research, global collaboration, and human-centered design.

In partnership with Panama’s National Secretariat of Science, Technology and Innovation (Secretaría Nacional de Ciencia, Tecnología e Innovación, or SENACYT) and Georgia Tech Panama, Tech AI, the AI Hub at Georgia Tech, co-led a series of multisectoral workshops in Panama City on July 7–8. The initiative convened voices from government, academia, civil society, and the private sector to co-create an ethical, inclusive, and forward-looking roadmap for AI in Panama.

“We’re moving forward with one of the most exciting and important processes for Panama’s future: the development of our National Artificial Intelligence Strategy,” said Franklin A. Morales, Head of International Technical Cooperation at SENACYT, in a public statement. “Georgia Tech’s expertise is helping us shape a strategy that’s both ambitious and grounded in global best practices.”

The workshops were facilitated by Pascal Van Hentenryck, Director of Tech AI, the AI Hub at Georgia Tech and the NSF-funded AI Institute for Advances in Optimization (AI4OPT), and Tim Brown, Academic Program Director for AI at Georgia Tech Professional Education. Through interactive working groups, participants assessed Panama’s AI landscape, identified key challenges and opportunities, and helped lay the foundation for long-term national impact.

In a public statement, Van Hentenryck noted:

“We had the honor to spend three days in Panama working on their National AI Strategy with SENACYT, Georgia Tech Panama, and so many stakeholders who contributed their expertise, talent, and time. More to come, obviously. And thank you to the teams at SENACYT, Georgia Tech Panama, and Tech AI at Georgia Tech for an amazing organization.”

SENACYT’s vision for Panama’s AI future emphasizes the role of technology in advancing opportunity and improving lives. “The future is not something we wait for—it’s something we build together,” Morales added in a separate public statement.

Additional contributions from leaders across Panama’s innovation ecosystem emphasized the importance of developing homegrown talent, applying AI in high-impact sectors like health and education, and serving as a regional testbed for responsible AI solutions.

“This goes beyond technology. It’s about how we use artificial intelligence to improve people’s lives, make our systems more efficient, and elevate Panamanian talent,” shared a representative from Escala Latam. “We have a big opportunity: to train local talent, to scale responsible solutions, and to build, from Panama, solutions with global impact.”

The initiative reflects Georgia Tech’s broader commitment to advancing AI as a public good.

Through Tech AI and partnerships like this one, the Institute helps governments, industries, and communities around the world design AI strategies that are technically sound, globally relevant, and locally empowering.

“Artificial intelligence has been identified by SENACYT as a critical and emerging technology that requires urgent action to maximize its impact on the country’s economy, innovation capacity, and competitiveness,” said Eduardo Ortega Barría, National Secretary of Science, Technology and Innovation. “That’s why the National AI Strategy we are developing prioritizes broad and participatory reflection—this is a crucial step toward building a shared vision.”

As nations worldwide navigate the rise of artificial intelligence, Georgia Tech stands at the forefront, helping build AI strategies that are not only technically advanced but fundamentally human-centered.

GET INVOLVED

The public is also invited to shape the strategy. SENACYT launched a National Artificial Intelligence Survey, available through July 31, 2025, via www.SENACYT.gob.pa and SENACYT’s social media, to collect ideas, questions, and concerns from residents across Panama. The survey includes 16 questions and is open to all residents of Panama, both nationals and foreigners. Its purpose is to gather perceptions, concerns, and opportunities to be considered in the national strategy.

About Tech AI

Tech AI is Georgia Tech’s interdisciplinary AI research and policy hub, bringing together expertise in optimization, robotics, ethics, education, and public-sector applications. With a mission to advance AI for social good, Tech AI helps partners across the globe design and deploy trustworthy, scalable AI systems.

About SENACYT

The National Secretariat of Science, Technology and Innovation (SENACYT) is an autonomous institution whose mission is to make science and technology tools for the sustainable development of Panama. Our projects and programs focus on advancing the country’s scientific and technological capabilities to close inequality gaps and promote equitable development that improves quality of life for all Panamanians.

News Contact

Breon Martin

AI Marketing Communications Manager

Jul. 10, 2025

Researchers at Georgia Tech have developed a new artificial intelligence tool that dramatically improves how companies plan their supply chains, cutting down the time and cost it takes to generate complex production and inventory schedules.

The tool, known as PROPEL, combines machine learning with optimization techniques to help manufacturers make better decisions in less time. It was created by researchers at the NSF AI Institute for Advances in Optimization, or AI4OPT, based at Georgia Tech under Tech AI (the AI Hub at Georgia Tech).

The technology is already being tested on real-world supply chain data provided by Kinaxis, a Canada-based company that supplies planning software to global manufacturers in industries ranging from automotive to consumer goods.

Vahid Eghbal Akhlaghi, senior research scientist at Kinaxis and former postdoctoral fellow at AI4OPT and the H. Milton Stewart School of Industrial and Systems Engineering (ISyE) at Georgia Tech, said, “Our industry partner has been instrumental in shaping PROPEL’s capabilities. By validating the approach with real operational data, we ensured it addresses true bottlenecks in supply chain planning.”

"PROPEL represents a leap forward in how we tackle massive, complex planning problems," said Pascal Van Hentenryck, lead researcher, the director of Tech AI and the NSF AI4OPT Institute, and the A. Russell Chandler III Chair and Professor at Georgia Tech with appointments in the colleges of engineering and computing. "By combining supervised and reinforcement learning, we can make near-optimal industrial-scale decisions, an order of magnitude faster."

Traditional supply chain planning problems are typically solved using mathematical models that require immense computing power—often too much to meet real-time business needs. PROPEL, short for Predict-Relax-Optimize using LEarning, reduces this burden by teaching the AI model to first eliminate irrelevant decisions and then fine-tune the solution to meet quality standards.
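The predict, relax, and optimize steps described above can be pictured with a toy sketch. Everything in it is an assumption made for illustration and none of it comes from the PROPEL code: the problem is a tiny 0/1 knapsack rather than a supply chain model, the hand-written `scores` stand in for a trained predictor, the solver is brute force, the quality check is the gap to a fractional (LP) bound, and the relax step simply frees one more item at a time instead of using a learned policy.

```python
from itertools import product


def solve_reduced(values, weights, capacity, free):
    """Brute-force the 0/1 knapsack over the free items; all other items stay at 0."""
    best_value, best_pick = 0, ()
    for bits in product((0, 1), repeat=len(free)):
        weight = sum(weights[i] for i, b in zip(free, bits) if b)
        if weight > capacity:
            continue
        value = sum(values[i] for i, b in zip(free, bits) if b)
        if value > best_value:
            best_value = value
            best_pick = tuple(i for i, b in zip(free, bits) if b)
    return best_value, best_pick


def fractional_bound(values, weights, capacity):
    """Upper bound on the optimum from the greedy fractional knapsack."""
    order = sorted(range(len(values)),
                   key=lambda i: values[i] / weights[i], reverse=True)
    bound, room = 0.0, capacity
    for i in order:
        take = min(weights[i], room)
        bound += values[i] * take / weights[i]
        room -= take
        if room == 0:
            break
    return bound


def predict_relax_optimize(values, weights, capacity, scores, keep=2, max_gap=0.1):
    """Predict: keep only the top-scored items. Optimize: solve the reduced problem.
    Relax: free one more item while the gap to the bound exceeds max_gap."""
    ranked = sorted(range(len(values)), key=lambda i: scores[i], reverse=True)
    bound = fractional_bound(values, weights, capacity)
    n_free = keep
    while True:
        free = ranked[:n_free]
        value, pick = solve_reduced(values, weights, capacity, free)
        gap = (bound - value) / bound
        if gap <= max_gap or n_free == len(values):
            return value, pick, n_free
        n_free += 1  # relax: un-fix the next most promising item


values = [60, 100, 120, 30, 10]
weights = [10, 20, 30, 15, 5]
scores = [0.9, 0.8, 0.4, 0.2, 0.1]  # hypothetical predictor output
value, pick, n_free = predict_relax_optimize(values, weights, 50, scores)
print(value, pick, n_free)
```

On this toy instance the first reduced problem misses the quality target, so the loop frees one more item and re-solves. The point of the sketch is only that the solver searches a much smaller space than the full problem whenever the predictions are good.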

Reza Zandehshahvar, one of the paper’s co-authors and postdoctoral fellow with the NSF AI4OPT and the H. Milton Stewart School of Industrial and Systems Engineering (ISyE) at Georgia Tech, said the breakthrough lies not just in the AI algorithms but in how they're trained and deployed at scale.

“Many AI models struggle when applied to problems with millions of variables. PROPEL was built from the ground up to handle industrial complexity, not just academic examples,” Zandehshahvar said. “We’re seeing real improvements in both solution speed and quality.”

In trials using Kinaxis’ historical industrial data, PROPEL achieved an 88% reduction in the time needed to find a high-quality plan and improved solution accuracy by more than 60% compared to conventional methods.

While many AI methods in supply chain rely on simulated data or simplified models, PROPEL’s performance has been validated using real-world scenarios, ensuring its reliability in high-stakes operational settings.

The Georgia Tech team says PROPEL could benefit industries that manage large, multi-tiered production networks, including pharmaceuticals, electronics, and heavy manufacturing. The researchers are now exploring partnerships with additional companies to deploy PROPEL in live environments.

Access the abstract on arXiv.

News Contact

Breon Martin

AI Marketing Communications Manager

Jul. 10, 2025

Georgia Tech has been recognized in a new IDC white paper, A Blueprint for AI‑Ready Campuses: Strategies from the Frontlines of Higher Education, as a national leader in deploying artificial intelligence across higher education. The report, published in partnership with Microsoft, highlights Georgia Tech’s comprehensive approach to integrating AI into teaching, research, and campus operations.

The Institute is one of only four U.S. universities featured in the report, joining Auburn University, Babson College, and the University of North Carolina at Chapel Hill.

“AI isn’t a single system or application—it’s a new foundation for how we work, teach, and learn,” said Leo Howell, Georgia Tech’s chief information security officer. “Our goal is to expose people to as many tools as possible, creating an ‘AI for All’ strategy that ensures everyone at Georgia Tech can leverage AI to enhance their work and learning experiences.”

Georgia Tech’s approach centers on a “persona-based model,” tailoring AI tools and resources to meet the needs of students, faculty, researchers, and administrators. That personalized approach, according to the report, is what makes Georgia Tech’s efforts both scalable and sustainable.

The white paper also emphasizes the importance of industry partnerships in Georgia Tech’s strategy. Through collaborations with Microsoft, OpenAI, and NVIDIA, the Institute is deploying advanced AI technologies while preparing students for the demands of an AI-driven workforce.

Georgia Tech’s success lies in its flexibility, the report notes. The Institute tests AI tools through targeted pilots, gathers user feedback, and rapidly iterates to improve outcomes. This adaptive mindset is recommended as a best practice for other institutions navigating their own AI transformation.

The full IDC white paper is available for download here.

News Contact

Breon Martin

AI Marketing Communications Manager

Jun. 26, 2025

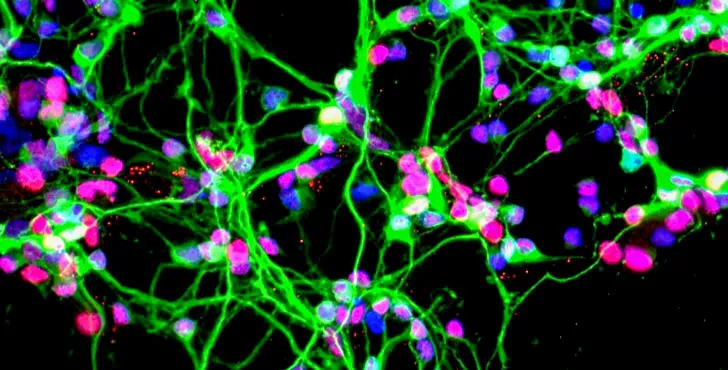

Researchers at Georgia Tech have taken a critical step forward in creating efficient, useful and brain-like artificial intelligence (AI). The key? A new algorithm that results in neural networks with internal structure more like the human brain.

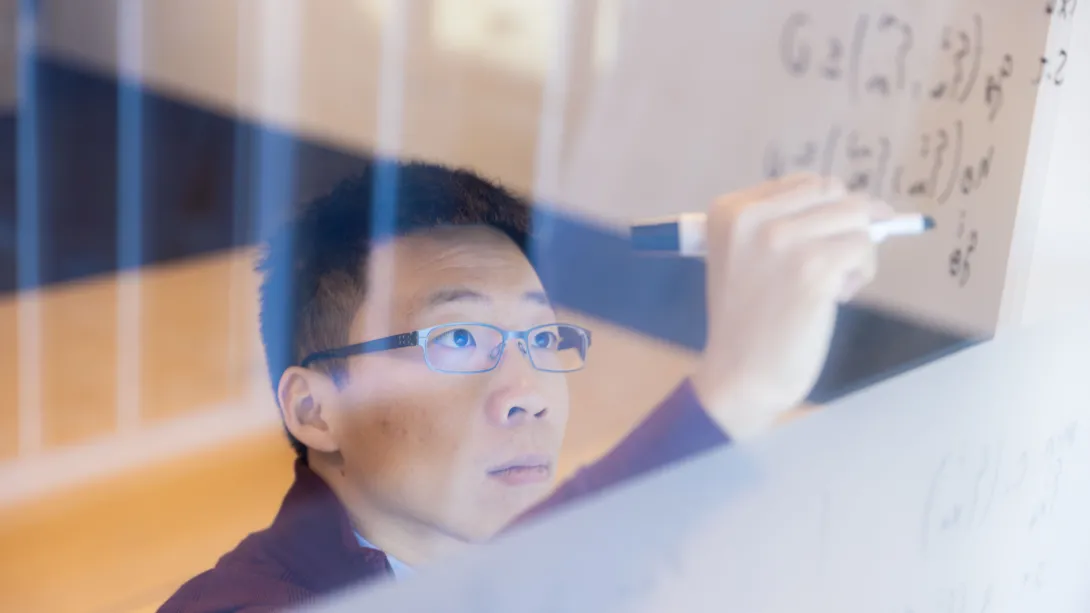

The study, “TopoNets: High-Performing Vision and Language Models With Brain-Like Topography,” was awarded a spotlight at this year’s International Conference on Learning Representations (ICLR), a distinction given to only 2 percent of papers. The research was led by graduate student Mayukh Deb alongside School of Psychology Assistant Professor Apurva Ratan Murty.

Thirty-two of Tech’s computing, engineering, and science faculty represented the Institute at ICLR 2025, which is globally renowned for sharing cutting-edge research.

“We started with this idea because we saw that AI models are unstructured, while brains are exquisitely organized,” says first-author Deb. “Our models with internal structure showed more than a 20 percent boost in efficiency with almost no performance losses. And this is out-of-the-box — it’s broadly applicable to other models with no extra fine-tuning needed.”

For Murty, the research also underscores the importance of a rapidly growing field of research at the intersection of neuroscience and AI. “There's a major explosion in understanding intelligence right now,” he says. “The neuro-AI approach is exciting because it helps emulate human intelligence in machines, making AI more interpretable.”

“In addition to advancing AI, this type of research also benefits neuroscience because it informs a fundamental question: Why is our brain organized the way it is?” Deb adds. “Making AI more interpretable helps everyone.”

Brain-inspired blueprints

In the brain, neurons form topographic maps: neurons used for comparable tasks are closer together. The researchers applied this concept to AI by organizing how internal components (like artificial neurons) connect and process information.

This type of organization has been tried in the past but has been challenging, Murty says. “Historically, rules constraining how the AI could structure itself often resulted in lower-performing models. We realized that for this type of biophysical constraint, you simply can’t map everything — you need an algorithmic solution.”

“Our key insight was an algorithmic trick that gives the same structure as brains without enforcing things that models don't respond well to,” he adds. “That breakthrough was what Mayukh (Deb) worked on.”

The algorithm, called TopoLoss, uses a loss function to encourage brain-like organization in artificial neural networks, and it is compatible with many AI systems capable of understanding language and images.
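To make the idea of a loss term that rewards topographic organization more concrete, here is a minimal sketch in plain Python. It is not the TopoLoss formula from the paper. It assumes units laid out on a small 2D grid, each with a nonzero weight vector, and uses a generic smoothness penalty (one minus the cosine similarity between adjacent units) added to a task loss with a hypothetical weighting factor `tau`.

```python
import math


def cosine(u, v):
    """Cosine similarity of two nonzero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def topographic_penalty(grid):
    """Mean of (1 - cosine similarity) over horizontally and vertically adjacent units."""
    rows, cols = len(grid), len(grid[0])
    total, pairs = 0.0, 0
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((0, 1), (1, 0)):
                rr, cc = r + dr, c + dc
                if rr < rows and cc < cols:
                    total += 1.0 - cosine(grid[r][c], grid[rr][cc])
                    pairs += 1
    return total / pairs


def total_loss(task_loss, grid, tau=1.0):
    """Task loss plus a weighted penalty that favors similar neighbors."""
    return task_loss + tau * topographic_penalty(grid)


a, b = [1.0, 0.0], [0.0, 1.0]
organized = [[a, a], [b, b]]   # similar units sit next to each other
scrambled = [[a, b], [b, a]]   # similar units are scattered
print(topographic_penalty(organized), topographic_penalty(scrambled))
```

With the same task loss, the organized layout receives the lower total, so training under such a penalty would be nudged toward grouping similar units together.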

“The resulting training method, TopoNets, is very flexible and broadly applicable,” Murty says. “You can apply it to contemporary models very easily, which is a critical advancement when compared to previous methods.”

Neuro-AI innovations

Murty and Deb plan to continue refining and designing brain-inspired AI systems. “All parts of the brain have some organization — we want to expand into other domains,” Deb says. “On the neuroscience side of things, we want to discover new kinds of organization in brains using these topographic systems.”

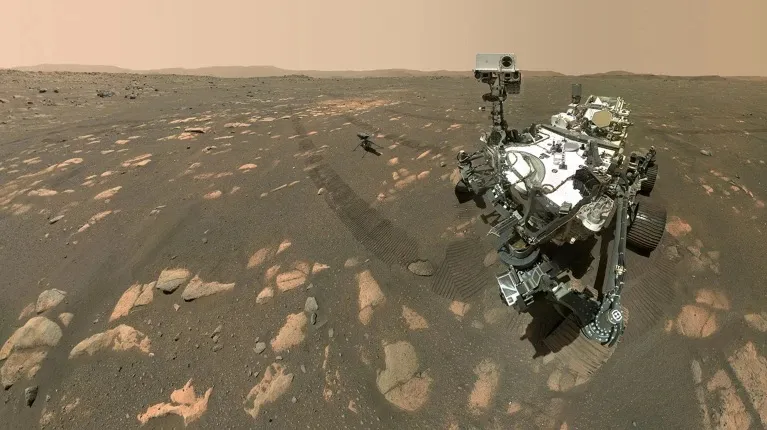

Deb also cites possibilities in robotics, especially in situations like space exploration where resources are limited. “Imagine running a model inside a robot with limited power,” he says. “Structured models can help us achieve 80 percent of performance with just 20 percent of energy consumption, saving valuable energy and space. This is still experimental, but it's the direction we are interested in exploring.”

“This success highlights the potential of a new approach, designing systems that benefit both neuroscience and AI — and beyond,” Murty adds. “We can learn so much from the human brain, and this project shows that brain-inspired systems can help current AI be better. We hope our work stimulates this conversation.”

Jun. 25, 2025

Overwhelmed doctors and nurses struggling to provide adequate patient care in South Korea are getting support from Georgia Tech and Korea-based researchers through an AI-powered robotic medical assistant.

Top South Korean research institutes have enlisted Georgia Tech researchers Sehoon Ha and Jennifer G. Kim to develop artificial intelligence (AI) to help the humanoid assistant navigate hospitals and interact with doctors, nurses, and patients.

Ha and Kim will partner with Neuromeka, a South Korean robotics company, on a five-year, 10 billion won (about $7.2 million US) grant from the South Korean government. Georgia Tech will receive about $1.8 million of the grant.

Ha and Kim, assistant professors in the School of Interactive Computing, will lead Tech’s efforts and also work with researchers from the Korea Advanced Institute of Science and Technology and the Electronics and Telecommunications Research Institute.

Neuromeka has built industrial robots since its founding in 2013 and recently decided to expand into humanoid service robots.

Lee, the group leader of the humanoid medical assistant project, said he fielded partnership requests from many academic researchers. Ha and Kim stood out as an ideal match because of their robotics, AI, and human-computer interaction expertise.

For Ha, the project is an opportunity to test navigation and control algorithms he’s developed through research that earned him the National Science Foundation CAREER Award. Ha combines computer simulation and real-world training data to make robots more deployable in high-stress, chaotic environments.

“Dr. Ha has everything we want to put into our system, including his navigation policies,” Lee said. “He works with robots and AI, and there weren’t many candidates in that space. We needed a collaborator who can create the software and has experience running it on robots.”

Ha said he is already considering how his algorithms could scale beyond hospitals and become a universal means of robot navigation in unstructured real-world environments.

“For now, we’re focusing on a customized navigation model for Korean environments, but there are ways to transfer the data set to different environments, such as the U.S. or European healthcare systems,” Ha said.

“The final product can be deployed to other systems and industries. It can help industrial workers at factories, retail stores, any place where workers can get overwhelmed by a high volume of tasks.”

Kim will focus on making the robot’s design and interaction features more human. She’ll develop a large language model (LLM) AI system to communicate with patients, nurses, and doctors. She’ll also develop an app that will allow users to input their commands and queries.

“This project is not just about controlling robots, which is why Dr. Kim’s expertise in human-computer interaction design through natural language was essential,” Lee said.

Kim is interviewing stakeholders from three South Korean hospitals to identify service and care pain points. The issues she’s identified so far relate to doctor-patient communication, a lack of emotional support for patients, and an excessive number of small tasks that consume nurses’ time.

“Our goal is to develop this robot in a very human-centered way,” she said. “One way is to give patients a way to communicate about the quality of their care and how the robot can support their emotional well-being.

“We found that patients often hesitate to ask busy nurses for small things like getting a cup of water. We believe this is an area a robot can support.”

The robot’s hardware will be built in Korea, while Ha and Kim will develop the software in the U.S.

Jong-hoon Park, CEO of Neuromeka, said in a press release the goal is to have a commercialized product as soon as possible.

“Through this project, we will solve problems that existing collaborative robots could not,” Park said. “We expect the medical AI humanoid robot technology being developed will contribute to reducing the daily work burden of medical and healthcare workers in the field.”

Jun. 11, 2025

An algorithmic breakthrough from School of Interactive Computing researchers that earned a Meta partnership drew more attention at the IEEE International Conference on Robotics and Automation (ICRA).

Meta announced in February its partnership with the labs of professors Danfei Xu and Judy Hoffman on a novel computer vision-based algorithm called EgoMimic. It enables robots to learn new skills by imitating human tasks from first-person video footage captured by Meta’s Aria smart glasses.

Xu’s Robot Learning and Reasoning Lab (RL2) displayed EgoMimic in action at ICRA May 19-23 at the World Congress Center in Atlanta.

Lawrence Zhu, Pranav Kuppili, and Patcharapong “Elmo” Aphiwetsa, students from Xu’s lab, used EgoMimic to compete in a robot teleoperation contest at ICRA. The team finished second in the event titled What Bimanual Teleoperation and Learning from Demonstration Can Do Today, earning a $10,000 cash prize.

Teams were challenged to perform tasks by remotely controlling a robot gripper. The robot had to fold a tablecloth, open a vacuum-sealed container, place an object into the container, and then reseal it in succession without any errors.

Teams completed the tasks as many times as possible in 30 minutes, earning points for each successful attempt.

The competition also offered different challenge levels that increased the points awarded. Teams could directly operate the robot with a full workstation view and receive one point for each task completion. Or, as the RL2 team chose, teams could opt for the second challenge level.

The second level required an operator to control the robot with no view of the workstation except for what was provided through a video feed. The RL2 team completed the task seven times and received double points for the challenge level.

The third challenge level required teams to operate remotely from another location. At this level, teams could earn four times the number of points for each successful task completed. The fourth level challenged teams to deploy an algorithm for task performance and awarded eight points for each completion.
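The scoring scheme described above can be written out as a short worked example. The per-level point values are taken from the article. The function name and the resulting total for the RL2 team are illustrative only, since the article does not report final scores.

```python
# Points awarded per successful task completion at each challenge level.
POINTS_PER_COMPLETION = {1: 1, 2: 2, 3: 4, 4: 8}


def contest_score(level, completions):
    """Score for a team that stays at one challenge level for the whole run."""
    return POINTS_PER_COMPLETION[level] * completions


# The RL2 team completed the task seven times at the second level.
rl2_score = contest_score(level=2, completions=7)
print(rl2_score)
```

Under this reading, seven completions at the second level are worth as many points as fourteen completions at the first level.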

Using two of Meta’s Quest wireless controllers, Zhu controlled the robot under the direction of Aphiwetsa, while Kuppili monitored the coding from his laptop.

“It’s physically difficult to teleoperate for half an hour,” Zhu said. “My hands were shaking from holding the controllers in the air for that long.”

Being in constant communication with Aphiwetsa helped him stay focused throughout the contest.

“I helped him strategize the teleoperation and noticed he could skip some of the steps in the folding,” Aphiwetsa said. “There were many ways to do it, so I just told him what he could fix and how to do it faster.”

Zhu said he and his team had intended to tackle the fourth challenge level with the EgoMimic algorithm. However, due to unexpected time constraints, they decided to switch to the second level the day before the competition.

“I think we realized the day before the competition training the robot on our model would take a huge amount of time,” Zhu said. “We decided to go for the teleoperation and started practicing.”

He said the team wants to tackle the highest challenge level and use a training model for next year’s ICRA competition in Vienna, Austria.

ICRA is the world’s largest robotics conference, and Atlanta hosted the event for the third time in its history, drawing a record-breaking attendance of over 7,000.

Aug. 01, 2025

The College of Sciences is pleased to announce the launch of the AI4Science Center. The center will promote research and collaboration focused on using state-of-the-art artificial intelligence (AI) and machine learning (ML) techniques to address complex scientific challenges.

“AI and ML have the potential to revolutionize scientific discovery, but there is a clear need for foundational research centered on AI/ML methodologies and application to scientific problems,” says Dimitrios Psaltis, professor in the School of Physics.

Psaltis will co-lead the center with Molei Tao, professor in the School of Mathematics, and Audrey Sederberg, assistant professor in the School of Psychology.

The new center will combine expertise and resources from various disciplines to foster the creation of robust, reusable tools and methods that can be used across scientific domains. Specifically, the center will organize seminars and an annual conference in addition to providing seed funding for collaborative projects across units.

Nearly 40 faculty members from the College’s six schools have already agreed to participate in activities proposed by the center; additional faculty involvement is expected from across the Institute.

The center builds upon initiatives such as Tech AI, the Machine Learning Center, and the Institute for Data Engineering and Science, which seek to boost Georgia Tech’s leadership in cutting-edge, AI/ML-powered interdisciplinary research and education.

The College’s seed grant program will sponsor the center for three years, starting in fiscal year 2026. Created in 2024, this program funds new centers that seek to increase the College’s research impact and advance its strategic goal of excellence in research through a focus on novel interdisciplinary areas or discipline-specific topics of high impact. The AI4Science Center is the third initiative to be seeded by this program, following the funding of the Center for Sustainable and Decarbonized Critical Energy Mineral Solutions and the Center for Research and Education in Navigation in 2024.

“The AI4Science Center was selected for its approach, timeliness, organization, and strong support from all six of the College’s schools,” says Laura Cadonati, associate dean for Research and professor in the School of Physics. “Faculty enthusiasm about this initiative reflects the growing importance of AI/ML tools in research today and the desire for more interdisciplinary collaboration in this space at the College and beyond.”

News Contact

Writer: Lindsay C. Vidal

May. 02, 2025

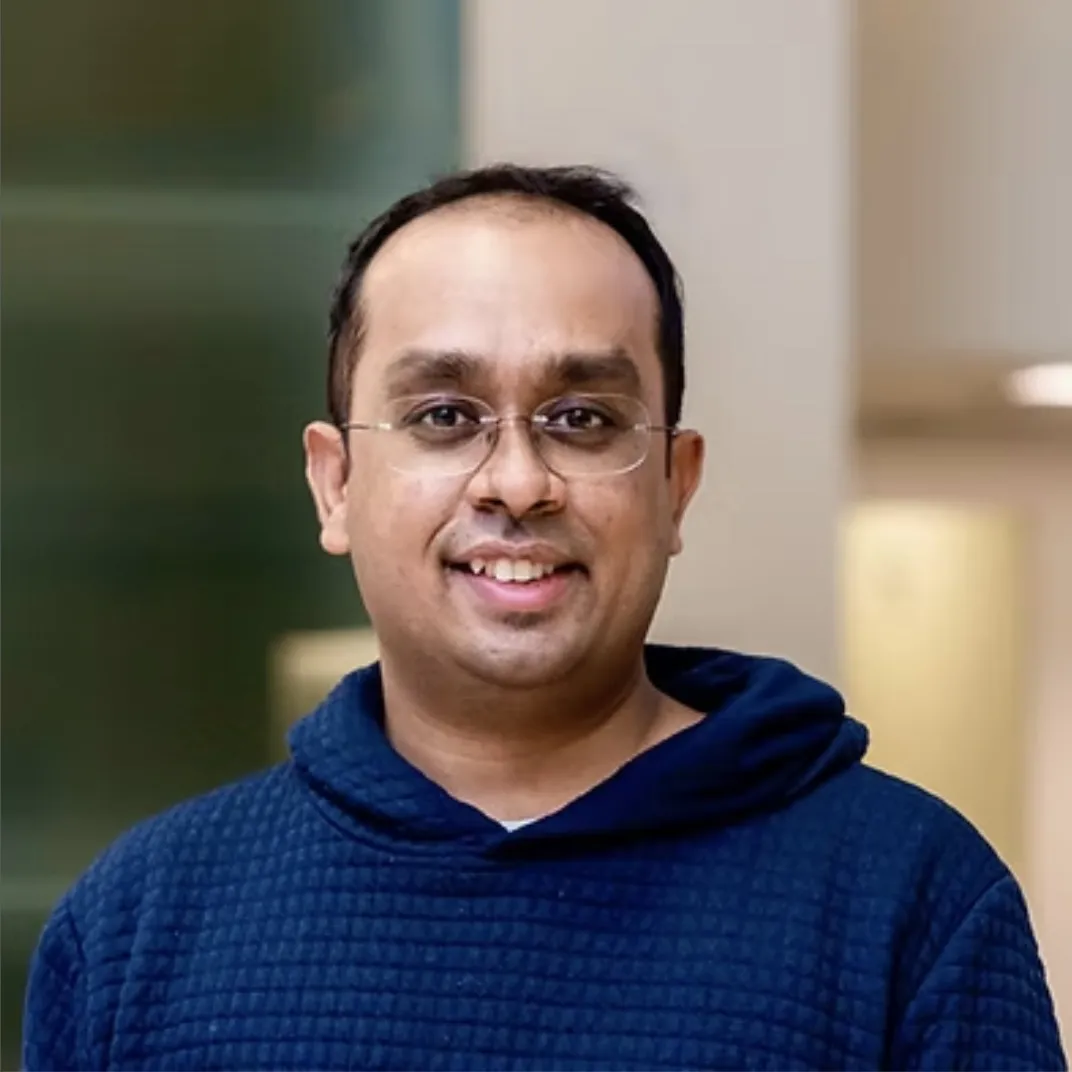

Georgia Tech researchers played a key role in the development of a groundbreaking AI framework designed to autonomously generate and evaluate scientific hypotheses in the field of astrobiology. Amirali Aghazadeh, assistant professor in the School of Electrical and Computer Engineering, co-authored the research and contributed to the architecture that divides tasks among multiple specialized AI agents.

This framework, known as the AstroAgents system, takes a modular approach that lets it simulate a collaborative team of scientists, each with a distinct role such as data analysis, planning, or critique, thereby enhancing the depth and originality of the hypotheses generated.

News Contact

Amelia Neumeister | Research Communications Program Manager

The Institute for Matter and Systems

Apr. 02, 2025

Kinaxis, a global leader in supply chain orchestration, and the NSF AI Institute for Advances in Optimization (AI4OPT) at Georgia Tech today announced a new co-innovation partnership. This partnership will focus on developing scalable artificial intelligence (AI) and optimization solutions to address the growing complexity of global supply chains. AI4OPT operates under Tech AI, Georgia Tech’s AI hub, bringing together interdisciplinary expertise to advance real-world AI applications.

This particular collaboration builds on a multi-year relationship between Kinaxis and Georgia Tech, strengthening their shared commitment to turn academic innovation into real-world supply chain impact. The collaboration will span joint research, real-world applications, thought leadership, guest lectures, and student internships.

“In collaboration with AI4OPT, Kinaxis is exploring how the fusion of machine learning and optimization may bring a step change in capabilities for the next generation of supply chain management systems,” said Pascal Van Hentenryck, the A. Russell Chandler III Chair and professor at Georgia Tech, and director of AI4OPT and Tech AI at Georgia Tech.

Kinaxis’ AI-infused supply chain orchestration platform, Maestro™, combines proprietary technologies and techniques to deliver real-time transparency, agility, and decision-making across the entire supply chain — from multi-year strategic orchestration to last-mile delivery. As global supply chains face increasing disruptions from tariffs, pandemics, extreme weather, and geopolitical events, the Kinaxis–AI4OPT partnership will focus on developing AI-driven strategies to enhance companies’ responsiveness and resilience.

“At Kinaxis, we recognize the vital role that academic research plays in shaping the future of supply chain orchestration,” said Chief Technology Officer Gelu Ticala. “By partnering with world-class institutions like Georgia Tech, we’re closing the gap between AI innovation and implementation, bringing cutting-edge ideas into practice to solve the industry’s most pressing challenges.”

With more than 40 years of supply chain leadership, Kinaxis supports some of the world’s most complex industries, including high-tech, life sciences, industrial, mobility, consumer products, chemical, and oil and gas. Its customers include Unilever, P&G, Ford, Subaru, Lockheed Martin, Raytheon, Ipsen, and Santen.

About Kinaxis

Kinaxis is a global leader in modern supply chain orchestration, powering complex global supply chains and supporting the people who manage them, in service of humanity. Our powerful, AI-infused supply chain orchestration platform, Maestro™, combines proprietary technologies and techniques that provide full transparency and agility across the entire supply chain — from multi-year strategic planning to last-mile delivery. We are trusted by renowned global brands to provide the agility and predictability needed to navigate today’s volatility and disruption. For more news and information, please visit kinaxis.com or follow us on LinkedIn.

About AI4OPT

The NSF AI Institute for Advances in Optimization (AI4OPT) is one of the 27 National Artificial Intelligence Research Institutes set up by the National Science Foundation to conduct use-inspired research and realize the potential of AI. The AI Institute for Advances in Optimization (AI4OPT) is focused on AI for Engineering and is conducting cutting-edge research at the intersection of learning, optimization, and generative AI to transform decision making at massive scales, driven by applications in supply chains, energy systems, chip design and manufacturing, and sustainable food systems. AI4OPT brings together over 80 faculty and students from Georgia Tech, UC Berkeley, University of Southern California, UC San Diego, Clark Atlanta University, and the University of Texas at Arlington, working together with industrial partners that include Intel, Google, UPS, Ryder, Keysight, Southern Company, and Los Alamos National Laboratory. To learn more, visit ai4opt.org.

About Tech AI

Tech AI is Georgia Tech's hub for artificial intelligence research, education, and responsible deployment. With over $120 million in active AI research funding, including more than $60 million in NSF support for five AI Research Institutes, Tech AI drives innovation through cutting-edge research, industry partnerships, and real-world applications. With over 370 papers published at top AI conferences and workshops, Tech AI is a leader in advancing AI-driven engineering, mobility, and enterprise solutions. Through strategic collaborations, Tech AI bridges the gap between AI research and industry, optimizing supply chains, enhancing cybersecurity, advancing autonomous systems, and transforming healthcare and manufacturing. Committed to workforce development, Tech AI provides AI education across all levels, from K-12 outreach to undergraduate and graduate programs, as well as specialized certifications. These initiatives equip students with hands-on experience, industry exposure, and the technical expertise needed to lead in AI-driven industries. Bringing AI to the world through innovation, collaboration, and partnerships. Visit tech.ai.gatech.edu.

News Contact

Angela Barajas Prendiville | Director of Media Relations

aprendiville@gatech.edu

Feb. 14, 2025

Men and women in California put their lives on the line when battling wildfires every year, but there is a future where machines powered by artificial intelligence are on the front lines, not firefighters.

However, this new generation of self-thinking robots would need security protocols to ensure they aren’t susceptible to hackers. To integrate such robots into society, they must come with assurances that they will behave safely around humans.

It raises the question: Can you guarantee the safety of something that doesn’t exist yet? Assistant Professor Glen Chou hopes to do so by developing algorithms that will enable autonomous systems to learn and adapt while acting with safety and security assurances.

He plans to launch research initiatives, in collaboration with the School of Cybersecurity and Privacy and the Daniel Guggenheim School of Aerospace Engineering, to secure this new technological frontier as it develops.

“To operate in uncertain real-world environments, robots and other autonomous systems need to leverage and adapt a complex network of perception and control algorithms to turn sensor data into actions,” he said. “To obtain realistic assurances, we must do a joint safety and security analysis on these sensors and algorithms simultaneously, rather than one at a time.”

This end-to-end method would proactively look for flaws in the robot’s systems rather than wait for them to be exploited. This would lead to intrinsically robust robotic systems that can recover from failures.

Chou said this research will be useful in other domains, including advanced space exploration. If a space rover is sent to one of Saturn’s moons, for example, it needs to be able to act and think independently of scientists on Earth.

Aside from fighting fires and exploring space, this technology could perform maintenance in nuclear reactors, automatically maintain the power grid, and make autonomous surgery safer. It could also bring assistive robots into the home, enabling higher standards of care.

This is a challenging domain where safety, security, and privacy concerns are paramount due to frequent, close contact with humans.

This work will begin in the newly established Trustworthy Robotics Lab at Georgia Tech, which Chou directs. He and his Ph.D. students will design principled algorithms that enable general-purpose robots and autonomous systems to operate capably, safely, and securely with humans while remaining resilient to real-world failures and uncertainty.

Chou earned dual bachelor’s degrees in electrical engineering and computer sciences and in mechanical engineering from the University of California, Berkeley, in 2017, and a master’s and Ph.D. in electrical and computer engineering from the University of Michigan in 2019 and 2022, respectively. He was a postdoc at the MIT Computer Science & Artificial Intelligence Laboratory prior to joining Georgia Tech in November 2024. He is a recipient of National Defense Science and Engineering Graduate and NSF Graduate Research fellowships and was named a Robotics: Science and Systems Pioneer in 2022.

News Contact

John (JP) Popham

Communications Officer II

College of Computing | School of Cybersecurity and Privacy
