Aug. 13, 2025

Juba Ziani is on a mission to change how the world thinks about data in artificial intelligence. An assistant professor in Georgia Tech’s H. Milton Stewart School of Industrial and Systems Engineering (ISyE), Ziani has secured a $425,000 National Science Foundation (NSF) grant to explore how smart incentives can lead to higher-quality, more widely shared datasets. His work forms part of a $1 million NSF collaboration with Columbia University computer science professor Augustin Chaintreau and senior personnel Daniel Björkegren, aiming to challenge outdated ideas and shape a more reliable future for AI.

Artificial intelligence (AI) increasingly shapes critical decisions in everyday life, from who sees a job posting or qualifies for a loan, to who is granted bail in the criminal justice system. These systems rely on historical data to learn patterns and make predictions. For example, an applicant might be approved for a loan because an AI system recognizes that previous borrowers with similar credit histories successfully repaid. But when the underlying training data is incomplete or low-quality, the consequences can be serious, disproportionately affecting those from groups historically excluded from such opportunities.

Ziani's research will explore how the economic value of data, combined with the effects of data markets and network dynamics, can create incentives that naturally improve dataset robustness. By identifying the conditions under which the supposed trade-off between robustness and efficiency disappears, Ziani and his collaborators hope to open the door to more reliable and equitable AI systems.

Traditionally, researchers have assumed that making AI-assisted decision-making more robust and representative comes at the expense of efficiency. This assumption treats training data as fixed and unchangeable, which can place limits on the potential of AI systems. But as large-scale data platforms grow and the exchange of data becomes more accessible, the conventional trade-off between robustness and efficiency may no longer apply.

“Our project demonstrates how carefully designing incentives—both for data producers and data buyers—can enhance the quality and robustness of datasets without compromising performance,” said Ziani. “This has the potential to fundamentally reshape the way AI systems are trained and how data is collected, shared, and valued.”

With this work, Ziani aims to advance both the theory and practice of AI and data economics, ensuring that as AI continues to transform society, it does so in a way that is fair, accurate, and trustworthy.

News Contact

Erin Whitlock Brown, Communications Manager II

Aug. 06, 2025

The idea of people experiencing their favorite mobile apps as immersive 3D environments took a step closer to reality with a new Google-funded research initiative at Georgia Tech.

A new approach proposed by Tech researcher Yalong Yang uses generative artificial intelligence (GenAI) technologies to convert almost any mobile or web-based app into a 3D environment.

That includes application software from Microsoft and Adobe as well as social media (TikTok), entertainment (Spotify), banking (PayPal), and food service (Uber Eats) apps, and everything in between.

Yang aims to make the 3D environments compatible with augmented and virtual reality (AR/VR) headsets and smart glasses. He believes his research could be a breakthrough in spatial computing and change how humans interact with their favorite apps and computer systems in general.

“We’ll be able to turn around and see things we want, and we can grab them and put them together,” said Yang, an assistant professor in the School of Interactive Computing. “We’ll no longer use a mouse to scroll or the keyboard to type, but we can do more things like physical navigation.”

Yang’s proposal recently earned him recognition as a 2025 Google Research Scholar. Along with converting popular social apps, his platform will be able to instantly render Photoshop, MS Office, and other workplace applications in 3D for AR/VR devices.

“We have so many applications installed in our machines to complete all the various types of work we do,” he said. “We use Photoshop for photo editing, Premiere Pro for video editing, Word for writing documents. We want to create an AR/VR ecosystem that has all these things available in one interface with all apps working cohesively to support multitasking.”

Filling The Gap With AI

Just as Google’s Veo and OpenAI’s Sora use generative AI to create video clips, Yang believes it can be used to create interactive, immersive environments for any Android or Apple app.

“A critical gap in AR/VR is that we do not have all those existing applications, and redesigning all those apps will take forever,” he said. “It’s urgent that we have a complete ecosystem in VR to enable us to do the work we need to do. Instead of recreating everything from scratch, we need a way to convert these applications into immersive formats.”

The Google Play Store boasts 3.5 million apps for Android devices, while Apple’s App Store includes 1.8 million apps for iOS users.

Meanwhile, there are fewer than 10,000 apps available on the latest Meta Quest 3 headset, leaving a gap of millions of apps that will need 3D conversion.

“We envision a one-click app, and the (Android Package Kit) file output will be a Meta APK that you can install on your Meta Quest 3,” he said.

Yang said major tech companies like Apple have the resources to redesign their apps into 3D formats. However, small- to mid-sized companies that have created apps either do not have that ability or would take years to do so.

That’s where generative-AI can help. Yang plans to use it to convert source code from web-based and mobile apps into WebXR.

WebXR is a set of application programming interfaces (APIs) that enables developers to create AR/VR experiences within web browsers.

“We start with web-based content,” he said. “A lot of things are already based on the web, so we want to convert that user interface into WebXR.”

Building New Worlds

The process for converting mobile apps would be similar.

“Android uses an XML description file to define its user-interface (UI) elements. It’s very much like HTML on a web page. We believe we can use that as our input and map the elements to their desired location in a 3D environment. AI is great at translating one language to another — JavaScript to C-sharp, for example — so that can help us in this process.”
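As a rough illustration of the idea Yang describes, the sketch below reads a small Android-style layout file and assigns each UI element a position on an arc in front of the user. It is not Yang's method: the sample layout, the `layout_to_panels` function, and the arc placement rule are all hypothetical, chosen only to show how XML elements could serve as input to a 3D mapping.

```python
import math
import xml.etree.ElementTree as ET

# Standard namespace used by Android layout attributes.
ANDROID_NS = "http://schemas.android.com/apk/res/android"

# Hypothetical layout, made up for this example.
LAYOUT_XML = """\
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    android:orientation="vertical">
    <TextView android:id="@+id/title" android:text="Inbox" />
    <EditText android:id="@+id/search" android:hint="Search" />
    <Button android:id="@+id/compose" android:text="Compose" />
</LinearLayout>
"""


def layout_to_panels(xml_text, radius=1.5, step_degrees=30.0):
    """Place each leaf UI element on an arc centered in front of the user.

    Assumed convention: x is right, y is up, and -z is straight ahead,
    with distances in meters.
    """
    root = ET.fromstring(xml_text)
    # Leaf elements are the visible widgets; containers only group them.
    leaves = [el for el in root.iter() if len(el) == 0]
    panels = []
    for index, el in enumerate(leaves):
        # Spread widgets symmetrically around the forward direction.
        offset = index - (len(leaves) - 1) / 2
        angle = math.radians(offset * step_degrees)
        panels.append({
            "widget": el.tag,
            "id": el.get(f"{{{ANDROID_NS}}}id"),
            "position": (
                round(radius * math.sin(angle), 3),
                0.0,
                round(-radius * math.cos(angle), 3),
            ),
        })
    return panels


for panel in layout_to_panels(LAYOUT_XML):
    print(panel)
```

A real converter would also need to carry over styling, event handlers, and nested containers, none of which this sketch attempts.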

If generative-AI can create environments, the next step would be to create a seamless user experience.

“In a normal desktop or mobile application, we can only see one thing at a time, and it’s the same for a lot of VR headsets with one application occupying everything. To live in a multi-task environment, we can’t just focus on one thing because we need to keep switching our tasks, so how do we break all the elements down and let them float around and create a spatial view of them surrounding the user?”

Along with Assistant Professor Cindy Xiong, Yang is one of two researchers in the School of IC to be named a 2025 Google Research Scholar.

Four researchers from the College of Computing have received the award. The other two are Ryan Shandler from the School of Cybersecurity and Privacy and Victor Fung from the School of Computational Science and Engineering.

Jul. 24, 2025

Computer vision enables AI to see the world. It’s already being used for self-driving vehicles, medical imaging, face recognition, and more.

Georgia Tech faculty and student experts advancing this field were in action in June at the globally renowned CVPR conference from IEEE and the Computer Vision Foundation. Georgia Tech was in the top 10% of all organizations for lead authors and the top 4% for number of papers. More than 2,000 organizations had research accepted into CVPR's main program.

Watch the video and hear from Tech experts about what’s new and what’s coming next. Featured students include College of Computing experts Fiona Ryan, Chengyue Huang, Brisa Maneechotesuwan, and Lex Whalen.

These researchers in computer vision are showing how they are extending AI capabilities with image and video data.

HIGHLIGHTS:

- College of Computing faculty, from the Schools of Interactive Computing (IC) and Computer Science (CS), represented the majority of Tech's faculty in the CVPR papers program (8 of 10 faculty).

- IC faculty Zsolt Kira and Bo Zhu each coauthored an oral paper, a distinction reserved for the top 3% of accepted papers. IC faculty member Judy Hoffman coauthored two highlight papers, a distinction reserved for the top 20% of acceptances.

- Georgia Tech is in the top 10% of all organizations for number of first authors and the top 4% for number of papers. More than 2,000 organizations had research in the main program.

- Tech experts were on 30 research paper teams across 16 research areas. Topics with more than one Tech expert included:

• Image/video synthesis & generation

• Efficient and scalable vision

• Multi-modal learning

• Datasets and evaluation

• Humans: Face, body, gesture, etc.

• Vision, language, and reasoning

• Autonomous driving

• Computational imaging

News Contact

Joshua Preston

Communications Manager, Marketing and Research

College of Computing

jpreston7@gatech.edu

Jul. 23, 2025

A groundbreaking new medical dataset is poised to revolutionize healthcare in Africa by improving chatbots’ understanding of the continent’s most pressing medical issues and increasing their awareness of accessible treatment options.

AfriMed-QA, developed by researchers from Georgia Tech and Google, could reduce the burden on African healthcare systems.

The researchers said people in need of medical care file into overcrowded clinics and hospitals and face excruciatingly long waits with no guarantee of admission or quality treatment. There aren’t enough trained healthcare professionals available to meet the demand.

Some healthcare question-answer chatbots have been introduced to treat those in need. However, the researchers said there’s no transparent or standardized way to test or verify their effectiveness and safety.

The dataset will enable technologists and researchers to develop more robust and accessible healthcare chatbots tailored to the unique experiences and challenges of Africa.

One such new tool is Google’s MedGemma, a large-language model (LLM) designed to process medical text and images. AfriMed-QA was used for training and evaluation purposes.

AfriMed-QA stands as the most extensive dataset that evaluates LLM capabilities across various facets of African healthcare. It contains 15,000 question-answer pairs culled from over 60 medical schools across 16 countries and covering numerous medical specialties, disease conditions, and geographical challenges.

Tobi Olatunji and Charles Nimo co-developed AfriMed-QA and co-authored a paper about the dataset that will be presented at the Association for Computational Linguistics (ACL) conference next week in Vienna.

Olatunji is a graduate of Georgia Tech’s Online Master of Science in Computer Science (OMSCS) program and holds a Doctor of Medicine from the College of Medicine at the University of Ibadan in Nigeria. Nimo is a Ph.D. student in Tech’s School of Interactive Computing, where he is advised by School of IC professors Michael Best and Irfan Essa.

Focus on Africa

Nimo, Olatunji, and their collaborators created AfriMed-QA as a response to MedQA, a large-scale question-answer dataset that tests the medical proficiency of all major LLMs. That includes Google’s Gemini, OpenAI’s ChatGPT, and Anthropic’s Claude, among others.

However, because MedQA is drawn solely from the U.S. Medical Licensing Examination, Nimo said it is not adequate to serve patients in underdeveloped African countries or the Global South at large.

“AfriMed-QA has the contextualized and localized understanding of African medical institutions that you don’t get from MedQA,” Nimo said. “There are specific diseases and local challenges in our dataset that you wouldn't find in any U.S.-based dataset.”

Olatunji said one problem African users may encounter using LLMs trained on MedQA is that they may advise unfeasible treatments or unaffordable prescription drugs.

“You consider the types of drugs, diagnostics, procedures, or therapies that exist in the U.S. that are quite advanced. These treatments are much more accessible, for example, in the U.S. and Europe,” Olatunji said. “But in Africa, they’re too expensive and many times unavailable. They may cost over $100,000, and many people have no health insurance. Why recommend such treatments to someone who can’t obtain them?”

Another problem may be that the LLM doesn’t take a medical condition seriously if it isn’t predominant in the U.S.

“We tested many of these models, for example, on how they would manage sickle-cell disease signs and symptoms, and they focused on other ‘more likely’ causes and did not rank or consider sickle cell high enough as a possible cause,” he said. “They, for example, don’t consider sickle-cell as important as anemia and cancer because sickle-cell is less prevalent in the U.S.”

In addition to sickle-cell disease, Olatunji said some of the healthcare issues facing Africa that can be improved through AfriMed-QA include:

- HIV treatment and prevention

- Poor maternal healthcare

- Widespread malaria cases

- Physician shortage

- Clinician productivity and operational efficiency

Google Partnership

Mercy Asiedu, senior author of the AfriMed-QA paper and research scientist at Google Research, has dedicated her career to improving healthcare in Africa. Her work began as a Ph.D. student at Duke University, where she invented the Callascope, a groundbreaking non-invasive tool for gynecological examinations.

With her current focus on democratizing healthcare through artificial intelligence (AI), Asiedu, who is from Ghana, helped create a research consortium to develop the dataset. The consortium consists of Georgia Tech, Google, Intron, Bio-RAMP Research Labs, the University of Cape Coast, the Federation of African Medical Students Association, and Sisonkebiotik.

Sisonkebiotik is an organization of researchers that drives healthcare initiatives to advance data science, machine learning, and AI in Africa.

Olatunji leads the Bio-RAMP Research Lab, a community of healthcare and AI researchers, and he is the founder and CEO of Intron, which develops natural-language processing technologies for African communities.

In May, Google released MedGemma, which uses both the MedQA and AfriMed-QA datasets to form a more globally accessible healthcare chatbot. MedGemma has several versions, including 4-billion and 27-billion parameter models, which support multimodal inputs that combine images and text.

“We are proud the latest medical-focused LLM from Google, MedGemma, leverages AfriMed-QA and improves performance in African contexts,” Asiedu said.

“We started by asking how we could reduce the burden on Africa’s healthcare systems. If we can get these large-language models to be as good as experts and make them more localized with geo-contextualization, then there’s the potential to task-shift to that.”

The project is supported by the Gates Foundation and PATH, a nonprofit that improves healthcare in developing countries.

Jul. 16, 2025

The National Science Foundation (NSF) has awarded Georgia Tech and its partners $20 million to build a powerful new supercomputer that will use artificial intelligence (AI) to accelerate scientific breakthroughs.

Called Nexus, the system will be one of the most advanced AI-focused research tools in the U.S. Nexus will help scientists tackle urgent challenges such as developing new medicines, advancing clean energy, understanding how the brain works, and driving manufacturing innovations.

“Georgia Tech is proud to be one of the nation’s leading sources of the AI talent and technologies that are powering a revolution in our economy,” said Ángel Cabrera, president of Georgia Tech. “It’s fitting we’ve been selected to host this new supercomputer, which will support a new wave of AI-centered innovation across the nation. We’re grateful to the NSF, and we are excited to get to work.”

Designed from the ground up for AI, Nexus will give researchers across the country access to advanced computing tools through a simple, user-friendly interface. It will support work in many fields, including climate science, health, aerospace, and robotics.

“The Nexus system's novel approach combining support for persistent scientific services with more traditional high-performance computing will enable new science and AI workflows that will accelerate the time to scientific discovery,” said Katie Antypas, National Science Foundation director of the Office of Advanced Cyberinfrastructure. “We look forward to adding Nexus to NSF's portfolio of advanced computing capabilities for the research community.”

Nexus Supercomputer — In Simple Terms

- Built for the future of science: Nexus is designed to power the most demanding AI research — from curing diseases, to understanding how the brain works, to engineering quantum materials.

- Blazing fast: Nexus can crank out over 400 quadrillion operations per second — the equivalent of everyone in the world continuously performing 50 million calculations every second.

- Massive brain plus memory: Nexus combines the power of AI and high-performance computing with 330 trillion bytes of memory to handle complex problems and giant datasets.

- Storage: Nexus will feature 10 quadrillion bytes of flash storage, equivalent to about 10 billion reams of paper. Stacked, that’s a column reaching 500,000 km high — enough to stretch from Earth to the moon and a third of the way back.

- Supercharged connections: Nexus will have lightning-fast connections to move data almost instantaneously, so researchers do not waste time waiting.

- Open to U.S. researchers: Scientists from any U.S. institution can apply to use Nexus.
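The comparisons in the list above can be checked with a few lines of arithmetic. The sketch below is only a sanity check: the world population, the thickness of a ream of paper, and the average Earth-to-moon distance are assumptions that do not come from the article.

```python
# Figures quoted in the article.
OPS_PER_SECOND = 400e15   # 400 quadrillion operations per second
FLASH_BYTES = 10e15       # 10 quadrillion bytes of flash storage
REAMS = 10e9              # 10 billion reams of paper

# Assumptions not stated in the article.
WORLD_POPULATION = 8e9    # roughly 8 billion people
REAM_THICKNESS_M = 0.05   # a 500-sheet ream is about 5 cm thick
EARTH_MOON_KM = 384_400   # average Earth-to-moon distance

# Operations per person per second if the work were shared worldwide.
ops_per_person = OPS_PER_SECOND / WORLD_POPULATION

# Storage implied per ream, and per sheet in a 500-sheet ream.
bytes_per_ream = FLASH_BYTES / REAMS
bytes_per_sheet = bytes_per_ream / 500

# Height of the stacked reams, and how far past the moon it reaches.
stack_km = REAMS * REAM_THICKNESS_M / 1000
fraction_of_return_trip = (stack_km - EARTH_MOON_KM) / EARTH_MOON_KM

print(f"{ops_per_person:,.0f} calculations per person per second")
print(f"{bytes_per_sheet:,.0f} bytes per sheet of paper")
print(f"{stack_km:,.0f} km stack, {fraction_of_return_trip:.0%} of the way back")
```

Under these assumptions the numbers agree with the article: about 50 million calculations per person per second, and a stack of roughly 500,000 km that reaches the moon and about 30% of the way back.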

Why Now?

AI is rapidly changing how science is investigated. Researchers use AI to analyze massive datasets, model complex systems, and test ideas faster than ever before. But these tools require powerful computing resources that — until now — have been inaccessible to many institutions.

This is where Nexus comes in. It will make state-of-the-art AI infrastructure available to scientists all across the country, not just those at top tech hubs.

“This supercomputer will help level the playing field,” said Suresh Marru, principal investigator of the Nexus project and director of Georgia Tech’s new Center for AI in Science and Engineering (ARTISAN). “It’s designed to make powerful AI tools easier to use and available to more researchers in more places.”

Srinivas Aluru, Regents’ Professor and senior associate dean in the College of Computing, said, “With Nexus, Georgia Tech joins the league of academic supercomputing centers. This is the culmination of years of planning, including building the state-of-the-art CODA data center and Nexus’ precursor supercomputer project, HIVE.”

Like Nexus, HIVE was supported by NSF funding. Both Nexus and HIVE are supported by a partnership between Georgia Tech’s research and information technology units.

A National Collaboration

Georgia Tech is building Nexus in partnership with the National Center for Supercomputing Applications at the University of Illinois Urbana-Champaign, which runs several of the country’s top academic supercomputers. The two institutions will link their systems through a new high-speed network, creating a national research infrastructure.

“Nexus is more than a supercomputer — it’s a symbol of what’s possible when leading institutions work together to advance science,” said Charles Isbell, chancellor of the University of Illinois and former dean of Georgia Tech’s College of Computing. “I'm proud that my two academic homes have partnered on this project that will move science, and society, forward.”

What’s Next

Georgia Tech will begin building Nexus this year, with its expected completion in spring 2026. Once Nexus is finished, researchers can apply for access through an NSF review process. Georgia Tech will manage the system, provide support, and reserve up to 10% of its capacity for its own campus research.

“This is a big step for Georgia Tech and for the scientific community,” said Vivek Sarkar, the John P. Imlay Dean of Computing. “Nexus will help researchers make faster progress on today’s toughest problems — and open the door to discoveries we haven’t even imagined yet.”

News Contact

Siobhan Rodriguez

Senior Media Relations Representative

Institute Communications

Jul. 11, 2025

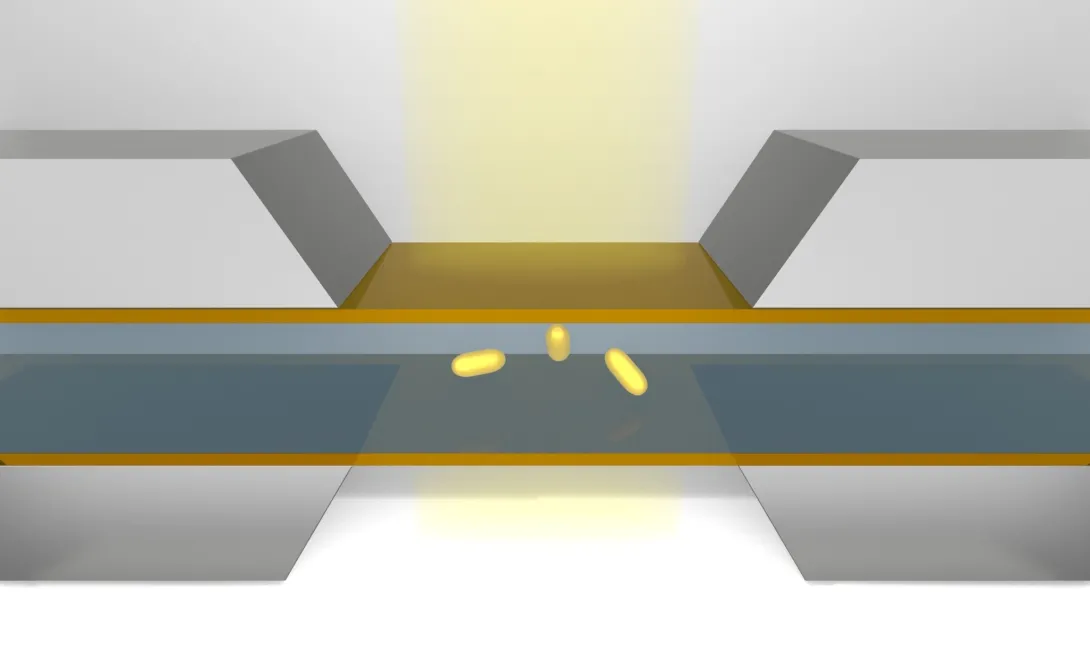

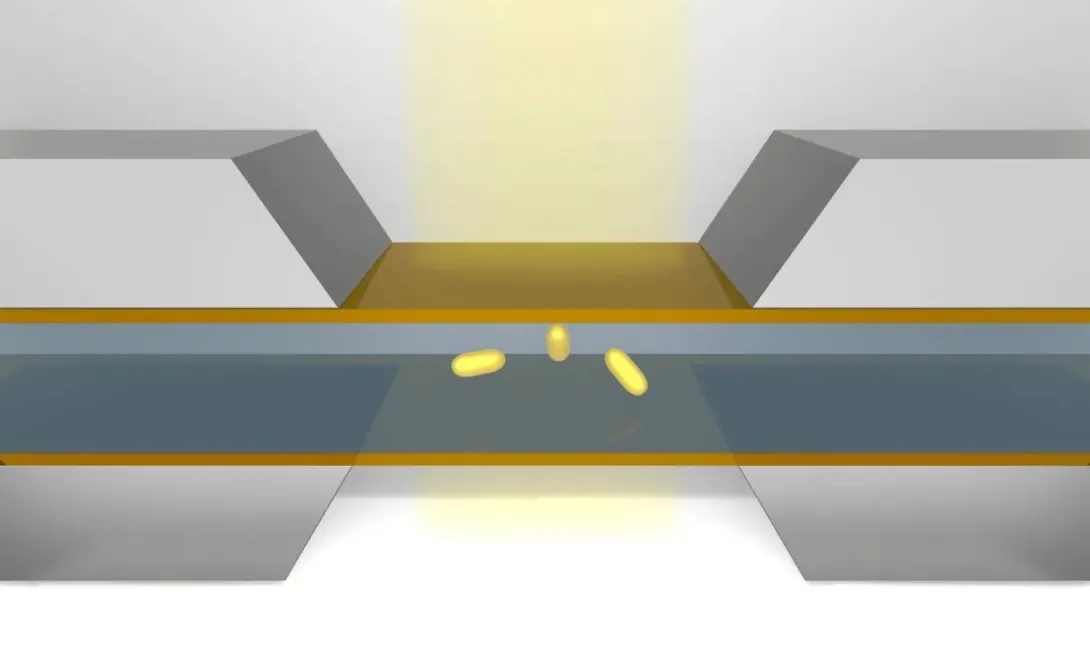

Nanoparticles – the tiniest building blocks of our world – are constantly in motion, bouncing, shifting, and drifting in unpredictable paths shaped by invisible forces and random environmental fluctuations.

Better understanding their movements is key to developing better medicines, materials, and sensors. But observing and interpreting their motion at the atomic scale has presented scientists with major challenges.

However, researchers in Georgia Tech’s School of Chemical and Biomolecular Engineering (ChBE) have developed an artificial intelligence (AI) model that learns the underlying physics governing those movements.

The team’s research, published in Nature Communications, enables scientists to not only analyze, but also generate realistic nanoparticle motion trajectories that are indistinguishable from real experiments, based on thousands of experimental recordings.

A Clearer Window into the Nanoworld

Conventional microscopes, even extremely powerful ones, struggle to observe moving nanoparticles in fluids. And traditional physics-based models, such as Brownian motion, often fail to fully capture the complexity of unpredictable nanoparticle movements, which can be influenced by factors such as viscoelastic fluids, energy barriers, or surface interactions.

To overcome these obstacles, the researchers developed a deep generative model (called LEONARDO) that can analyze and simulate the motion of nanoparticles captured by liquid-phase transmission electron microscopy (LPTEM), allowing scientists to better understand nanoscale interactions invisible to the naked eye. Unlike traditional imaging, LPTEM can observe particles as they move naturally within a microfluidic chamber, capturing motion down to the nanometer and millisecond.

“LEONARDO allows us to move beyond observation to simulation,” said Vida Jamali, assistant professor and Daniel B. Mowrey Faculty Fellow in ChBE@GT. “We can now generate high-fidelity models of nanoscale motion that reflect the actual physical forces at play. LEONARDO helps us not only see what is happening at the nanoscale but also understand why.”

To train and test LEONARDO, the researchers used a model system of gold nanorods diffusing in water. They collected more than 38,000 short trajectories under various experimental conditions, including different particle sizes, frame rates, and electron beam settings. This diversity allowed the model to generalize across a broad range of behaviors and conditions.

The Power of LEONARDO’s Generative AI

What distinguishes LEONARDO is its ability to learn from experimental data while being guided by physical principles, said study lead author Zain Shabeeb, a PhD student in ChBE@GT. LEONARDO uses a specialized “loss function” based on known laws of physics to ensure that its predictions remain grounded in reality, even when the observed behavior is highly complex or random.

“Many machine learning models are like black boxes in that they make predictions, but we don’t always know why,” Shabeeb said. “With LEONARDO, we integrated physical laws directly into the learning process so that the model’s outputs remain interpretable and physically meaningful.”
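To make the idea of a physics-guided loss concrete, here is a minimal sketch. It is not LEONARDO's loss function, which the article does not spell out. It assumes the simplest possible physical prior, free one-dimensional Brownian motion, where each step has variance 2·D·Δt, and adds a penalty when the predicted steps stray from that value. The function name, the penalty form, and the `weight` parameter are all hypothetical.

```python
from statistics import fmean, pvariance


def physics_informed_loss(predicted, observed, diffusion_coeff, dt, weight=1.0):
    """Data-fit error plus a penalty for violating a diffusion law.

    predicted, observed: 1D particle positions sampled every `dt` seconds.
    Assumed physical prior: free Brownian motion, where the variance of
    each displacement equals 2 * diffusion_coeff * dt.
    """
    # Data term: mean squared error against the experimental trajectory.
    data_term = fmean([(p - o) ** 2 for p, o in zip(predicted, observed)])

    # Physics term: compare the step variance with the theoretical value.
    steps = [b - a for a, b in zip(predicted, predicted[1:])]
    expected_variance = 2.0 * diffusion_coeff * dt
    physics_term = (pvariance(steps) - expected_variance) ** 2

    return data_term + weight * physics_term


# Toy trajectory whose steps have variance 1.0, matching D = 0.5, dt = 1.
observed = [0.0, 1.0, 0.0, 1.0, 0.0]
flat_guess = [0.0, 0.0, 0.0, 0.0, 0.0]

print(physics_informed_loss(observed, observed, diffusion_coeff=0.5, dt=1.0))
print(physics_informed_loss(flat_guess, observed, diffusion_coeff=0.5, dt=1.0))
```

The flat guess is penalized twice: once for missing the data and once for showing no diffusion at all. That second penalty is what keeps a model's output physically plausible even where the data alone would not.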

LEONARDO uses a transformer-based architecture, the same kind of model behind many modern language AI applications. Just as a language model learns grammar and syntax, LEONARDO learns the “grammar” of nanoparticle movement, identifying hidden reasons for the ways nanoparticles interact with their environment.

Future Impact

By simulating vast libraries of possible nanoparticle motions, LEONARDO could help train AI systems that automatically control and adjust electron microscopes for optimal imaging, paving the way for “smart” microscopes that adapt in real time, the researchers said.

“Understanding nanoscale motion is of growing importance to many fields, including drug delivery, nanomedicine, polymer science, and quantum technologies,” Jamali said. “By making it easier to interpret particle behavior, LEONARDO could help scientists design better materials, improve targeted therapies, and uncover new fundamental insights into how matter behaves at small scales.”

CITATION: Zain Shabeeb, Naisargi Goyal, Pagnaa Attah Nantogmah, and Vida Jamali, “Learning the diffusion of nanoparticles in liquid phase TEM via physics-informed generative AI,” Nature Communications, 2025.

News Contact

Brad Dixon, braddixon@gatech.edu

Jul. 11, 2025

A study from Georgia Tech’s School of Chemical and Biomolecular Engineering introduces LEONARDO, a deep generative AI model that reveals the hidden dynamics of nanoparticle motion in liquid environments. By analyzing over 38,000 experimental trajectories captured through liquid-phase transmission electron microscopy (LPTEM), LEONARDO not only interprets but also generates realistic simulations of nanoscale movement. This innovation marks a major leap in understanding the physical forces at play in nanotechnology, with promising implications for medicine, materials science, and sensor development.

Jul. 10, 2025

Giga, a global initiative focused on expanding internet connectivity to schools, launched its new tech and innovation event series “Giga Talks” on June 19 with a keynote address from Pascal Van Hentenryck, a leading artificial intelligence expert from the Georgia Institute of Technology.

Van Hentenryck serves as the A. Russell Chandler III Chair and Professor in Georgia Tech’s H. Milton Stewart School of Industrial and Systems Engineering. He is also the director of Tech AI, Georgia Tech’s new strategic hub for artificial intelligence, and the U.S. National Science Foundation AI Institute for Advances in Optimization (AI4OPT), which operates under Tech AI’s umbrella.

In his talk, “AI for Social Good,” Van Hentenryck showcased how AI technologies can drive impact across key sectors—including mobility, education, healthcare, disaster response, and e-commerce. Drawing from ongoing research and real-world deployments, he emphasized the critical role of human-centered design and interdisciplinary collaboration in developing AI that benefits society at large.

“AI has tremendous potential to serve the public good when guided by ethics, equity, and purpose-driven innovation,” said Van Hentenryck. “At Georgia Tech, our work aims to harness this potential to create meaningful change in people’s lives.”

The event marked the debut of Giga Talks, a new speaker series designed to convene global thought leaders, engineers, and policymakers around timely issues in technology and innovation. The initiative supports Giga’s broader mission to connect every school in the world to the internet and unlock digital opportunities for children everywhere.

A video recording of Van Hentenryck’s talk is available here.

News Contact

Breon Martin

AI Marketing Communications Manager

Jul. 10, 2025

Pascal Van Hentenryck, the A. Russell Chandler III Chair and professor at Georgia Tech, and director of the U.S. National Science Foundation AI Institute for Advances in Optimization (AI4OPT) and Tech AI, delivered a keynote address at the 11th IFAC Conference on Manufacturing Modelling, Management and Control (MIM 2025), hosted by the Norwegian University of Science and Technology (NTNU).

Combining Technologies for Real-World Results

Van Hentenryck introduced a series of foundational approaches—such as primal and dual optimization proxies, predict-then-optimize strategies, self-supervised learning, and deep multi-stage policies—that enable AI systems to operate effectively and responsibly in high-stakes, real-time environments. These frameworks demonstrate the power of integrating AI with domain-specific reasoning to achieve results unattainable by either field alone.

“This is not just about building smarter algorithms,” Van Hentenryck said. “It’s about designing AI that can adapt, learn, and optimize under uncertainty—across supply chains, energy systems, and manufacturing networks.”

Grounded in Real-World Impact

The keynote aligned directly with the MIM 2025 focus on logistics and production systems. Drawing from recent work in supply chain optimization and smart manufacturing, Van Hentenryck emphasized how AI4OPT’s research is already generating measurable impact in industry.

MIM 2025, organized by NTNU’s Production Management Research Group and supported by MHI and CICMHE, featured more than 40 experts delivering keynotes, presenting research, and leading breakout sessions across topics in modeling, control, and decision-making in manufacturing and logistics.

About Tech AI

Tech AI is Georgia Tech’s strategic initiative to lead in the development and application of artificial intelligence across disciplines and industries. Serving as a unifying platform for AI research, education, and collaboration, Tech AI connects researchers, industry, and government partners to drive responsible innovation in areas such as healthcare, mobility, energy, sustainability, and education. As director of Tech AI, Pascal Van Hentenryck helps guide the institute’s research vision and strategic alignment across Georgia Tech’s AI portfolio. Learn more at ai.gatech.edu.

About AI4OPT

The AI Institute for Advances in Optimization (AI4OPT) is one of the National Science Foundation’s flagship AI Institutes and is led by Georgia Tech. The institute brings together experts in artificial intelligence, optimization, and control to tackle grand challenges in supply chains, transportation, and energy systems.

AI4OPT is one of several NSF-funded AI institutes housed within Tech AI’s collaborative framework, enabling cross-disciplinary research with real-world outcomes. Learn more at ai4opt.org.

News Contact

Breon Martin

AI Marketing Communications Manager
