Aug. 21, 2025

In the first town hall with its new Dean, College of Lifetime Learning colleagues came together to explore a central question: what does it mean to learn, and how can that spirit shape the way we work?

Bill Gaudelli, Ed.D., joined the College Aug. 1 as the inaugural dean. He brings more than 35 years of experience as an educator, researcher, and academic administrator to this role.

Rather than beginning with charts or plans, the Dean opened with two polls. The first asked: What did you learn? What did you notice about your learning? How did you feel before, during, and after? The second posed a broader challenge: What is a learning organization? Colleagues shared learning experiences that ran along a fairly common path: anticipation, uncertainty, frustration, and, ultimately, accomplishment.

“Not one of you said, ‘I had no emotional response to the learning.’ Not a person. There was joy. There was a lot of laughter. And everyone had something to share because that is how fundamental learning is,” Dean Gaudelli observed. “And so, as a learning organization, we've got to think about how we meet the moment and the learner in a context that's totally new. We've got to figure that out in a new space, using new tools, recognizing that the desire to learn is permanent in humans.”

With these shared experiences in mind, Gaudelli introduced the concept of a learning organization, drawing from Peter Senge’s landmark work The Fifth Discipline. He outlined the five disciplines (personal mastery, mental models, shared vision, team learning, and systems thinking) and invited colleagues to see them not as abstract theory, but as a practical framework for how the College might operate.

Becoming a learning organization, Dean Gaudelli said, is not a label but a way of working: embracing curiosity, being adaptable, questioning assumptions, and understanding that the whole is stronger than its individual parts. “If we’re going to promote learning in the world, then we have to be learning ourselves,” he noted. That means committing to continuous improvement, viewing mistakes as opportunities, and aligning every role with a shared purpose.

This vision brings to life the College’s mission to support learning across the lifespan and positions the College to respond to a rapidly changing educational landscape. By building systems and culture that make learning continuous, collaborative, and transformative, Gaudelli sees an opportunity to lead not just in what the College teaches, but in how it works together.

Dr. Roslyn Martin, Director of Professional Education Programs for the College and GTPE, later reflected on the meeting. “It was powerful to reflect on the learning journey and experience the process organically to deepen our understanding,” she shared. “And I’m excited about this pivotal chapter for Georgia Tech, as the College creates more impactful learning experiences and pathways to transformative education for communities around the globe!”

In the months ahead, the College will begin crafting a new strategic plan rooted in these ideas. Gaudelli encouraged everyone to take an active role in shaping the future. His closing challenge: learn something new in the coming month, and not for the skill alone, but for the insight into how you learn. That awareness, he said, is the foundation for building a true learning organization.

Aug. 11, 2025

Team Atlanta, a group of Georgia Tech students, faculty, and alumni, achieved international fame on Friday when they won DARPA’s AI Cyber Challenge (AIxCC) and its $4 million grand prize.

AIxCC was a two-year competition to create a cyber reasoning system that uses artificial intelligence (AI) to autonomously find and patch vulnerabilities.

“This is a once-in-a-generation competition organized by DARPA about how to utilize recent advancements in AI to use in security-related tasks,” said Georgia Tech Professor Taesoo Kim.

“As hackers, we started this competition as AI skeptics, but now we truly believe in the potential of adopting large language models (LLM) when solving security problems.”

The Atlantis system was Team Atlanta’s submission. Atlantis is a fuzzer, an automated program that finds vulnerabilities or bugs in software, that the team enhanced with several different types of LLMs.
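To make the term concrete, here is a minimal sketch of mutation-based fuzzing in Python. It is not Team Atlanta’s Atlantis code and involves no LLMs. `parse_record` is a hypothetical buggy target and `fuzz` is an illustrative helper, both invented for this example.

```python
import random


def parse_record(data: bytes) -> int:
    """Hypothetical target: first byte is a length, the rest is the payload."""
    if len(data) < 2:
        return 0
    length = data[0]
    payload = data[1:]
    # Deliberate bug: the length byte is trusted without a bounds check.
    return payload[length - 1] if length else 0


def fuzz(target, seed_input: bytes, iterations: int = 1000, rng_seed: int = 0):
    """Overwrite one random byte per iteration and record inputs that crash."""
    rng = random.Random(rng_seed)  # fixed seed so runs are repeatable
    crashes = []
    for _ in range(iterations):
        data = bytearray(seed_input)
        position = rng.randrange(len(data))
        data[position] = rng.randrange(256)
        try:
            target(bytes(data))
        except Exception as exc:  # any exception counts as a finding
            crashes.append((bytes(data), type(exc).__name__))
    return crashes


crashes = fuzz(parse_record, b"\x03abc")
print(f"{len(crashes)} crashing inputs found")
```

Every crash in this toy run comes from a mutated length byte that points past the end of the payload, which is the kind of bug a fuzzer is meant to surface automatically.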

While developing the system, Team Atlanta reported the heat put out by the GPU rack was hot enough to roast marshmallows.

The team comprised hackers, engineers, and cybersecurity researchers. The Georgia Tech alumni on the team also represented their employers, which include KAIST, POSTECH, and Samsung Research. Kim is also the vice president of Samsung Research.

News Contact

John Popham

Communications Officer II at the School of Cybersecurity and Privacy

Aug. 13, 2025

Juba Ziani is on a mission to change how the world thinks about data in artificial intelligence. An assistant professor in Georgia Tech’s H. Milton Stewart School of Industrial and Systems Engineering (ISyE), Ziani has secured a $425,000 National Science Foundation (NSF) grant to explore how smart incentives can lead to higher-quality, more widely shared datasets. His work forms part of a $1 million NSF collaboration with Columbia University computer science professor Augustin Chaintreau and senior personnel Daniel Björkegren, aiming to challenge outdated ideas and shape a more reliable future for AI.

Artificial intelligence (AI) increasingly shapes critical decisions in everyday life, from who sees a job posting or qualifies for a loan, to who is granted bail in the criminal justice system. These systems rely on historical data to learn patterns and make predictions. For example, an applicant might be approved for a loan because an AI system recognizes that previous borrowers with similar credit histories successfully repaid. But when the underlying training data is incomplete or low-quality, the consequences can be serious, disproportionately affecting those from groups historically excluded from such opportunities.

Traditionally, researchers have assumed that making AI-assisted decision-making more robust and representative comes at the expense of efficiency. This assumption treats training data as fixed and unchangeable, which can place limits on the potential of AI systems. But as large-scale data platforms grow and the exchange of data becomes more accessible, the conventional trade-off between robustness and efficiency may no longer apply.

Ziani's research will explore how the economic value of data, combined with the effects of data markets and network dynamics, can lead to incentives that naturally improve dataset robustness. By identifying the conditions under which the supposed efficiency trade-off disappears, Ziani and his collaborators hope to open the door to more reliable and equitable AI systems.

“Our project demonstrates how carefully designing incentives—both for data producers and data buyers—can enhance the quality and robustness of datasets without compromising performance,” said Ziani. “This has the potential to fundamentally reshape the way AI systems are trained and how data is collected, shared, and valued.”

With this work, Ziani aims to advance both the theory and practice of AI and data economics, ensuring that as AI continues to transform society, it does so in a way that is fair, accurate, and trustworthy.

News Contact

Erin Whitlock Brown, Communications Manager II

Aug. 08, 2025

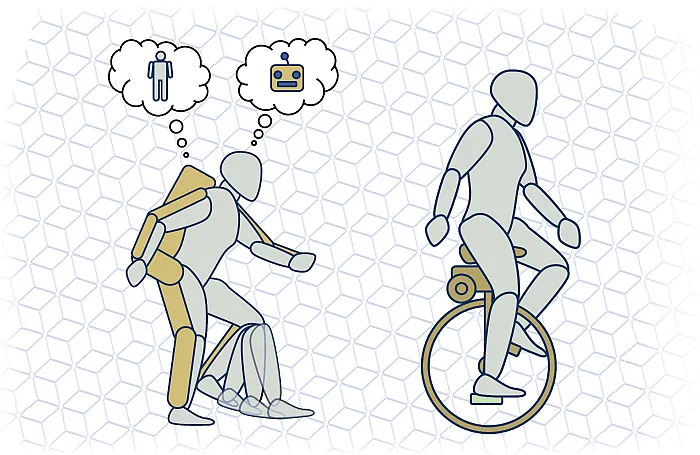

Research into tailored assistive and rehabilitative devices has seen recent advancements, but the goal remains out of reach because data on how humans learn complex balance tasks is sparse. To address this gap, an interdisciplinary team of faculty from Florida State University and Georgia Tech has been awarded ~$798,000 by the NSF to launch a study to better understand human motor learning and human-robot interaction dynamics during the learning process.

The project is led by principal investigator (PI) Taylor Higgins, assistant professor in the FAMU-FSU Department of Mechanical Engineering. The co-PIs are Shreyas Kousik, assistant professor in Georgia Tech’s George W. Woodruff School of Mechanical Engineering, and Brady DeCouto, assistant professor in FSU’s Anne Spencer Daves College of Education, Health, and Human Sciences. The research will use participants’ acquisition of unicycle-riding skill to gain a better grasp of human motor learning in tasks requiring balance and complex movement in space. Although it might sound a bit odd, most people don’t know how to ride a unicycle and riding one requires balance, so the data will cover the learning process from novice to skilled across the participant pool.

Using data acquired from human participants, the team will develop a “robotics assistive unicycle” that will be used to train the next pool of novice unicycle riders. The aim is to gauge whether, and how rapidly, human motor learning outcomes improve with the assistive unicycle. The participants who engage with the robotic unicycle will also give valuable insight into developing effective human-robot collaboration strategies.

The fact that deciding to get on a unicycle requires a bit of bravery might not be great for the participants, but it’s great for the research team. The project will also allow exploration into the interconnection between anxiety and human motor learning to discover possible alleviation strategies, thus increasing the likelihood of positive outcomes for future patients and consumers of these devices.

Author

-Christa M. Ernst

This Article Refers to NSF Award # 2449160

Sep. 19, 2025

Join the Georgia Tech Library in person and virtually Tuesday, Oct. 14, through Thursday, Oct. 16, for our inaugural AI Week, a mix of panel discussions and seminars aimed at celebrating and investigating the myriad ways researchers, students and faculty harness the burgeoning technology.

“We’re thrilled to bring this slate of events, discussions and learning opportunities to campus focused on the game-changing use of artificial intelligence happening across the Institute,” said Dean Leslie Sharp. “The Library has brought together industry experts, student practitioners and research faculty to offer a varied and intriguing set of learning opportunities for our community.”

AI Week 2025 will include five separate in-person and online events, including:

- Faculty Panel Discussion: Harnessing AI Tools to Make Teaching More Effective and Engaging

Oct. 14 | 10:30-11:15 a.m.

Scholars Event Network, first floor Price Gilbert

- Panel Discussion: Enhancing Research with AI Tools

Oct. 14 | 1-2 p.m.

Scholars Event Network, first floor Price Gilbert

- Trademark Fundamentals and Searching (ONLINE)

Oct. 15 | 2-3 p.m.

Online

- Patents in the Age of AI: Navigating the Changing Landscape (ONLINE)

Oct. 16 | 2-3 p.m.

Online

- Exploring AI Together for Study, Copilot and Firefly

Oct. 16 | 4:30-5:30 p.m.

Crosland Tower Fourth Floor Classroom

Aug. 06, 2025

The idea of people experiencing their favorite mobile apps as immersive 3D environments took a step closer to reality with a new Google-funded research initiative at Georgia Tech.

A new approach proposed by Tech researcher Yalong Yang uses generative artificial intelligence (GenAI) technologies to convert almost any mobile or web-based app into a 3D environment.

That includes application software programs from Microsoft and Adobe as well as any social media (TikTok), entertainment (Spotify), banking (PayPal), or food service app (Uber Eats) and everything in between.

Yang aims to make the 3D environments compatible with augmented and virtual reality (AR/VR) headsets and smart glasses. He believes his research could be a breakthrough in spatial computing and change how humans interact with their favorite apps and computer systems in general.

“We’ll be able to turn around and see things we want, and we can grab them and put them together,” said Yang, an assistant professor in the School of Interactive Computing. “We’ll no longer use a mouse to scroll or the keyboard to type, but we can do more things like physical navigation.”

Yang’s proposal recently earned him recognition as a 2025 Google Research Scholar. Along with converting popular social apps, his platform will be able to instantly render Photoshop, MS Office, and other workplace applications in 3D for AR/VR devices.

“We have so many applications installed in our machines to complete all the various types of work we do,” he said. “We use Photoshop for photo editing, Premiere Pro for video editing, Word for writing documents. We want to create an AR/VR ecosystem that has all these things available in one interface with all apps working cohesively to support multitasking.”

Filling The Gap With AI

Just as Google’s Veo and OpenAI’s Sora use generative-AI to create video clips, Yang believes it can be used to create interactive, immersive environments for any Android or Apple app.

“A critical gap in AR/VR is that we do not have all those existing applications, and redesigning all those apps will take forever,” he said. “It’s urgent that we have a complete ecosystem in VR to enable us to do the work we need to do. Instead of recreating everything from scratch, we need a way to convert these applications into immersive formats.”

The Google Play Store boasts 3.5 million apps for Android devices, while Apple’s App Store includes 1.8 million apps for iOS users.

Meanwhile, there are fewer than 10,000 apps available on the latest Meta Quest 3 headset, leaving a gap of millions of apps that will need 3D conversion.

“We envision a one-click app, and the (Android Package Kit) file output will be a Meta APK that you can install on your Meta Quest 3,” he said.

Yang said major tech companies like Apple have the resources to redesign their apps into 3D formats. However, small- to mid-sized companies that have created apps either do not have that ability or would take years to do so.

That’s where generative-AI can help. Yang plans to use it to convert source code from web-based and mobile apps into WebXR.

WebXR is a set of application programming interfaces (APIs) that enables developers to create AR/VR experiences within web browsers.

“We start with web-based content,” he said. “A lot of things are already based on the web, so we want to convert that user interface into WebXR.”

Building New Worlds

The process for converting mobile apps would be similar.

“Android uses an XML description file to define its user-interface (UI) elements. It’s very much like HTML on a web page. We believe we can use that as our input and map the elements to their desired location in a 3D environment. AI is great at translating one language to another — JavaScript to C-sharp, for example — so that can help us in this process.”
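As a rough illustration of the idea in that quote, the Python sketch below reads a small Android-style layout and assigns each UI element a position in 3D space. It is not Yang’s system and uses no generative AI. The sample layout, the `layout_to_panels` helper, and the choice to fan panels out on an arc in front of the viewer (with negative z as "forward") are all assumptions made for this example.

```python
import math
import xml.etree.ElementTree as ET

ANDROID_NS = "http://schemas.android.com/apk/res/android"

# Hypothetical layout file, written in the style of an Android XML layout.
LAYOUT_XML = """
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    android:orientation="vertical">
    <TextView android:id="@+id/title" android:text="Inbox" />
    <Button android:id="@+id/compose" android:text="Compose" />
    <Button android:id="@+id/refresh" android:text="Refresh" />
</LinearLayout>
"""


def layout_to_panels(xml_text, radius=1.5, spacing_deg=30.0):
    """Place each child element on an arc `radius` meters from the viewer."""
    root = ET.fromstring(xml_text.strip())
    children = list(root)
    panels = []
    for index, element in enumerate(children):
        # Center the arc so the middle element sits straight ahead.
        offset = index - (len(children) - 1) / 2
        angle = math.radians(offset * spacing_deg)
        panels.append({
            "widget": element.tag,
            "id": element.get(f"{{{ANDROID_NS}}}id"),
            "text": element.get(f"{{{ANDROID_NS}}}text"),
            "position": (
                round(radius * math.sin(angle), 3),   # x: left/right
                0.0,                                  # y: eye level
                round(-radius * math.cos(angle), 3),  # z: forward is negative
            ),
        })
    return panels


panels = layout_to_panels(LAYOUT_XML)
for panel in panels:
    print(panel["widget"], panel["text"], panel["position"])
```

A real converter would also need to carry over styling, event handlers, and nested layouts. This sketch covers only the element-to-location mapping the quote describes.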

If generative-AI can create environments, the next step would be to create a seamless user experience.

“In a normal desktop or mobile application, we can only see one thing at a time, and it’s the same for a lot of VR headsets with one application occupying everything. To live in a multi-task environment, we can’t just focus on one thing because we need to keep switching our tasks, so how do we break all the elements down and let them float around and create a spatial view of them surrounding the user?”

Along with Assistant Professor Cindy Xiong, Yang is one of two researchers in the School of IC to be named a 2025 Google Research Scholar.

Four researchers from the College of Computing have received the award. The other two are Ryan Shandler from the School of Cybersecurity and Privacy and Victor Fung from the School of Computational Science and Engineering.

Jul. 31, 2025

Walk into any room Aleksandra Teng Ma’s been working in this summer, and you’ll probably hear a mix of experimental sounds, snippets of Amy Winehouse vocals, and the occasional Animal Crossing tune playing in the background. That’s just how her brain works—blending tech, artistry, and everyday play into something entirely her own.

Aleksandra is a master’s student in Music Technology at Georgia Tech, but “student” barely scratches the surface. This summer, she’s been everywhere—physically in Massachusetts and intellectually somewhere between a Pride performance and a human-AI jam session at MIT.

“I’m always with my microphone and MIDI keyboard,” she says, like it’s just second nature. “I love singing and coming up with tunes.”

Live from MIT — It’s Human + AI Jamming

Forget dusty textbooks and silent labs—Aleksandra’s research life is about real-time musical interactions between humans and AI. As a visiting researcher at MIT this summer, she’s digging into what it looks like when musicians "jam" with intelligent systems. Think futuristic band practice, but with algorithms joining in.

“It’s giving me a lot of exposure to co-design methodologies,” she explains, “and letting me observe how musicians respond to each other—and to AI.”

It’s not just code and theory, either. The insights come alive when she brings them to the stage. This summer, Aleksandra’s band performed at The Music Porch in Reading, MA for Pride Month. Their cover of Pink Pony Club turned into a moment she won’t forget.

“It was so fun seeing people—especially teenagers—singing and dancing together,” she says. “That’s one of those moments where I just thought, yep, this is why I picked music tech.”

From Winehouse Covers to Ableton Experiments

Despite her research chops, Aleksandra hasn’t lost touch with the joy of just making music. She sings and plays keyboard in a band, covers Amy Winehouse songs, and occasionally writes music just for fun. (Her dream studio partner? You guessed it: Amy herself.)

She’s also been expanding her technical toolkit this summer, diving deeper into sound design with Ableton and Serum.

“Still learning,” she says, “but I’m using them for sound design in songs—and loving it.”

And then there are the unexpected “whoa” moments. Like when she built a vocal patch for the Pixies’ Where Is My Mind? to use live during a performance.

“It was haunting,” she says. “And it worked so well live.”

Dream Tech and Georgia Tech

Ask Aleksandra what she’d invent if she could mash up two instruments, and she already has an idea:

“Automatic vocal effects through a microphone with a built-in amplifier,” she says, laughing. “Honestly, someone probably already made this, but I want it anyway.”

That kind of thinking is exactly what her time at Georgia Tech has sparked. Before the program, she saw music mostly through the lens of conventional instruments. Now? She’s all about how software and hardware can expand what music even is.

Her Summer, in Sound

If Aleksandra’s summer had a vibe, it’d be:

- A creek bubbling in the background

- A long, ghostly reverb trail on a siren vocal

- And the ever-cozy tones of Animal Crossing

Not exactly your typical lab soundtrack—but that’s the beauty of it.

This fall, she’s heading back to Georgia Tech after a gap year at Bose, ready to jump into research on multimodal music source separation (AKA teaching machines to pick apart and understand layers in music the way humans do).

And yes, she’ll still be singing.

Hits with Aleksandra

- Current summer jams: Rosebud by Oklou & the new Lorde album

- What people don’t “get” about her work: “How music signals work on a granular level”

Aleksandra Ma doesn’t just study music tech—she lives it. Whether she’s tweaking reverb patches, performing under porch lights, or teaching AI how to groove, she’s showing what it really means to be a 21st-century musician.

Jul. 24, 2025

Computer vision enables AI to see the world. It’s already being used for self-driving vehicles, medical imaging, face recognition, and more.

Georgia Tech faculty and student experts advancing this field were in action in June at the globally renowned CVPR conference from IEEE and the Computer Vision Foundation. Georgia Tech was in the top 10% of all organizations for lead authors and the top 4% for number of papers. More than 2,000 organizations had research accepted into CVPR's main program.

Watch the video and hear from Tech experts about what’s new and what’s coming next. Featured students include College of Computing experts Fiona Ryan, Chengyue Huang, Brisa Maneechotesuwan, and Lex Whalen.

These researchers in computer vision are showing how they are extending AI capabilities with image and video data.

HIGHLIGHTS:

- College of Computing faculty, from the Schools of Interactive Computing (IC) and Computer Science (CS), represented the majority of Tech's faculty in the CVPR papers program (8 of 10 faculty).

- IC faculty Zsolt Kira and Bo Zhu each coauthored an oral paper, the top 3% of accepted papers. IC faculty member Judy Hoffman coauthored two highlight papers, the top 20% of acceptances.

- Georgia Tech is in the top 10% of all organizations for number of first authors and the top 4% for number of papers. More than 2,000 organizations had research in the main program.

- Tech experts were on 30 research paper teams across 16 research areas. Topics with more than one Tech expert included:

• Image/video synthesis & generation

• Efficient and scalable vision

• Multi-modal learning

• Datasets and evaluation

• Humans: Face, body, gesture, etc.

• Vision, language, and reasoning

• Autonomous driving

• Computational imaging

News Contact

Joshua Preston

Communications Manager, Marketing and Research

College of Computing

jpreston7@gatech.edu

Jul. 23, 2025

A groundbreaking new medical dataset is poised to revolutionize healthcare in Africa by improving chatbots’ understanding of the continent’s most pressing medical issues and increasing their awareness of accessible treatment options.

AfriMed-QA, developed by researchers from Georgia Tech and Google, could reduce the burden on African healthcare systems.

The researchers said people in need of medical care file into overcrowded clinics and hospitals and face excruciatingly long waits with no guarantee of admission or quality treatment. There aren’t enough trained healthcare professionals available to meet the demand.

Some healthcare question-answer chatbots have been introduced to treat those in need. However, the researchers said there’s no transparent or standardized way to test or verify their effectiveness and safety.

The dataset will enable technologists and researchers to develop more robust and accessible healthcare chatbots tailored to the unique experiences and challenges of Africa.

One such new tool is Google’s MedGemma, a large language model (LLM) designed to process medical text and images. AfriMed-QA was used to train and evaluate it.

AfriMed-QA stands as the most extensive dataset that evaluates LLM capabilities across various facets of African healthcare. It contains 15,000 question-answer pairs culled from over 60 medical schools across 16 countries and covering numerous medical specialties, disease conditions, and geographical challenges.

Tobi Olatunji and Charles Nimo co-developed AfriMed-QA and co-authored a paper about the dataset that will be presented at the Association for Computational Linguistics (ACL) conference next week in Vienna.

Olatunji is a graduate of Georgia Tech’s Online Master of Science in Computer Science (OMSCS) program and holds a Doctor of Medicine from the College of Medicine at the University of Ibadan in Nigeria. Nimo is a Ph.D. student in Tech’s School of Interactive Computing, where he is advised by School of IC professors Michael Best and Irfan Essa.

Focus on Africa

Nimo, Olatunji, and their collaborators created AfriMed-QA as a response to MedQA, a large-scale question-answer dataset that tests the medical proficiency of all major LLMs. That includes Google’s Gemini, OpenAI’s ChatGPT, and Anthropic’s Claude, among others.

However, because MedQA is based solely on the U.S. Medical Licensing Examination, Nimo said it is not adequate to serve patients in underdeveloped African countries or the Global South at large.

“AfriMed-QA has the contextualized and localized understanding of African medical institutions that you don’t get from MedQA,” Nimo said. “There are specific diseases and local challenges in our dataset that you wouldn't find in any U.S.-based dataset.”

Olatunji said one problem African users may encounter using LLMs trained on MedQA is that they may advise unfeasible treatments or unaffordable prescription drugs.

“You consider the types of drugs, diagnostics, procedures, or therapies that exist in the U.S. that are quite advanced. These treatments are much more accessible, for example, in the U.S. and Europe,” Olatunji said. “But in Africa, they’re too expensive and many times unavailable. They may cost over $100,000, and many people have no health insurance. Why recommend such treatments to someone who can’t obtain them?”

Another problem may be that the LLM doesn’t take a medical condition seriously if it isn’t predominant in the U.S.

“We tested many of these models, for example, on how they would manage sickle-cell disease signs and symptoms, and they focused on other ‘more likely’ causes and did not rank or consider sickle cell high enough as a possible cause,” he said. “They, for example, don’t consider sickle-cell as important as anemia and cancer because sickle-cell is less prevalent in the U.S.”

In addition to sickle-cell disease, Olatunji said some of the healthcare issues facing Africa that can be improved through AfriMed-QA include:

- HIV treatment and prevention

- Poor maternal healthcare

- Widespread malaria cases

- Physician shortage

- Clinician productivity and operational efficiency

Google Partnership

Mercy Asiedu, senior author of the AfriMed-QA paper and research scientist at Google Research, has dedicated her career to improving healthcare in Africa. Her work began as a Ph.D. student at Duke University, where she invented the Callascope, a groundbreaking non-invasive tool for gynecological examinations.

With her current focus on democratizing healthcare through artificial intelligence (AI), Asiedu, who is from Ghana, helped create a research consortium to develop the dataset. The consortium consists of Georgia Tech, Google, Intron, Bio-RAMP Research Labs, the University of Cape Coast, the Federation of African Medical Students Association, and Sisonkebiotik.

Sisonkebiotik is an organization of researchers that drives healthcare initiatives to advance data science, machine learning, and AI in Africa.

Olatunji leads the Bio-RAMP Research Lab, a community of healthcare and AI researchers, and he is the founder and CEO of Intron, which develops natural-language processing technologies for African communities.

In May, Google released MedGemma, which uses both the MedQA and AfriMed-QA datasets to form a more globally accessible healthcare chatbot. MedGemma has several versions, including 4-billion and 27-billion parameter models, which support multimodal inputs that combine images and text.

“We are proud the latest medical-focused LLM from Google, MedGemma, leverages AfriMed-QA and improves performance in African contexts,” Asiedu said.

“We started by asking how we could reduce the burden on Africa’s healthcare systems. If we can get these large-language models to be as good as experts and make them more localized with geo-contextualization, then there’s the potential to task-shift to that.”

The project is supported by the Gates Foundation and PATH, a nonprofit that improves healthcare in developing countries.

Jul. 16, 2025

The National Science Foundation (NSF) has awarded Georgia Tech and its partners $20 million to build a powerful new supercomputer that will use artificial intelligence (AI) to accelerate scientific breakthroughs.

Called Nexus, the system will be one of the most advanced AI-focused research tools in the U.S. Nexus will help scientists tackle urgent challenges such as developing new medicines, advancing clean energy, understanding how the brain works, and driving manufacturing innovations.

“Georgia Tech is proud to be one of the nation’s leading sources of the AI talent and technologies that are powering a revolution in our economy,” said Ángel Cabrera, president of Georgia Tech. “It’s fitting we’ve been selected to host this new supercomputer, which will support a new wave of AI-centered innovation across the nation. We’re grateful to the NSF, and we are excited to get to work.”

Designed from the ground up for AI, Nexus will give researchers across the country access to advanced computing tools through a simple, user-friendly interface. It will support work in many fields, including climate science, health, aerospace, and robotics.

“The Nexus system's novel approach combining support for persistent scientific services with more traditional high-performance computing will enable new science and AI workflows that will accelerate the time to scientific discovery,” said Katie Antypas, National Science Foundation director of the Office of Advanced Cyberinfrastructure. “We look forward to adding Nexus to NSF's portfolio of advanced computing capabilities for the research community.”

Nexus Supercomputer — In Simple Terms

- Built for the future of science: Nexus is designed to power the most demanding AI research — from curing diseases, to understanding how the brain works, to engineering quantum materials.

- Blazing fast: Nexus can crank out over 400 quadrillion operations per second — the equivalent of everyone in the world continuously performing 50 million calculations every second.

- Massive brain plus memory: Nexus combines the power of AI and high-performance computing with 330 trillion bytes of memory to handle complex problems and giant datasets.

- Storage: Nexus will feature 10 quadrillion bytes of flash storage, equivalent to about 10 billion reams of paper. Stacked, that’s a column reaching 500,000 km high — enough to stretch from Earth to the moon and a third of the way back.

- Supercharged connections: Nexus will have lightning-fast connections to move data almost instantaneously, so researchers do not waste time waiting.

- Open to U.S. researchers: Scientists from any U.S. institution can apply to use Nexus.
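The comparisons in the list above can be checked with simple arithmetic. The short Python sketch below does so under three assumptions that are not stated in the article: a world population of about 8 billion, a ream of paper about 5 cm thick, and an average Earth-moon distance of about 384,400 km.

```python
# Figures quoted in the list above.
OPS_PER_SECOND = 400 * 10**15   # "over 400 quadrillion operations per second"
STORAGE_BYTES = 10 * 10**15     # "10 quadrillion bytes of flash storage"
REAMS_OF_PAPER = 10 * 10**9     # "about 10 billion reams of paper"

# Assumptions made for this check (not from the article).
WORLD_POPULATION = 8 * 10**9    # roughly 8 billion people
REAM_THICKNESS_CM = 5           # roughly 5 cm per 500-sheet ream
EARTH_MOON_KM = 384_400         # average Earth-moon distance

ops_per_person = OPS_PER_SECOND // WORLD_POPULATION
bytes_per_ream = STORAGE_BYTES // REAMS_OF_PAPER
stack_km = REAMS_OF_PAPER * REAM_THICKNESS_CM // 100_000  # 100,000 cm per km
fraction_of_return_trip = (stack_km - EARTH_MOON_KM) / EARTH_MOON_KM

print(f"Operations per person per second: {ops_per_person:,}")
print(f"Bytes represented by each ream: {bytes_per_ream:,}")
print(f"Height of the paper stack: {stack_km:,} km")
print(f"Share of the return trip covered: {fraction_of_return_trip:.0%}")
```

Under these assumptions the numbers line up with the article: 50 million operations per person per second, a 500,000 km stack, and about 30% of the trip back from the moon, which is close to a third.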

Why Now?

AI is rapidly changing how science is investigated. Researchers use AI to analyze massive datasets, model complex systems, and test ideas faster than ever before. But these tools require powerful computing resources that — until now — have been inaccessible to many institutions.

This is where Nexus comes in. It will make state-of-the-art AI infrastructure available to scientists all across the country, not just those at top tech hubs.

“This supercomputer will help level the playing field,” said Suresh Marru, principal investigator of the Nexus project and director of Georgia Tech’s new Center for AI in Science and Engineering (ARTISAN). “It’s designed to make powerful AI tools easier to use and available to more researchers in more places.”

Srinivas Aluru, Regents’ Professor and senior associate dean in the College of Computing, said, “With Nexus, Georgia Tech joins the league of academic supercomputing centers. This is the culmination of years of planning, including building the state-of-the-art CODA data center and Nexus’ precursor supercomputer project, HIVE.”

Like Nexus, HIVE was supported by NSF funding. Both Nexus and HIVE are supported by a partnership between Georgia Tech’s research and information technology units.

A National Collaboration

Georgia Tech is building Nexus in partnership with the National Center for Supercomputing Applications at the University of Illinois Urbana-Champaign, which runs several of the country’s top academic supercomputers. The two institutions will link their systems through a new high-speed network, creating a national research infrastructure.

“Nexus is more than a supercomputer — it’s a symbol of what’s possible when leading institutions work together to advance science,” said Charles Isbell, chancellor of the University of Illinois and former dean of Georgia Tech’s College of Computing. “I'm proud that my two academic homes have partnered on this project that will move science, and society, forward.”

What’s Next

Georgia Tech will begin building Nexus this year, with its expected completion in spring 2026. Once Nexus is finished, researchers can apply for access through an NSF review process. Georgia Tech will manage the system, provide support, and reserve up to 10% of its capacity for its own campus research.

“This is a big step for Georgia Tech and for the scientific community,” said Vivek Sarkar, the John P. Imlay Dean of Computing. “Nexus will help researchers make faster progress on today’s toughest problems — and open the door to discoveries we haven’t even imagined yet.”

News Contact

Siobhan Rodriguez

Senior Media Relations Representative

Institute Communications
