Oct. 20, 2025

The Royal Society of NSW and the Learned Academies are hosting their 2025 Forum, “AI: The Hope and the Hype,” on November 6 at Government House, Sydney. The event will explore how artificial intelligence can deliver real-world benefits while managing its risks.

We’re proud to share that Tech AI’s own Pascal Van Hentenryck, A. Russell Chandler III Chair and Director of Georgia Tech’s AI Hub, will be among the featured speakers—bringing Georgia Tech’s global perspective on building trustworthy, impactful AI systems.

Learn more about the forum: royalsoc.org.au/events/rsnsw-and-learned-academies-forum-2025

Oct. 15, 2025

Instructors creating online courses have long faced a tradeoff: use text-based materials that are easy to update, or invest in engaging but time-consuming video formats. As a result, learners often get either flexibility or immersion, but rarely both.

“In a field that moves as fast as artificial intelligence, it’s important to be able to update material frequently,” says David Joyner, executive director of online education in the College of Computing. “That’s usually a problem because re-recording means going back into the studio and trying to make the new content fit in with the old.”

Joyner’s latest massive open online course (MOOC), Foundations of Generative AI, uses artificial intelligence to solve that challenge. Images for the course are created using Sora and DALL·E 3, while early drafts of quizzes were generated by GPT-5. The course also uses Grady, an AI autograder that provides feedback on open-ended essays.

The most striking innovation is DAI-vid (pronounced day-eye-vid), a video avatar of Joyner that leads the instruction. To create it, Joyner uploaded a five-minute clip of himself to the generative AI platform HeyGen, along with course scripts and other inputs. The result is a lifelike digital instructor that lets Joyner update his lessons far more easily.

“With AI, we can just modify the text and have the updated video pop right out,” Joyner says. “It takes minutes at my desk instead of an hour in the studio.”

This approach allows Joyner to keep course materials current and produce new videos entirely on his own. “It’s strange, but in a lot of ways this course feels more like it’s mine than the ones where I’m on camera,” he says. “Because AI lets me handle every part of production myself, the finished product feels like my complete work.”

Joyner sees this experiment as an example of AI’s potential to enhance human talent rather than replace it. “Give me AI and I can do five times more than I could alone,” he says. “But give it to our professional video producers, and they will still far outpace me, because expertise matters most. AI just amplifies it.”

Foundations of Generative AI is now available on edX, and the same material is also part of the OMSCS course CS7637: Knowledge-Based AI.

Oct. 06, 2025

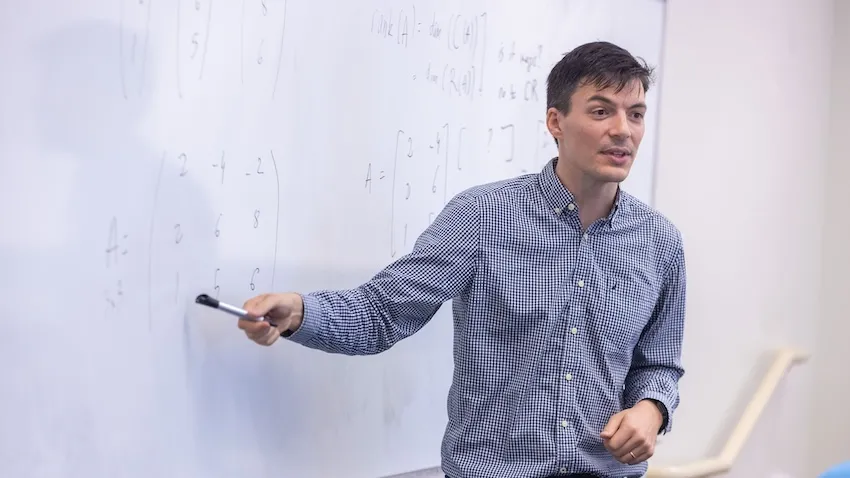

Students in machine learning and linear algebra courses this semester are learning from one of Georgia Tech’s most celebrated instructors.

Raphaël Pestourie has earned back-to-back selections to the Institute’s Course Instructor Opinion Survey (CIOS) honor roll, placing him among the top-ranked teachers for Fall 2024 and Spring 2025.

By returning to the classroom this semester to teach two more courses, Pestourie continues to leverage proven experience to mentor the next generation of researchers in his field.

“Students played a very important part in the survey process, and I thank them for making the classes great,” said Pestourie, an assistant professor in the School of Computational Science and Engineering (CSE).

“I'm incredibly grateful that students shared their feedback so that I could go the extra mile to not only apply my expertise to teach in ways that I think work, but transform my instruction to reach students in the most impactful way I can.”

CIOS honor rolls recognize instructors for outstanding teaching and educational impact, based on student feedback provided through end-of-course surveys.

Student praise of Pestourie’s CSE 8803: Scientific Machine Learning class placed him on the Fall 2024 CIOS honor roll. He earned selection to the Spring 2025 honor roll for his instruction of CX 4230: Computer Simulation.

CSE 8803 is a graduate-level, special topics class that Pestourie created around his field of expertise. Scientific machine learning involves merging two traditionally distinct fields: scientific computing and machine learning.

In scientific computing, researchers build and use models based on established physical laws. Machine learning differs in that it employs data-driven models to find patterns without prior assumptions. Combining the two fields opens new ways to analyze data and solve challenging problems in science and engineering.

Pestourie organized student-focused scientific machine learning symposiums in Fall 2023 and 2024. CSE 8803 students work on projects throughout the course and present their work at these symposiums. Pestourie will use the same approach this semester.

Unlike CSE 8803, CX 4230 is an undergraduate course. It teaches students how to create computer models of complex systems. A complex system has many interacting entities that influence each other’s behaviors and patterns. Disease spread in a human network is one example of a complex system.

CX 4230 is a required course for computer science students studying the Modeling & Simulation thread. It is also an elective course in the Scientific and Engineering Computing minor.

“I see 8803 as my educational baby. Being acknowledged for it with a CIOS honor roll felt great,” Pestourie said.

“In a way, I'm prouder of CX 4230 because it was a large, undergraduate regular offering that I was teaching for the first time. The honor roll selection came almost as a surprise.”

To be eligible for the honor roll recognition, instructors must have a minimum CIOS response rate of 70%. Composite scores for three CIOS items are then used to rank instructors. Those items are:

- Instructor’s respect and concern for students

- Instructor’s level of enthusiasm about the course

- Instructor’s ability to stimulate interest in the subject matter
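
The eligibility and ranking rule described above can be sketched in a few lines of Python. This is an illustration only: the article does not say how the three items are combined into a composite score, so the unweighted mean used here is an assumption, and the class name, field names, and sample values are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean

MIN_RESPONSE_RATE = 0.70  # eligibility threshold stated in the article


@dataclass
class CiosResult:
    instructor: str
    response_rate: float  # fraction of enrolled students who responded
    respect: float        # respect and concern for students
    enthusiasm: float     # enthusiasm about the course
    stimulation: float    # ability to stimulate interest in the subject

    def composite(self) -> float:
        # Assumption: unweighted mean of the three items.
        return mean([self.respect, self.enthusiasm, self.stimulation])


def rank_eligible(results):
    """Drop instructors below the response-rate threshold, then sort by composite."""
    eligible = [r for r in results if r.response_rate >= MIN_RESPONSE_RATE]
    return sorted(eligible, key=lambda r: r.composite(), reverse=True)


# Hypothetical sample data, not real survey results.
sample = [
    CiosResult("Instructor A", 0.82, 4.9, 4.8, 4.7),
    CiosResult("Instructor B", 0.65, 5.0, 5.0, 5.0),  # below 70%, so excluded
    CiosResult("Instructor C", 0.71, 4.6, 4.9, 4.5),
]
ranking = [r.instructor for r in rank_eligible(sample)]
```

In this sample, Instructor B has the highest scores but is excluded because the response rate falls below the threshold, which is the effect the 70% rule is meant to have.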

Georgia Tech’s Center for Teaching and Learning (CTL) and the Office of Academic Effectiveness present the CIOS Honor Rolls. CTL recognizes honor roll recipients at its Celebrating Teaching Day events, held annually in March.

CTL offers the Class of 1969 Teaching Fellowship, in which Pestourie participated in the 2024-2025 cohort. The program aims to broaden perspectives with insight into evidence-based best practices and exposure to new and innovative teaching methods.

The fellowship offers one-on-one consultations with a teaching and learning specialist. Cohorts meet weekly in the fall semester and monthly in the spring semester for instruction seminars.

The fellowship facilitates peer observations where instructors visit other classrooms, exchange feedback, and learn effective techniques to try in their own classes.

“I'm very grateful for the Class of 1969 fellowship program and to Karen Franklin, who coordinates it,” Pestourie said. “The honor roll is not just a one-person award. Support from the Institute and other people in the program made it happen.”

As in Fall 2023 and 2024, Pestourie is teaching CSE 8803: Scientific Machine Learning again this semester. Additionally, he teaches CSE 8801: Linear Algebra, Probability, and Statistics.

Linear algebra and applied probability are among the fundamental subjects in modern data science. As with his scientific machine learning class, Pestourie created CSE 8801 himself. This semester marks the second time Pestourie has taught the course since Fall 2024.

Pestourie designed CSE 8801 as a refresher course for newer graduate students. This addresses a point of need to help students get off to a good start at Georgia Tech. By offering guidance early in their graduate careers, Pestourie’s work in the classroom also aims to cultivate future collaborators and serve his academic community.

“I see teaching as our one shot at making a good first impression as a research field and a community,” he said.

“I see my work as a teacher as training my future colleagues, and I see it as my duty to our community to do my best in attracting the best talent toward our research field.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Sep. 26, 2025

Two Georgia Tech Ph.D. students created a student-run, faculty-graded, fully accredited course that links math, engineering and machine learning.

Andrew Rosemberg, with assistance from Michael Klamkin, both student researchers with the U.S. National Science Foundation AI Research Institute for Advances in Optimization (AI4OPT), designed the course to bridge gaps they saw in existing classrooms.

“While Georgia Tech offers excellent courses on optimization, control, and learning, we found no single class that connected all these fields in a cohesive way,” Rosemberg said. “In our research, it was clear these topics are deeply interconnected.”

Problem-driven learning

The course starts with fundamental problems and works backward to the methods required to solve them. Rosemberg said this approach was intentional: courses often center on methods in isolation rather than showing how the methods contribute to the larger context. Working backward from problems keeps the course focused on problem-driven discovery.

The class also serves as a way for Rosemberg and Klamkin to strengthen their own teaching and mentoring skills.

Goals and structure

The primary goal of the course is to help students build a clear understanding of how mathematical programming, classical optimal control, and machine learning techniques such as reinforcement learning connect to one another. Students are also working to produce a structured book by the end of the semester.

“The hope is that this resource will not only solidify our own learning but also serve as a guide for other students who want to approach these problems in the future,” Rosemberg said.

Responsibilities are distributed across participants, with each student delivering lectures, reviewing peers’ work, and contributing to collective discussions. Rosemberg and Klamkin provide additional support where needed, while faculty mentor and director of AI4OPT, Pascal Van Hentenryck, ensures the class stays aligned with broader academic objectives.

Student ownership and collaboration

Rosemberg noted that the student-led model gives students a deeper sense of ownership, makes them responsible for their own learning, and has a stronger impact. This model allows students to determine what to learn and why, which promotes critical thinking.

The course uses GitHub as its primary workflow platform. Rosemberg said this adds transparency and prepares students for real-world research practices.

“GitHub functions much like university systems such as Canvas or Piazza. It also has the added benefit of making all contributions visible to the world,” Rosemberg explained. “This helps students take pride and ownership of their work, while also introducing them to Git, an essential tool for software development and modern STEM research.”

Emerging insights and challenges

Students have begun aligning their research with course themes, including shaping qualifying exam topics around the intersections of operations research, optimal control and reinforcement learning. Rosemberg said exploring the comparative strengths of these fields side by side has been one of the most rewarding outcomes.

Balancing independence with guidance has proven to be the greatest challenge. Rosemberg said he and Klamkin have been evolving alongside the students in real time and have learned to emphasize mutual responsibility to promote the collective progress of the class.

Looking ahead

Rosemberg said future iterations of the course may place more emphasis on setting expectations early, given the effort required to deliver a lecture in this format.

His advice for others who may want to replicate the model is to focus on building a committed core team.

“Start with a small, motivated group,” Rosemberg said. “Like a startup, success depends less on the structure and more on the dedication of the people involved.”

News Contact

Jaci Bjorne

Sep. 11, 2025

A recently awarded $20 million NSF Nexus Supercomputer grant to Georgia Tech and partner institutes promises to bring incredible computing power to the CODA building. But what makes this supercomputer different and how will it impact research in labs on campus, across disciplinary units, and across institutions?

Purpose Built for AI Discovery

Nexus is Georgia Tech’s next-generation supercomputer, replacing the HIVE. Most operational high-performance computing systems used for research were designed before the explosion in machine learning and AI. This revolution has already produced successes in scientific research and data analysis across many domains, but the compute power, complex connectivity, and data storage these workloads need have limited the academic research community’s access to such systems. The Nexus design process retained a robust HPC system as a base while integrating artificial intelligence, machine learning and large-scale data science analysis from the ground up.

Expert Support for Faculty and Researchers

The Institute for Data Engineering and Science (IDEaS) and the College of Computing house the Center for Artificial Intelligence in Science and Engineering (ARTISAN) group. This team has collective experience working with national, cloud, commercial and institutional computing resources, and decades of experience with scientific tools that assist both teaching and research faculty. Nexus is the next logical step, bringing together everything they’ve learned to build a national resource optimized for the future of AI-driven science.

Suresh Marru, principal research scientist for the ARTISAN team, highlighted the need for this new resource: “AI is a core part of the Nexus vision. Today, researchers often spend more time setting up experiments, managing data, or figuring out how to run jobs on remote clusters than doing science. With Nexus, we’re flipping that script. By embedding AI into the platform, we help automate routine tasks, suggest optimal ways to run simulations, and even assist in generating input or analyzing results. This means researchers can move faster from question to insight. Instead of wrestling with infrastructure, they can focus on discovery.”

An Accessible AI Resource for GT & US Scientific Research

90% of Nexus capacity will be made available to the national research community through the NSF Advanced Computing Systems & Services (ACSS) program. Researchers from across the country, at universities, labs, and institutions of all sizes, will have access to this next-generation AI-ready supercomputer. For Georgia Tech research faculty and staff, the new system has multiple benefits:

- 10% of the time on the machine will be available for use by Georgia Tech researchers

- Nexus will allow GT researchers a chance to try out the latest hardware for AI computing

- Thanks to cyberinfrastructure tools from the ARTISAN group, Nexus will be easier to access than previous NSF supercomputers

Interim Executive Director of IDEaS and Regents' Professor David Sherrill notes, "Nexus brings Georgia Tech's leadership in research computing to a whole new level. It will be the first NSF Category I Supercomputer hosted on Georgia Tech's campus. The Nexus hardware and software will boost research in the foundations of AI, and applications of AI in science and engineering."

Sep. 03, 2025

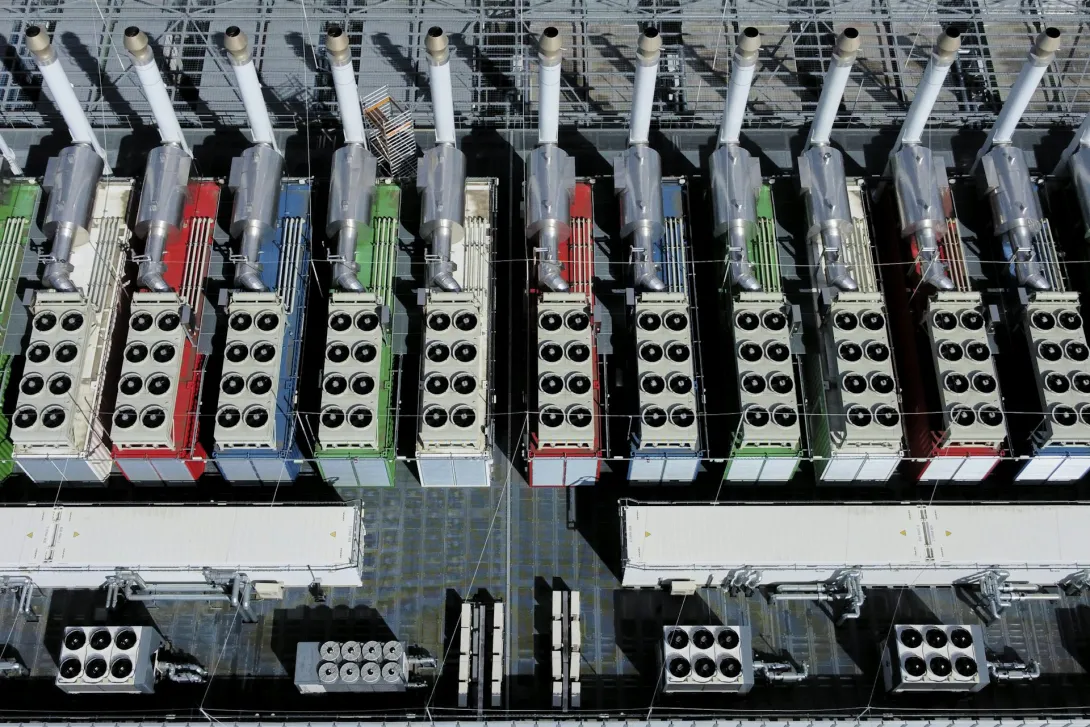

Artificial intelligence is growing fast, and so is the number of computers that power it. Behind the scenes, this rapid growth is putting a huge strain on the data centers that run AI models. These facilities are using more energy than ever.

AI models are getting larger and more complex. Today’s most advanced systems have billions of parameters, the numerical values derived from training data, and run across thousands of computer chips. To keep up, companies have responded by adding more hardware, more chips, more memory and more powerful networks. This brute force approach has helped AI make big leaps, but it’s also created a new challenge: Data centers are becoming energy-hungry giants.

Some tech companies are responding by looking to power data centers on their own with fossil fuel and nuclear power plants. AI energy demand has also spurred efforts to make more efficient computer chips.

I’m a computer engineer and a professor at Georgia Tech who specializes in high-performance computing. I see another path to curbing AI’s energy appetite: Make data centers more resource aware and efficient.

Energy and Heat

Modern AI data centers can use as much electricity as a small city. And it’s not just the computing that eats up power. Memory and cooling systems are major contributors, too. As AI models grow, they need more storage and faster access to data, which generates more heat. Also, as the chips become more powerful, removing heat becomes a central challenge.

Cooling isn’t just a technical detail; it’s a major part of the energy bill. Traditional cooling is done with specialized air conditioning systems that remove heat from server racks. New methods like liquid cooling are helping, but they also require careful planning and water management. Without smarter solutions, the energy requirements and costs of AI could become unsustainable.

Even with all this advanced equipment, many data centers aren’t running efficiently. That’s because different parts of the system don’t always talk to each other. For example, scheduling software might not know that a chip is overheating or that a network connection is clogged. As a result, some servers sit idle while others struggle to keep up. This lack of coordination can lead to wasted energy and underused resources.

A Smarter Way Forward

Addressing this challenge requires rethinking how to design and manage the systems that support AI. That means moving away from brute-force scaling and toward smarter, more specialized infrastructure.

Here are three key ideas:

Address variability in hardware. Not all chips are the same. Even within the same generation, chips vary in how fast they operate and how much heat they can tolerate, leading to heterogeneity in both performance and energy efficiency. Computer systems in data centers should recognize differences among chips in performance, heat tolerance and energy use, and adjust accordingly.

Adapt to changing conditions. AI workloads vary over time. For instance, thermal hotspots on chips can trigger the chips to slow down, fluctuating grid supply can cap the peak power that centers can draw, and bursts of data between chips can create congestion in the network that connects them. Systems should be designed to respond in real time to things like temperature, power availability and data traffic.

Break down silos. Engineers who design chips, software and data centers should work together. When these teams collaborate, they can find new ways to save energy and improve performance. To that end, my colleagues, students and I at Georgia Tech’s AI Makerspace, a high-performance AI data center, are exploring these challenges hands-on. We’re working across disciplines, from hardware to software to energy systems, to build and test AI systems that are efficient, scalable and sustainable.
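
As a rough illustration of the first two ideas above, the sketch below places a job on a chip only if that chip has thermal headroom and fits under the current power cap, then prefers the most energy-efficient candidate. It is a toy model, not a description of any real scheduler or of the AI Makerspace: the `Chip` fields, the 5 °C headroom, and all sample numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Chip:
    name: str
    temperature_c: float    # current temperature reading
    thermal_limit_c: float  # per-chip limit; varies even within one generation
    perf_per_watt: float    # relative energy efficiency of this chip
    power_draw_w: float     # power the job would draw on this chip
    busy: bool = False


def pick_chip(chips: List[Chip], power_budget_w: float,
              headroom_c: float = 5.0) -> Optional[Chip]:
    """Choose a chip using live temperature and the current power cap."""
    candidates = [
        c for c in chips
        if not c.busy
        and c.temperature_c + headroom_c <= c.thermal_limit_c  # avoid hotspots
        and c.power_draw_w <= power_budget_w                   # respect grid cap
    ]
    if not candidates:
        return None  # nothing suitable right now; the caller can queue the job
    # Among safe chips, prefer the one that does the most work per watt.
    return max(candidates, key=lambda c: c.perf_per_watt)


# Hypothetical fleet snapshot.
chips = [
    Chip("gpu-0", 88.0, 90.0, 1.4, 300.0),  # most efficient, but too hot
    Chip("gpu-1", 60.0, 85.0, 1.1, 280.0),
    Chip("gpu-2", 55.0, 90.0, 1.3, 450.0),  # exceeds a 400 W budget
    Chip("gpu-3", 62.0, 88.0, 1.2, 320.0),
]
chosen = pick_chip(chips, power_budget_w=400.0)
```

A scheduler that ignored temperature would pick `gpu-0` here and risk throttling. With the thermal and power checks in place, the job lands on `gpu-3` instead.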

Scaling With Intelligence

AI has the potential to transform science, medicine, education and more, but risks hitting limits on performance, energy and cost. The future of AI depends not only on better models, but also on better infrastructure.

To keep AI growing in a way that benefits society, I believe it’s important to shift from scaling by force to scaling with intelligence.

This article is republished from The Conversation under a Creative Commons license.

News Contact

Author:

Divya Mahajan, assistant professor of Computer Engineering, Georgia Institute of Technology

Media Contact:

Shelley Wunder-Smith

shelley.wunder-smith@research.gatech.edu

Sep. 02, 2025

In the morning, before you even open your eyes, your wearable device has already checked your vitals. By the time you brush your teeth, it has scanned your sleep patterns, flagged a slight irregularity, and adjusted your health plan. As you take your first sip of coffee, it’s already predicted your risks for the week ahead.

Georgia Tech researchers warn that this version of AI healthcare imagines a patient who is "affluent, able-bodied, tech-savvy, and always available." Those who don’t fit that mold, they argue, risk becoming invisible in the healthcare system.

The Ideal Future

In their study, published in the Proceedings of the ACM Conference on Human Factors in Computing Systems, the researchers analyzed 21 AI-driven health tools, ranging from fertility apps and wearable devices to diagnostic platforms and chatbots. They used sociological theory to understand the vision of the future these tools promote — and the patients they leave out.

“These systems envision care that is seamless, automatic, and always on,” said Catherine Wieczorek, a Ph.D. student in human-centered computing in the School of Interactive Computing and lead author of the study. “But they also flatten the messy realities of illness, disability, and socioeconomic complexity.”

Four Futures, One Narrow Lens

During their analysis, the researchers discovered four recurring narratives in AI-powered healthcare:

- Care that never sleeps. Devices track your heart rate, glucose levels, and fertility signals — all in real time. You are always being watched, because that’s framed as “care.”

- Efficiency as empathy. AI is faster, more objective, and more accurate. Unlike humans, it doesn’t get tired or biased. This pitch downplays the value of human judgment and connection.

- Prevention as perfection. A world where illness is avoided through early detection if you have the right sensors, the right app, and the right lifestyle.

- The optimized body. You’re not just healthy, you’re high-performing. The tech isn’t just treating you; it’s upgrading you.

“It’s like healthcare is becoming a productivity tool,” Wieczorek said. “You’re not just a patient anymore. You’re a project.”

Not Just a Tool, But a Teammate

This study also points to a critical transformation in which AI is no longer just a diagnostic tool; it’s a decision-maker. Described by the researchers as “both an agent and a gatekeeper,” AI now plays an active role in how care is delivered.

In some cases, AI systems are even named and personified, like Chloe, an IVF decision-support tool. “Chloe equips clinicians with the power of AI to work better and faster,” its promotional materials state. By framing AI this way — as a collaborator rather than just software — these systems subtly redefine who, or what, gets to be treated.

“When you give AI names, personalities, or decision-making roles, you’re doing more than programming. You’re shifting accountability and agency. That has consequences,” said Shaowen Bardzell, chair of Georgia Tech’s School of Interactive Computing and co-author of the study.

“It blurs the boundaries,” Wieczorek noted. “When AI takes on these roles, it’s reshaping how decisions are made and who holds authority in care.”

Calculated Care

Many AI tools promise early detection, hyper-efficiency, and optimized outcomes. But the study found that these systems risk sidelining patients with chronic illness, disabilities, or complex medical needs — the very people who rely most on healthcare.

“These technologies are selling worldviews,” Wieczorek explained. “They’re quietly defining who healthcare is for, and who it isn’t.”

By prioritizing predictive algorithms and automation, AI can strip away the context and humanity that real-world care requires.

“Algorithms don’t see nuance. It’s difficult for a model to understand how a patient might be juggling multiple diagnoses or understand what it means to manage illness, while also navigating other important concerns like financial insecurity or caregiving. They are predetermined inputs and outputs,” Wieczorek said. “While these systems claim to streamline care, they are also encoding assumptions about who matters and how care should work. And when those assumptions go unchallenged, the most vulnerable patients are often the ones left out.”

AI for ALL

The researchers argue that future AI systems must be developed in collaboration with those who don’t fit in the vision of a “perfect patient.”

“Innovation without ethics risks reinforcing existing inequalities. It’s about better tech and better outcomes for real people,” Bardzell said. “We’re not anti-innovation. But technological progress isn’t just about what we can do. It’s about what we should do — and for whom.”

Wieczorek and Bardzell aren’t trying to stop AI from entering healthcare. They’re asking AI developers to understand who they’re really serving.

Funding:

This work was supported by the National Science Foundation (Grant #2418059).

News Contact

Michelle Azriel, Sr. Writer-Editor

Sep. 02, 2025

A new version of Georgia Tech’s virtual teaching assistant, Jill Watson, has demonstrated that artificial intelligence can significantly improve the online classroom experience. Developed by the Design Intelligence Laboratory (DILab) and the U.S. National Science Foundation AI Institute for Adult Learning and Online Education (AI-ALOE), the latest version of Jill Watson integrates OpenAI’s ChatGPT and is outperforming OpenAI’s own assistant in real-world educational settings.

Jill Watson not only answers student questions with high accuracy; it also improves teaching presence and correlates with better academic performance. Researchers believe this is the first documented instance of a chatbot enhancing teaching presence in online learning for adult students.

How Jill Watson Shaped Intelligent Teaching Assistants

First introduced in 2016 using IBM’s Watson platform, Jill Watson was the first AI-powered teaching assistant deployed in real classes. It began by responding to student questions on discussion forums like Piazza using course syllabi and a curated knowledge base of past Q&As. Widely covered by major media outlets including The Chronicle of Higher Education, The Wall Street Journal, and The New York Times, the original Jill pioneered new territory in AI-supported learning.

Subsequent iterations addressed early biases in the training data and transitioned to more flexible platforms like Google’s BERT in 2019, allowing Jill to work across learning management systems such as EdStem and Canvas. With the rise of generative AI, the latest version now uses ChatGPT to engage in extended, context-rich dialogue with students using information drawn directly from courseware, textbooks, video transcripts, and more.

Future of Personalized, AI-Powered Learning

Designed around the Community of Inquiry (CoI) framework, Jill Watson aims to enhance “teaching presence,” one of three key factors in effective online learning, alongside cognitive and social presence. Teaching presence includes both the design of course materials and facilitation of instruction. Jill supports this by providing accurate, personalized answers while reinforcing the structure and goals of the course.

The system architecture includes a preprocessed knowledge base, a MongoDB-powered memory for storing conversation history, and a pipeline that classifies questions, retrieves contextually relevant content, and moderates responses. Jill is built to avoid generating harmful content and only responds when sufficient verified course material is available.
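
The flow described above (classify the question, retrieve relevant course content, moderate the reply, and decline when material is insufficient) can be sketched as a toy pipeline. This is not Jill Watson’s implementation: a plain list stands in for the MongoDB-backed memory, keyword overlap stands in for retrieval, and every name, passage, and threshold below is a hypothetical placeholder.

```python
import re
from typing import Dict, List, Optional, Tuple

# Hypothetical preprocessed knowledge base: passage id -> verified course text.
KNOWLEDGE_BASE: Dict[str, str] = {
    "syllabus": "Assignment 1 is due in week three. Late work loses ten percent per day.",
    "lecture-1": "Knowledge-based AI uses explicit representations such as frames.",
}
BLOCKLIST = {"exam answers", "password"}  # toy moderation terms
MIN_OVERLAP = 2  # toy stand-in for "sufficient verified course material"
FALLBACK = "I don't have enough course material to answer that."

memory: List[dict] = []  # stand-in for the MongoDB conversation history


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


def classify(question: str) -> str:
    """Toy classifier: flag questions containing blocklisted phrases."""
    return "blocked" if any(t in question.lower() for t in BLOCKLIST) else "course"


def retrieve(question: str) -> Tuple[Optional[str], int]:
    """Return the passage id with the largest keyword overlap and its score."""
    q = tokens(question)
    best_id, best_score = None, 0
    for doc_id, passage in KNOWLEDGE_BASE.items():
        score = len(q & tokens(passage))
        if score > best_score:
            best_id, best_score = doc_id, score
    return best_id, best_score


def moderate(reply: str) -> str:
    """Toy output check: never return text containing blocklisted phrases."""
    return FALLBACK if any(t in reply.lower() for t in BLOCKLIST) else reply


def answer(question: str) -> str:
    if classify(question) == "blocked":
        reply = FALLBACK
    else:
        doc_id, score = retrieve(question)
        # Respond only when enough verified material supports the answer.
        reply = moderate(KNOWLEDGE_BASE[doc_id]) if score >= MIN_OVERLAP else FALLBACK
    memory.append({"question": question, "reply": reply})
    return reply


on_topic = answer("When is assignment 1 due?")
off_topic = answer("What is the capital of France?")
```

The on-topic question returns the syllabus passage, while the off-topic one falls back because too little course text supports an answer. A production system would replace keyword overlap with a proper retriever and add a language model and an entailment check on top.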

Field-Tested in Georgia and Beyond

The first AI-powered teaching assistant was developed for Georgia Tech’s Online Master of Science in Computer Science (OMSCS) program. By fall 2023, Jill Watson was deployed in Georgia Tech’s OMSCS artificial intelligence course, serving more than 600 students, as well as in an English course at Wiregrass Georgia Technical College, part of the Technical College System of Georgia (TCSG).

A controlled A/B experiment in the OMSCS course allowed researchers to compare outcomes between students with and without access to Jill Watson, even though all students could use ChatGPT. The findings are striking:

- Jill Watson’s accuracy on synthetic test sets ranged from 75% to 97%, depending on the content source. It consistently outperformed OpenAI’s Assistant, which scored around 30%.

- Students with access to Jill Watson showed stronger perceptions of teaching presence, particularly in course design and organization, as well as higher social presence.

- Academic performance also improved slightly: students with Jill saw more A grades (66% vs. 62%) and fewer C grades (3% vs. 7%).

A Smarter, Safer Chatbot

While Jill Watson uses ChatGPT for natural language generation, it restricts outputs to validated course material and verifies each response using textual entailment. According to a study by Taneja et al. (2024), Jill not only delivers more accurate answers than OpenAI’s Assistant but also produces confusing or harmful content at significantly lower rates.

Jill Watson answers correctly 78.7% of the time, with only 2.7% of its errors categorized as harmful and 54.0% as confusing. In contrast, OpenAI’s Assistant demonstrates a much lower accuracy of 30.7%, with harmful failures occurring 14.4% of the time and confusing failures rising to 69.2%. Additionally, Jill Watson has a lower retrieval failure rate of 43.2%, compared to 68.3% for the OpenAI Assistant.

What’s Next for Jill

The team plans to expand testing across introductory computing courses at Georgia Tech and technical colleges. They also aim to explore Jill Watson’s potential to improve cognitive presence, particularly critical thinking and concept application. Although quantitative results for cognitive presence are still inconclusive, anecdotal feedback from students has been positive. One OMSCS student wrote:

“The Jill Watson upgrade is a leap forward. With persistent prompting I managed to coax it from explicit knowledge to tacit knowledge. Kudos to the team!”

The researchers also expect Jill to reduce instructional workload by handling routine questions and enabling more focus on complex student needs.

Additionally, AI-ALOE is collaborating with the publishing company John Wiley & Sons, Inc., to develop a Jill Watson virtual teaching assistant for one of their courses, with the instructor and university chosen by Wiley. If successful, this initiative could potentially scale to hundreds or even thousands of classes across the country and around the world, transforming the way students interact with course content and receive support.

A Georgia Tech-Led Collaboration

The Jill Watson project is supported by Georgia Tech, the US National Science Foundation’s AI-ALOE Institute (Grants #2112523 and #2247790), and the Bill & Melinda Gates Foundation.

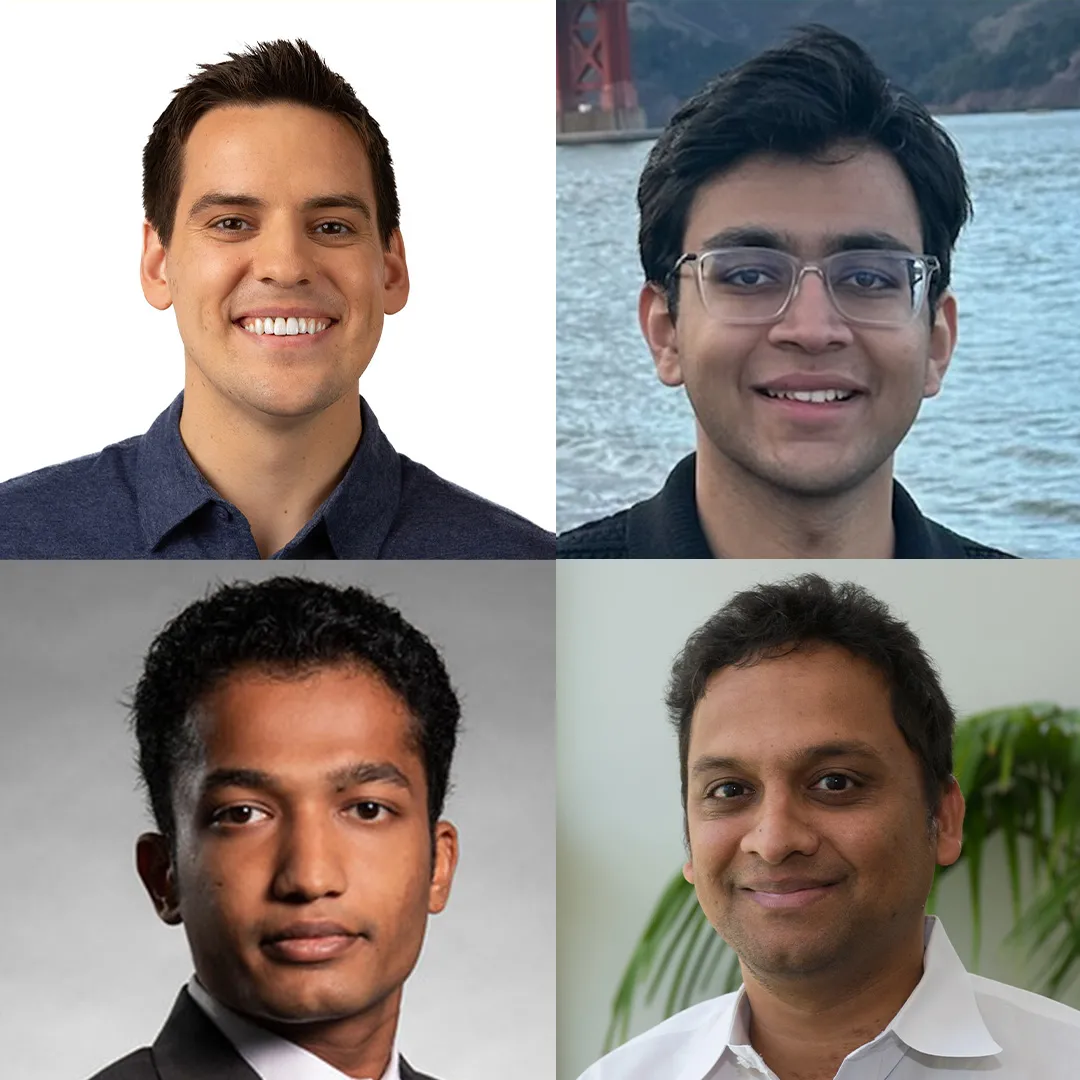

Core team members are Saptrishi Basu, Jihou Chen, Jake Finnegan, Isaac Lo, JunSoo Park, Ahamad Shapiro and Karan Taneja, under the direction of professor Ashok Goel and Sandeep Kakar. The team works under Beyond Question LLC, an AI-based educational technology startup.

News Contact

Breon Martin

Aug. 20, 2025

Daniel Yue, assistant professor of IT Management at the Scheller College of Business, has been awarded the prestigious Best Dissertation Award by the Technology and Innovation Management Division of the Academy of Management. The recognition celebrates the most impactful doctoral research in the field of business and innovation.

Yue’s dissertation, developed during his Ph.D. at Harvard Business School, explores a paradox at the heart of the AI industry: why do firms openly share their innovations, like scientific knowledge, software, and models, despite the apparent lack of direct financial return? His work sheds light on the strategic and economic mechanisms that drive this openness, offering new frameworks for understanding how firms contribute to and benefit from shared technological progress.

“We typically think of firms as trying to capture value from their innovations,” Yue explained. “But in AI, we see companies freely publishing research and releasing open-source software. My dissertation investigates why this happens and what firms gain from it.”

News Contact

Kristin Lowe (She/Her)

Content Strategist

Georgia Institute of Technology | Scheller College of Business

kristin.lowe@scheller.gatech.edu

Aug. 25, 2025

Georgia Tech researchers have designed the first benchmark that tests how well existing AI tools can interpret advice from YouTube financial influencers, also known as finfluencers.

Lead author Michael Galarnyk, Ph.D. Machine Learning ’28, joined lead authors Veer Kejriwal, B.S. Computer Science ’25, and Agam Shah, Ph.D. Machine Learning ’26, along with co-authors Yash Bhardwaj, École Polytechnique, M.S. Trustworthy and Responsible AI ’27; Nicholas Meyer, B.S. Electrical and Computer Engineering ’22 and Quantitative and Computational Finance ’24; Anand Krishnan, Stanford University, B.S. Computer Science ’27; and Sudheer Chava, Alton M. Costley Chair and professor of Finance at Georgia Tech.

Aptly named VideoConviction, the multimodal benchmark included hundreds of video clips. Experts labelled each clip with the influencer’s recommendation (buy, sell, or hold) and how strongly the influencer seemed to believe in their advice, based on tone, delivery, and facial expressions. The goal? To see how accurately AI can pick up on both the message and the conviction behind it.
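
To make the two-part labeling concrete, here is a minimal sketch of how such labels and a model’s predictions could be compared. It is not the VideoConviction code or its metric: the 1-to-3 conviction scale, the `ClipLabel` type, the `score` function, and the sample data are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Recommendation(Enum):
    BUY = "buy"
    SELL = "sell"
    HOLD = "hold"


@dataclass(frozen=True)
class ClipLabel:
    clip_id: str
    recommendation: Recommendation
    conviction: int  # hypothetical scale: 1 (weak) to 3 (strong)


def score(gold: List[ClipLabel], predicted: List[ClipLabel]) -> Dict[str, float]:
    """Accuracy on the recommendation alone, and on recommendation plus conviction."""
    if not gold:
        raise ValueError("gold labels must not be empty")
    pred_by_id = {p.clip_id: p for p in predicted}
    rec_hits = joint_hits = 0
    for g in gold:
        p = pred_by_id.get(g.clip_id)
        if p is None:
            continue  # a missing prediction counts as wrong
        if p.recommendation == g.recommendation:
            rec_hits += 1
            if p.conviction == g.conviction:
                joint_hits += 1
    n = len(gold)
    return {"recommendation_acc": rec_hits / n, "joint_acc": joint_hits / n}


# Hypothetical expert labels and model outputs.
gold = [
    ClipLabel("c1", Recommendation.BUY, 3),
    ClipLabel("c2", Recommendation.SELL, 2),
    ClipLabel("c3", Recommendation.HOLD, 1),
    ClipLabel("c4", Recommendation.BUY, 1),
]
predicted = [
    ClipLabel("c1", Recommendation.BUY, 3),   # both correct
    ClipLabel("c2", Recommendation.SELL, 3),  # right call, wrong conviction
    ClipLabel("c3", Recommendation.HOLD, 1),  # both correct
    ClipLabel("c4", Recommendation.SELL, 1),  # wrong call
]
result = score(gold, predicted)
```

Scoring the joint label separately shows when a model gets the call right but misreads how strongly the speaker believes it.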

“Our work shows that financial reasoning remains a challenge for even the most advanced models,” said Michael Galarnyk, lead author. “Multimodal inputs bring some improvement, but performance often breaks down on harder tasks that require distinguishing between casual discussion and meaningful analysis. Understanding where these models fail is a first step toward building systems that can reason more reliably in high stakes domains.”

News Contact

Kristin Lowe (She/Her)

Content Strategist

Georgia Institute of Technology | Scheller College of Business

kristin.lowe@scheller.gatech.edu
