Nov. 17, 2025

“How will AI kill Creature?”

That was the question posed to Scheller College of Business Evening MBA students Katie Bowen (’25), Ellie Cobb (’26), and Christopher Jones (’26) in a marketing practicum course that paired them with Creature, a brand, product, and marketing transformation studio.

For 10 weeks, the students worked as consultants on a project that challenged them to rethink the role of artificial intelligence in creative industries. Course instructor Jarrett Oakley, director of Marketing at TOTO USA, guided the students as they developed strategies to help Creature navigate the evolving landscape of AI-driven marketing.

Business School Meets Real Business

“Nothing accelerates the value of a business school education like applying it in real time to real businesses,” Oakley said. “This course mirrored a consulting engagement, turning classroom learning into actionable expertise through direct collaboration with local firms. It was designed to spark creative thinking, build confidence, and bridge theory with practice.”

What began as a traditional strategic analysis quickly evolved into a forward-looking exploration of AI’s impact on branding, user experience, and performance creative. “Our team realized early on that AI wasn’t a threat but a powerful tool,” the students shared. “We found that AI’s real impact lies not in replacing creativity, but in reshaping expectations, accelerating timelines, and redefining performance standards. It also gives forward-thinking agencies like Creature the opportunity to guide clients still catching up to the AI curve.”

Creature’s founders, Margaret Strickland and Matt Berberian, welcomed the collaboration. “We solve creative challenges across brand, product, and performance,” said Strickland. “AI is transforming each of these areas. The students helped us see how to stay ahead of the curve.”

Students applied frameworks like SWOT, Porter’s Five Forces, and the G-STIC model to diagnose challenges and develop actionable strategies. Weekly meetings with Creature allowed for iterative feedback and refinement.

One of the team’s most surprising insights came from primary research: many agencies hesitate to disclose their use of AI, fearing clients will demand lower prices. “We recommended Creature define and share their AI philosophy,” said the students. “Clients want transparency and innovation, and they’ll choose partners who embrace AI, not hide from it.”

Creature took the advice to heart. Since the project concluded, the firm has launched a new AI consulting offering, SNSE by Creature, and implemented automation across operations, resulting in a 21% boost in efficiency. They’ve also adopted an AI manifesto to guide future initiatives.

A Transformative Student Experience

Katie Bowen, Evening MBA ’25

“This project let us apply MBA concepts to a real-world business challenge. We dove into Creature’s business and tailored our analysis to their needs. It pushed us to think critically about how companies stay competitive when AI tools are widely accessible. Using strategy, innovation, and marketing frameworks, we bridged theory and practice to deliver forward-looking recommendations.”

Ellie Cobb, Evening MBA ’26

“This project strengthened my ability to use AI effectively in both personal and professional contexts—not just knowing how to use it, but when not to. Exploring such a fast-evolving topic made me more agile and open-minded, ready to follow where research and emerging trends lead.”

Christopher Jones, Evening MBA ’26

“The Marketing Practicum with Creature was an eye-opening experience that deepened my understanding of AI’s impact on business. It sharpened my critical thinking as I navigated conflicting information about AI, and gave me practical insight into business strategy, from integrating new technology to managing innovation and diversifying product offerings.”

Education With Impact

Oakley believes the practicum will have lasting impact. “These students now understand how traditional marketing strategy integrates with emerging AI capabilities. They’re ready to lead in a rapidly evolving industry.”

As AI continues to reshape marketing, partnerships like the one between Scheller and Creature demonstrate the power of collaboration, innovation, and education in preparing future leaders for whatever comes next.

News Contact

Kristin Lowe (She/Her)

Content Strategist

Georgia Institute of Technology | Scheller College of Business

kristin.lowe@scheller.gatech.edu

Nov. 14, 2025

311 chatbots make it easier for people to report issues to their local government without long wait times on the phone. However, a new study finds that the technology might inhibit civic engagement.

311 systems allow residents to report potholes, broken fire hydrants, and other municipal issues. In recent years, the use of artificial intelligence (AI) to provide 311 services to community residents has boomed across city and state governments. This includes an artificial virtual assistant (AVA) developed by third-party vendors for the City of Atlanta in 2023.

Through survey data, researchers from Tech’s School of Interactive Computing found that many residents view 311 chatbots positively. Beyond eliminating long wait times on the phone, the chatbots give residents quick answers to questions about permit applications, waste collection, and other common topics.

However, the study, which was conducted in Atlanta, indicates that 311 chatbots could be causing residents to feel isolated from public officials and less aware of what’s happening in their community.

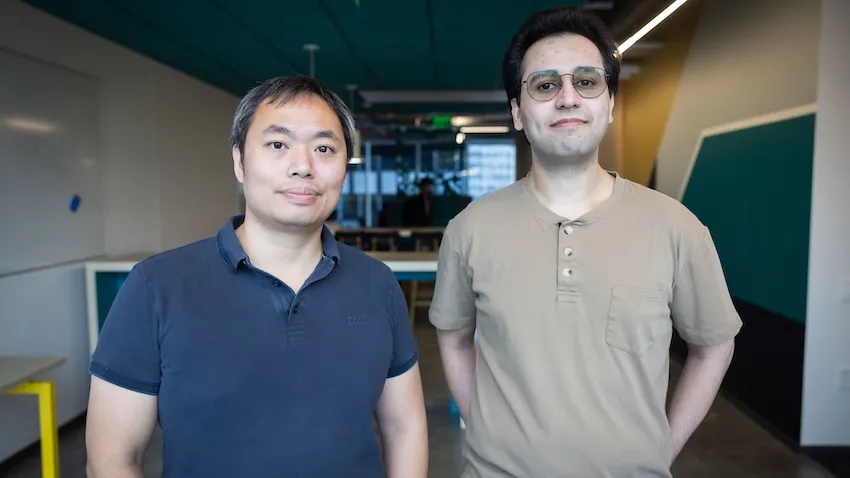

Jieyu Zhou, a Ph.D. student in the School of IC, said it doesn’t have to be that way.

Uniting Communities

Zhou and her advisor, Assistant Professor Christopher MacLellan, published a paper at the 2025 ACM Designing Interactive Systems (DIS) Conference that focuses on improving public service chatbot design and amplifying their civic impact. They collaborated with Professor Carl DiSalvo, Associate Professor Lynn Dombrowski, and graduate students Rui Shen and Yue You.

Zhou said 311 chatbots have the potential to be agents that drive community organization and improve quality of life.

“Current chatbots risk isolating users in their own experience,” Zhou said. “In the 311 system, people tend to report their own individual issues but lose a sense of what is happening in their broader community.

“People are very positive about these tools, but I think there’s an opportunity as we envision what civic chatbots could be. It’s important for us to emphasize that social element — engaging people within the community and connecting them with government representatives, community organizers, and other community members.”

Zhou and MacLellan said 311 chatbots can leave users wondering if others in their communities share their concerns.

“If people are at a town hall meeting, they can get a sense of whether the problems they are experiencing are shared by others,” Zhou said. “We can’t do that with a chatbot. It’s like an isolated room, and we’re trying to open the doors and the windows.”

Adding a Human Touch

In their paper, the researchers note that one of the biggest criticisms of 311 chatbots is that they can’t replace interpersonal interaction.

Unlike chatbots, people working in local government offices are likely to:

- Have direct knowledge of issues

- Provide appropriate referrals

- Empathize with the resident’s concerns

MacLellan said residents are likely to grow frustrated with a chatbot when reporting issues that require this level of contextual knowledge.

One person in the researchers’ survey noted that the chatbot they used didn’t understand that their report was about a sidewalk issue, not a street issue.

“Explaining such a situation to a human representative is straightforward,” MacLellan said. “However, when the issue being raised does not fall within any of the categories the chatbot is built to address, it often misinterprets the query and offers information that isn’t helpful.”

The researchers offer some design suggestions that can help chatbots foster community engagement and improve community well-being:

- Escalation. Regarding the sidewalk report, the chatbot did not offer a way to escalate the query to a human who could resolve it. Zhou said that this is a feature that chatbots should have but often lack.

- Transparency. Chatbots could provide details about recent and frequently reported community issues. They should inform users early in the call process about known problems to help avoid an overload of user complaints.

- Education. Chatbots can keep users updated about what’s happening in their communities.

- Collective action. Chatbots can help communities organize and gather ideas to address challenges and solve problems.
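The escalation and transparency suggestions above can be illustrated with a small routing sketch. This is only an illustration under stated assumptions: the category names, keywords, and the `route_report` function are hypothetical and are not drawn from the researchers’ paper or from any deployed 311 system.

```python
from collections import Counter

# Hypothetical categories and keywords, for illustration only.
CATEGORIES = {
    "pothole": ["pothole"],
    "sidewalk": ["sidewalk"],
    "hydrant": ["hydrant"],
}


def route_report(text, recent_reports):
    """Route a resident's report to a category, or escalate it to a human.

    `recent_reports` is a list of category names from earlier reports; it is
    used to tell the resident how many neighbors raised a similar issue.
    """
    lowered = text.lower()
    matches = [
        name
        for name, keywords in CATEGORIES.items()
        if any(word in lowered for word in keywords)
    ]
    if len(matches) != 1:
        # No clear category, or an ambiguous one: hand off rather than guess.
        return {"action": "escalate_to_human", "category": None, "similar_reports": 0}
    category = matches[0]
    similar = Counter(recent_reports)[category]
    return {"action": "file_report", "category": category, "similar_reports": similar}


filed = route_report("Cracked sidewalk on Elm St", ["sidewalk", "pothole", "sidewalk"])
escalated = route_report("Broken swing at the playground", [])
```

In this sketch, a report that fits no known category is handed to a person instead of being forced into the wrong one, and a filed report comes back with a count of similar recent reports so the resident can see whether the concern is shared.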

“Government agencies may focus mainly on fixing individual issues,” Zhou said. “But recognizing community-level patterns can inspire collective creativity. For example, one participant suggested that if many people report a broken swing at a playground, it could spark an initiative to design a new playground together, going far beyond just fixing it.”

These are just a few examples of what the researchers argue 311 services were originally designed to achieve.

“Communities were already collaborating on identifying and reporting issues,” Zhou said. “These chatbots should reflect the original intentions and collaboration practices of the communities they serve.

“Our research suggests we can increase the positive impact of civic chatbots by including social aspects within the design of the system, connecting people, and building a community view.”

Nov. 12, 2025

One of the top conferences for AI and computer games is recognizing a School of Interactive Computing professor with its first-ever test-of-time award.

At its event this week in Alberta, Canada, the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment (AIIDE) is honoring Professor Mark Riedl. The award also honors University of Utah Professor and Division of Games Chair Michael Young, Riedl’s Ph.D. advisor.

Riedl studied under Young at North Carolina State University.

Their 2005 paper, From Linear Story Generation to Branching Story Graphs, highlighted the challenges of using AI to create interactive gaming narratives in which user actions influence the story’s progression.

In 2005, computer game systems that supported linear, non-branching games were widely used. Riedl introduced an innovative mathematical formula for interactive stories ranging from choose-your-own-adventure novels to modern computer games.

“We didn’t use the term ‘generative AI’ back then, but I was working on AI for the generation of creative artifacts,” Riedl said. “This was before we had practical deep learning or large language models.

“One of the reasons this paper is still relevant 20 years later is that it didn’t just present a technology, it attempted to provide a framework for solving a grand challenge in AI.”

That challenge is still ongoing, Riedl said. Game designers continue to struggle with balancing story coherence against the amount of narrative control afforded to users.

“When users exercise a high degree of control within the environment, it is likely that their actions will change the state of the world in ways that may interfere with the causal dependencies between actions as intended within a storyline,” Riedl and Young wrote in the paper.

“Narrative mediation makes linear narratives interactive. The question is: Is the expressive power of narrative mediation at least as powerful as the story graph representation?”
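For readers unfamiliar with the story graph representation named in the quote, it can be pictured as a choose-your-own-adventure structure: nodes are story segments and edges are user choices. The sketch below is only an illustration, not the algorithm from Riedl and Young’s paper; the scene names, the `story_graph` dictionary, and the `linear_stories` helper are hypothetical, and the graph is assumed to have no cycles.

```python
def linear_stories(graph, node, path=()):
    """Yield every path from `node` to an ending; each path is one linear story."""
    path = path + (node,)
    choices = graph.get(node, {})
    if not choices:
        # A node with no outgoing choices is an ending.
        yield path
        return
    for next_node in choices.values():
        yield from linear_stories(graph, next_node, path)


# Hypothetical branching story: each key is a scene, each value maps a
# user choice to the scene that follows it.
story_graph = {
    "start": {"open the door": "hallway", "stay in the room": "ending_wait"},
    "hallway": {"go left": "ending_garden", "go right": "ending_cellar"},
}

stories = list(linear_stories(story_graph, "start"))
```

Each path the function yields is a complete linear story, so a single branching graph stands in for a whole set of linear narratives, one per sequence of user choices.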

AIIDE is being held this week at the University of Alberta in Edmonton, Alberta. Riedl will receive the award on Wednesday.

Nov. 03, 2025

A new deep learning architectural framework could boost the development and deployment efficiency of autonomous vehicles and humanoid robots. The framework will lower training costs and reduce the amount of real-world data needed for training.

World foundation models (WFMs) enable physical AI systems to learn and operate within synthetic worlds created by generative artificial intelligence (genAI). For example, these models use predictive capabilities to generate up to 30 seconds of video that accurately reflects the real world.

The new framework, developed by a Georgia Tech researcher, enhances the processing speed of the neural networks that simulate these real-world environments from text, images, or video inputs.

The neural networks that make up the architectures of large language models like ChatGPT and visual models like Sora process contextual information using the “attention mechanism.”

Attention refers to a model’s ability to focus on the most relevant parts of input.

The Neighborhood Attention Extension (NATTEN) allows models that require GPUs or high-performance computing systems to process information and generate outputs more efficiently.
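The idea behind neighborhood attention can be shown with a minimal pure-Python sketch. It is not NATTEN’s API or its GPU implementation. The function names are illustrative, and the edge handling (shifting the window so each token still sees a full set of neighbors) is an assumption of this sketch. In standard attention, every token compares itself with every other token. In neighborhood attention, each token compares itself only with a fixed window of nearby tokens, so the work grows with sequence length times window size rather than with sequence length squared.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query over the given keys and values."""
    scale = math.sqrt(len(query))
    scores = [sum(a * b for a, b in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * val[j] for w, val in zip(weights, values)) for j in range(dim)]


def full_attention(q, k, v):
    """Every token attends to every token."""
    return [attend(q[i], k, v) for i in range(len(q))]


def neighborhood_attention_1d(q, k, v, window):
    """Each token attends only to the `window` nearest tokens (window must be odd)."""
    n = len(q)
    assert window % 2 == 1 and window <= n
    half = window // 2
    out = []
    for i in range(n):
        # Shift the window at the sequence edges so it always holds `window` tokens.
        start = min(max(i - half, 0), n - window)
        out.append(attend(q[i], k[start:start + window], v[start:start + window]))
    return out


tokens = [[float(i), 1.0] for i in range(5)]
local = neighborhood_attention_1d(tokens, tokens, tokens, window=3)
everything = neighborhood_attention_1d(tokens, tokens, tokens, window=5)
```

When the window covers the whole sequence, the result matches full attention. Shrinking the window reduces the number of comparisons each token has to make.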

Processing speeds can increase by up to 2.6 times, said Ali Hassani, a Ph.D. student in the School of Interactive Computing and the creator of NATTEN. Hassani is advised by Associate Professor Humphrey Shi.

Hassani is also a research scientist at Nvidia, where he introduced NATTEN to Cosmos — a family of WFMs the company uses to train robots, autonomous vehicles, and other physical AI applications.

“You can map just about anything from a prompt or an image or any combination of frames from an existing video to predict future videos,” Hassani said. “Instead of generating words with an LLM, you’re generating a world.

“Unlike LLMs that generate a single token at a time, these models are compute-heavy. They generate many images — often hundreds of frames at a time — so the models put a lot of work on the GPU. NATTEN lets us decrease some of that work and proportionately accelerate the model.”

Nov. 13, 2025

A new study from Georgia Tech’s Jimmy and Rosalynn Carter School of Public Policy is one of the first to estimate how changes in productivity due to AI will affect energy consumption.

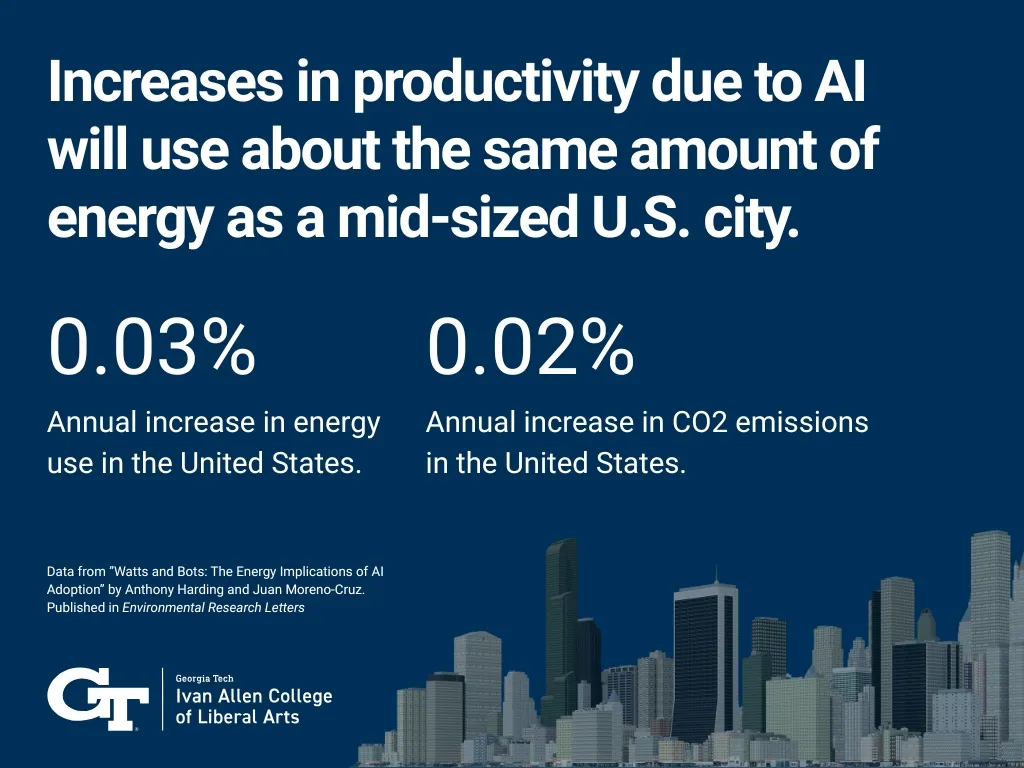

The paper, written by Anthony Harding and co-author Juan Moreno-Cruz at the University of Waterloo, suggests that greater productivity due to AI will result in a 0.03% annual increase in energy use in the United States and a 0.02% increase in CO2 emissions. That’s about equal to the yearly electricity use of a mid-sized U.S. city.

“If AI is as transformational as some expect it to be, it makes it even more important to think about the knock-on effects throughout the economy, beyond just the demands of the technology itself,” Harding said. “U.S. energy demand has stabilized since the mid-2000s. There is potential for AI to disrupt this, but there is also large uncertainty.”

Nov. 11, 2025

School of Mathematics Professor Anton Leykin is part of a research team selected to receive support through the AI for Math Fund, a new grant program created to accelerate the development of artificial intelligence (AI) and machine learning tools for mathematics.

“This grant gives me a foothold in a new world where AI can be used in a very concrete way,” says Leykin. “It’s an opportunity to move beyond the hype and develop tools that truly benefit mathematical research.”

With a total of $18 million in inaugural grants to 29 project teams, the AI for Math Fund backs initiatives that create open-source tools, expand high-quality datasets for AI training, and make advanced systems more accessible to mathematicians. The fund received 280 grant applications from researchers and mathematicians worldwide.

Building bridges

Leykin’s global team includes researchers from the University of South Carolina, University of Warwick, and Cornell University. Their project, “Bridging Proof and Computation: For a Verified Lean-Macaulay2 Interface,” aims to connect two powerful systems: Lean, a platform for assisting and formalizing mathematical proofs, and Macaulay2, a computational algebra system widely used in research.

By developing a native interface — a built-in connection that allows the two systems to work together without external tools — and a Lean-based domain-specific language, the project will enable communication between these systems. This will allow Lean users to formulate tactics that involve sophisticated computation done by algorithms implemented in Macaulay2; in return, Macaulay2 users can formalize computer-assisted proofs via Lean with a little help from AI.

“This integration has the potential to transform how mathematicians work,” says Leykin. “It will not only connect Lean and Macaulay2 but also lay the groundwork for a general interface that could benefit other computer algebra systems in the future.”

His goal is to create a robust proof-assistance system where AI can help generate strategies and validate proofs, driving progress in areas that require both computational power and rigorous verification.

About the AI for Math Fund

A joint initiative of Renaissance Philanthropy and founding donor XTX Markets, the AI for Math Fund is one of the largest philanthropic commitments supporting the development of AI and machine learning tools to advance mathematics. Individual grants range up to $1 million for 24 months of work on open-source projects and research.

News Contact

Laura Segraves Smith, writer

Oct. 27, 2025

Pop culture has often depicted robots as cold, metallic, and menacing, built for domination, not compassion. But at Georgia Tech, the future of robotics is softer, smarter, and designed to help.

“When people think of robots, they usually imagine something like The Terminator or RoboCop: big, rigid, and made of metal,” said Hong Yeo, the G.P. “Bud” Peterson and Valerie H. Peterson Professor in the George W. Woodruff School of Mechanical Engineering. “But what we’re developing is the opposite. These artificial muscles are soft, flexible, and responsive — more like human tissue than machine.”

Yeo’s latest study, published in Materials Horizons, explores AI-powered muscles made from lifelike materials paired with intelligent control systems. The technology learns from the body and adapts in real time, creating motion that feels natural, responsive, and safe enough to support recovery.

Muscles That Think, Materials That Feel

Traditional robotics relies on steel, wires, and motors, but rarely captures the nuances of human motion. Yeo’s research takes a different approach. He uses hierarchically structured fibers, which are flexible materials built in layers, much like muscle and tendon. They can sense, adapt, and even “remember” how they’ve moved before.

Yeo trains machine learning algorithms to adjust those pliable materials in real time with the right amount of force or flexibility for each task.

“These muscles don’t only respond to commands,” Yeo said. “They learn from experience. They can adapt and self-correct, which makes motion smoother and more natural.”

The result of that research is deeply human. For someone recovering from a stroke or limb loss, each deliberate movement rebuilds not just strength — it rebuilds confidence, independence, and a sense of self.

A Glove That Gives Freedom Back

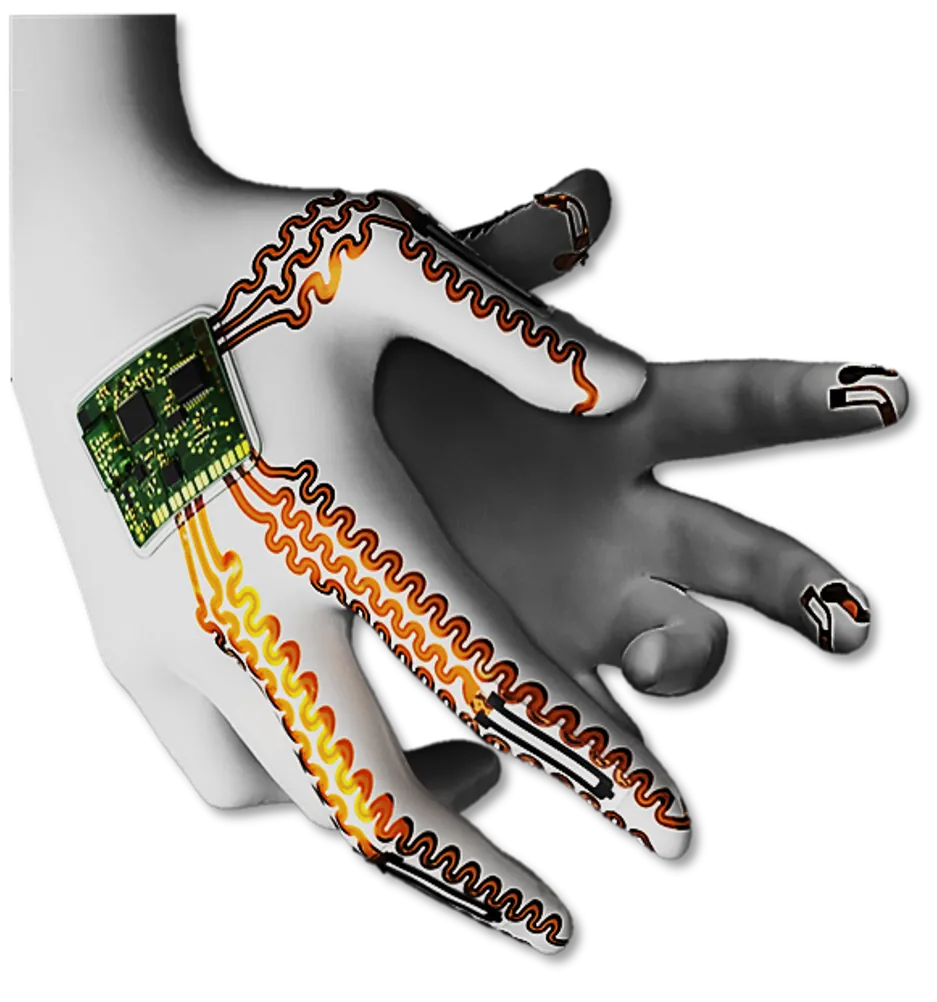

One of the first real-world applications is a prosthetic glove powered by artificial muscles (published in ACS Nano, 2025), a device that behaves more like a helping hand than a mechanical tool. Traditional prosthetics rely on rigid motors and preset motions, but Yeo’s design mirrors the natural give-and-take of real muscle.

Inside the glove, thin layers of stretchable fibers and sensors contract, twist, and flex in sync with the wearer’s intent. The glove can fine-tune grip strength, reduce tremors, and respond instantly to the user’s movements, bringing dexterity back to everyday life.

That kind of precision matters most in the smallest tasks: fastening a button, lifting a glass, holding a child’s hand.

“These aren’t just movements,” Yeo said. “They’re freedoms.”

For Yeo, the idea of restoring freedom through movement has driven his research from the very beginning.

A Mission Rooted in Loss

Yeo’s work is deeply personal. His path to biomedical engineering began with loss: the sudden death of his father while Yeo was still in college. That moment reshaped his sense of purpose, redirecting his focus from machines that move to technologies that heal.

“Initially, I was thinking about designing cars,” he said. “But after my father’s death, I kind of woke up. Maybe I could do something that helps save someone’s life.”

That purpose continues to guide his lab’s work today, building technologies that help people recover what they’ve lost.

Achieving that vision, however, means tackling some of engineering’s toughest challenges.

Soft Machines, Hard Problems

Creating lifelike muscles isn’t easy. They need to be soft but strong, responsive but safe. And they must avoid triggering the body’s immune system. That means building materials that can survive inside the body — and learn to belong there.

“We always think about not only function, but adaptability,” Yeo said. “If it’s going to be part of someone’s body, it has to work with them, not against them.”

His team calibrates these synthetic fibers like precision instruments — tested, adjusted, and re-tuned until they operate in sync with the body’s natural movements. Over time, they develop a kind of “muscle memory,” adapting fluidly to changing conditions. That dynamic adaptability, Yeo explained, is what separates a machine from a prosthetic that truly feels alive.

From Collaboration to Innovation

Solving problems this complex requires more than one discipline. It takes an entire ecosystem of collaboration. Yeo’s lab brings together experts in mechanical engineering, materials science, medicine, and computer science to design smarter, safer devices.

“You can’t solve this kind of problem in isolation,” he said. “We need all of it — polymers, artificial intelligence, biomechanics — working together.”

That collaborative model is supported by the National Science Foundation (NSF), the National Institutes of Health, and Georgia Tech’s Institute for Matter and Systems. In 2023, Yeo received a $3 million NSF grant to train the next generation of engineers building smart medical technology.

His team now works closely with healthcare providers and industry partners to bring these devices out of the lab and into patients’ lives.

The Future You Can Feel

The future of robotics, according to Yeo, won’t be defined by power or complexity but by feel.

“If it feels foreign, people won’t use it,” he said. “But if it feels like part of you, that’s when it can truly change lives.”

It’s the opposite of The Terminator, where machines replace us. Yeo is designing these machines to help us reclaim ourselves.

News Contact

Michelle Azriel, Writer/Editor, Research Communications

Oct. 23, 2025

Building on more than a year of successful collaboration, Dolby Labs has extended its investment in Georgia Tech’s College of Computing for a second year, donating $600,000 to support cutting-edge research.

Dolby and the College each have laboratories in the Coda building, which promotes collaboration at various levels. The audiovisual technology company supported seven research projects last year, spanning computing systems and AI modeling. The partnership also includes events such as this month’s co-hosted student seminar.

“This partnership has reinforced the importance of taking an interdisciplinary approach to our research,” said Vivek Sarkar, Dean of Computing, who worked in industry for two decades before returning to academia.

“I’d like to see us go even deeper in finding ways to combine faculty from different schools and different research areas to work with one partner.”

Yalong Yang, an assistant professor at Georgia Tech’s School of Interactive Computing, is one of the researchers who received Dolby support last year. He and his lab have been working on creating interactive, immersive versions of stories from the New York Times.

“We’re particularly interested in the engagement side,” Yang said. “That’s what Dolby’s business is about.” Yang and his collaborators have been showing the immersive stories to test subjects while collecting data on heart rate and eye movement.

These collaborations have resulted in several published papers. The code developed is released as open source, enabling anyone to use it. Meanwhile, Dolby scientists can tailor the code for their own needs.

“We deliberately look for ambitious, farther-looking projects,” said Shriram Revankar, senior vice president of Dolby’s Advanced Technology Group.

“These are the risks that academia can take and do well in, because they have constant access to new students and other faculty.”

At its core, the partnership is about developing relationships among faculty, students, and Dolby, according to Humphrey Shi.

“The students get experience in solving real-world problems for an international corporation, and Dolby’s researchers expand their knowledge through connecting with Tech faculty,” said Shi, an associate professor in interactive computing whose research has also been supported by Dolby.

News Contact

Ann Claycombe

Communications Director

Georgia Tech College of Computing

claycombe@cc.gatech.edu

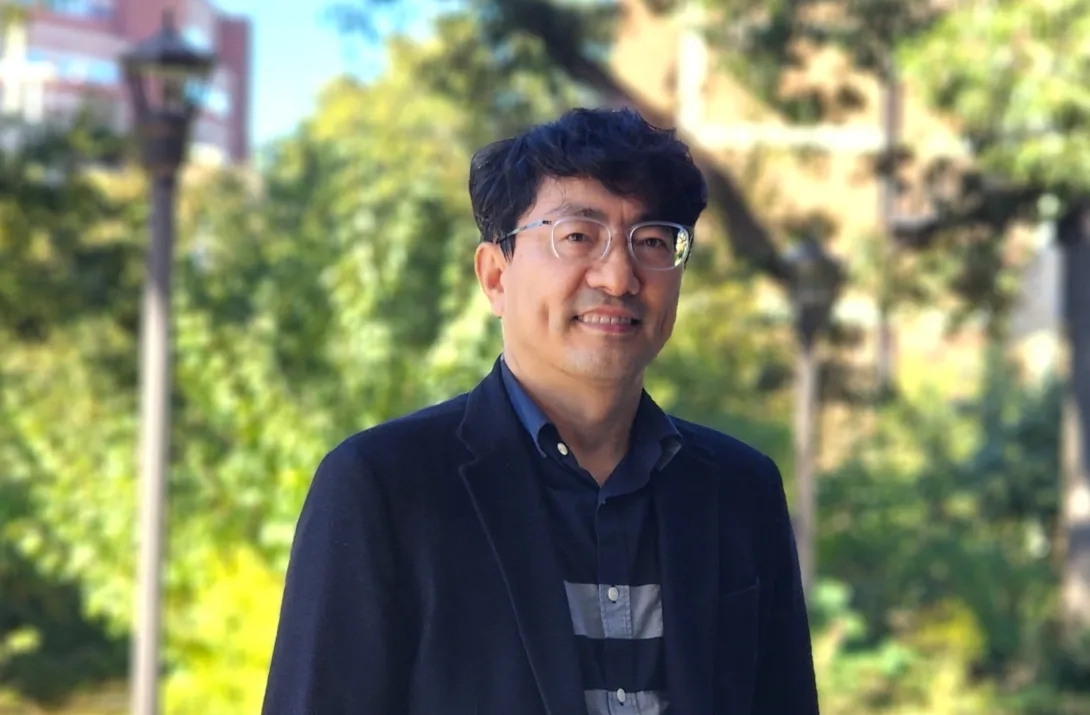

Oct. 21, 2025

The Center for 21st Century Universities (C21U) is excited to announce that Sanghyun Jang will join Georgia Tech as a C21U visiting research scholar starting on October 20, 2025. He comes from South Korea, where he served as director of the education data center at the Korea Education and Research Information Service (KERIS). For one year, he will be based in Atlanta, Georgia, collaborating with C21U faculty and researchers to develop AI-based learning systems and leverage educational data to improve student outcomes.

“We are pleased to welcome Sanghyun Jang to C21U as our visiting scholar. His leadership and expertise in Korea’s national digital and AI education initiatives offer an invaluable global perspective to our goal of promoting innovation in lifelong learning. His visit will assist us in exploring new models of AI-enabled education that link K–12, higher education, and lifelong learners worldwide,” said C21U Executive Director Stephen Harmon.

Sanghyun has extensive experience leading national education data initiatives in South Korea and collaborating with international organizations like UNESCO and the World Bank. This aligns well with C21U’s mission to promote personalized, data-driven teaching and learning at scale. His expertise highlights our commitment to global collaboration and leadership in AI-powered education.

“I deeply appreciate the opportunity to join C21U and collaborate with Georgia Tech’s outstanding researchers. Their innovative work in AI and lifelong learning provides a strong foundation for meaningful international collaboration and innovation. I am excited that this visiting scholar experience will help build a global network for AI and education research by connecting KERIS, Korean universities, and Georgia Tech,” said Sanghyun Jang.

C21U’s team looks forward to sharing updates on Sanghyun’s work throughout the year. Stay tuned for upcoming events and research highlights.

Sanghyun Jang holds a doctorate in computer engineering from Dongguk University.

News Contact

Yelena M. Rivera-Vale, M.A. (she/her(s)/ella)

Communications Program Manager

C21U, College of Lifetime Learning

Georgia Institute of Technology

Oct. 20, 2025

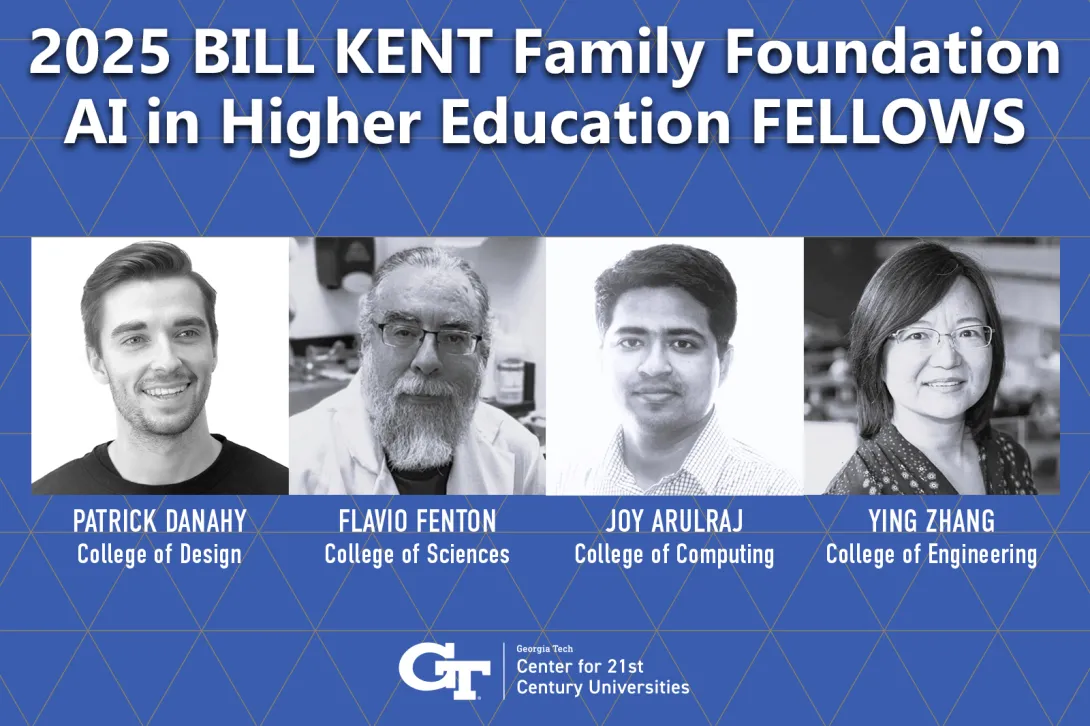

The Center for 21st Century Universities (C21U) has announced the inaugural cohort of Bill Kent Family Foundation AI in Higher Education Faculty Fellows for 2025–26. This C21U-led fellowship program supports faculty projects that explore innovative, ethical, and impactful uses of artificial intelligence in teaching and learning.

The fellows are Flavio Fenton, a professor in the College of Sciences; Joy Arulraj from the College of Computing; Patrick Danahy from the College of Design; and Ying Zhang, professor and associate chair of the School of Electrical and Computer Engineering in the College of Engineering. Each fellow will lead a project that advances AI’s role in higher education.

“We deeply appreciate the generosity of the Dr. Bill Kent family in establishing this first philanthropic gift to our new College. Their generous support will allow us to encourage practical applications of AI and foster an appreciation for its ethical use,” said William Gaudelli, inaugural dean of the Georgia Tech College of Lifetime Learning. “This Fellowship will ensure we grow and learn about its use thoughtfully, developing highly innovative and engaging pedagogical experiences for all life’s stages.”

Arulraj’s TokenSmith: Fast, Local, Citable LLM Tutoring introduces a privacy-conscious AI tutoring system for database courses that provides verifiable, course-aligned answers. Fenton’s AI as a Learning Assistant develops AI-enabled instructional modules for physics, neuroscience, and scientific writing to improve conceptual understanding and promote ethical AI use. Danahy’s AI-Enabled Design Ideation and Robotic 3D Printing with Open-Source Platforms integrates AI-driven design and robotic fabrication into architecture education while addressing ethics and sustainability. Zhang’s AI-Enabled Personalized Engineering Education expands personalized learning in large engineering courses through AI tutoring frameworks and integrates AI literacy into the curriculum.

“The Bill Kent Family Fellowship gives our faculty the resources and flexibility to experiment with AI in ways that directly benefit students and inform the future of higher education,” said Stephen Harmon, executive director of C21U.

The fellowship received 21 applications spanning all seven Georgia Tech colleges, a pool that reflects the educational AI expertise within those units and across the Institute as a whole. Fellows will develop and implement their projects during the 2025–26 academic year and share outcomes through C21U Learning Labs and other campus events.

The Bill Kent Family Foundation partnered with C21U to establish this fellowship and support faculty innovation at Georgia Tech. Through this program, the Foundation invests in projects that explore responsible and impactful uses of artificial intelligence in teaching and learning. By funding this initiative, the Foundation aims to empower educators to develop scalable instructional models, promote ethical AI practices, and prepare students for a future shaped by emerging technologies.

News Contact

Yelena M. Rivera-Vale, M.A. (she/her(s)/ella)

Communications Program Manager

Center for 21st Century Universities

College of Lifetime Learning

Georgia Institute of Technology
