Jan. 23, 2025

The Space Research Institute (SRI) at Georgia Tech has initiated an internal search for its inaugural executive director. This new Interdisciplinary Research Institute (IRI) will build upon the foundation laid by the Space Research Initiative.

The SRI is dedicated to advancing cutting-edge research in space-related fields, fostering interdisciplinary collaborations, and establishing strong partnerships with industry, government, academic, and international organizations. As head of the newly established IRI, the executive director will guide the Institute's strategic vision, nurture a culture of innovation, and champion initiatives that position Georgia Tech, via the SRI, as a global leader in space research and exploration.

The SRI is composed of faculty and staff across campus who share a common interest in space exploration and discovery. Collectively, SRI researchers will study a wide range of space-related topics, including how space relates to the human perspective, and the Institute will serve as the hub for all things space-related at Georgia Tech. It will connect all the research institutes, labs, facilities, and colleges to pioneer the conversation about space in the state of Georgia. By working hand-in-hand with academics, business partners, and students, we are committed to staying at the cutting edge of innovation.

Click here to learn more about this position and how to apply.

News Contact

For further details, please contact Rob Kadel.

Jan. 21, 2025

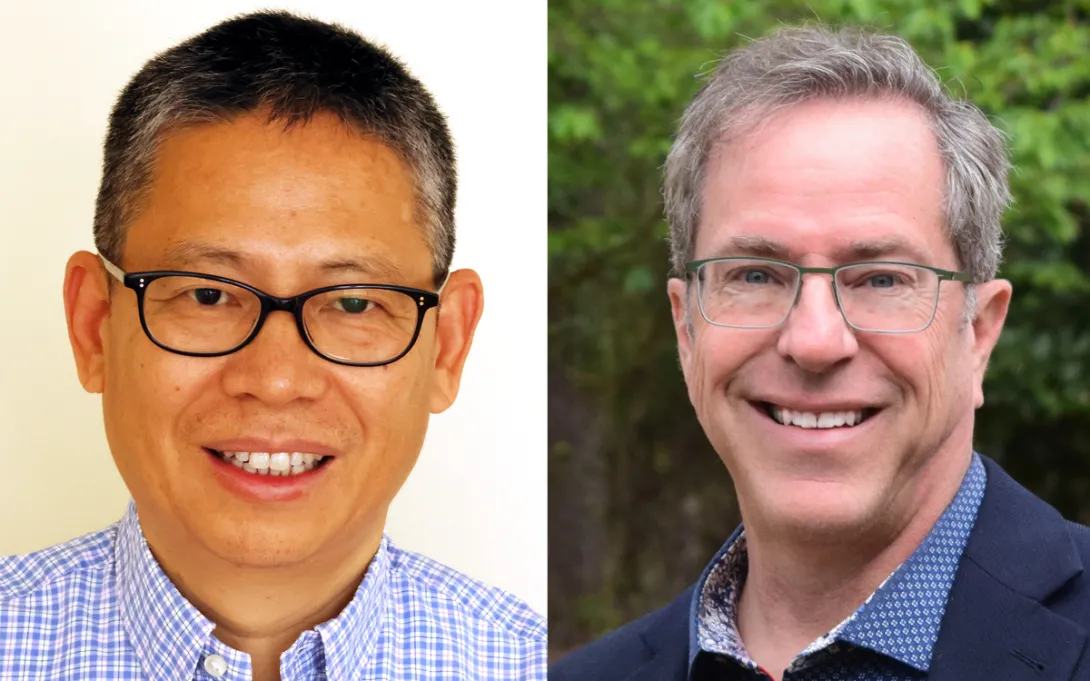

George White, senior director of strategic partnerships, has been named a member of the inaugural National Semiconductor Technology Center (NSTC) Workforce Advisory Board (WFAB).

“The appointment to the Natcast Workforce Advisory Board is truly an honor and represents an opportunity for myself and my esteemed colleagues to help increase U.S. competitiveness in this most consequential sector,” said White.

Comprising U.S. leaders from the private sector, higher education, workforce development organizations, the Department of Commerce, and other federal agencies who are focused on growing the semiconductor workforce, the WFAB will support the efforts of the recently established NSTC Workforce Center of Excellence (WCoE). It will offer critical input on national and regional workforce development strategies to ensure WCoE initiatives are employer-driven, worker-centered, and responsive to real-time industry challenges.

Read the full release from Natcast

Jan. 16, 2025

A researcher in Georgia Tech’s School of Interactive Computing has received the nation’s highest honor given to early career scientists and engineers.

Associate Professor Josiah Hester was one of 400 people awarded the Presidential Early Career Award for Scientists and Engineers (PECASE), the Biden Administration announced in a press release on Tuesday.

The PECASE winners’ research projects are funded by government organizations, including the National Science Foundation (NSF), the National Institutes of Health (NIH), the Centers for Disease Control and Prevention (CDC), and NASA. They will be invited to visit the White House later this year.

Hester joins Associate Professor Juan-Pablo Correa-Baena from the School of Materials Science and Engineering as the two Tech faculty who received the honor.

Hester said his nomination was based on the NSF Faculty Early Career Development Program (CAREER) award he received in 2022 as an assistant professor at Northwestern University. He said the NSF submits its nominations to the White House for the PECASE awards, but researchers are not informed until the list of winners is announced.

“For me, I always thought this was an unachievable, unassailable type of thing because of the reputation of the folks in computing who’ve won previously,” Hester said. “It was always a far-reaching goal. I was shocked. It’s something you would never in a million years think you would win.”

Hester is known for pioneering research in a new subfield of sustainable computing dedicated to creating battery-free devices powered by solar energy, kinetic energy, and radio waves. He co-led a team that developed the first battery-free handheld gaming device.

Last year, Hester coined the term “Internet of Battery-less Things” in an article he co-authored for Communications of the ACM, the Association for Computing Machinery’s in-house journal.

The Internet of Things is the network of physical computing devices capable of connecting to the internet and exchanging data. However, these devices eventually die. Landfills are overflowing with billions of them and their toxic power cells, harming our ecosystem.

In his CAREER award, Hester outlined projects that would work toward replacing the most used computing devices with sustainable, battery-free alternatives.

“I want everything to be an Internet of Batteryless Things — computational devices that could last forever,” Hester said. “I outlined a bunch of different ways that you could do that from the computer engineering side and a little bit from the human-computer interaction side. They all had a unifying theme of making computing more sustainable and climate-friendly.”

Hester is also a Sloan Research Fellow, an honor he received in 2022. In 2021, Popular Science named him to its Brilliant 10 list. He also received the Most Promising Engineer or Scientist Award from the American Indian Science and Engineering Society, which recognizes significant contributions in STEM disciplines from the Indigenous peoples of North America and the Pacific Islands.

President Bill Clinton established PECASE in 1996. According to the White House press release, the award recognizes exceptional scientists and engineers who demonstrate leadership early in their careers and present innovative and far-reaching developments in science and technology.

News Contact

NATHAN DEEN

COMMUNICATIONS OFFICER

SCHOOL OF INTERACTIVE COMPUTING

Jan. 13, 2025

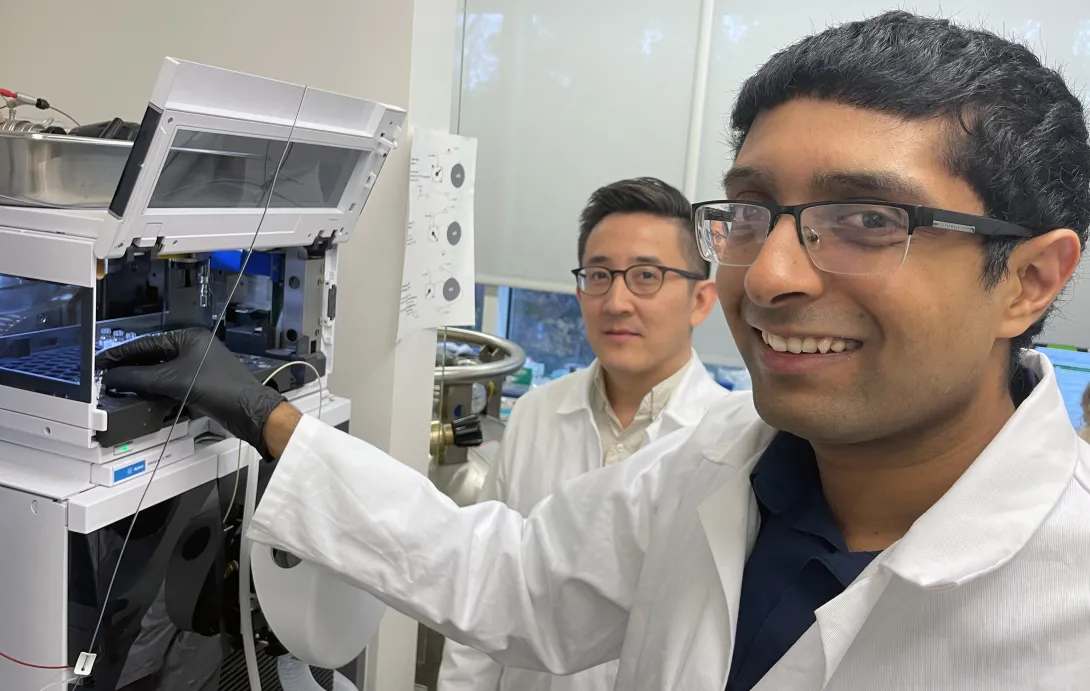

Georgia Tech researchers have developed biosensors with advanced sleuthing skills, and the technology may revolutionize cancer detection and monitoring.

The tiny detectives can identify key biological markers using logical reasoning inspired by the “AND” function in computers, the same logic that requires both a username and a password to log in. And unlike traditional biosensors composed of genetic materials such as cells or bits of DNA, these are made of manufactured molecules.

These new biosensors are more precise and simpler to manufacture, reducing the number of false positives and making them more practical for clinical use. And because the sensors are cell-free, there’s a reduced risk for immunogenic side effects.

“We think the accuracy and simplicity of our biosensors will lead to accessible, personalized, and effective treatments, ultimately saving lives,” said Gabe Kwong, associate professor and Robert A. Milton Endowed Chair in the Wallace H. Coulter Department of Biomedical Engineering, who led the study, published this month in Nature Nanotechnology.

Breaking With Tradition

The researchers set out to address the limitations in current biosensors for cancer, like the ones designed for CAR-T cells to allow them to recognize tumor cells. These advanced biosensors are made of genetic material, and there is growing interest in reducing the potential for off-target toxicity by using Boolean “AND-gate” computer logic. That means they’re designed to release a signal only when two specific conditions are met.

“Traditionally, these biosensors involve genetic engineering using cell-based systems, which is a complex, time-consuming, and expensive process,” said Kwong.

So, his team developed biosensors made of iron oxide nanoparticles and special molecules called cyclic peptides. Synthesizing nanomaterials and peptides is a simpler, less costly process than genetic engineering, according to Kwong, “which means we can likely achieve large-scale, economical production of high-precision biosensors.”

Unlocking the AND-gate

Biosensors detect cancer signals and track treatment progress by turning biological signals into readable outputs for doctors. With AND-gate logic, two distinct inputs are required for an output.

Accordingly, the researchers engineered cyclic peptides — small amino acid chains — to respond only when they encounter two specific types of enzymes, proteases called granzyme B (secreted by the immune system) and matrix metalloproteinase (from cancer cells). The peptides generate a signal when both proteases are present and active.

Think of a high-security lock that needs two unique keys to open. In this scenario, the peptides are the lock, activating the sensor signal only when cancer is present and being confronted by the immune system.
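The two-key logic described above can be illustrated with a toy truth table. This is a simplified Boolean sketch, not the researchers' model: it assumes each protease is either "active" or "inactive" and ignores concentrations, reaction kinetics, and detection thresholds. The function name `sensor_signal` is hypothetical.

```python
from itertools import product


def sensor_signal(granzyme_b_active: bool, mmp_active: bool) -> bool:
    """Toy AND gate (hypothetical): the sensor reports a signal only when
    both granzyme B (immune system) and matrix metalloproteinase (cancer
    cells) are active. Real sensors respond to graded enzyme activity."""
    return granzyme_b_active and mmp_active


if __name__ == "__main__":
    # Enumerate all four input combinations, like a logic-gate truth table.
    for gzb, mmp in product([False, True], repeat=2):
        print(f"granzyme B active: {gzb}, MMP active: {mmp} -> signal: {sensor_signal(gzb, mmp)}")
```

In this toy model, only the row where both inputs are active produces a signal, so immune activity alone (for example, an immune response away from the tumor) yields no output.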

“Our peptides allow for greater accuracy in detecting cancer activity,” said the study’s lead author, Anirudh Sivakumar, a postdoctoral researcher in Kwong’s Laboratory for Synthetic Immunity. “It’s very specific, which is important for knowing when immune cells are targeting and killing tumor cells.”

Super Specific

In animal studies, the biosensors successfully distinguished tumors that responded to a common cancer treatment called immune checkpoint blockade therapy (ICBT), which enhances the immune system, from tumors that resisted treatment.

During these tests, the sensors also demonstrated their ability to avoid false signals from other, unrelated health issues, such as when the immune system confronted a flu infection in the lungs, away from the tumor.

“This level of specificity can be game changing,” Kwong said. “Imagine being able to identify which patients are responding to the therapy early in their treatment. That would save time and improve patient outcomes.”

The first step toward this simpler, more precise form of cancer diagnostics was a modest $50,000 seed grant, awarded five years ago by the Petit Institute for Bioengineering and Bioscience, that funded an ambitious collaboration between Kwong’s lab and the lab of M.G. Finn, professor and chair in the School of Chemistry and Biochemistry.

It evolved into a multi-institutional project, supported by grants from the National Science Foundation and the National Institutes of Health, that included researchers from the University of California, Riverside, as well as Georgia Tech faculty researchers Finn and Peng Qiu, associate professor in the Coulter Department.

“The progression of the research, from an initial seed grant all the way to animal studies, was very smooth,” Kwong said. “Ultimately, a collaborative, multidisciplinary effort turned our early vision into something that could have a great impact in healthcare.”

Citation: Anirudh Sivakumar, Hathaichanok Phuengkham, Hitha Rajesh, Quoc D. Mac, Leonard C. Rogers, Aaron D. Silva Trenkle, Swapnil Subhash Bawage, Robert Hincapie, Zhonghan Li, Sofia Vainikos, Inho Lee, Min Xue, Peng Qiu, M. G. Finn, Gabriel A. Kwong. “AND-gated protease-activated nanosensors for programmable detection of anti-tumour immunity.” Nature Nanotechnology (January 2025). https://doi.org/10.1038/s41565-024-01834-8

Funding: This research was supported in part by National Institutes of Health (NIH) grants 5U01CA265711, 5R01CA237210, 1DP2HD091793, and 5DP1CA280832.

News Contact

Jerry Grillo

Jan. 06, 2025

In the rapidly evolving world of manufacturing, embracing digital connectivity and artificial intelligence is crucial for optimizing operations, improving efficiency, and driving innovation. The Internet of Things (IoT) is a key pillar of that effort, enabling seamless communication and data exchange throughout production by connecting sensors, equipment, and applications through internet protocols.

The Georgia Tech Manufacturing Institute (GTMI) recently hosted the 10th annual Internet of Things for Manufacturing (IoTfM) Symposium, a flagship event that continues to set the standard for innovation and collaboration in the manufacturing sector. Held on Nov. 13, the symposium brought together industry leaders, researchers, and practitioners to explore the latest advancements and applications of IoT in manufacturing.

"The purpose is to bring the voice of manufacturers directly to the university community," explained Andrew Dugenske, a principal research engineer and director of the Factory Information Systems Center at GTMI. "It's about learning from industry to guide our research, education, and knowledge base, which is inherent to Georgia Tech."

Initiated over a decade ago, the IoTfM Symposium has grown into a premier event that highlights Georgia Tech's commitment to advancing manufacturing technologies.

"This symposium provides a unique platform to share and learn from cutting-edge advancements in IoT and now AI for manufacturing,” said Dago Mata, regional director of business development at Tata Consultancy Services (TCS) and one of the event’s speakers. “The opportunity to engage with industry leaders and showcase practical, real-world implementations was highly motivating."

This year’s symposium welcomed over 100 attendees from across the country. Speakers from TCS, Amazon Web Services, Southwire, and more shared insights on the latest advancements, use cases, current challenges, and future directions for IoT in manufacturing processes.

“My favorite aspect was the case studies presented by major manufacturers, highlighting successful IoT and AI implementations," said Mata, who has attended the symposium since 2018. "These provided actionable takeaways and inspiration for driving similar innovation in my projects — the blend of exclusive learning from real-world applications and the presence of diverse experts made it a truly practical and inspiring event."

A distinctive feature of the IoTfM Symposium is its commitment to giving industry partners a platform to voice their perspectives on manufacturing research, says Dugenske. "We ask our industry partners to tell us about their experiences, challenges, and future predictions. This way, we can guide our research with the real-world needs of the manufacturing sector to form stronger collaborations and better prepare our students."

This unique format not only enhances the relevance of the symposium but also fosters a collaborative environment where industry leaders can learn from each other and from Georgia Tech's academic community.

As GTMI looks to the future, the symposium will continue to evolve, incorporating new elements and expanding its reach. Dugenske envisions even greater integration with other GTMI initiatives and broader industry engagement.

“Our goal is to create an event that highlights our capabilities and builds deeper connections within the manufacturing community,” he said.

News Contact

Audra Davidson

Research Communications Program Manager

Georgia Tech Manufacturing Institute

Dec. 12, 2024

As part of the CHIPS National Advanced Packaging Manufacturing Program (NAPMP), three advanced packaging research projects will receive investments of up to $100 million each. This work will accelerate the development of cutting-edge substrate and materials technologies essential to the semiconductor industry.

NAPMP was developed to support a robust U.S. ecosystem for advanced packaging, which is key to every electronic system. NAPMP will enable leading-edge research and development, domestic manufacturing facilities, and robust training and workforce development programs in advanced packaging.

In partnership with Georgia Tech and the 3D Packaging Research Center (PRC), Absolics will receive $100 million to develop revolutionary glass core substrate panel manufacturing.

“This landmark investment in Absolics is also a transformational investment in Georgia Tech,” said Tim Lieuwen, interim executive vice president for Research. “It will redefine the possibilities of our longstanding partnership by expanding Georgia Tech’s expertise in electronic packaging, which is vital to the semiconductor supply chain. This federal funding uniquely positions us to merge cutting-edge research with industry, drive economic development in Georgia, and create a workforce ready to tackle tomorrow’s manufacturing demands.”

Georgia Tech has a long history of pioneering packaging research. Through a previous collaboration with the PRC, Absolics has already invested in the state of Georgia by building a glass core substrate panel manufacturing facility in Covington.

Georgia Tech’s Institute for Matter and Systems (IMS), home to the PRC, houses specialized core facilities with the capabilities for semiconductor advanced packaging research and development.

“Awards like this reinforce the importance of collaborative research between research disciplines and the private and public sector. Without the research and administrative support provided by IMS and the Georgia Tech Office of Research Development, projects like this would not be coming to Georgia Tech,” said Eric Vogel, IMS executive director.

Georgia Tech is a leader in advanced packaging research and has been working on glass substrate packaging research and development for years. Through this new Substrate and Materials Advanced Research and Technology (SMART) Packaging Program, Absolics aims to build a glass-core packaging ecosystem. In collaboration with Absolics, Georgia Tech will receive research and development funding for a glass-core substrate research center.

“We are delighted to partner with Absolics and the broader team on this new NAPMP program focused on glass-core packaging,” said Muhannad Bakir, Dan Fielder Professor in the School of Electrical and Computer Engineering and PRC director. “Georgia Tech’s role will span program leadership, research and development of novel glass-core packages, technology transition, and workforce development.” Bakir will serve as the associate director of the SMART Packaging Program, overseeing research and workforce development activities while also leading several research tasks.

"This project will advance large-area glass panel processing with innovative contributions to materials and processing, modeling and simulation, metrology and characterization, and testing and reliability. We are pleased to partner with Absolics in advancing these important technology areas," said Regents' Professor Suresh K. Sitaraman of the George W. Woodruff School of Mechanical Engineering and the PRC. In addition to technical contributions, Sitaraman will direct the new SMART Packaging Program steering committee.

“The NAPMP Materials and Substrates R&D award for glass substrates marks the culmination of extensive efforts spearheaded by Georgia Tech’s Packaging Research Center,” noted George White, senior director of strategic partnerships and the theme leader for education and workforce development in the SMART Packaging Program. “This recognition highlights the state of Georgia’s leadership in advanced substrate technology and paves the way for developing the next generation of talent in glass-based packaging.”

The program will support education and workforce development efforts by bringing training, internships, and certificate opportunities to technical colleges, the HBCU CHIPS Network, and veterans' programs.

News Contact

Amelia Neumeister | Research Communications Program Manager

Dec. 11, 2024

Gold and white pompoms fluttered while Buzz, the official mascot of the Georgia Institute of Technology, danced to marching band music. But the celebration wasn’t before a football or basketball game. Instead, the cheers marked the official launch of Georgia AIM Week, a series of events and a new mobile lab designed to bring technology to all parts of Georgia.

Organized by Georgia Artificial Intelligence in Manufacturing (Georgia AIM), Georgia AIM Week kicked off Sept. 30 with a celebration on the Georgia Institute of Technology campus and culminated on Friday, Oct. 4, with another celebration at the University of Georgia in Athens, held in conjunction with National Manufacturing Day.

In between, the Georgia AIM Mobile Studio made stops at schools and community organizations to showcase a range of technology rooted in AI and smart technology.

“Georgia AIM Week was a statewide opportunity for us to celebrate Manufacturing Day and to launch our Georgia AIM Mobile Studio,” said Donna Ennis, associate vice president, community-based engagement, for Georgia Tech’s Enterprise Innovation Institute and Georgia AIM co-director. “Georgia AIM projects planned events in cities around the state, starting here in Atlanta. Then we headed to Warner Robins, Southwest Georgia, and Athens. We’re excited about the opportunity to bring this technology to our communities and increase access and ideas related to smart technology.”

Georgia AIM is a collaboration across the state to provide the tools and knowledge to empower all communities, particularly those that have been underserved and overlooked in manufacturing. This includes rural communities, women, people of color, and veterans. Georgia AIM projects are located across the state and work within communities to create a diverse AI manufacturing workforce. The federally funded program is a collaborative project administered through Georgia Tech’s Enterprise Innovation Institute and the Georgia Tech Manufacturing Institute.

A cornerstone of Georgia AIM Week was the debut of the Georgia AIM Mobile Studio, a 53-foot custom trailer outfitted with technology that can be used in manufacturing — but also by anyone with an interest in learning about AI and smart technology. Visitors to the mobile studio can experience virtual reality, 3-D printing, drones, robots, sensors, computer vision, and circuits essential to running this new tech.

There’s even a dog — albeit a robotic one — named Nova.

The studio was designed to introduce students to the possibilities of careers in manufacturing and show small businesses some of the cost-effective ways they can incorporate 21st-century technology into their manufacturing operations.

“We were awarded about $7.5 million to build this wonderful studio here,” said Kenya Asbill, who works at the Russell Innovation Center for Entrepreneurs (RICE) as the Economic Development Administration project manager for Georgia AIM. “We will be traveling around the state of Georgia to introduce artificial intelligence in manufacturing to our targeted communities, including underserved rural and urban residents.”

Some technology on the Georgia AIM Mobile Studio was designed in consultation with project partners Kitt Labs and Technologists of Color. An additional suite of “technology vignettes” was developed by students at the University of Georgia College of Engineering. RICE and UGA served as project leads for the mobile studio development, and RICE will oversee its deployment across the state in the coming months.

To request a mobile studio visit, please go to the Georgia AIM website.

During Monday’s kickoff, the Georgia Tech cheerleaders and Buzz fired up the crowd before an event that featured remarks by Acting Assistant Secretary of the U.S. EDA Christina Killingsworth; Jay Bailey, president and CEO of RICE; Beshoy Morkos, associate professor of mechanical engineering at the University of Georgia; Aaron Stebner, co-director of Georgia AIM; David Bridges, vice president of Georgia Tech’s Enterprise Innovation Institute; and lightning presentations by Georgia AIM project leads from around the state.

Following the presentations, mobile studio tours were led by Jon Exume, president and executive director, and Mark Lawson, director of technology, for Technologists of Color. The organization works to create a cohesive and thriving community of African Americans in tech.

“I’m particularly excited to witness the launch of the Georgia AIM Mobile Studio. It really will help demystify AI and bring its promise to underserved rural areas across the state,” Killingsworth said. “AI is the defining technology of our generation. It’s transforming the global economy, and it will continue to have tremendous impact on the global workforce. And while AI has the potential to democratize access to information, enhance efficiency, and allow humans to focus on the more complex, creative, and meaningful aspects of work, it also has the power to exacerbate economic disparity. As such, we must work together to embrace the promise of AI while mitigating its risks.”

Other events during Georgia AIM Week included the Middle Georgia Innovation Corridor Manufacturing Expo in Warner Robins, West Georgia Manufacturing Day – Student Career Expo in LaGrange, and a visit to Colquitt County High School in Moultrie. The week wrapped on Friday, Oct. 4, at the University of Georgia in Athens with a National Manufacturing Day celebration.

“We’re focused on growing our manufacturing economy,” Ennis said. “We’re also focused on the development and deployment of innovation and talent in the manufacturing industry as it relates to AI and other technologies. Manufacturing is cool. It is a changing industry. We want our students and younger people to understand that this is a career.”

News Contact

Dec. 09, 2024

The National Academy of Inventors (NAI) is adding two more Georgia Tech researchers to its roster of innovators: Larry Heck and Younan Xia.

Heck is an artificial intelligence and speech recognition pacesetter who helped create virtual assistants for Microsoft, Samsung, Google, and Amazon. Xia is a nanomaterials pioneer whose inventions include silver nanowires commercialized for use in touchscreen displays, flexible electronics, and photovoltaics.

Election to NAI is the highest professional distinction specifically awarded to inventors. Founded in 2012, the NAI Fellows program has recognized 22 Georgia Tech innovators — 12 in just the last five years. Xia and Heck join a 2025 class of 170 new fellows representing university, government, and nonprofit organizations worldwide.

News Contact

Joshua Stewart

College of Engineering

Dec. 05, 2024

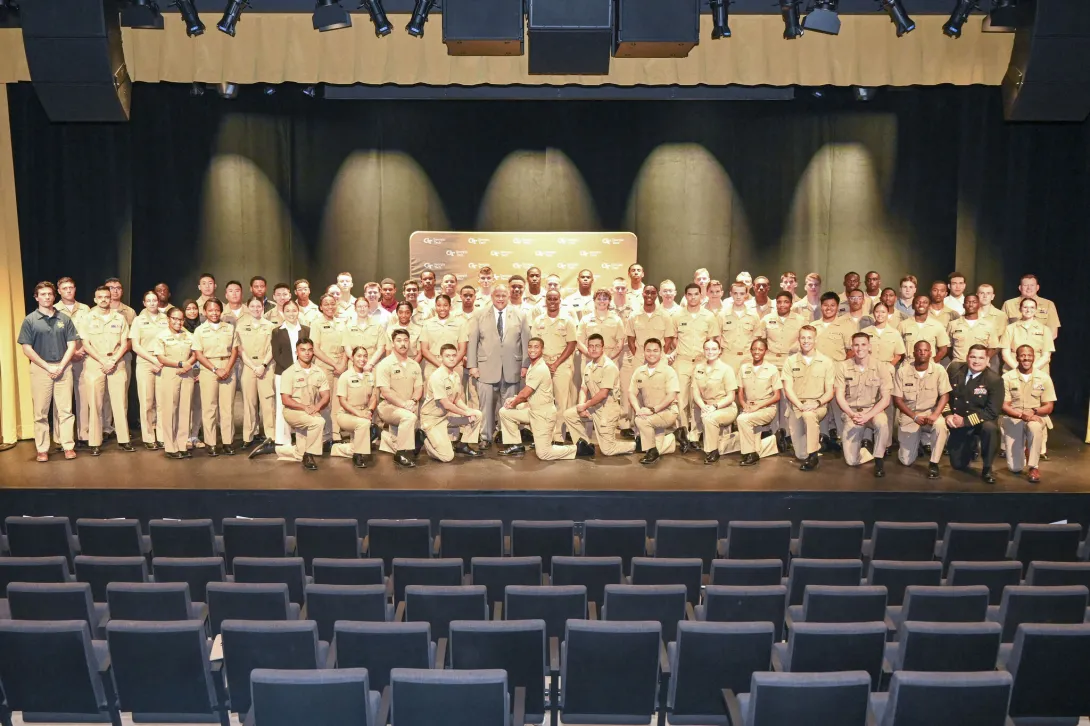

The Georgia Tech Research Institute (GTRI) proudly hosted U.S. Secretary of the Navy Carlos Del Toro during his recent campus visit. Del Toro's visit underscored the critical role of innovation and technology in national security and highlighted Georgia Tech's significant contributions to this effort.

“Our Navy-Marine Corps Team remains at the center of global and national security — maintaining freedom of the seas, international security, and global stability,” he explained in his remarks at the John Lewis Student Center. “To win the fight of the future, we must embrace and implement emerging technologies.”

The Secretary provided an update on science and technology research to the Atlanta Region Naval Reserve Officer Training Corps unit, composed of midshipmen from Georgia Tech, Georgia State University, Kennesaw State University, Morehouse College, Spelman College, and Clark Atlanta University. Del Toro has worked to establish a new Naval Science and Technology Strategy to address current and future challenges faced by the Navy and Marine Corps. The strategy serves as a global call to service and innovation for stakeholders in academia, industry, and government.

“The Georgia Tech Research Institute has answered this call,” he said.

A key pillar of the new strategy, says Del Toro, was the establishment of the Department of the Navy’s Science and Technology Board in 2023, “with the intent that the board provide independent advice and counsel to the department on matters and policies relating to scientific, technical, manufacturing, acquisition, logistics, medicine, and business management functions.”

The board, which includes Georgia Tech Manufacturing Institute (GTMI) Executive Director Thomas Kurfess, has conducted six studies in its inaugural year to identify new technologies for rapid adoption and provide near-term, practical recommendations for quick implementation by the Navy.

“I recently led the team for developing a strategy for integrating additive manufacturing into the Navy’s overall shipbuilding and repair strategy,” says Kurfess. “We just had final approval of our recommendations — we are making a significant impact on the Navy with respect to additive manufacturing.”

Del Toro's visit to Georgia Tech reaffirms the Institute's role as a leader in research and innovation, particularly in areas critical to national security. The collaboration between Georgia Tech and the Department of the Navy continues to drive advancements that ensure the safety and effectiveness of the nation's naval forces.

“Innovation is at the heart of our efforts at Georgia Tech and GTMI,” says Kurfess. “It is an honor to put that effort toward ensuring our country’s safety and national security in partnership with the U.S. Navy.”

“As our department continues to reimagine and refocus our innovation efforts,” said Del Toro, “I encourage all of you — our nation’s scientists, engineers, researchers, and inventors — to join us.”

News Contact

Audra Davidson

Research Communications Program Manager

Georgia Tech Manufacturing Institute

Dec. 05, 2024

As automation and AI continue to transform the manufacturing industry, the need for seamless integration across all production stages has reached an all-time high. Digital integration, which involves designing products digitally, controlling the machinery that builds them, and collecting precise data at each step, streamlines the entire manufacturing process, cutting down on waste materials, cost, and production time.

Recently, the Georgia Tech Manufacturing Institute (GTMI) teamed up with OPEN MIND Technologies to host an immersive, weeklong training session on hyperMILL, an advanced manufacturing software enabling this digital integration.

OPEN MIND, the developer of hyperMILL, has been a longtime supporter of research operations in Georgia Tech’s Advanced Manufacturing Pilot Facility (AMPF). “Our adoption of their software solutions has allowed us to explore the full potential of machines and to make sure we keep forging new paths,” said Steven Ferguson, a principal research scientist at GTMI.

Software like hyperMILL helps plan the most efficient and accurate way to cut, shape, or 3D print materials on different machines, making the process faster and easier. Hosted at the AMPF, the immersive training offered 10 staff members and students a hands-on platform to use the software while practicing machining and additive manufacturing techniques.

“The number of new features and tricks that the software has every year makes it advantageous to stay current and get a refresher course,” said Alan Burl, a Ph.D. student in the George W. Woodruff School of Mechanical Engineering who attended the training session. “More advanced users can learn new tips and tricks while simultaneously exposing new users to the power of a fully featured, computer-aided manufacturing software.”

OPEN MIND Technologies has partnered with Georgia Tech for over five years to support digital manufacturing research, offering biannual training in their latest software to faculty and students.

“Meeting the new graduate students each fall is something that I look forward to,” said Brad Rooks, an application engineer at OPEN MIND and one of the co-leaders of the training session. “This particular group posed questions that were intuitive and challenging to me as a trainer — their inquisitive nature drove me to look at our software from fresh perspectives.”

The company is also a member of GTMI’s Manufacturing 4.0 Consortium, a membership-based group that unites industry, academia, and government to develop and implement advanced manufacturing technologies and train the workforce for the market.

“The strong reputation of GTMI in the manufacturing industry, and more importantly, the reputation of the students, faculty, and researchers who support research within our facilities, enables us to forge strategic partnerships with companies like OPEN MIND,” says Ferguson, who also serves as executive director of the consortium. “These relationships are what makes working with and within GTMI so special.”

News Contact

Audra Davidson

Research Communications Program Manager

Georgia Tech Manufacturing Institute
