Nov. 22, 2024

The Department of Commerce has granted the Semiconductor Research Corporation (SRC), its partners, and Georgia Institute of Technology $285 million to establish and operate the 18th Manufacturing USA Institute. The Semiconductor Manufacturing and Advanced Research with Twins (SMART USA) will focus on using digital twins to accelerate the development and deployment of microelectronics. SMART USA, with more than 150 expected partner entities representing industry, academia, and the full spectrum of supply chain design and manufacturing, will span more than 30 states and have combined funding totaling $1 billion.

This is the first-of-its-kind CHIPS Manufacturing USA Institute.

“Georgia Tech’s role in the SMART USA Institute amplifies our trailblazing chip and advanced packaging research and leverages the strengths of our interdisciplinary research institutes,” said Tim Lieuwen, interim executive vice president for Research. “We believe innovation thrives where disciplines and sectors intersect. And the SMART USA Institute will help us ensure that the benefits of our semiconductor and advanced packaging discoveries extend beyond our labs, positively impacting the economy and quality of life in Georgia and across the United States.”

The 3D Systems Packaging Research Center (PRC), directed by School of Electrical and Computer Engineering Dan Fielder Professor Muhannad Bakir, played an integral role in developing the winning proposal. Georgia Tech will be designated as the Digital Innovation Semiconductor Center (DISC) for the Southeastern U.S.

“We are honored to collaborate with SRC and their team on this new Manufacturing USA Institute. Our partnership with SRC spans more than two decades, and we are thrilled to continue this collaboration by leveraging the Institute’s wide range of semiconductor and advanced packaging expertise,” said Bakir.

Through the Institute for Matter and Systems’ core facilities, housed in the Marcus Nanotechnology Building, DISC will accelerate semiconductor and advanced packaging development.

“The awarding of the Digital Twin Manufacturing USA Institute is a culmination of more than three years of work with the Semiconductor Research Corporation and other valued team members who share a similar vision of advancing U.S. leadership in semiconductors and advanced packaging,” said George White, senior director for strategic partnerships at Georgia Tech.

“As a founding member of the SMART USA Institute, Georgia Tech values this long-standing partnership. Its industry and academic partners, including the HBCU CHIPS Network, stand ready to make significant contributions to realize the goals and objectives of the SMART USA Institute,” White added.

Georgia Tech also plans to capitalize on the supply chain and optimization strengths of the No. 1-ranked H. Milton Stewart School of Industrial and Systems Engineering (ISyE). ISyE experts will help develop supply-chain digital twins to optimize and streamline manufacturing and operational efficiencies.

David Henshall, SRC vice president of Business Development, said, “The SMART USA Institute will advance American digital twin technology and apply it to the full semiconductor supply chain, enabling rapid process optimization, predictive maintenance, and agile responses to chips supply chain disruptions. These efforts will strengthen U.S. global competitiveness, ensuring our country reaps the rewards of American innovation at scale.”

News Contact

Amelia Neumeister | Research Communications Program Manager

Nov. 21, 2024

In a significant step towards fostering international collaboration and advancing cutting-edge technologies in manufacturing, Georgia Tech recently signed Memorandums of Understanding (MoUs) with the Korea Institute of Industrial Technology (KITECH) and the Korea Automotive Technology Institute (KATECH). Facilitated by the Georgia Tech Manufacturing Institute (GTMI), this landmark event underscores Georgia Tech’s commitment to global partnerships and innovation in manufacturing and automotive technologies.

“This is a great fit for the institute, the state of Georgia, and the United States, enhancing international cooperation,” said Thomas Kurfess, GTMI executive director and Regents’ Professor in the George W. Woodruff School of Mechanical Engineering (ME). “An MoU like this really gives us an opportunity to bring together a larger team to tackle international problems.”

“An MoU signing between Georgia Tech and entities like KITECH and KATECH signifies a formal agreement to pursue shared goals and explore collaborative opportunities, including joint research projects, academic exchanges, and technological advancements,” said Seung-Kyum Choi, an associate professor in ME and a major contributor in facilitating both partnerships. “Partnering with these influential institutions positions Georgia Tech to expand its global footprint and enhance its impact, particularly in areas like AI-driven manufacturing and automotive technologies.”

The state of Georgia has seen significant growth in investments from Korean companies. Over the past decade, approximately 140 Korean companies have committed around $23 billion to various projects in Georgia, creating over 12,000 new jobs in 2023 alone. This influx of investment underscores the strong economic ties between Georgia and South Korea, further bolstered by partnerships like those with KITECH and KATECH.

“These partnerships not only provide access to new resources and advanced technologies,” says Choi, “but create opportunities for joint innovation, furthering GTMI’s mission to drive transformative breakthroughs in manufacturing on a global scale.”

The MoUs with KITECH and KATECH are expected to facilitate a wide range of collaborative activities, including joint research projects that leverage the strengths of both institutions, academic exchanges that enrich the educational experiences of students and faculty, and technological advancements that push the boundaries of current manufacturing and automotive technologies.

“My hopes for the future of Georgia Tech’s partnerships with KITECH and KATECH are centered on fostering long-term, impactful collaborations that drive innovation in manufacturing and automotive technologies,” Choi noted. “These partnerships do not just expand our reach; they solidify our leadership in shaping the future of manufacturing, keeping Georgia Tech at the forefront of industry breakthroughs worldwide.”

Georgia Tech has a history of successful collaborations with Korean companies, including a multidecade partnership with Hyundai. Recently, the Institute joined forces with the Korea Institute for Advancement of Technology (KIAT) to establish the KIAT-Georgia Tech Semiconductor Electronics Center to advance semiconductor research, fostering sustainable partnerships between Korean companies and Georgia Tech researchers.

“Partnering with KATECH and KITECH goes beyond just technological innovation,” said Kurfess. “It really enhances international cooperation, strengthens local industry, drives job creation, and boosts Georgia’s economy.”

News Contact

Audra Davidson

Research Communications Program Manager

Georgia Tech Manufacturing Institute

Nov. 19, 2024

In 2017, a long, oddly shaped asteroid passed by Earth. Called ‘Oumuamua, it was the first known interstellar object to visit our solar system, but it wasn’t an isolated incident — less than two years later, in 2019, a second interstellar object (ISO) was discovered.

“‘Oumuamua was found passing just 15 million miles from Earth — that’s much closer than Mars or Venus,” says James Wray. “But it was formed in an entirely different solar system. Studying these objects could give us incredible insight into extrasolar planets, and how our planet fits into the universe.”

Wray, a professor in the School of Earth and Atmospheric Sciences at Georgia Tech, has just been awarded a Simons Foundation Pivot Fellowship to do just that. Pivot Fellowships are among the most prestigious sources of funding for cutting-edge research, and support leading researchers who have the deep interest, curiosity and drive to make contributions to a new discipline.

Wray has primarily studied the geoscience of Mars. He will leverage knowledge of nearby planets to understand ISOs and planets much farther away. “I want to understand how planets got to be the way they are, and if they could have ever hosted life,” he explains. “Extrasolar planets give us many more places to ask those questions than our solar system does, but they're too distant to visit with spacecraft. ISOs provide a unique opportunity to explore other solar systems without leaving our own.”

The Fellowship will provide salary support as well as funding for research, travel, and professional development. “Seed funds like this are so valuable,” says Wray. “I’m incredibly grateful to the Simons Foundation. I’d also like to thank Georgia Tech for its support,” he adds, sharing that the Center for Space Technology and Research supported a related research effort at the University of Hawaii earlier this year. “My mentor and I were able to spend some of that time improving our Pivot Fellowship proposal, which played a critical role in securing this Fellowship.”

In search of ISOs

Wray will study small solar system bodies like asteroids and comets to decode the processes of planet formation and space weathering, and will analyze data from the 2017 and 2019 ISOs.

He will also work alongside collaborators including Karen Meech of the University of Hawaii, who led the paper characterizing ‘Oumuamua, to conceptualize what an intercept mission might look like.

“We still have a lot of questions regarding ISOs,” he says. “Hundreds of papers have already been written about them, but we still don't know the answers.” One key mystery is the composition of the bodies: both the 2017 and 2019 objects were compositionally different from those in our solar system.

“Are they inherently different from the bodies in our solar system, or did the long journey to our solar system make them that way? Is our solar system different from others?” Wray asks. “We could answer so many questions with even a simple picture of the next ISO that comes close enough for us to intercept with spacecraft.”

A cosmic timeline

While there is no guarantee that another ISO will be spotted in our solar system, the timing is opportune: upcoming telescope surveys are poised to detect such interstellar objects. “In mid-2025, when I will start this Fellowship, the new Rubin Observatory will begin scanning the entire sky,” Wray says. “It has the potential to discover up to several new ISOs per year.”

“ISO visits are always brief,” he adds, “so the research needs to be in place for when one is spotted.” If an interstellar object is detected, Wray and Meech will be poised to leverage specialized telescopes in Hawaii, along with others worldwide, to better understand it, studying its size, shape, and composition — and potentially sending spacecraft to image it.

“We might never find another ISO — or they might be the key to imminent breakthroughs in understanding our place in the galaxy,” Wray adds. “I'm extremely grateful to the Simons Foundation for the flexibility to pursue this research at whatever pace the cosmos allows.”

News Contact

Written by Selena Langner

Nov. 15, 2024

The Institute for Matter and Systems (IMS) at Georgia Tech has announced the fall 2024 core facility seed grant recipients. The primary purpose of this program is to give graduate students in diverse disciplines working on original and unfunded research in micro- and nanoscale projects the opportunity to access the most advanced academic cleanroom space in the Southeast. In addition to accessing the labs' high-level fabrication, lithography, and characterization tools, the awardees will have the opportunity to gain proficiency in cleanroom and tool methodology and access the consultation services provided by research staff members in IMS. Seed Grant awardees are also provided travel support to present their research at a scientific conference.

In addition to student research skill development, this biannual grant program gives faculty with novel research topics the ability to develop preliminary data to pursue follow-up funding sources. The Core Facility Seed Grant program is supported in part by the Southeastern Nanotechnology Infrastructure Corridor (SENIC), a member of the National Science Foundation’s National Nanotechnology Coordinated Infrastructure (NNCI).

The five winning projects in this round were awarded IMS cleanroom and lab access time to be used over the next year.

The Fall 2024 IMS Core Facility Seed Grant recipients are:

Manufacturing of a Diamagnetically Enhanced PEM Electrolysis Cell

PI: Alvaro Romero-Calvo

Student: Shay Vitale

Daniel Guggenheim School of Aerospace Engineering

Biomimicking Organ-On-a-Chip Models

PI: Nick Housley

Student: Aref Valipour

School of Biological Sciences

Single-shot LWIR Hyperspectral Imaging Using Meta-optics

PI: Shu Jia

Student: Jooyeong Yun (School of Electrical and Computer Engineering)

The Wallace H. Coulter Department of Biomedical Engineering

Large-area Three-dimensional Nanolithography Using Two-photon Polymerization

PI: Sourabh Saha

Student: Golnaz Aminaltojjari

George W. Woodruff School of Mechanical Engineering

Effects of Geochemical Constraints on the Redistribution of Rare Earth Elements (REE) during Chemical Weathering

PI: Yuanzhi Tang

Student: Hang Xu

School of Earth and Atmospheric Sciences

Nov. 14, 2024

Georgia AIM (Artificial Intelligence in Manufacturing) was recently awarded the 'Tech for Good' award from the Technology Association of Georgia (TAG), the state’s largest tech organization.

The accolade was presented at the annual TAG Technology Awards ceremony on Nov. 6 at Atlanta’s Fox Theatre. The TAG Technology Awards promote inclusive technology throughout Georgia, and any state company, organization, or leader is eligible to apply.

Tech for Good, one of TAG’s five award categories, honors a program or project that uses technology to promote inclusiveness and equity by serving Georgia communities and individuals who are underrepresented in the tech space.

Georgia AIM comprises 16 projects across the state that connect smart technology to manufacturing through K-12 education, workforce development, and manufacturer outreach. The federally funded program is a collaborative project administered through Georgia Tech’s Enterprise Innovation Institute and the Georgia Tech Manufacturing Institute.

TAG is a Georgia AIM partner and provides workforce development programs that train people and assist them in making successful transitions into tech careers.

Donna Ennis, Georgia AIM’s co-director, accepted the award on behalf of the organization.

“Georgia AIM’s mission is to equitably develop and deploy talent and innovation for AI in manufacturing, and the Tech for Good Award reinforces our focus on revolutionizing the manufacturing economy for Georgia and the entire country,” Ennis said in her acceptance speech.

She cited the organization’s many coalition members across the state: the Technical College System of Georgia; Spelman College; the Georgia AIM Mobile Studio team at the Russell Innovation Center for Entrepreneurs and the University of Georgia; the Southwest Georgia Regional Commission; the Georgia Cyber Innovation & Training Center; and TAG and Georgia AIM’s partners in the Middle Georgia Innovation corridor, including 21st Century Partnership and the Houston Development Authority.

Ennis also acknowledged the U.S. Economic Development Administration for funding the project and helping to bring it to fruition. “But most of all,” she said, “I want to thank our manufacturers and communities across Georgia who are at the forefront of creating a new economy through AI in manufacturing. It is a privilege to assist you on this journey of technology and discovery.”

Nov. 13, 2024

Amino acids are essential for nearly every process in the human body. Often referred to as ‘the building blocks of life,’ they are also critical for commercial use in products ranging from pharmaceuticals and dietary supplements, to cosmetics, animal feed, and industrial chemicals.

And while our bodies naturally make amino acids, manufacturing them for commercial use can be costly, and that process often emits greenhouse gases like carbon dioxide (CO2).

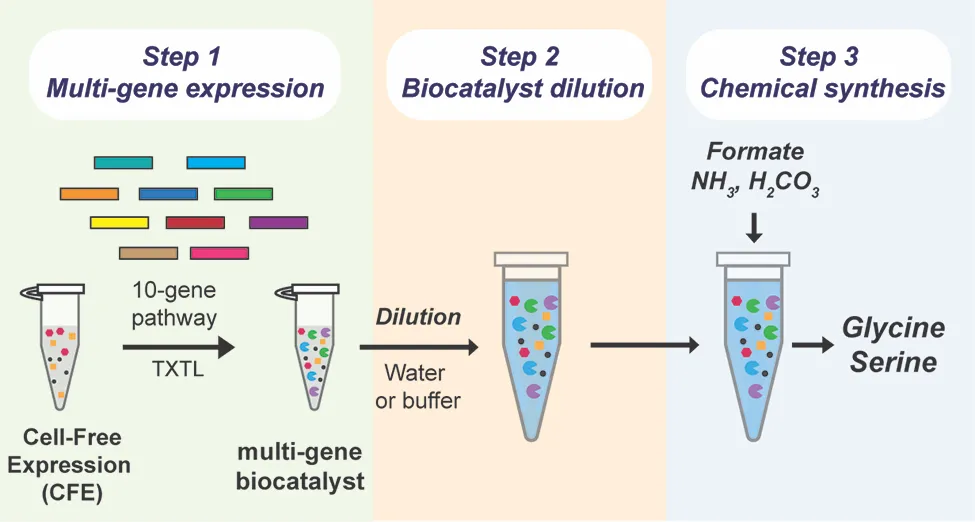

In a landmark study, a team of researchers has created a first-of-its-kind methodology for synthesizing amino acids that uses more carbon than it emits. The research also makes strides toward making the system cost-effective and scalable for commercial use.

“To our knowledge, it’s the first time anyone has synthesized amino acids in a carbon-negative way using this type of biocatalyst,” says lead corresponding author Pamela Peralta-Yahya, who emphasizes that the system provides a win-win for industry and environment. “Carbon dioxide is readily available, so it is a low-cost feedstock — and the system has the added bonus of removing a powerful greenhouse gas from the atmosphere, making the synthesis of amino acids environmentally friendly, too.”

The study, “Carbon Negative Synthesis of Amino Acids Using a Cell-Free-Based Biocatalyst,” published today in ACS Synthetic Biology, is publicly available. The research was led by Georgia Tech in collaboration with the University of Washington, Pacific Northwest National Laboratory, and the University of Minnesota.

The Georgia Tech research contingent includes Peralta-Yahya, a professor with joint appointments in the School of Chemistry and Biochemistry and School of Chemical and Biomolecular Engineering (ChBE); first author Shaafique Chowdhury, a Ph.D. student in ChBE; Ray Westenberg, a Ph.D. student in Bioengineering; and Georgia Tech alum Kimberly Wennerholm (B.S. ChBE ’23).

Costly chemicals

There are two key challenges to synthesizing amino acids on a large scale: the cost of materials, and the speed at which the system can generate amino acids.

While many living systems like cyanobacteria can synthesize amino acids from CO2, the rate at which they do it is too slow to be harnessed for industrial applications, and these systems can only synthesize a limited number of chemicals.

Currently, most commercial amino acids are made using bioengineered microbes. “These specially designed organisms convert sugar or plant biomass into fuel and chemicals,” explains first author Chowdhury, “but valuable food resources are consumed if sugar is used as the feedstock — and pre-processing plant biomass is costly.” These processes also release CO2 as a byproduct.

Chowdhury says the team was curious “if we could develop a commercially viable system that could use carbon dioxide as a feedstock. We wanted to build a system that could quickly and efficiently convert CO2 into critical amino acids, like glycine and serine.”

The team was particularly interested in what could be accomplished by a ‘cell-free’ system that leveraged some process of a cellular system — but didn’t actually involve living cells, Peralta-Yahya says, adding that systems using living cells need to use part of their CO2 to fuel their own metabolic processes, including cell growth, and have not yet produced sufficient quantities of amino acids.

“Part of what makes a cell-free system so efficient,” Westenberg explains, “is that it can use cellular enzymes without needing the cells themselves. By generating the enzymes and combining them in the lab, the system can directly convert carbon dioxide into the desired chemicals. Because there are no cells involved, it doesn’t need to use the carbon to support cell growth — which vastly increases the amount of amino acids the system can produce.”

A novel solution

While scientists have used cell-free systems before, one of the necessary chemicals, the cell lysate biocatalyst, is extremely costly. For a cell-free system to be economically viable at scale, the team needed to limit the amount of cell lysate the system needed.

After creating the ten enzymes necessary for the reaction, the team attempted to dilute the biocatalyst using a technique called ‘volumetric expansion.’ “We found that the biocatalyst we used was active even after being diluted 200-fold,” Peralta-Yahya explains. “This allows us to use significantly less of this high-cost material — while simultaneously increasing feedstock loading and amino acid output.”

It’s a novel application of a cell-free system, and one with the potential to transform both how amino acids are produced, and the industry’s impact on our changing climate.

“This research provides a pathway for making this method cost-effective and scalable,” Peralta-Yahya says. “This system might one day be used to make chemicals ranging from aromatics and terpenes, to alcohols and polymers, and all in a way that not only reduces our carbon footprint, but improves it.”

Funding: Advanced Research Projects Agency-Energy (ARPA-E), U.S. Department of Energy and the U.S. Department of Energy, Office of Science, Biological and Environmental Research Program.

News Contact

Written by Selena Langner

Nov. 11, 2024

A first-of-its-kind algorithm developed at Georgia Tech is helping scientists study interactions between electrons. This innovation in modeling technology can lead to discoveries in physics, chemistry, materials science, and other fields.

The new algorithm is faster than existing methods while remaining highly accurate. The solver surpasses the limits of current models by demonstrating scalability across chemical system sizes ranging from large to small.

Computer scientists and engineers benefit from the algorithm’s ability to balance processor loads. This work allows researchers to tackle larger, more complex problems without the prohibitive costs associated with previous methods.

The algorithm’s ingenuity lies in its ability to solve block linear systems. According to the researchers, their approach is the first known use of a block linear system solver to calculate electronic correlation energy.

The Georgia Tech team won’t need to travel far to share their findings with the broader high-performance computing community. They will present their work in Atlanta at the 2024 International Conference for High Performance Computing, Networking, Storage and Analysis (SC24).

“The combination of solving large problems with high accuracy can enable density functional theory simulation to tackle new problems in science and engineering,” said Edmond Chow, professor and associate chair of Georgia Tech’s School of Computational Science and Engineering (CSE).

Density functional theory (DFT) is a modeling method for studying electronic structure in many-body systems, such as atoms and molecules.

An important concept that DFT models is electronic correlation, the interaction between electrons in a quantum system. Electron correlation energy is the measure of how much the movement of one electron is influenced by the presence of all other electrons.

Random phase approximation (RPA) is used to calculate electron correlation energy. While RPA is very accurate, it becomes computationally more expensive as the size of the system being calculated increases.

Georgia Tech’s algorithm enhances electronic correlation energy computations within the RPA framework. The approach circumvents inefficiencies and achieves faster solution times, even for small-scale chemical systems.

The group integrated the algorithm into existing work on SPARC, a real-space electronic structure software package for accurate, efficient, and scalable solutions of DFT equations. School of Civil and Environmental Engineering Professor Phanish Suryanarayana is SPARC’s lead researcher.

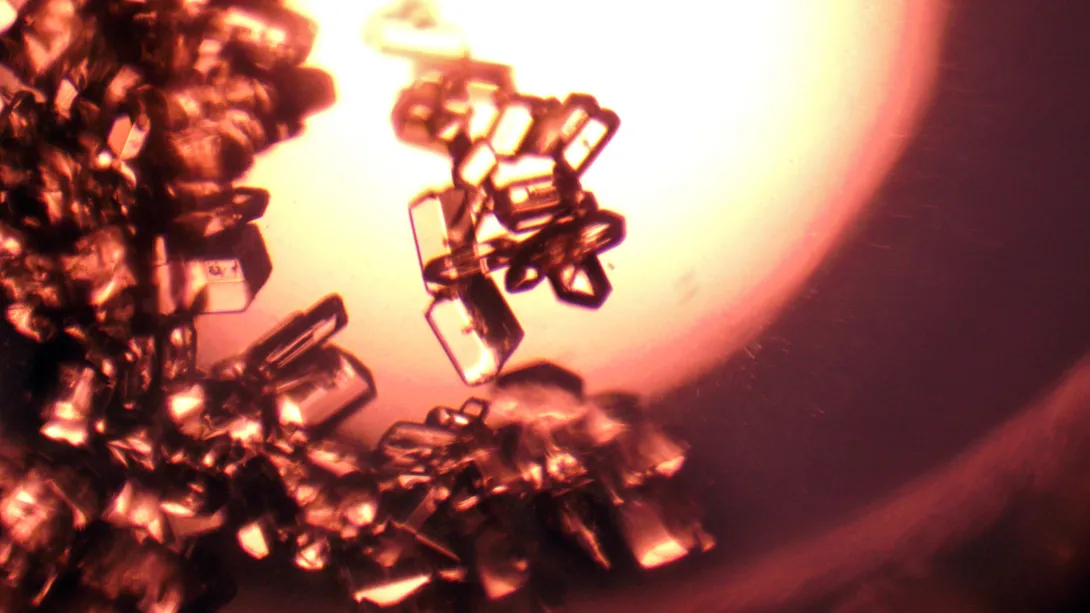

The group tested the algorithm on small chemical systems of silicon crystals numbering as few as eight atoms. The method achieved faster calculation times and scaled to larger system sizes than direct approaches.

“This algorithm will enable SPARC to perform electronic structure calculations for realistic systems with a level of accuracy that is the gold standard in chemical and materials science research,” said Suryanarayana.

RPA is expensive because its computational cost scales quartically with system size. When the size of a chemical system is doubled, the computational cost increases by a factor of 16.

Instead, Georgia Tech’s algorithm scales cubically by solving block linear systems. This capability makes it feasible to solve larger problems at less expense.
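The scaling comparison above can be checked with a little arithmetic. The sketch below assumes only that cost is proportional to a power of the system size, as the article describes; the function name `cost_growth` is hypothetical and is not part of SPARC or the researchers' code.

```python
def cost_growth(size_factor: float, exponent: int) -> float:
    """Return how much the cost grows when the system size is multiplied
    by size_factor, assuming cost is proportional to size ** exponent."""
    return size_factor ** exponent


# Doubling the system size under each scaling law.
quartic_growth = cost_growth(2, 4)  # quartic scaling, as stated for RPA
cubic_growth = cost_growth(2, 3)    # cubic scaling, as stated for the new algorithm

print(f"quartic: x{quartic_growth}, cubic: x{cubic_growth}")
```

Under this assumption, doubling the system size multiplies a quartic cost by 16 and a cubic cost by 8. The gap widens as systems grow: at ten times the size, the quartic cost grows ten times more than the cubic cost.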

Solving block linear systems presents a challenging trade-off in choosing block sizes. While larger blocks help reduce the number of steps of the solver, using them demands higher computational cost per step on computer processors.

Tech’s solution is a dynamic block size selection solver. The solver allows each processor to independently select block sizes to calculate. This solution further assists in scaling, and improves processor load balancing and parallel efficiency.
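The block-size trade-off described above can be illustrated with a toy cost model. Everything in this sketch is an assumption made for illustration: the cost formulas, the default parameters, and the names `total_cost` and `pick_block_size` are hypothetical and do not come from the Georgia Tech solver. It only shows how a processor might pick a block size on its own when larger blocks mean fewer steps but costlier ones.

```python
import math


def total_cost(n_columns: int, block_size: int,
               base_steps: int = 64,
               per_column_cost: float = 1.0,
               coupling_cost: float = 0.2) -> float:
    """Toy estimate of the work to process n_columns with a given block size.

    Assumptions (hypothetical, for illustration only):
    - the number of solver steps shrinks with the square root of the block size;
    - each step costs a linear term plus a quadratic term in the block size.
    """
    steps = math.ceil(base_steps / math.sqrt(block_size))
    step_cost = per_column_cost * block_size + coupling_cost * block_size ** 2
    n_blocks = math.ceil(n_columns / block_size)
    return n_blocks * steps * step_cost


def pick_block_size(n_columns: int, candidates=(1, 2, 4, 8, 16)) -> int:
    """Choose the candidate block size with the lowest toy cost."""
    usable = [b for b in candidates if b <= n_columns]
    if not usable:
        raise ValueError("n_columns must be at least the smallest candidate")
    return min(usable, key=lambda b: total_cost(n_columns, b))


# Each hypothetical processor chooses independently, based on its own workload.
for workload in (3, 8, 16):
    print(workload, pick_block_size(workload))
```

In this toy model, neither the smallest nor the largest block is always best, which is the kind of tension a dynamic selection scheme is meant to resolve.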

“The new algorithm has many forms of parallelism, making it suitable for immense numbers of processors,” Chow said. “The algorithm works in a real-space, finite-difference DFT code. Such a code can scale efficiently on the largest supercomputers.”

Georgia Tech alumni Shikhar Shah (Ph.D. CSE 2024), Hua Huang (Ph.D. CSE 2024), and Ph.D. student Boqin Zhang led the algorithm’s development. The project was the culmination of work for Shah and Huang, who completed their degrees this summer. John E. Pask, a physicist at Lawrence Livermore National Laboratory, joined the Tech researchers on the work.

Shah, Huang, Zhang, Suryanarayana, and Chow are among more than 50 students, faculty, research scientists, and alumni affiliated with Georgia Tech who are scheduled to give more than 30 presentations at SC24. The experts will present their research through papers, posters, panels, and workshops.

SC24 takes place Nov. 17-22 at the Georgia World Congress Center in Atlanta.

“The project’s success came from combining expertise from people with diverse backgrounds ranging from numerical methods to chemistry and materials science to high-performance computing,” Chow said.

“We could not have achieved this as individual teams working alone.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Oct. 30, 2024

The National Science Foundation (NSF) awarded a syndicate of eight Southeast universities — with Georgia Tech as the lead — a $15 million grant to support the development of a regional innovation ecosystem that addresses underrepresentation and increases entrepreneurship and technology-oriented workforce development.

The NSF Innovation Corps (I-Corps) Southeast Hub is a five-year project based on the I-Corps model, which assists academics in moving their research from the lab to the market.

Led by Georgia Tech’s Office of Commercialization and Enterprise Innovation Institute, the NSF I-Corps Southeast Hub encompasses four states — Georgia, Florida, South Carolina, and Alabama.

Its member schools include:

- Clemson University

- Morehouse College

- University of Alabama

- University of Central Florida

- University of Florida

- University of Miami

- University of South Florida

In January 2025, when the NSF I-Corps Southeast Hub officially launches, the consortium of schools will expand to include the University of Puerto Rico. Additionally, through Morehouse College’s activation, Spelman College and the Morehouse School of Medicine will also participate in supporting the project.

With a combined economic output of more than $3.2 trillion, the NSF I-Corps Southeast Hub region represents more than 11% of the entire U.S. economy. As a region, those states and Puerto Rico have a larger economic output than France, Italy, or Canada.

“This is a great opportunity for us to engage in regional collaboration to drive innovation across the Southeast to strengthen our regional economy and that of Puerto Rico,” said the Enterprise Innovation Institute’s Nakia Melecio, director of the NSF I-Corps Southeast Hub. As director, Melecio will oversee strategic management, data collection, and overall operations.

Additionally, Melecio serves as a national faculty instructor for the NSF I-Corps program.

“This also allows us to collectively tackle some of the common challenges all four of our states face, especially when it comes to being intentionally inclusive in reaching out to communities that historically haven’t always been invited to participate,” he said.

That means bringing solutions to market that not only solve problems but are intentional about including researchers from Black and Hispanic-serving institutions, Melecio said.

Keith McGreggor, director of Georgia Tech’s VentureLab, is the faculty lead charged with designing the curriculum and instruction for the NSF I-Corps Southeast Hub’s partners.

McGreggor has extensive I-Corps experience. In 2012, Georgia Tech was among the first institutions in the country selected to teach the I-Corps curriculum, which aims to further research commercialization. McGreggor served as the lead instructor for I-Corps-related efforts and led training efforts across the Southeast, as well as for teams in Puerto Rico, Mexico, and the Republic of Ireland.

Raghupathy “Siva” Sivakumar, Georgia Tech’s vice president of Commercialization and chief commercialization officer, is the project’s principal investigator.

The NSF I-Corps Southeast Hub is one of three announced by the NSF. The others are in the Northwest and New England regions, led by the University of California, Berkeley, and the Massachusetts Institute of Technology, respectively. The three I-Corps Hubs are part of the NSF’s planned expansion of its National Innovation Network, which now includes 128 colleges and universities across 48 states.

As designed, the NSF I-Corps Southeast Hub will leverage its partner institutions’ strengths to break down barriers that slow researchers’ lab-to-market commercialization.

“Our Hub member institutions have successfully commercialized transformative technologies across critical sectors, including advanced manufacturing, renewable energy, cybersecurity, and biomedical fields,” said Sivakumar. “We aim to achieve two key objectives: first, to establish and expand a scalable model that effectively translates research into viable commercial ventures; and second, to address pressing societal needs.

“This includes not only delivering innovative solutions but also cultivating a diverse pipeline of researchers and innovators, thereby enhancing interest in STEM fields (science, technology, engineering, and mathematics).”

U.S. Rep. Nikema Williams, D-Atlanta, is a proponent of the Hub’s STEM component.

“As a biology major-turned-congresswoman, I know firsthand that STEM education and research open doors far beyond the lab or classroom,” Williams said. “This National Science Foundation grant means Georgia Tech will be leading the way in equipping researchers and grad students to turn their discoveries into real-world impact, as innovators, entrepreneurs, and business leaders.

“I’m especially excited about the partnership with Morehouse College and other minority-serving institutions through this Hub, expanding pathways to innovation and entrepreneurship for historically marginalized communities and creating one more tool to close the racial wealth gap.”

That STEM aspect, coupled with supporting the growth of a regional ecosystem, will speed commercialization, increase higher education-industry collaborations, and boost the network of diverse entrepreneurs and startup founders, said David Bridges, vice president of the Enterprise Innovation Institute.

“This multi-university, regional approach is a successful model because it has been proven that bringing a diversity of stakeholders together leads to unique solutions to very difficult problems,” he said. “And while the Southeast faces different challenges that vary from state to state and Puerto Rico has its own needs, they call for a more comprehensive approach to solving them. Adopting a region-oriented focus allows us to understand what these needs are, customize tailored solutions, and keep not just our hub but our nation economically competitive.”

News Contact

Péralte C. Paul

peralte@gatech.edu

404.316.1210

Oct. 24, 2024

The U.S. Department of Energy (DOE) has awarded Georgia Tech researchers a $4.6 million grant to develop improved cybersecurity protection for renewable energy technologies.

Associate Professor Saman Zonouz will lead the project and leverage the latest artificial intelligence (AI) technology to create Phorensics. The new tool will anticipate cyberattacks on critical infrastructure and give analysts an accurate reading of which vulnerabilities were exploited.

“This grant enables us to tackle one of the crucial challenges facing national security today: our critical infrastructure resilience and post-incident diagnostics to restore normal operations in a timely manner,” said Zonouz.

“Together with our amazing team, we will focus on cyber-physical data recovery and post-mortem forensics analysis after cybersecurity incidents in emerging renewable energy systems.”

As the integration of renewable energy technology into national power grids increases, so does their vulnerability to cyberattacks. These threats put energy infrastructure at risk and pose a significant danger to public safety and economic stability. The AI behind Phorensics will allow analysts and technicians to scale security efforts to keep up with a growing power grid that is becoming more complex.

This effort is part of the Security of Engineering Systems (SES) initiative at Georgia Tech’s School of Cybersecurity and Privacy (SCP). SES has three pillars: research, education, and testbeds, with multiple ongoing large, sponsored efforts.

“We had a successful hiring season for SES last year and will continue filling several open tenure-track faculty positions this upcoming cycle,” said Zonouz.

“With top-notch cybersecurity and engineering schools at Georgia Tech, we have begun the SES journey with a dedicated passion to pursue building real-world solutions to protect our critical infrastructures, national security, and public safety.”

Zonouz is the director of the Cyber-Physical Systems Security Laboratory (CPSec) and is jointly appointed by Georgia Tech’s School of Cybersecurity and Privacy (SCP) and the School of Electrical and Computer Engineering (ECE).

The three Georgia Tech researchers joining him on this project are Brendan Saltaformaggio, associate professor in SCP and ECE; Taesoo Kim, jointly appointed professor in SCP and the School of Computer Science; and Animesh Chhotaray, research scientist in SCP.

Katherine Davis, associate professor at the Texas A&M University Department of Electrical and Computer Engineering, has partnered with the team to develop Phorensics. The team will also collaborate with the National Renewable Energy Laboratory (NREL) and industry partners on technology transfer and commercialization initiatives.

The Energy Department defines renewable energy as energy from unlimited, naturally replenished resources, such as the sun, tides, and wind. Renewable energy can be used for electricity generation, space and water heating and cooling, and transportation.

News Contact

John Popham

Communications Officer II

College of Computing | School of Cybersecurity and Privacy

Oct. 22, 2024

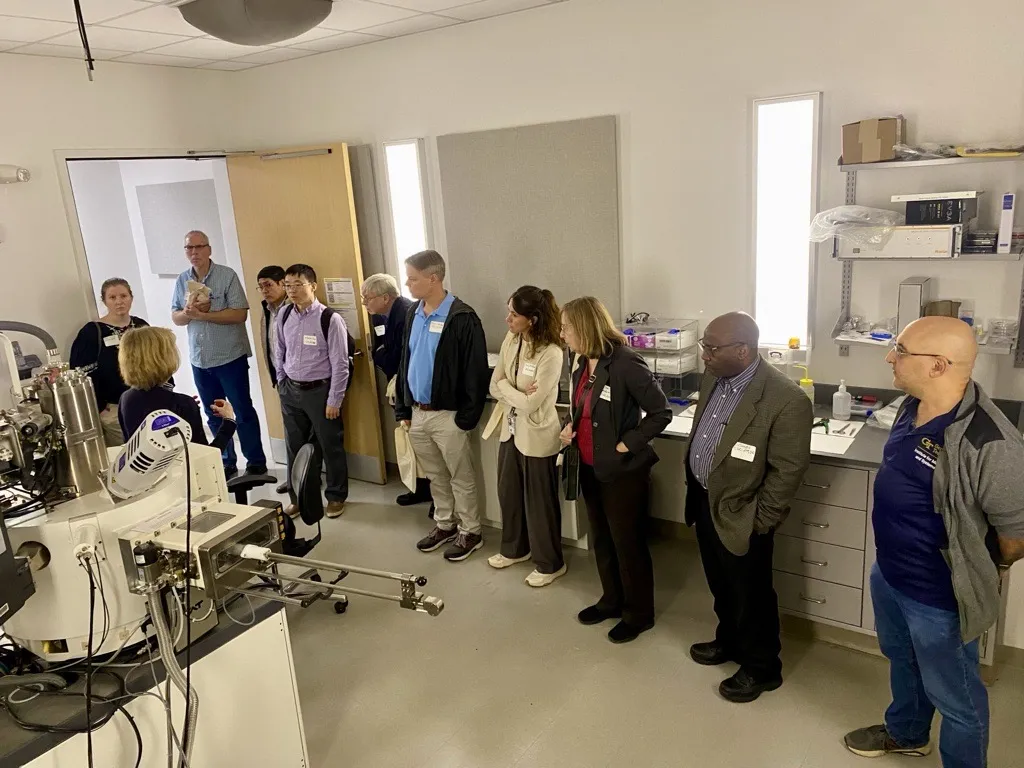

The Institute for Matter and Systems (IMS) held its opening showcase on October 15, 2024, in the Marcus Nanotechnology Building at Georgia Tech.

“We're trying to link people from fundamental science through materials, measurements, modelling software, systems, economics, and public policy,” said Eric Vogel, IMS executive director.

Vogel noted that IMS does this in four ways: through research support, fabrication and characterization core facilities, education and outreach programs, and strategic external engagement.

The Institute for Matter and Systems arose from the union of the Institute for Electronics and Nanotechnology and the Institute for Materials. These two interdisciplinary research institutes each focused on a major national priority: the National Nanotechnology Initiative and the Materials Genome Initiative, respectively. The work done by IMS researchers lies at the intersection of technology, innovation, and science, with a focus on creating technological and societal transformation through devices, processes, and components.

The event featured the second annual Oliver Brand Memorial Lectureship on Electronics and Nanotechnology. The lecture was presented by Michael Strano, whose research focuses on micro-robotics.

After the lecture, guests were invited to explore IMS’s research centers and facilities. Walking tours of the micro/nano fabrication cleanroom and material characterization facility showcased the core facilities available to those who engage with IMS. Booths featuring IMS-supported research centers allowed guests to explore the breadth of research activities happening within the institute.
