Nov. 18, 2025

Viral videos abound with humanoid robots performing amazing feats of acrobatics and dance, but videos of a humanoid robot performing a common household task or easily traversing a new multi-terrain environment without human control are much rarer. This is because training humanoid robots to perform these seemingly simple functions relies on simulation training data that lack the complex dynamics and degrees of freedom of motion inherent in humanoid robots.

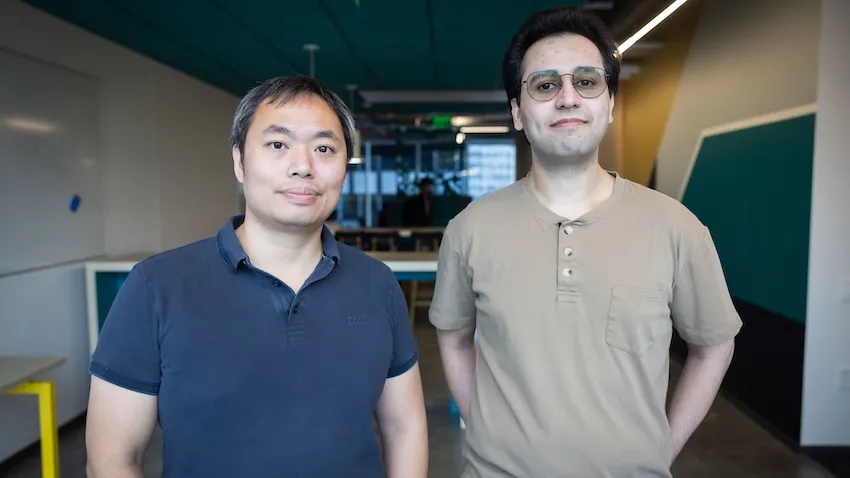

To achieve better training outcomes with faster deployment results, Fukang Liu and Feiyang Wu, graduate students advised by Professor Ye Zhao of the Woodruff School of Mechanical Engineering, a faculty member of the Institute for Robotics and Intelligent Machines (IRIM), have published a pair of papers in IEEE Robotics and Automation Letters. The work is a collaboration with three other IRIM-affiliated faculty members, Profs. Danfei Xu, Yue Chen, and Sehoon Ha, as well as Prof. Anqi Wu from the School of Computational Science and Engineering.

To make motion learning more reliable and enable humanoid robots to perform complex whole-body movements in the real world, Fukang led a team that developed Opt2Skill, a hybrid robot learning framework that combines model-based trajectory optimization with reinforcement learning (RL). Their framework integrates dynamics and contacts into the trajectory planning process and generates high-quality, dynamically feasible datasets, which result in improved position tracking and task success rates. This approach shows a promising way to augment the performance and generalization of humanoid RL policies using dynamically feasible motion datasets. Incorporating torque data also improved motion stability and force tracking in contact-rich scenarios, demonstrating that torque information plays a key role in learning physically consistent and contact-rich humanoid behaviors.

“While other datasets, such as inverse kinematics or human demonstrations, are valuable, they don’t always capture the dynamics needed for reliable whole-body humanoid control,” said Fukang Liu. “With our Opt2Skill framework, we combine trajectory optimization with reinforcement learning to generate and leverage high-quality, dynamically feasible motion data. This integrated approach gives robots a richer and more physically grounded training process, enabling them to learn these complex tasks more reliably and safely for real-world deployment.”

In another line of humanoid research, Feiyang established a one-stage training framework that allows humanoid robots to learn locomotion more efficiently and with greater environmental adaptability. Traditional two-stage “teacher-student” approaches first train an expert in simulation and then retrain a limited-perception student. Their framework, Learn-to-Teach (L2T), instead trains both simultaneously, sharing knowledge and experiences in real time. The result of this two-way training is a 50% reduction in training data and time, while maintaining or surpassing state-of-the-art performance in humanoid locomotion. The lightweight policy learned through this process enables the lab’s humanoid robot to traverse more than a dozen real-world terrains, including grass, gravel, sand, stairs, and slopes, without retraining or depth sensors.

“By training an expert and a deployable controller together, we can turn rich simulation feedback into a lightweight policy that runs on real hardware, letting our humanoid adapt to uneven, unstructured terrain with far less data and hand-tuning than traditional methods.” - Feiyang Wu

By applying these training processes, the team hopes to speed the development of deployable humanoid robots for home use, manufacturing, defense, and search and rescue assistance in dangerous environments. These methods also support advances in embodied intelligence, enabling robots to learn richer, more context-aware behaviors. Additionally, the training data process can be applied to research that improves the functionality and adaptability of human assistive devices for medical and therapeutic uses.

“As humanoid robots move from controlled labs into messy, unpredictable real-world environments, the key is developing embodied intelligence, the ability for robots to sense, adapt, and act through their physical bodies,” said Professor Ye Zhao. “The innovations from our students push us closer to robots that can learn robust skills, navigate diverse terrains, and ultimately operate safely and reliably alongside people.”

Author - Christa M. Ernst

Citations

Liu F, Gu Z, Cai Y, Zhou Z, Jung H, Jang J, Zhao S, Ha S, Chen Y, Xu D, Zhao Y. Opt2skill: Imitating dynamically-feasible whole-body trajectories for versatile humanoid loco-manipulation. IEEE Robotics and Automation Letters. 2025 Oct 13.

Wu F, Nal X, Jang J, Zhu W, Gu Z, Wu A, Zhao Y. Learn to teach: Sample-efficient privileged learning for humanoid locomotion over real-world uneven terrain. IEEE Robotics and Automation Letters. 2025 Jul 23.


Nov. 17, 2025

“How will AI kill Creature?”

That was the question posed to Scheller College of Business Evening MBA students Katie Bowen (’25), Ellie Cobb (’26), and Christopher Jones (’26) in a marketing practicum course that paired them with Creature, a brand, product, and marketing transformation studio.

For 10 weeks, the students worked as consultants on a project that challenged them to rethink the role of artificial intelligence in creative industries. Course instructor Jarrett Oakley, director of Marketing at TOTO USA, guided the students as they developed strategies to help Creature navigate the evolving landscape of AI-driven marketing.

Business School Meets Real Business

“Nothing accelerates the value of a business school education like applying it in real time to real businesses,” Oakley said. “This course mirrored a consulting engagement, turning classroom learning into actionable expertise through direct collaboration with local firms. It was designed to spark creative thinking, build confidence, and bridge theory with practice.”

What began as a traditional strategic analysis quickly evolved into a forward-looking exploration of AI’s impact on branding, user experience, and performance creative. “Our team realized early on that AI wasn’t a threat but a powerful tool,” the students shared. “We found that AI’s real impact lies not in replacing creativity, but in reshaping expectations, accelerating timelines, and redefining performance standards. It also gives forward-thinking agencies like Creature the opportunity to guide clients still catching up to the AI curve.”

Creature’s founders, Margaret Strickland and Matt Berberian, welcomed the collaboration. “We solve creative challenges across brand, product, and performance,” said Strickland. “AI is transforming each of these areas. The students helped us see how to stay ahead of the curve.”

Students applied frameworks like SWOT, Porter’s Five Forces, and the G-STIC model to diagnose challenges and develop actionable strategies. Weekly meetings with Creature allowed for iterative feedback and refinement.

One of the team’s most surprising insights came from primary research: many agencies hesitate to disclose their use of AI, fearing clients will demand lower prices. “We recommended Creature define and share their AI philosophy,” said the students. “Clients want transparency and innovation, and they’ll choose partners who embrace AI, not hide from it.”

Creature took the advice to heart. Since the project concluded, the firm has launched a new AI consulting offering, SNSE by Creature, and implemented automation across operations, resulting in a 21% boost in efficiency. They’ve also adopted an AI manifesto to guide future initiatives.

A Transformative Student Experience

Katie Bowen, Evening MBA ’25

“This project let us apply MBA concepts to a real-world business challenge. We dove into Creature’s business and tailored our analysis to their needs. It pushed us to think critically about how companies stay competitive when AI tools are widely accessible. Using strategy, innovation, and marketing frameworks, we bridged theory and practice to deliver forward-looking recommendations.”

Ellie Cobb, Evening MBA ’26

“This project strengthened my ability to use AI effectively in both personal and professional contexts—not just knowing how to use it, but when not to. Exploring such a fast-evolving topic made me more agile and open-minded, ready to follow where research and emerging trends lead.”

Christopher Jones, Evening MBA ’26

“The Marketing Practicum with Creature was an eye-opening experience that deepened my understanding of AI’s impact on business. It sharpened my critical thinking as I navigated conflicting information about AI, and gave me practical insight into business strategy, from integrating new technology to managing innovation and diversifying product offerings.”

Education With Impact

Oakley believes the practicum will have lasting impact. “These students now understand how traditional marketing strategy integrates with emerging AI capabilities. They’re ready to lead in a rapidly evolving industry.”

As AI continues to reshape marketing, partnerships like the one between Scheller and Creature demonstrate the power of collaboration, innovation, and education in preparing future leaders for whatever comes next.

News Contact

Kristin Lowe (She/Her)

Content Strategist

Georgia Institute of Technology | Scheller College of Business

kristin.lowe@scheller.gatech.edu

Nov. 14, 2025

311 chatbots make it easier for people to report issues to their local government without long wait times on the phone. However, a new study finds that the technology might inhibit civic engagement.

311 systems allow residents to report potholes, broken fire hydrants, and other municipal issues. In recent years, the use of artificial intelligence (AI) to provide 311 services to community residents has boomed across city and state governments. This includes an artificial virtual assistant (AVA) developed by third-party vendors for the City of Atlanta in 2023.

Through survey data, researchers from Georgia Tech’s School of Interactive Computing found that many residents are generally positive about 311 chatbots. In addition to eliminating long wait times over the phone, the chatbots offer residents quick answers to questions about permit applications, waste collection, and other common topics.

However, the study, which was conducted in Atlanta, indicates that 311 chatbots could be causing residents to feel isolated from public officials and less aware of what’s happening in their community.

Jieyu Zhou, a Ph.D. student in the School of IC, said it doesn’t have to be that way.

Uniting Communities

Zhou and her advisor, Assistant Professor Christopher MacLellan, published a paper at the 2025 ACM Designing Interactive Systems (DIS) Conference that focuses on improving public service chatbot design and amplifying their civic impact. They collaborated with Professor Carl DiSalvo, Associate Professor Lynn Dombrowski, and graduate students Rui Shen and Yue You.

Zhou said 311 chatbots have the potential to be agents that drive community organization and improve quality of life.

“Current chatbots risk isolating users in their own experience,” Zhou said. “In the 311 system, people tend to report their own individual issues but lose a sense of what is happening in their broader community.

“People are very positive about these tools, but I think there’s an opportunity as we envision what civic chatbots could be. It’s important for us to emphasize that social element — engaging people within the community and connecting them with government representatives, community organizers, and other community members.”

Zhou and MacLellan said 311 chatbots can leave users wondering if others in their communities share their concerns.

“If people are at a town hall meeting, they can get a sense of whether the problems they are experiencing are shared by others,” Zhou said. “We can’t do that with a chatbot. It’s like an isolated room, and we’re trying to open the doors and the windows.”

Adding a Human Touch

In their paper, the researchers note that one of the biggest criticisms of 311 chatbots is they can’t replace interpersonal interaction.

Unlike chatbots, people working in local government offices are likely to:

- Have direct knowledge of issues

- Provide appropriate referrals

- Empathize with the resident’s concerns

MacLellan said residents are likely to grow frustrated with a chatbot when reporting issues that require this level of contextual knowledge.

One person in the researchers’ survey noted that the chatbot they used didn’t understand that their report was about a sidewalk issue, not a street issue.

“Explaining such a situation to a human representative is straightforward,” MacLellan said. “However, when the issue being raised does not fall within any of the categories the chatbot is built to address, it often misinterprets the query and offers information that isn’t helpful.”

The researchers offer some design suggestions that can help chatbots foster community engagement and improve community well-being:

- Escalation. Regarding the sidewalk report, the chatbot did not offer a way to escalate the query to a human who could resolve it. Zhou said that this is a feature that chatbots should have but often lack.

- Transparency. Chatbots could provide details about recent and frequently reported community issues. They should inform users early in the call process about known problems to help avoid an overload of user complaints.

- Education. Chatbots can keep users updated about what’s happening in their communities.

- Collective action. Chatbots can help communities organize and gather ideas to address challenges and solve problems.

“Government agencies may focus mainly on fixing individual issues,” Zhou said. “But recognizing community-level patterns can inspire collective creativity. For example, one participant suggested that if many people report a broken swing at a playground, it could spark an initiative to design a new playground together, going far beyond just fixing it.”

These are just a few examples of things, the researchers argue, that 311 services were originally designed to achieve.

“Communities were already collaborating on identifying and reporting issues,” Zhou said. “These chatbots should reflect the original intentions and collaboration practices of the communities they serve.

“Our research suggests we can increase the positive impact of civic chatbots by including social aspects within the design of the system, connecting people, and building a community view.”

Nov. 12, 2025

One of the top conferences for AI and computer games is recognizing a School of Interactive Computing professor with its first-ever test-of-time award.

At its event this week in Alberta, Canada, the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment (AIIDE) is honoring Professor Mark Riedl. The award also honors University of Utah Professor and Division of Games Chair Michael Young, Riedl’s Ph.D. advisor.

Riedl studied under Young at North Carolina State University.

Their 2005 paper, From Linear Story Generation to Branching Story Graphs, highlighted the challenges of using AI to create interactive gaming narratives in which user actions influence the story’s progression.

In 2005, computer game systems that supported linear, non-branching games were widely used. Riedl introduced an innovative mathematical formula for interactive stories ranging from choose-your-own-adventure novels to modern computer games.

“We didn’t use the term ‘generative AI’ back then, but I was working on AI for the generation of creative artifacts,” Riedl said. “This was before we had practical deep learning or large language models.

“One of the reasons this paper is still relevant 20 years later is that it didn’t just present a technology, it attempted to provide a framework for solving a grand challenge in AI.”

That challenge is still ongoing, Riedl said. Game designers continue to struggle with balancing story coherence against the amount of narrative control afforded to users.

“When users exercise a high degree of control within the environment, it is likely that their actions will change the state of the world in ways that may interfere with the causal dependencies between actions as intended within a storyline,” Riedl and Young wrote in the paper.

“Narrative mediation makes linear narratives interactive. The question is: Is the expressive power of narrative mediation at least as powerful as the story graph representation?”

AIIDE is being held this week at the University of Alberta in Edmonton, Alberta. Riedl will receive the award on Wednesday.

Nov. 03, 2025

A new deep learning architectural framework could boost the development and deployment efficiency of autonomous vehicles and humanoid robots. The framework will lower training costs and reduce the amount of real-world data needed for training.

World foundation models (WFMs) enable physical AI systems to learn and operate within synthetic worlds created by generative artificial intelligence (genAI). For example, these models use predictive capabilities to generate up to 30 seconds of video that accurately reflects the real world.

The new framework, developed by a Georgia Tech researcher, enhances the processing speed of the neural networks that simulate these real-world environments from text, images, or video inputs.

The neural networks that make up the architectures of large language models like ChatGPT and visual models like Sora process contextual information using the “attention mechanism.”

Attention refers to a model’s ability to focus on the most relevant parts of input.

The Neighborhood Attention Extension (NATTEN) allows models that require GPUs or high-performance computing systems to process information and generate outputs more efficiently.
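To make the idea concrete, here is a minimal, illustrative sketch in plain Python. It is not NATTEN's API or implementation. It compares full attention, where every token is scored against every other token, with a simple one-dimensional neighborhood pattern, where each token is scored only against tokens within a fixed radius. The function names, the `radius` parameter, and the clipping of windows at the sequence edges are all assumptions made for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, radius=None):
    """Scaled dot-product attention over a 1-D sequence of vectors.

    radius=None: each query is scored against every key (full attention).
    radius=r:    each query is scored only against keys at most r positions
                 away (a toy neighborhood pattern, clipped at the edges).
    Returns the outputs and the number of query-key pairs that were scored.
    """
    n, dim = len(queries), len(queries[0])
    outputs, pairs_scored = [], 0
    for i, query in enumerate(queries):
        lo = 0 if radius is None else max(0, i - radius)
        hi = n if radius is None else min(n, i + radius + 1)
        scores = [
            sum(q * k for q, k in zip(query, keys[j])) / math.sqrt(dim)
            for j in range(lo, hi)
        ]
        weights = softmax(scores)
        pairs_scored += hi - lo
        outputs.append([
            sum(w * values[j][c] for w, j in zip(weights, range(lo, hi)))
            for c in range(len(values[0]))
        ])
    return outputs, pairs_scored


# Hypothetical input: 8 tokens, each a 2-dimensional vector.
tokens = [[math.sin(i), math.cos(i)] for i in range(8)]
_, full_pairs = attention(tokens, tokens, tokens)
_, local_pairs = attention(tokens, tokens, tokens, radius=1)
print(full_pairs, local_pairs)  # 64 22
```

In this toy setting, restricting each token to its immediate neighbors cuts the number of scored pairs from 64 to 22. The sketch only counts work; it says nothing about actual GPU speedups, which depend on how the computation is implemented in hardware-specific kernels.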

Processing speeds can increase by up to 2.6 times, said Ali Hassani, a Ph.D. student in the School of Interactive Computing and the creator of NATTEN. Hassani is advised by Associate Professor Humphrey Shi.

Hassani is also a research scientist at Nvidia, where he introduced NATTEN to Cosmos — a family of WFMs the company uses to train robots, autonomous vehicles, and other physical AI applications.

“You can map just about anything from a prompt or an image or any combination of frames from an existing video to predict future videos,” Hassani said. “Instead of generating words with an LLM, you’re generating a world.

“Unlike LLMs that generate a single token at a time, these models are compute-heavy. They generate many images — often hundreds of frames at a time — so the models put a lot of work on the GPU. NATTEN lets us decrease some of that work and proportionately accelerate the model.”

Sep. 03, 2025

Artificial intelligence is growing fast, and so is the number of computers that power it. Behind the scenes, this rapid growth is putting a huge strain on the data centers that run AI models. These facilities are using more energy than ever.

AI models are getting larger and more complex. Today’s most advanced systems have billions of parameters, the numerical values derived from training data, and run across thousands of computer chips. To keep up, companies have responded by adding more hardware, more chips, more memory and more powerful networks. This brute force approach has helped AI make big leaps, but it’s also created a new challenge: Data centers are becoming energy-hungry giants.

Some tech companies are responding by looking to power data centers on their own with fossil fuel and nuclear power plants. AI energy demand has also spurred efforts to make more efficient computer chips.

I’m a computer engineer and a professor at Georgia Tech who specializes in high-performance computing. I see another path to curbing AI’s energy appetite: Make data centers more resource aware and efficient.

Energy and Heat

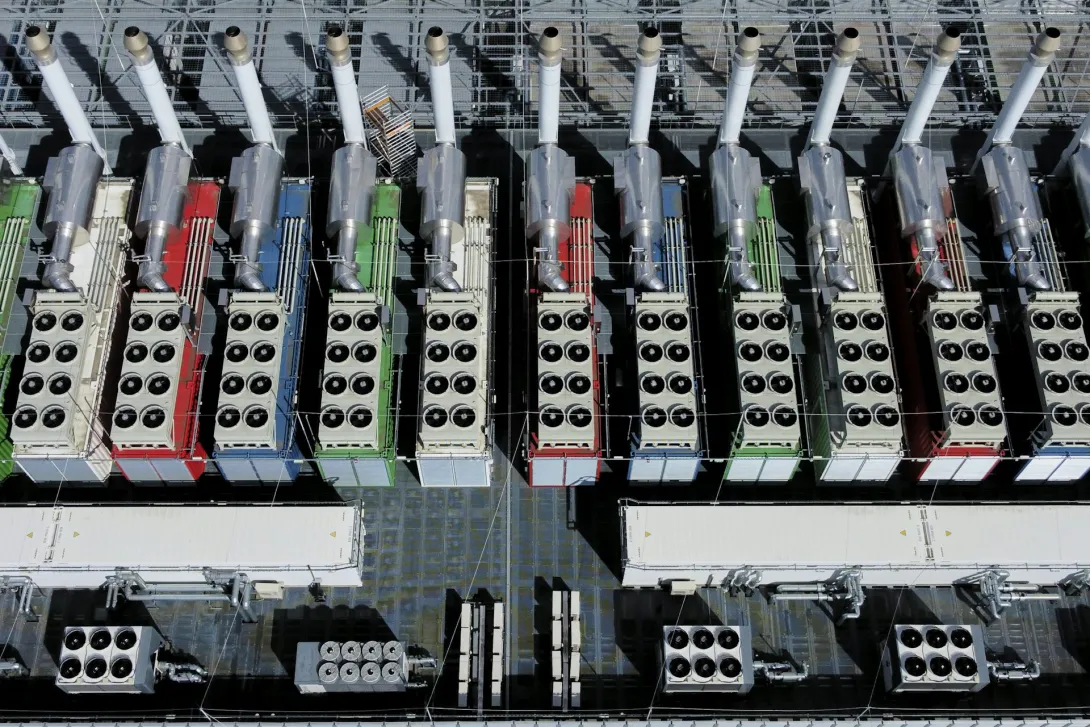

Modern AI data centers can use as much electricity as a small city. And it’s not just the computing that eats up power. Memory and cooling systems are major contributors, too. As AI models grow, they need more storage and faster access to data, which generates more heat. Also, as the chips become more powerful, removing heat becomes a central challenge.

Data centers house thousands of interconnected computers. Alberto Ortega/Europa Press via Getty Images

Cooling isn’t just a technical detail; it’s a major part of the energy bill. Traditional cooling is done with specialized air conditioning systems that remove heat from server racks. New methods like liquid cooling are helping, but they also require careful planning and water management. Without smarter solutions, the energy requirements and costs of AI could become unsustainable.

Even with all this advanced equipment, many data centers aren’t running efficiently. That’s because different parts of the system don’t always talk to each other. For example, scheduling software might not know that a chip is overheating or that a network connection is clogged. As a result, some servers sit idle while others struggle to keep up. This lack of coordination can lead to wasted energy and underused resources.

A Smarter Way Forward

Addressing this challenge requires rethinking how to design and manage the systems that support AI. That means moving away from brute-force scaling and toward smarter, more specialized infrastructure.

Here are three key ideas:

Address variability in hardware. Not all chips are the same. Even within the same generation, chips vary in how fast they operate and how much heat they can tolerate, leading to heterogeneity in both performance and energy efficiency. Computer systems in data centers should recognize differences among chips in performance, heat tolerance and energy use, and adjust accordingly.

Adapt to changing conditions. AI workloads vary over time. For instance, thermal hotspots on chips can trigger the chips to slow down, fluctuating grid supply can cap the peak power that centers can draw, and bursts of data between chips can create congestion in the network that connects them. Systems should be designed to respond in real time to things like temperature, power availability and data traffic.

Break down silos. Engineers who design chips, software and data centers should work together. When these teams collaborate, they can find new ways to save energy and improve performance. To that end, my colleagues, students and I at Georgia Tech’s AI Makerspace, a high-performance AI data center, are exploring these challenges hands-on. We’re working across disciplines, from hardware to software to energy systems, to build and test AI systems that are efficient, scalable and sustainable.
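As a rough illustration of the first two ideas, and not a description of any system at Georgia Tech, the sketch below shows a scheduler that looks at each chip's current temperature and energy cost before placing work. The `Chip` fields, the `pick_chip` function, and the 10-degree headroom threshold are hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    name: str
    temp_c: float           # current temperature reading
    max_temp_c: float       # temperature at which this chip starts to throttle
    joules_per_task: float  # energy this chip spends on one unit of work
    busy: bool = False


def pick_chip(chips, min_headroom_c=10.0):
    """Pick the most energy-efficient idle chip that is not close to throttling.

    Returns None when every chip is busy or too hot, so the caller can wait
    instead of piling work onto a chip that is about to slow down.
    """
    candidates = [
        c for c in chips
        if not c.busy and c.max_temp_c - c.temp_c >= min_headroom_c
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda c: c.joules_per_task)


# Hypothetical rack: chip "a" is the most efficient but is running hot.
chips = [
    Chip("a", temp_c=88.0, max_temp_c=90.0, joules_per_task=1.0),
    Chip("b", temp_c=60.0, max_temp_c=90.0, joules_per_task=1.4),
    Chip("c", temp_c=65.0, max_temp_c=95.0, joules_per_task=1.2),
]
chosen = pick_chip(chips)
print(chosen.name)  # c
```

A scheduler that ignored temperature would send the job to chip "a" because it is nominally the most efficient. The sketch skips it because it has only 2 degrees of headroom and picks the next most efficient chip instead.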

Scaling With Intelligence

AI has the potential to transform science, medicine, education and more, but risks hitting limits on performance, energy and cost. The future of AI depends not only on better models, but also on better infrastructure.

To keep AI growing in a way that benefits society, I believe it’s important to shift from scaling by force to scaling with intelligence.

This article is republished from The Conversation under a Creative Commons license. Read the original article.

News Contact

Author:

Divya Mahajan, assistant professor of Computer Engineering, Georgia Institute of Technology

Media Contact:

Shelley Wunder-Smith

shelley.wunder-smith@research.gatech.edu

Sep. 02, 2025

A new version of Georgia Tech’s virtual teaching assistant, Jill Watson, has demonstrated that artificial intelligence can significantly improve the online classroom experience. Developed by the Design Intelligence Laboratory (DILab) and the U.S. National Science Foundation AI Institute for Adult Learning and Online Education (AI-ALOE), the latest version of Jill Watson integrates OpenAI’s ChatGPT and is outperforming OpenAI’s own assistant in real-world educational settings.

Jill Watson not only answers student questions with high accuracy; it also improves teaching presence and correlates with better academic performance. Researchers believe this is the first documented instance of a chatbot enhancing teaching presence in online learning for adult students.

How Jill Watson Shaped Intelligent Teaching Assistants

First introduced in 2016 using IBM’s Watson platform, Jill Watson was the first AI-powered teaching assistant deployed in real classes. It began by responding to student questions on discussion forums like Piazza using course syllabi and a curated knowledge base of past Q&As. Widely covered by major media outlets including The Chronicle of Higher Education, The Wall Street Journal, and The New York Times, the original Jill pioneered new territory in AI-supported learning.

Subsequent iterations addressed early biases in the training data and transitioned to more flexible platforms like Google’s BERT in 2019, allowing Jill to work across learning management systems such as EdStem and Canvas. With the rise of generative AI, the latest version now uses ChatGPT to engage in extended, context-rich dialogue with students using information drawn directly from courseware, textbooks, video transcripts, and more.

Future of Personalized, AI-Powered Learning

Designed around the Community of Inquiry (CoI) framework, Jill Watson aims to enhance “teaching presence,” one of three key factors in effective online learning, alongside cognitive and social presence. Teaching presence includes both the design of course materials and facilitation of instruction. Jill supports this by providing accurate, personalized answers while reinforcing the structure and goals of the course.

The system architecture includes a preprocessed knowledge base, a MongoDB-powered memory for storing conversation history, and a pipeline that classifies questions, retrieves contextually relevant content, and moderates responses. Jill is built to avoid generating harmful content and only responds when sufficient verified course material is available.
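The flow described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Jill Watson's code: the knowledge base entries are invented, retrieval is plain word overlap, a Python list stands in for the conversation store, and a word-subset check stands in for response verification. The question classification step is omitted, and every name here (`retrieve`, `answer`, `quote_passage`, `REFUSAL`) is hypothetical.

```python
import re

STOPWORDS = {"a", "all", "and", "is", "of", "on", "the", "what", "when"}
REFUSAL = "I don't have enough verified course material to answer that."

# Hypothetical course passages standing in for a preprocessed knowledge base.
KNOWLEDGE_BASE = [
    {"source": "syllabus", "text": "Assignment 1 is due on Friday of week 3."},
    {"source": "syllabus", "text": "The final exam is open book and covers all modules."},
]


def tokenize(text):
    """Lowercase content words, ignoring a small stopword list."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(question, min_overlap=2):
    """Return the passage sharing the most words with the question, or None."""
    words = tokenize(question)
    best = max(KNOWLEDGE_BASE, key=lambda p: len(words & tokenize(p["text"])))
    return best if len(words & tokenize(best["text"])) >= min_overlap else None


def quote_passage(question, passage):
    """Default 'generator': quote the passage instead of calling a model."""
    return passage["text"]


def answer(question, history, generate=quote_passage):
    """Retrieve, draft, check the draft against the passage, then log the turn."""
    passage = retrieve(question)
    if passage is None:
        reply = REFUSAL  # not enough course material to ground an answer
    else:
        draft = generate(question, passage)
        # Crude grounding check: every content word must appear in the passage.
        supported = tokenize(draft) <= tokenize(passage["text"])
        reply = draft if supported else REFUSAL
    history.append({"question": question, "reply": reply})
    return reply


history = []
print(answer("When is assignment 1 due?", history))
print(answer("What is the capital of France?", history))
```

The first question is answered by quoting the syllabus passage. The second is refused because no passage overlaps with it. Passing a different `generate` function shows the check at work: a draft that says "Monday" when the passage says "Friday" is rejected rather than sent to the student.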

Field-Tested in Georgia and Beyond

The first AI-powered teaching assistant was developed for Georgia Tech’s Online Master of Science in Computer Science (OMSCS) program. By fall 2023, Jill Watson was deployed in Georgia Tech’s OMSCS artificial intelligence course, serving more than 600 students, as well as in an English course at Wiregrass Georgia Technical College, part of the Technical College System of Georgia (TCSG).

A controlled A/B experiment in the OMSCS course allowed researchers to compare outcomes between students with and without access to Jill Watson, even though all students could use ChatGPT. The findings are striking:

- Jill Watson’s accuracy on synthetic test sets ranged from 75% to 97%, depending on the content source. It consistently outperformed OpenAI’s Assistant, which scored around 30%.

- Students with access to Jill Watson showed stronger perceptions of teaching presence, particularly in course design and organization, as well as higher social presence.

- Academic performance also improved slightly: students with Jill saw more A grades (66% vs. 62%) and fewer C grades (3% vs. 7%).

A Smarter, Safer Chatbot

While Jill Watson uses ChatGPT for natural language generation, it restricts outputs to validated course material and verifies each response using textual entailment. According to a study by Taneja et al. (2024), Jill not only delivers more accurate answers than OpenAI’s Assistant but also produces confusing or harmful content at significantly lower rates.

The ChatGPT-based Jill Watson answers correctly 78.7% of the time, with only 2.7% of its errors categorized as harmful and 54.0% as confusing. In contrast, OpenAI’s Assistant demonstrates a much lower accuracy of 30.7%, with harmful failures occurring 14.4% of the time and confusing failures rising to 69.2%. Additionally, Jill Watson has a lower retrieval failure rate of 43.2%, compared to 68.3% for the OpenAI Assistant.

What’s Next for Jill

The team plans to expand testing across introductory computing courses at Georgia Tech and technical colleges. They also aim to explore Jill Watson’s potential to improve cognitive presence, particularly critical thinking and concept application. Although quantitative results for cognitive presence are still inconclusive, anecdotal feedback from students has been positive. One OMSCS student wrote:

“The Jill Watson upgrade is a leap forward. With persistent prompting I managed to coax it from explicit knowledge to tacit knowledge. Kudos to the team!”

The researchers also expect Jill to reduce instructional workload by handling routine questions and enabling more focus on complex student needs.

Additionally, AI-ALOE is collaborating with the publishing company John Wiley & Sons, Inc., to develop a Jill Watson virtual teaching assistant for one of their courses, with the instructor and university chosen by Wiley. If successful, this initiative could potentially scale to hundreds or even thousands of classes across the country and around the world, transforming the way students interact with course content and receive support.

A Georgia Tech-Led Collaboration

The Jill Watson project is supported by Georgia Tech, the US National Science Foundation’s AI-ALOE Institute (Grants #2112523 and #2247790), and the Bill & Melinda Gates Foundation.

Core team members are Saptrishi Basu, Jihou Chen, Jake Finnegan, Isaac Lo, JunSoo Park, Ahamad Shapiro and Karan Taneja, under the direction of professor Ashok Goel and Sandeep Kakar. The team works under Beyond Question LLC, an AI-based educational technology startup.

News Contact

Breon Martin

Aug. 20, 2025

Daniel Yue, assistant professor of IT Management at the Scheller College of Business, has been awarded the prestigious Best Dissertation Award by the Technology and Innovation Management Division of the Academy of Management. The recognition celebrates the most impactful doctoral research in the field of business and innovation.

Yue’s dissertation, developed during his Ph.D. at Harvard Business School, explores a paradox at the heart of the AI industry: why do firms openly share their innovations, like scientific knowledge, software, and models, despite the apparent lack of direct financial return? His work sheds light on the strategic and economic mechanisms that drive this openness, offering new frameworks for understanding how firms contribute to and benefit from shared technological progress.

“We typically think of firms as trying to capture value from their innovations,” Yue explained. “But in AI, we see companies freely publishing research and releasing open-source software. My dissertation investigates why this happens and what firms gain from it.”

News Contact

Kristin Lowe (She/Her)

Content Strategist

Georgia Institute of Technology | Scheller College of Business

kristin.lowe@scheller.gatech.edu

Aug. 25, 2025

Georgia Tech researchers have designed the first benchmark that tests how well existing AI tools can interpret advice from YouTube financial influencers, also known as finfluencers.

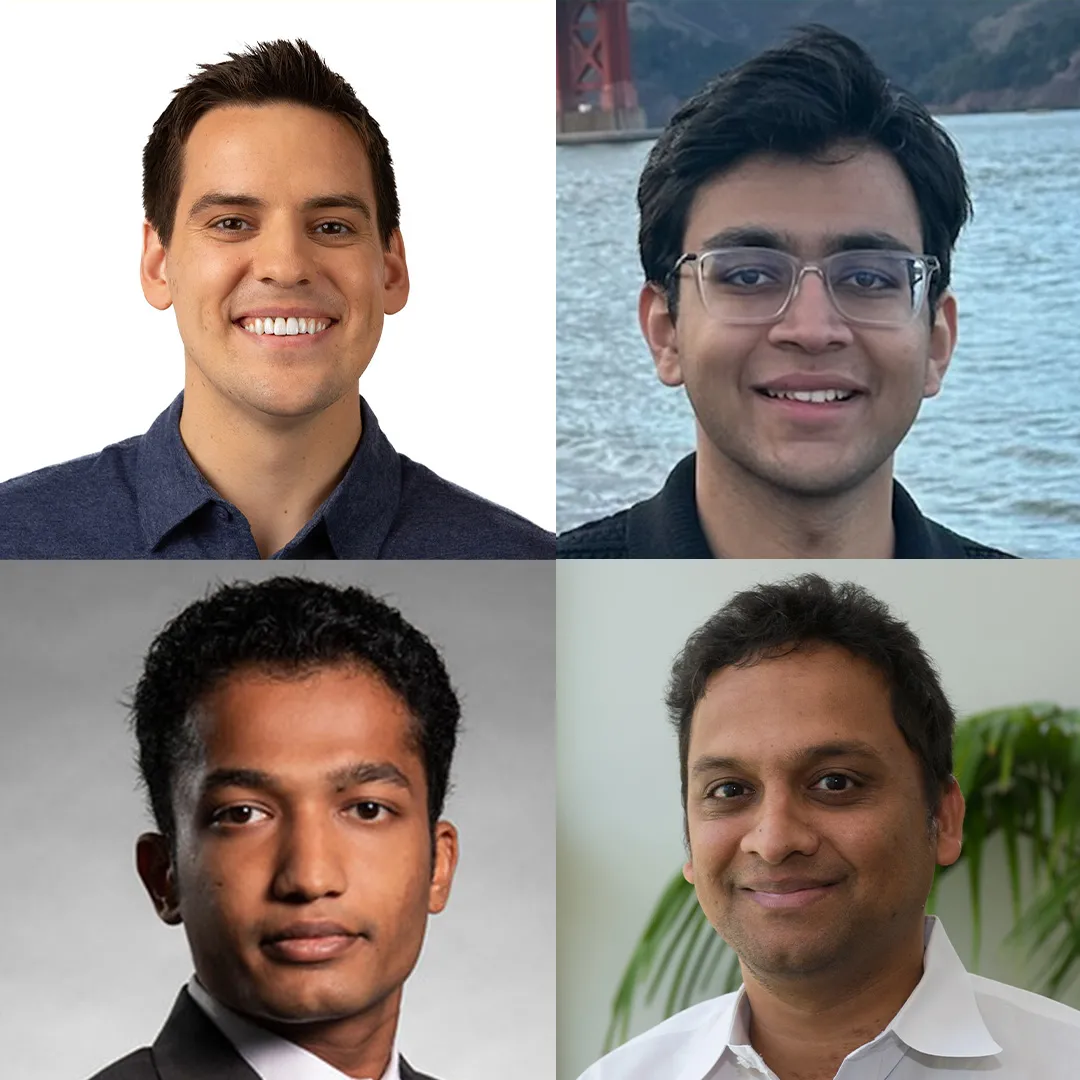

The lead authors are Michael Galarnyk, Ph.D. Machine Learning ’28; Veer Kejriwal, B.S. Computer Science ’25; and Agam Shah, Ph.D. Machine Learning ’26. Their co-authors are Yash Bhardwaj, École Polytechnique, M.S. Trustworthy and Responsible AI ’27; Nicholas Meyer, B.S. Electrical and Computer Engineering ’22 and Quantitative and Computational Finance ’24; Anand Krishnan, Stanford University, B.S. Computer Science ’27; and Sudheer Chava, Alton M. Costley Chair and professor of Finance at Georgia Tech.

Aptly named VideoConviction, the multimodal benchmark included hundreds of video clips. Experts labelled each clip with the influencer’s recommendation (buy, sell, or hold) and how strongly the influencer seemed to believe in their advice, based on tone, delivery, and facial expressions. The goal? To see how accurately AI can pick up on both the message and the conviction behind it.

“Our work shows that financial reasoning remains a challenge for even the most advanced models,” said Michael Galarnyk, lead author. “Multimodal inputs bring some improvement, but performance often breaks down on harder tasks that require distinguishing between casual discussion and meaningful analysis. Understanding where these models fail is a first step toward building systems that can reason more reliably in high-stakes domains.”

News Contact

Kristin Lowe (She/Her)

Content Strategist

Georgia Institute of Technology | Scheller College of Business

kristin.lowe@scheller.gatech.edu

Aug. 11, 2025

Team Atlanta, a group of Georgia Tech students, faculty, and alumni, achieved international fame on Friday when they won DARPA’s AI Cyber Challenge (AIxCC) and its $4 million grand prize.

AIxCC was a two-year-long competition to create a cyber reasoning system that uses artificial intelligence (AI) to autonomously find and patch vulnerabilities.

“This is a once in a generation competition organized by DARPA about how to utilize recent advancements in AI to use in security related tasks,” said Georgia Tech Professor Taesoo Kim.

“As hackers we started this competition as AI skeptics, but now we truly believe in the potential of adopting large language models (LLMs) when solving security problems.”

The Atlantis system was Team Atlanta’s submission. Atlantis is a fuzzer, automated software that finds vulnerabilities or bugs, which the team enhanced with several different types of LLMs.

While developing the system, Team Atlanta reported the heat put out by the GPU rack was hot enough to roast marshmallows.

The team was composed of hackers, engineers, and cybersecurity researchers. The Georgia Tech alumni on the team also represented their employers, which include KAIST, POSTECH, and Samsung Research. Kim is also the vice president of Samsung Research.

News Contact

John Popham

Communications Officer II at the School of Cybersecurity and Privacy
