Nov. 14, 2024

Georgia AIM (Artificial Intelligence in Manufacturing) was recently awarded the 'Tech for Good' award from the Technology Association of Georgia (TAG), the state’s largest tech organization.

The accolade was presented at the annual TAG Technology Awards ceremony on Nov. 6 at Atlanta’s Fox Theatre. The TAG Technology Awards promote inclusive technology throughout Georgia, and any state company, organization, or leader is eligible to apply.

Tech for Good, one of TAG’s five award categories, honors a program or project that uses technology to promote inclusiveness and equity by serving Georgia communities and individuals who are underrepresented in the tech space.

Georgia AIM comprises 16 projects across the state that connect smart technology to manufacturing through K-12 education, workforce development, and manufacturer outreach. The federally funded program is a collaborative project administered through Georgia Tech’s Enterprise Innovation Institute and the Georgia Tech Manufacturing Institute.

TAG is a Georgia AIM partner and provides workforce development programs that train people and assist them in making successful transitions into tech careers.

Donna Ennis, Georgia AIM’s co-director, accepted the award on behalf of the organization.

“Georgia AIM’s mission is to equitably develop and deploy talent and innovation for AI in manufacturing, and the Tech for Good Award reinforces our focus on revolutionizing the manufacturing economy for Georgia and the entire country,” Ennis said in her acceptance speech.

She cited the organization’s many coalition members across the state: the Technical College System of Georgia; Spelman College; the Georgia AIM Mobile Studio team at the Russell Innovation Center for Entrepreneurs and the University of Georgia; the Southwest Georgia Regional Commission; the Georgia Cyber Innovation & Training Center; and TAG and Georgia AIM’s partners in the Middle Georgia Innovation corridor, including 21st Century Partnership and the Houston Development Authority.

Ennis also acknowledged the U.S. Economic Development Administration for funding the project and helping to bring it to fruition. “But most of all,” she said, “I want to thank our manufacturers and communities across Georgia who are at the forefront of creating a new economy through AI in manufacturing. It is a privilege to assist you on this journey of technology and discovery.”


Nov. 13, 2024

Amino acids are essential for nearly every process in the human body. Often referred to as ‘the building blocks of life,’ they are also critical for commercial use in products ranging from pharmaceuticals and dietary supplements to cosmetics, animal feed, and industrial chemicals.

And while our bodies naturally make many amino acids, manufacturing them for commercial use can be costly, and the process often emits greenhouse gases like carbon dioxide (CO2).

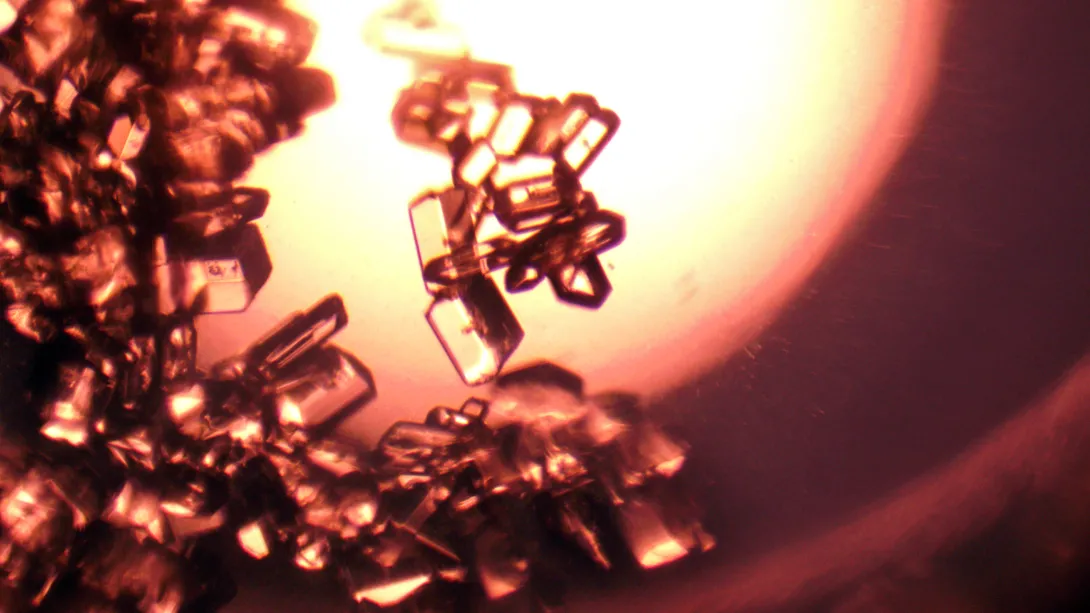

In a landmark study, a team of researchers has created a first-of-its-kind method for synthesizing amino acids that consumes more carbon than it emits. The research also takes steps toward making the system cost-effective and scalable for commercial use.

“To our knowledge, it’s the first time anyone has synthesized amino acids in a carbon-negative way using this type of biocatalyst,” says lead corresponding author Pamela Peralta-Yahya, who emphasizes that the system provides a win-win for industry and environment. “Carbon dioxide is readily available, so it is a low-cost feedstock — and the system has the added bonus of removing a powerful greenhouse gas from the atmosphere, making the synthesis of amino acids environmentally friendly, too.”

The study, “Carbon Negative Synthesis of Amino Acids Using a Cell-Free-Based Biocatalyst,” published today in ACS Synthetic Biology, is publicly available. The research was led by Georgia Tech in collaboration with the University of Washington, Pacific Northwest National Laboratory, and the University of Minnesota.

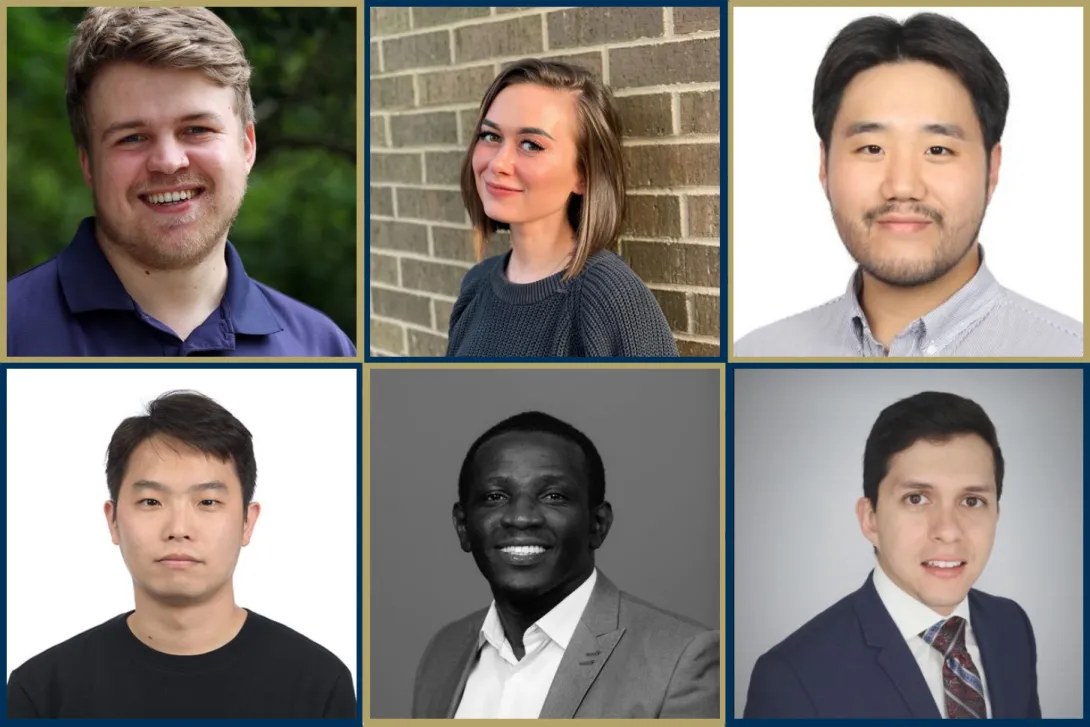

The Georgia Tech research contingent includes Peralta-Yahya, a professor with joint appointments in the School of Chemistry and Biochemistry and the School of Chemical and Biomolecular Engineering (ChBE); first author Shaafique Chowdhury, a Ph.D. student in ChBE; Ray Westenberg, a Ph.D. student in Bioengineering; and Georgia Tech alum Kimberly Wennerholm (B.S. ChBE ’23).

Costly chemicals

There are two key challenges to synthesizing amino acids on a large scale: the cost of materials, and the speed at which the system can generate amino acids.

While many living systems like cyanobacteria can synthesize amino acids from CO2, the rate at which they do it is too slow to be harnessed for industrial applications, and these systems can only synthesize a limited number of chemicals.

Currently, most commercial amino acids are made using bioengineered microbes. “These specially designed organisms convert sugar or plant biomass into fuel and chemicals,” explains first author Chowdhury, “but valuable food resources are consumed if sugar is used as the feedstock — and pre-processing plant biomass is costly.” These processes also release CO2 as a byproduct.

Chowdhury says the team was curious “if we could develop a commercially viable system that could use carbon dioxide as a feedstock. We wanted to build a system that could quickly and efficiently convert CO2 into critical amino acids, like glycine and serine.”

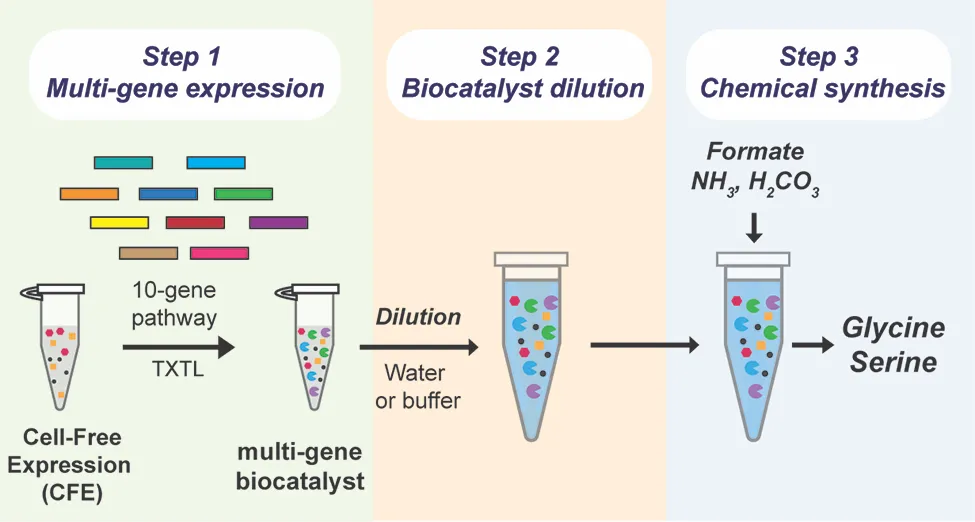

The team was particularly interested in what a ‘cell-free’ system could accomplish: one that leverages some processes of a cellular system but doesn’t actually involve living cells, Peralta-Yahya says. Systems that use living cells, Peralta-Yahya adds, must spend part of their CO2 to fuel their own metabolic processes, including cell growth, and have not yet produced sufficient quantities of amino acids.

“Part of what makes a cell-free system so efficient,” Westenberg explains, “is that it can use cellular enzymes without needing the cells themselves. By generating the enzymes and combining them in the lab, the system can directly convert carbon dioxide into the desired chemicals. Because there are no cells involved, it doesn’t need to use the carbon to support cell growth — which vastly increases the amount of amino acids the system can produce.”

A novel solution

While scientists have used cell-free systems before, one of their necessary components, the cell lysate biocatalyst, is extremely costly. For a cell-free system to be economically viable at scale, the team had to limit the amount of cell lysate the system required.

After creating the ten enzymes necessary for the reaction, the team attempted to dilute the biocatalyst using a technique called ‘volumetric expansion.’ “We found that the biocatalyst we used was active even after being diluted 200-fold,” Peralta-Yahya explains. “This allows us to use significantly less of this high-cost material — while simultaneously increasing feedstock loading and amino acid output.”

It’s a novel application of a cell-free system, and one with the potential to transform both how amino acids are produced, and the industry’s impact on our changing climate.

“This research provides a pathway for making this method cost-effective and scalable,” Peralta-Yahya says. “This system might one day be used to make chemicals ranging from aromatics and terpenes, to alcohols and polymers, and all in a way that not only reduces our carbon footprint, but improves it.”

Funding: Advanced Research Projects Agency-Energy (ARPA-E), U.S. Department of Energy; and U.S. Department of Energy, Office of Science, Biological and Environmental Research Program.

News Contact

Written by Selena Langner

Nov. 13, 2024

The Strategic Energy Institute and the Energy, Policy, and Innovation Center at Georgia Tech are proud to announce the winners of the James G. Campbell Fellowship and Spark Awards for 2024.

Michael Biehler, a fifth-year Ph.D. student in the H. Milton Stewart School of Industrial and Systems Engineering, has been selected as the recipient of the 2024 James G. Campbell Fellowship. The Fellowship is an annual award given to a Georgia Tech graduate student studying renewable energy systems. Candidates are nominated by their advisors in recognition of their exceptional academic achievements in the field of renewable energy.

Biehler’s research leverages multi-modal machine learning to tackle critical challenges in manufacturing, such as enhancing energy efficiency in manufacturing processes. He is advised by Jianjun (Jan) Shi, Carolyn J. Stewart Chair and Professor in the School of Industrial and Systems Engineering.

“I consider this award an incredible honor, and the support means a lot to me, especially with the recent arrival of our second daughter—it will make a significant difference for us,” says Biehler.

The annual Spark Award recognizes current graduate students who have shown outstanding leadership in promoting student engagement with energy research at Georgia Tech, with evidence of broader impact and service. The Spark Award recipients for 2024 include Georgia Tech graduate students Richard Asiamah, Erik Barbosa, Keun Hee Kim, Sanggyun Kim, and Erin Phillips.

Richard Asiamah is a third-year Ph.D. student in the School of Electrical and Computer Engineering (ECE). His research focuses on power systems optimization, emphasizing the efficient integration of renewable energy resources into the electricity grid. Asiamah recently worked as a graduate electrical engineering intern at the National Renewable Energy Laboratory in Golden, Colorado, and currently serves as president of the ECE Graduate Students’ Organization.

Erik Barbosa is pursuing a doctorate in mechanical engineering and works under Akanksha Menon, assistant professor in the Woodruff School of Mechanical Engineering. His work in the Water Energy Research Lab focuses on utilizing inorganic salt hydrates to develop thermochemical energy storage, ranging from the material level to system scale, to decarbonize heat for building applications. Barbosa has been actively engaged with mentoring undergraduate students and high schoolers by exposing them to innovative technologies that decarbonize energy.

Keun Hee Kim is a Ph.D. candidate in the Woodruff School. Kim’s research focuses on developing solid polymer electrolytes and artificial interlayers for lithium metal batteries, and synthesizing oxygen evolution reaction and oxygen reduction reaction catalyst materials for proton exchange membrane fuel cells and water electrolyzers.

Sanggyun Kim is a fourth-year Ph.D. student in materials science and engineering, advised by Juan-Pablo Correa-Baena, assistant professor in the School of Materials Science and Engineering. Kim’s research focuses on understanding the complex interfacial interactions between hybrid organic-inorganic halide perovskite films and newly designed charge transport layers in perovskite solar cells (PSCs). His goal is to drive progress in solar energy technology by integrating novel polymer- and molecule-based interlayers, improving the efficiency and stability of PSCs to support more sustainable photovoltaic solutions.

Erin Phillips is a doctoral student in the School of Chemistry and Biochemistry. Her research addresses difficulties associated with lignin valorization, which includes controlling the isolation of lignin from the original irregular lignocellulose structure and depolymerizing lignin into aromatic monomer units via mechanocatalysis. These aromatics can further be valorized as renewable sources for the creation of biofuels and other green chemicals. Phillips is currently serving as the president of the Technical Association of the Pulp and Paper Industry (TAPPI) student chapter at Georgia Tech.

News Contact

Priya Devarajan | SEI Communications Program Manager

Nov. 11, 2024

A first-of-its-kind algorithm developed at Georgia Tech is helping scientists study interactions between electrons. This innovation in modeling technology can lead to discoveries in physics, chemistry, materials science, and other fields.

The new algorithm is faster than existing methods while remaining highly accurate. The solver also scales across chemical systems of many sizes, from small to large, overcoming limitations of current models.

Computer scientists and engineers benefit from the algorithm’s ability to balance processor loads. This work allows researchers to tackle larger, more complex problems without the prohibitive costs associated with previous methods.

The algorithm’s key innovation is its ability to solve block linear systems. According to the researchers, their approach is the first known use of a block linear system solver to calculate electronic correlation energy.

The Georgia Tech team won’t need to travel far to share their findings with the broader high-performance computing community. They will present their work in Atlanta at the 2024 International Conference for High Performance Computing, Networking, Storage and Analysis (SC24).


“The combination of solving large problems with high accuracy can enable density functional theory simulation to tackle new problems in science and engineering,” said Edmond Chow, professor and associate chair of Georgia Tech’s School of Computational Science and Engineering (CSE).

Density functional theory (DFT) is a modeling method for studying electronic structure in many-body systems, such as atoms and molecules.

An important concept that DFT models is electronic correlation, the interaction between electrons in a quantum system. Electron correlation energy measures how much the movement of one electron is influenced by the presence of all other electrons.

Random phase approximation (RPA) is used to calculate electron correlation energy. While RPA is very accurate, it becomes computationally more expensive as the size of the system being calculated increases.
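For readers who want the formula, the RPA correlation energy is commonly written in the adiabatic-connection, fluctuation-dissipation form below. This is the standard textbook expression, shown as background; the article does not state the exact formulation implemented in SPARC. Here $\chi_0(i\omega)$ is the Kohn-Sham independent-particle density response function evaluated at imaginary frequency $i\omega$, and $\nu$ is the Coulomb operator.

$$
E_c^{\mathrm{RPA}} = \frac{1}{2\pi}\int_0^{\infty} \mathrm{Tr}\Big[\ln\big(I - \chi_0(i\omega)\,\nu\big) + \chi_0(i\omega)\,\nu\Big]\,d\omega
$$

Because $\chi_0\nu$ is negative semidefinite, each integrand term has the form $\ln(1+a) - a \le 0$ for $a \ge 0$, so $E_c^{\mathrm{RPA}}$ is negative, as a correlation energy should be. Evaluating this trace for large systems is what makes conventional RPA calculations costly.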

Georgia Tech’s algorithm enhances electronic correlation energy computations within the RPA framework. The approach circumvents inefficiencies and achieves faster solution times, even for small-scale chemical systems.

The group integrated the algorithm into existing work on SPARC, a real-space electronic structure software package for accurate, efficient, and scalable solutions of DFT equations. School of Civil and Environmental Engineering Professor Phanish Suryanarayana is SPARC’s lead researcher.

The group tested the algorithm on silicon crystal systems with as few as eight atoms. The method achieved faster calculation times than direct approaches and scaled to larger system sizes.

“This algorithm will enable SPARC to perform electronic structure calculations for realistic systems with a level of accuracy that is the gold standard in chemical and materials science research,” said Suryanarayana.

Conventional RPA calculations are expensive because their cost scales quartically with system size: when the size of a chemical system is doubled, the computational cost increases by a factor of 16.

By contrast, Georgia Tech’s algorithm scales cubically by solving block linear systems, so doubling the system size increases the cost by a factor of eight. This makes it feasible to solve larger problems at lower cost.
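The difference between quartic and cubic scaling compounds quickly as systems grow. The short sketch below only illustrates the leading-order arithmetic; the helper name `relative_cost` is hypothetical, and real run times also depend on constant prefactors, which is why leading-order scaling alone does not decide which method is faster for small systems.

```python
def relative_cost(size_factor: float, exponent: int) -> float:
    """Cost multiplier when system size grows by size_factor under O(N**exponent) scaling."""
    return size_factor ** exponent


for factor in (2, 4, 8):
    quartic = relative_cost(factor, 4)  # conventional RPA-style scaling
    cubic = relative_cost(factor, 3)    # cubic scaling reported for the new algorithm
    print(f"size x{factor}: quartic cost x{quartic:g}, cubic cost x{cubic:g}")

# size x2: quartic cost x16, cubic cost x8
# size x4: quartic cost x256, cubic cost x64
# size x8: quartic cost x4096, cubic cost x512
```

At every size increase, the cubic method's advantage equals the size factor itself, because the two costs differ by exactly one power of N.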

Solving block linear systems involves a trade-off in choosing the block size. Larger blocks reduce the number of steps the solver needs, but each step demands more computation on the processors.

Georgia Tech’s solution is a solver with dynamic block size selection, which allows each processor to independently choose the block sizes it calculates. This further aids scaling and improves processor load balancing and parallel efficiency.
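To make the block-size trade-off concrete, here is a minimal sketch of a generic block conjugate gradient solver in NumPy, which solves several right-hand sides together. It is not the Georgia Tech algorithm: it assumes a symmetric positive definite matrix for simplicity, uses a fixed block size rather than dynamic per-processor selection, and the function names `block_cg` and `solve_in_blocks` are hypothetical.

```python
import numpy as np


def block_cg(A, B, tol=1e-8, max_iter=500):
    """Block conjugate gradient for A X = B, assuming A is symmetric positive definite.

    All columns of B are solved together; returns (X, iterations used).
    """
    X = np.zeros_like(B)
    R = B - A @ X                 # block residual, one column per right-hand side
    P = R.copy()                  # block of search directions
    RtR = R.T @ R
    b_norm = np.linalg.norm(B)
    for k in range(max_iter):
        AP = A @ P                # cost per step grows with the block width
        alpha = np.linalg.solve(P.T @ AP, RtR)  # small s-by-s solve
        X = X + P @ alpha
        R = R - AP @ alpha
        if np.linalg.norm(R) <= tol * b_norm:
            return X, k + 1
        RtR_new = R.T @ R
        beta = np.linalg.solve(RtR, RtR_new)
        P = R + P @ beta
        RtR = RtR_new
    return X, max_iter


def solve_in_blocks(A, B, block_size):
    """Split the right-hand sides into blocks of block_size columns and solve each block."""
    X = np.empty_like(B)
    total_iters = 0
    for start in range(0, B.shape[1], block_size):
        cols = slice(start, start + block_size)
        X[:, cols], iters = block_cg(A, B[:, cols])
        total_iters += iters
    return X, total_iters


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 200
    M = rng.standard_normal((n, n))
    A = M @ M.T + n * np.eye(n)   # well-conditioned SPD test matrix
    B = rng.standard_normal((n, 8))
    for s in (1, 2, 4, 8):
        X, iters = solve_in_blocks(A, B, s)
        print(f"block size {s}: {iters} total steps, residual {np.linalg.norm(A @ X - B):.2e}")
```

Running the comparison typically shows fewer total steps as the block size grows, while each step multiplies `A` by a wider block and solves larger small systems. A dynamic scheme would choose `block_size` at run time instead of fixing it.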

“The new algorithm has many forms of parallelism, making it suitable for immense numbers of processors,” Chow said. “The algorithm works in a real-space, finite-difference DFT code. Such a code can scale efficiently on the largest supercomputers.”

Georgia Tech alumni Shikhar Shah (Ph.D. CSE 2024), Hua Huang (Ph.D. CSE 2024), and Ph.D. student Boqin Zhang led the algorithm’s development. The project was the culmination of work for Shah and Huang, who completed their degrees this summer. John E. Pask, a physicist at Lawrence Livermore National Laboratory, joined the Tech researchers on the work.

Shah, Huang, Zhang, Suryanarayana, and Chow are among more than 50 students, faculty, research scientists, and alumni affiliated with Georgia Tech who are scheduled to give more than 30 presentations at SC24. The experts will present their research through papers, posters, panels, and workshops.

SC24 takes place Nov. 17-22 at the Georgia World Congress Center in Atlanta.

“The project’s success came from combining expertise from people with diverse backgrounds ranging from numerical methods to chemistry and materials science to high-performance computing,” Chow said.

“We could not have achieved this as individual teams working alone.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Nov. 05, 2024

The aviation industry’s commitment to meaningful carbon reduction underscores the need for investing in Sustainable Aviation Fuel (SAF), which provides the most promising solution to achieving net-zero carbon by 2050. According to the International Air Transport Association (IATA), with the right policy measures and financial instruments in place, SAF could help the industry achieve 65% of this reduction. As a key transportation center in the U.S., the Southeast holds immense potential to become a hub for SAF production and adoption.

This prospect was the focus of a recent workshop organized by three Georgia Tech units (the Ray C. Anderson Center for Sustainable Business and its initiative, the Drawdown Georgia Business Compact; the School of Public Policy; and the Strategic Energy Institute) along with the U.S. Department of Energy Bioenergy Technologies Office. The workshop brought together stakeholders from federal, state, and local government, industry, academia, and the aviation sector to chart a path forward for the Southeast.

News Contact

Titiksha Fernandes | Ray C. Anderson Center for Sustainable Business

Nov. 05, 2024

Southern Company, Georgia Institute of Technology (Georgia Tech), Smart Wires, and other partners have announced that they are collaborating on a new U.S. Department of Energy-funded project. Scheduled for 2025, the project will jointly implement advanced power flow control (APFC) and dynamic line rating (DLR) technologies to help connect renewable energy sources and new demand to the grid more quickly.

This project, led by the Georgia Tech Center for Distributed Energy with professor Deepak Divan as Principal Investigator, was selected by the U.S. Department of Energy in November 2021 as one of four projects to receive funding for grid enhancing technologies (GETs) that improve grid reliability, optimize existing grid infrastructure, and support the connection of renewable energy. It will use Smart Wires’ APFC solution, SmartValve™, in a mobile deployment combined with its DLR software, SUMO, and will also develop control algorithms that improve and fine-tune how these solutions work together to optimize use of the grid.

“The launch of this innovative project represents an important step toward more efficient and reliable integration of cleaner energy sources,” said Tim Lieuwen, interim executive vice president for Research at Georgia Institute of Technology. “This collaboration allows us to identify, develop and test new ways to manage the power grid in Georgia by co-deploying APFC and DLR technologies.”

While the effectiveness of these solutions is well documented in multiple third-party reports, such as RMI’s GETting Interconnected in PJM, this project will be the first large-scale implementation of both technologies together. It will specifically examine their combined impact and develop control algorithms that unlock the combined power of these solutions and maximize their efficiency.

SUMO identifies when lines have spare capacity based on real-time weather conditions, while SmartValves can redirect power flows to quickly utilize this spare capacity. This also applies in reverse, with SUMO identifying when the dynamic ratings of lines are less than the static rating. If it’s a hot day, for example, SmartValves can redirect power flows away from these circuits to others with capacity, reducing the risk of system faults while improving operational safety.
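The coordination described above can be illustrated with a deliberately simplified bookkeeping sketch: lines running above their dynamic rating are capped, and the excess is shifted to lines with spare capacity. This is not Smart Wires’ SUMO or SmartValve software, and `Line` and `rebalance` are hypothetical names. The sketch assumes dynamic ratings are already known (in practice they come from real-time weather conditions) and ignores network physics such as impedances and Kirchhoff’s laws, which govern how flows can actually be redirected.

```python
from dataclasses import dataclass


@dataclass
class Line:
    """Hypothetical transmission line: current flow and a DLR-style rating, both in MW."""
    name: str
    flow_mw: float
    dynamic_rating_mw: float


def rebalance(lines: list[Line]) -> tuple[dict[str, float], float]:
    """Greedily move flow off lines above their dynamic rating onto lines with headroom.

    Returns the new flow per line and any excess that could not be placed.
    Bookkeeping only: network physics is ignored.
    """
    new_flows = {line.name: line.flow_mw for line in lines}
    excess = 0.0

    # Step 1: cap every overloaded line at its dynamic rating and collect the excess.
    for line in lines:
        if line.flow_mw > line.dynamic_rating_mw:
            excess += line.flow_mw - line.dynamic_rating_mw
            new_flows[line.name] = line.dynamic_rating_mw

    # Step 2: hand the excess to lines with spare capacity, largest headroom first.
    by_headroom = sorted(
        lines, key=lambda l: l.dynamic_rating_mw - new_flows[l.name], reverse=True
    )
    for line in by_headroom:
        if excess <= 0:
            break
        headroom = line.dynamic_rating_mw - new_flows[line.name]
        if headroom > 0:
            shift = min(headroom, excess)
            new_flows[line.name] += shift
            excess -= shift

    return new_flows, excess


# Hot day: line A's dynamic rating drops below its current flow.
flows, unplaced = rebalance([Line("A", 120.0, 100.0), Line("B", 60.0, 110.0)])
print(flows, unplaced)  # {'A': 100.0, 'B': 80.0} 0.0
```

If the other lines lack enough headroom, the function reports the remainder as unplaced, which in a real system would call for other operator actions.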

The mobile deployment of SmartValves can be installed and in-service within one week. This provides a rapidly deployable solution that avoids extended outages and can be easily moved between sites as system needs evolve over time.

“We’re delighted to provide both SmartValves and SUMO in this project to move the dial in terms of deploying multiple GETs in synergy and optimizing their use,” said Joaquin Peirano, General Manager for the Americas at Smart Wires. “The commitment of utilities like Southern Company to get the most from their existing grid with GETs, combined with the positive regulatory developments such as FERC’s recent Order 1920, positions the U.S. to capture the full value these technologies can provide on transmission grids.”

The project will be delivered in 2025 and will involve a one-year performance period to provide Southern Company with operational experience that can be shared with other utilities and pave the way for greater use of GETs in the U.S.

About Georgia Institute of Technology

The Georgia Institute of Technology is a leading research university, committed to improving the human condition through advanced science and technology. Georgia Tech’s engineering and computing colleges are the largest and among the highest-ranked in the nation. The Institute also offers outstanding programs in business, design, liberal arts, and sciences.

With $1.37 billion annually in research, development, and sponsored activities across all six colleges and the Georgia Tech Research Institute, Georgia Tech is an engine of economic development for the state of Georgia, the Southeast, and the nation. Georgia Tech routinely ranks among the top U.S. universities in volume of research conducted. In 2023, the Institute ranked 17th among U.S. academic institutions in research and development expenditures, according to the National Science Foundation’s Higher Education Research and Development Survey.

About Smart Wires

Smart Wires is the world’s leading grid enhancing technology and services provider. We help electric utilities unlock capacity and solve their critical grid issues, using our solutions to create a more flexible, reliable and affordable grid. This enables a faster, more cost-efficient path to meet growing electricity demand with clean energy generation, at lowest cost to consumers. Headquartered in the Research Triangle of North Carolina, Smart Wires has a global workforce of passionate and visionary industry-leading experts across four continents, who work every day to transform grids globally. In collaboration with our customers and partners, we’ve unlocked over 3.5 gigawatts of capacity, enough to power over 2.5 million homes, supporting the faster integration of clean energy and new demand, enhancing security of supply and delivering cost savings to consumers.

Together, we are reimagining the grid for net zero.

News Contact

Priya Devarajan | SEI Communications Program Manager

Nov. 01, 2024

National Science Foundation Awards $15M to Georgia Tech-Led Consortium of Universities for Societal-Oriented Innovation and Commercialization Effort

Multi-state I-Corps Hubs project designed to strengthen regional innovation ecosystem and address inequities in access to capital and commercialization opportunities

ATLANTA: The National Science Foundation (NSF) awarded a syndicate of eight Southeast universities, led by Georgia Tech, a $15 million grant to support the development of a regional innovation ecosystem with a focus on addressing underrepresentation and increasing entrepreneurship and technology-oriented workforce development.

The NSF Innovation Corps (I-Corps) Southeast Hub is a five-year project based on the I-Corps model, which helps academics move their research from the lab into the market.

Led by Georgia Tech’s Office of Commercialization and Enterprise Innovation Institute, the NSF I-Corps Southeast Hub encompasses four states — Georgia, Florida, South Carolina, and Alabama.

Its member schools include:

- Clemson University

- Morehouse College

- University of Alabama

- University of Central Florida

- University of Florida

- University of Miami

- University of South Florida

In January 2025, when the NSF I-Corps Southeast Hub officially launches, the consortium of schools will expand to also include the University of Puerto Rico. Additionally, through Morehouse College’s activation, Spelman College and the Morehouse School of Medicine will also participate in supporting the project.

With a combined economic output of more than $3.2 trillion, the NSF I-Corps Southeast Hub region represents more than 11% of the entire U.S. economy. As a region, those states and Puerto Rico have a larger economic output than France, Italy, or Canada.

“This is a great opportunity for us to engage in regional collaboration to drive innovation across the Southeast to strengthen our regional economy and that of Puerto Rico,” said the Enterprise Innovation Institute’s Nakia Melecio, director of the NSF I-Corps Southeast Hub. As director, Melecio will oversee strategic management, data collection, and overall operations.

Additionally, Melecio serves as a national faculty instructor for the NSF I-Corps program.

“This also allows us to collectively tackle some of the common challenges all four of our states face, especially when it comes to being intentionally inclusive in reaching out to communities that historically haven’t always been invited to participate,” he said.

That means bringing solutions to market that not only solve problems but also intentionally include researchers from a diversity of schools, including Black- and Hispanic-serving institutions, Melecio said.

Keith McGreggor, director of Georgia Tech’s VentureLab, is the faculty lead and charged with designing the curriculum and instruction for the NSF I-Corps Southeast Hub’s partners.

McGreggor has extensive I-Corps experience. In 2012, Georgia Tech was among the first institutions in the country selected to teach the I-Corps curriculum, which aims to further research commercialization. McGreggor served as the lead instructor for I-Corps-related efforts and led training efforts across the Southeast, as well as for teams in Puerto Rico, Mexico and the Republic of Ireland.

Raghupathy “Siva” Sivakumar, Georgia Tech’s vice president of commercialization, is the project’s principal investigator.

The NSF I-Corps Southeast Hub is one of three — the others being in the Northwest and New England regions, led by the University of California, Berkeley and the Massachusetts Institute of Technology, respectively — announced by the NSF. The three I-Corps Hubs are part of the NSF’s planned expansion of its National Innovation Network, which now includes 128 colleges and universities across 48 states.

As designed, the NSF I-Corps Southeast Hub will leverage its partner institutions’ strengths to break down barriers that slow researchers’ lab-to-market commercialization.

“Our Hub member schools collectively have brought transformative technologies to market in advanced manufacturing, renewable energy, cybersecurity, and the biomedical sectors,” Sivakumar said. “Our goal is to accomplish two things. It builds and expands a scalable model to translate research into viable commercial ventures. It also addresses societal needs, not just from the standpoint of bringing solutions that solve them but building a diverse pipeline of researchers and innovators and interest in STEM [science, technology, engineering, and math]-related fields.”

U.S. Rep. Nikema Williams (D-Atlanta) is a proponent of the Hub’s STEM component.

“As a biology major-turned-Congresswoman, I know firsthand that STEM education and research open doors far beyond the lab or classroom,” Williams said. “This National Science Foundation grant means Georgia Tech will be leading the way in equipping researchers and grad students to turn their discoveries into real-world impact, as innovators, entrepreneurs, and business leaders.

“I’m especially excited about the partnership with Morehouse College and other Minority Serving Institutions through this Innovation Hub, expanding pathways to innovation and entrepreneurship for historically marginalized communities and creating one more tool to close the racial wealth gap.”

That STEM aspect, coupled with supporting growth of a regional ecosystem, will speed commercialization, increase higher education-industry collaborations, and boost the network of diverse entrepreneurs and startup founders, said David Bridges, vice president of the Enterprise Innovation Institute.

“This multi-university, regional approach is a successful model because it has been proven that bringing a diversity of stakeholders together leads to unique solutions to very difficult problems,” Bridges said. “And while the Southeast faces different challenges that vary from state to state and Puerto Rico has its own needs, they call for a more comprehensive approach to solving them. Adopting a region-oriented focus allows us to understand what these needs are, customize tailored solutions and keep not just our hub but our nation economically competitive.”

News Contact

Péralte Paul

Oct. 30, 2024

The National Science Foundation (NSF) awarded a syndicate of eight Southeast universities — with Georgia Tech as the lead — a $15 million grant to support the development of a regional innovation ecosystem that addresses underrepresentation and increases entrepreneurship and technology-oriented workforce development.

The NSF Innovation Corps (I-Corps) Southeast Hub is a five-year project based on the I-Corps model, which assists academics in moving their research from the lab to the market.

Led by Georgia Tech’s Office of Commercialization and Enterprise Innovation Institute, the NSF I-Corps Southeast Hub encompasses four states — Georgia, Florida, South Carolina, and Alabama.

Its member schools include:

- Clemson University

- Morehouse College

- University of Alabama

- University of Central Florida

- University of Florida

- University of Miami

- University of South Florida

In January 2025, when the NSF I-Corps Southeast Hub officially launches, the consortium of schools will expand to include the University of Puerto Rico. Additionally, through Morehouse College’s activation, Spelman College and the Morehouse School of Medicine will also participate in supporting the project.

With a combined economic output of more than $3.2 trillion, the NSF I-Corps Southeast Hub region represents more than 11% of the entire U.S. economy. As a region, those states and Puerto Rico have a larger economic output than France, Italy, or Canada.

“This is a great opportunity for us to engage in regional collaboration to drive innovation across the Southeast to strengthen our regional economy and that of Puerto Rico,” said the Enterprise Innovation Institute’s Nakia Melecio, director of the NSF I-Corps Southeast Hub. As director, Melecio will oversee strategic management, data collection, and overall operations.

Additionally, Melecio serves as a national faculty instructor for the NSF I-Corps program.

“This also allows us to collectively tackle some of the common challenges all four of our states face, especially when it comes to being intentionally inclusive in reaching out to communities that historically haven’t always been invited to participate,” he said.

That means not only bringing solutions to market but also being intentional about including researchers from Black and Hispanic-serving institutions, Melecio said.

Keith McGreggor, director of Georgia Tech’s VentureLab, is the faculty lead charged with designing the curriculum and instruction for the NSF I-Corps Southeast Hub’s partners.

McGreggor has extensive I-Corps experience. In 2012, Georgia Tech was among the first institutions in the country selected to teach the I-Corps curriculum, which aims to further research commercialization. McGreggor served as the lead instructor for those I-Corps efforts and led training across the Southeast, as well as for teams in Puerto Rico, Mexico, and the Republic of Ireland.

Raghupathy “Siva” Sivakumar, Georgia Tech’s vice president of Commercialization and chief commercialization officer, is the project’s principal investigator.

The NSF I-Corps Southeast Hub is one of three announced by the NSF. The others are in the Northwest and New England regions, led by the University of California, Berkeley, and the Massachusetts Institute of Technology, respectively. The three I-Corps Hubs are part of the NSF’s planned expansion of its National Innovation Network, which now includes 128 colleges and universities across 48 states.

As designed, the NSF I-Corps Southeast Hub will leverage its partner institutions' strengths to break down the barriers that slow researchers' lab-to-market commercialization.

“Our Hub member institutions have successfully commercialized transformative technologies across critical sectors, including advanced manufacturing, renewable energy, cybersecurity, and biomedical fields,” said Sivakumar. “We aim to achieve two key objectives: first, to establish and expand a scalable model that effectively translates research into viable commercial ventures; and second, to address pressing societal needs.

“This includes not only delivering innovative solutions but also cultivating a diverse pipeline of researchers and innovators, thereby enhancing interest in STEM fields: science, technology, engineering, and mathematics.”

U.S. Rep. Nikema Williams, D-Atlanta, is a proponent of the Hub’s STEM component.

“As a biology major-turned-congresswoman, I know firsthand that STEM education and research open doors far beyond the lab or classroom,” Williams said. “This National Science Foundation grant means Georgia Tech will be leading the way in equipping researchers and grad students to turn their discoveries into real-world impact as innovators, entrepreneurs, and business leaders.

“I’m especially excited about the partnership with Morehouse College and other minority-serving institutions through this Hub, expanding pathways to innovation and entrepreneurship for historically marginalized communities and creating one more tool to close the racial wealth gap.”

That STEM aspect, coupled with supporting the growth of a regional ecosystem, will speed commercialization, increase higher education-industry collaborations, and boost the network of diverse entrepreneurs and startup founders, said David Bridges, vice president of the Enterprise Innovation Institute.

“This multi-university, regional approach is a successful model because it has been proven that bringing a diversity of stakeholders together leads to unique solutions to very difficult problems,” he said. “And while the Southeast faces different challenges that vary from state to state and Puerto Rico has its own needs, they call for a more comprehensive approach to solving them. Adopting a region-oriented focus allows us to understand what these needs are, customize tailored solutions, and keep not just our hub but our nation economically competitive.”

News Contact

Péralte C. Paul

peralte@gatech.edu

404.316.1210

Oct. 24, 2024

The U.S. Department of Energy (DOE) has awarded Georgia Tech researchers a $4.6 million grant to develop improved cybersecurity protection for renewable energy technologies.

Associate Professor Saman Zonouz will lead the project and leverage the latest artificial intelligence (AI) to create Phorensics. The new tool will anticipate cyberattacks on critical infrastructure and provide analysts with an accurate reading of which vulnerabilities were exploited.

“This grant enables us to tackle one of the crucial challenges facing national security today: our critical infrastructure resilience and post-incident diagnostics to restore normal operations in a timely manner,” said Zonouz.

“Together with our amazing team, we will focus on cyber-physical data recovery and post-mortem forensics analysis after cybersecurity incidents in emerging renewable energy systems.”

As more renewable energy technology is integrated into national power grids, the grids' vulnerability to cyberattacks increases. These threats put energy infrastructure at risk and pose a significant danger to public safety and economic stability. The AI behind Phorensics will allow analysts and technicians to scale security efforts to keep up with a power grid that is growing larger and more complex.

This effort is part of the Security of Engineering Systems (SES) initiative at Georgia Tech's School of Cybersecurity and Privacy (SCP). SES rests on three pillars (research, education, and testbeds) and has multiple large sponsored efforts under way.

“We had a successful hiring season for SES last year and will continue filling several open tenure-track faculty positions this upcoming cycle,” said Zonouz.

“With top-notch cybersecurity and engineering schools at Georgia Tech, we have begun the SES journey with a dedicated passion to pursue building real-world solutions to protect our critical infrastructures, national security, and public safety.”

Zonouz is the director of the Cyber-Physical Systems Security Laboratory (CPSec) and holds a joint appointment in SCP and the School of Electrical and Computer Engineering (ECE).

The three Georgia Tech researchers joining him on this project are Brendan Saltaformaggio, associate professor in SCP and ECE; Taesoo Kim, jointly appointed professor in SCP and the School of Computer Science; and Animesh Chhotaray, research scientist in SCP.

Katherine Davis, associate professor in the Texas A&M University Department of Electrical and Computer Engineering, has partnered with the team to develop Phorensics. The team will also collaborate with the National Renewable Energy Laboratory (NREL) and with industry partners on technology transfer and commercialization initiatives.

The Energy Department defines renewable energy as energy from unlimited, naturally replenished resources, such as the sun, tides, and wind. Renewable energy can be used for electricity generation, space and water heating and cooling, and transportation.

News Contact

John Popham

Communications Officer II

College of Computing | School of Cybersecurity and Privacy

Oct. 24, 2024

Eight Georgia Tech researchers were honored with the ACM Distinguished Paper Award for their groundbreaking contributions to cybersecurity at the recent ACM Conference on Computer and Communications Security (CCS).

Three papers were recognized for addressing critical challenges in the field, spanning areas such as automotive cybersecurity, password security, and cryptographic testing.

“These three projects underscore Georgia Tech's leadership in advancing cybersecurity solutions that have real-world impact, from protecting critical infrastructure to ensuring the security of future computing systems and improving everyday digital practices,” said School of Cybersecurity and Privacy (SCP) Chair Michael Bailey.

One of the papers, ERACAN: Defending Against an Emerging CAN Threat Model, was co-authored by Ph.D. student Zhaozhou Tang, Associate Professor Saman Zonouz, and College of Engineering Dean and Professor Raheem Beyah. This research focuses on securing the controller area network (CAN), a vital system used in modern vehicles that is increasingly targeted by cyber threats.

"This project is led by our Ph.D. student Zhaozhou Tang with the Cyber-Physical Systems Security (CPSec) Lab," said Zonouz. "Impressively, this was Zhaozhou's first paper in his Ph.D., and he deserves special recognition for this groundbreaking work on automotive cybersecurity."

The work introduces a comprehensive defense system to counter advanced threats to vehicular CAN networks, and the team is collaborating with the Hyundai America Technical Center to implement the research. The CPSec Lab is a collaborative effort between SCP and the School of Electrical and Computer Engineering (ECE).

In another paper, Testing Side-Channel Security of Cryptographic Implementations Against Future Microarchitectures, Assistant Professor Daniel Genkin collaborated with international researchers on testing whether cryptographic implementations can withstand side-channel threats posed by future CPU microarchitectures.

“We appreciate ACM for recognizing our work,” said Genkin. “Tools for early-stage testing of CPUs for emerging side-channel threats are crucial to ensuring the security of the next generation of computing devices.”

The third paper, Unmasking the Security and Usability of Password Masking, was authored by graduate students Yuqi Hu, Suood Al Roomi, and Sena Sahin, along with Frank Li, an assistant professor in SCP and ECE. The study investigated the effectiveness of password masking, the practice of hiding characters as they are typed, and offered recommendations for implementing it.

"Password masking is a widely deployed security mechanism that hasn't been extensively investigated in prior works," said Li.

The assistant professor credited the collaborative efforts of his students, particularly Yuqi Hu, for leading the project.

The ACM Conference on Computer and Communications Security (CCS) is the flagship annual conference of the Special Interest Group on Security, Audit and Control (SIGSAC) of the Association for Computing Machinery (ACM). The conference was held Oct. 14–18 in Salt Lake City.

News Contact

John Popham

Communications Officer II

College of Computing | School of Cybersecurity and Privacy
