Jan. 06, 2025

In the rapidly evolving world of manufacturing, embracing digital connectivity and artificial intelligence is crucial for optimizing operations, improving efficiency, and driving innovation. The Internet of Things (IoT) is a key pillar of that process. By connecting sensors, equipment, and applications through internet protocols, it enables seamless communication and data exchange throughout manufacturing.

The Georgia Tech Manufacturing Institute (GTMI) recently hosted the 10th annual Internet of Things for Manufacturing (IoTfM) Symposium, a flagship event that continues to set the standard for innovation and collaboration in the manufacturing sector. Held on Nov. 13, the symposium brought together industry leaders, researchers, and practitioners to explore the latest advancements and applications of IoT in manufacturing.

"The purpose is to bring the voice of manufacturers directly to the university community," explained Andrew Dugenske, a principal research engineer and director of the Factory Information Systems Center at GTMI. "It's about learning from industry to guide our research, education, and knowledge base, which is inherent to Georgia Tech."

Initiated over a decade ago, the IoTfM Symposium has grown into a premier event that highlights Georgia Tech's commitment to advancing manufacturing technologies.

“This symposium provides a unique platform to share and learn from cutting-edge advancements in IoT, and now AI, for manufacturing,” said Dago Mata, regional director of business development at Tata Consultancy Services (TCS) and one of the event’s speakers. “The opportunity to engage with industry leaders and showcase practical, real-world implementations was highly motivating.”

This year’s symposium welcomed over 100 attendees from across the country. Speakers from TCS, Amazon Web Services, Southwire, and more shared insights on the latest advancements, use cases, current challenges, and future directions for IoT in manufacturing processes.

“My favorite aspect was the case studies presented by major manufacturers, highlighting successful IoT and AI implementations,” said Mata, who has attended the symposium since 2018. “These provided actionable takeaways and inspiration for driving similar innovation in my projects. The blend of exclusive learning from real-world applications and the presence of diverse experts made it a truly practical and inspiring event.”

A distinctive feature of the IoTfM Symposium, Dugenske said, is its commitment to giving industry partners a platform to voice their perspectives on manufacturing research. "We ask our industry partners to tell us about their experiences, challenges, and future predictions. This way, we can guide our research with the real-world needs of the manufacturing sector to form stronger collaborations and better prepare our students."

This unique format not only enhances the relevance of the symposium but also fosters a collaborative environment where industry leaders can learn from each other and from Georgia Tech's academic community.

As GTMI looks to the future, the symposium will continue to evolve, incorporating new elements and expanding its reach. Dugenske envisions even greater integration with other GTMI initiatives and broader industry engagement.

“Our goal is to create an event that highlights our capabilities and builds deeper connections within the manufacturing community,” Dugenske said.

News Contact

Audra Davidson

Research Communications Program Manager

Georgia Tech Manufacturing Institute

Dec. 19, 2024

Associate Professor Matthew McDowell has been selected as the next Associate Chair for Research in the George W. Woodruff School of Mechanical Engineering. He will step into the role on January 1, 2025.

The Associate Chair for Research is responsible for working with the Woodruff School’s faculty to develop a strategic research plan for future growth and investments in the School, as well as identifying new research opportunities, helping to foster strategic relationships with government, industry, and foundations, and synergizing research efforts with other units in the College of Engineering and across the Institute.

“I am thrilled to be chosen for this role, and I look forward to working with the faculty, students, researchers, and staff of the Woodruff School to enhance and support our world-class research program,” said McDowell.

McDowell joined Georgia Tech in the fall of 2015 as an assistant professor with a joint appointment in the Woodruff School and the School of Materials Science and Engineering (MSE). He was named Carter N. Paden, Jr. Distinguished Chair earlier this year and serves as co-director of the Georgia Tech Advanced Battery Center (GTABC). Through this center, McDowell and Professor Gleb Yushin (MSE) are building community at the Institute, enhancing research and educational relationships with industry partners, and creating a new battery manufacturing facility on Georgia Tech’s campus.

“I am excited to work with Matt in advancing the research priorities and goals of the Woodruff School,” said Devesh Ranjan, Eugene C. Gwaltney Jr. School Chair and professor. “Through his exceptional leadership of the Georgia Tech Advanced Battery Center, Matt has demonstrated a deep commitment to excellence in scholarship and to fostering partnerships that drive innovative, collaborative research across the Institute. I am confident in the positive transformation he will bring to our program in this new role.”



Dec. 18, 2024

Johney Green Jr., M.S. ME 1993, Ph.D. ME 2000, has been chosen to serve as the new laboratory director for Savannah River National Laboratory (SRNL). A proud Yellow Jacket, Green received both his master’s and doctoral degrees in mechanical engineering from Georgia Tech and currently serves on the Strategic Energy Institute’s (SEI) External Advisory Board. He also served on the board of the George W. Woodruff School of Mechanical Engineering from 2017 to 2022.

“SRNL has truly found an exceptional leader in Johney. His vision and dedication are inspiring, and I am genuinely excited to see the remarkable contributions he will make in advancing SRNL,” said Christine Conwell, SEI interim executive director. “We look forward to his continued partnership with SEI and the positive impact he will bring to the energy community in 2025 and beyond.”

The Battelle Savannah River Alliance (SRNL’s parent organization) selected Green for this role, describing him as “a dynamic leader who brings deep, wide-ranging scientific expertise to this new position.”

With an annual operating budget of about $400 million, SRNL is a multiprogram national lab leading research and development for the Department of Energy’s (DOE) Offices of Environmental Management and Legacy Management and the National Nuclear Security Administration’s weapons and nonproliferation programs.

Green currently serves as associate laboratory director for mechanical and thermal engineering sciences at the National Renewable Energy Laboratory (NREL). In this position, he oversees a diverse portfolio of research programs including transportation, buildings, wind, water, geothermal, advanced manufacturing, concentrating solar power, and Arctic research. His leadership impacts a workforce of about 750 and involves managing a budget of more than $300 million.

At NREL, Green transformed the lab’s wind site into the innovative Flatirons Campus and transitioned the campus from a single-program wind research site to a multiprogram research campus that serves as the foundational experimental platform for the DOE’s Advanced Research on Integrated Energy Systems (ARIES) initiative.

“We are immensely proud to call Johney a Woodruff School alumnus. His achievements and service to Tech through advisory board engagement inspire us, and we are excited to see him step into this prestigious role at SRNL. We look forward to deepening our collaboration with him as he continues to make a powerful impact,” said Devesh Ranjan, Eugene C. Gwaltney Jr. School Chair and professor in the Woodruff School.

Prior to his role at NREL, Green held several key leadership roles at Oak Ridge National Laboratory (ORNL). As director of the Energy and Transportation Science Division and group leader for fuels, engines, and emissions research, he managed a broad science and technology portfolio and user facilities that made significant science and engineering advances in building technologies; sustainable industrial and manufacturing processes; fuels, engines, emissions, and transportation analysis; and vehicle systems integration. While Green was the division director, ORNL developed the Additive Manufacturing Integrated Energy (AMIE) demonstration project, a model of innovative vehicle-to-grid integration technologies and next-generation manufacturing processes.

Early in his career, Green conducted combustion research to stabilize gasoline engine operation under extreme conditions. During the course of that research, he joined a team working with Ford Motor Co., seeking ways to simultaneously extend exhaust gas recirculation limits in diesel engines and reduce nitrogen oxide and particulate matter emissions. He continued this collaboration as a visiting scientist at Ford's Scientific Research Laboratory, conducting modeling and experimental research for advanced diesel engines designed for light-duty vehicles. On assignment to the DOE’s Vehicle Technologies Office, Green also served as technical coordinator for the 21st Century Truck Partnership. He also contributed to a dozen of ORNL's 150-plus top scientific discoveries.

Green was the recipient of a National GEM Consortium Master’s Fellowship sponsored by Georgia Tech and ORNL, and he served as the National GEM Consortium chairperson from 2022 to 2024. He is a Fellow of the American Association for the Advancement of Science and an SAE International Fellow. He has received several awards during his career and holds two U.S. patents in combustion science.

News Contact

Priya Devarajan || SEI Communications Program Manager

Dec. 11, 2024

Gold and white pompoms fluttered while Buzz, the official mascot of the Georgia Institute of Technology, danced to marching band music. But the celebration wasn’t before a football or basketball game. Instead, the cheers marked the official launch of Georgia AIM Week, a series of events and a new mobile lab designed to bring technology to all parts of Georgia.

Organized by Georgia Artificial Intelligence in Manufacturing (Georgia AIM), Georgia AIM Week kicked off September 30 with a celebration on the Georgia Institute of Technology campus. It culminated on Friday, Oct. 4, with another celebration at the University of Georgia in Athens, timed to coincide with National Manufacturing Day.

In between, the Georgia AIM Mobile Studio made stops at schools and community organizations to showcase a range of technology rooted in AI and smart technology.

“Georgia AIM Week was a statewide opportunity for us to celebrate Manufacturing Day and to launch our Georgia AIM Mobile Studio,” said Donna Ennis, associate vice president, community-based engagement, for Georgia Tech’s Enterprise Innovation Institute and Georgia AIM co-director. “Georgia AIM projects planned events in cities around the state, starting here in Atlanta. Then we headed to Warner Robins, Southwest Georgia, and Athens. We’re excited about the opportunity to bring this technology to our communities and increase access and ideas related to smart technology.”

Georgia AIM is a collaboration across the state to provide the tools and knowledge to empower all communities, particularly those that have been underserved and overlooked in manufacturing. This includes rural communities, women, people of color, and veterans. Georgia AIM projects are located across the state and work within communities to create a diverse AI manufacturing workforce. The federally funded program is a collaborative project administered through Georgia Tech’s Enterprise Innovation Institute and the Georgia Tech Manufacturing Institute.

A cornerstone of Georgia AIM Week was the debut of the Georgia AIM Mobile Studio, a 53-foot custom trailer outfitted with technology that can be used in manufacturing — but also by anyone with an interest in learning about AI and smart technology. Visitors to the mobile studio can experience virtual reality, 3-D printing, drones, robots, sensors, computer vision, and circuits essential to running this new tech.

There’s even a dog — albeit a robotic one — named Nova.

The studio was designed to introduce students to the possibilities of careers in manufacturing and show small businesses some of the cost-effective ways they can incorporate 21st century technology into their manufacturing operations.

“We were awarded about $7.5 million to build this wonderful studio here,” said Kenya Asbill, who works at the Russell Innovation Center for Entrepreneurs (RICE) as the Economic Development Administration project manager for Georgia AIM. “We will be traveling around the state of Georgia to introduce artificial intelligence in manufacturing to our targeted communities, including underserved rural and urban residents.”

Some technology on the Georgia AIM Mobile Studio was designed in consultation with project partners Kitt Labs and Technologists of Color. An additional suite of “technology vignettes” was developed by students at the University of Georgia College of Engineering. RICE and UGA served as project leads for the mobile studio development, and RICE will oversee its deployment across the state in the coming months.

To request a mobile studio visit, please visit the Georgia AIM website.

During Monday’s kickoff, the Georgia Tech cheerleaders and Buzz fired up the crowd before an event that featured remarks by Acting Assistant Secretary of the U.S. EDA Christina Killingsworth; Jay Bailey, president and CEO of RICE; Beshoy Morkos, associate professor of mechanical engineering at the University of Georgia; Aaron Stebner, co-director of Georgia AIM; David Bridges, vice president of Georgia Tech’s Enterprise Innovation Institute; and lightning presentations by Georgia AIM project leads from around the state.

Following the presentations, mobile studio tours were led by Jon Exume, president and executive director, and Mark Lawson, director of technology, for Technologists of Color. The organization works to create a cohesive and thriving community of African Americans in tech.

“I’m particularly excited to witness the launch of the Georgia AIM Mobile Studio. It really will help demystify AI and bring its promise to underserved rural areas across the state,” Killingsworth said. “AI is the defining technology of our generation. It’s transforming the global economy, and it will continue to have tremendous impact on the global workforce. And while AI has the potential to democratize access to information, enhance efficiency, and allow humans to focus on the more complex, creative, and meaningful aspects of work, it also has the power to exacerbate economic disparity. As such, we must work together to embrace the promise of AI while mitigating its risks.”

Other events during Georgia AIM Week included the Middle Georgia Innovation Corridor Manufacturing Expo in Warner Robins, West Georgia Manufacturing Day – Student Career Expo in LaGrange, and a visit to Colquitt County High School in Moultrie. The week wrapped on Friday, Oct. 4, at the University of Georgia in Athens with a National Manufacturing Day celebration.

“We’re focused on growing our manufacturing economy,” Ennis said. “We’re also focused on the development and deployment of innovation and talent in the manufacturing industry as it relates to AI and other technologies. Manufacturing is cool. It is a changing industry. We want our students and younger people to understand that this is a career.”


Dec. 05, 2024

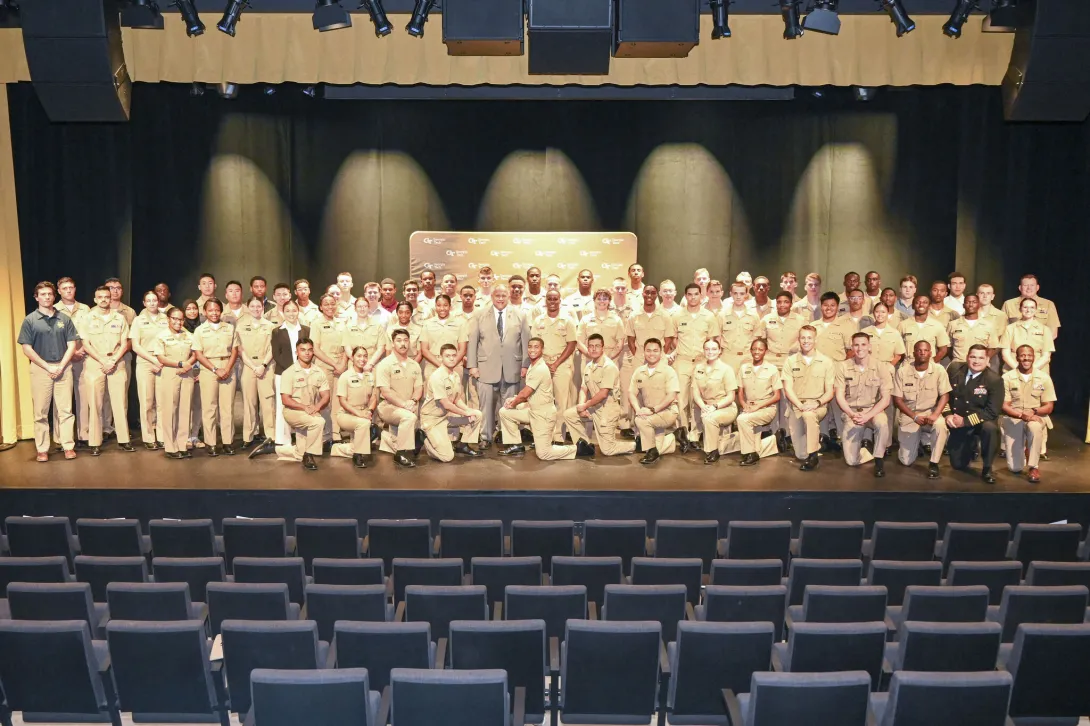

The Georgia Tech Research Institute (GTRI) proudly hosted U.S. Secretary of the Navy Carlos Del Toro during his recent campus visit. Del Toro's visit underscored the critical role of innovation and technology in national security and highlighted Georgia Tech's significant contributions to this effort.

“Our Navy-Marine Corps Team remains at the center of global and national security — maintaining freedom of the seas, international security, and global stability,” he explained in his remarks at the John Lewis Student Center. “To win the fight of the future, we must embrace and implement emerging technologies.”

The Secretary provided an update on science and technology research to the Atlanta Region Naval Reserve Officer Training Corps unit, composed of midshipmen from Georgia Tech, Georgia State University, Kennesaw State University, Morehouse College, Spelman College, and Clark Atlanta University. Del Toro has worked to establish a new Naval Science and Technology Strategy to address current and future challenges faced by the Navy and Marine Corps. The strategy serves as a global call to service and innovation for stakeholders in academia, industry, and government.

“The Georgia Tech Research Institute has answered this call,” he said.

A key pillar of the new strategy, says Del Toro, was the establishment of the Department of the Navy’s Science and Technology Board in 2023, “with the intent that the board provide independent advice and counsel to the department on matters and policies relating to scientific, technical, manufacturing, acquisition, logistics, medicine, and business management functions.”

The board, which includes Georgia Tech Manufacturing Institute (GTMI) Executive Director Thomas Kurfess, has conducted six studies in its inaugural year to identify new technologies for rapid adoption and provide near-term, practical recommendations for quick implementation by the Navy.

“I recently led the team for developing a strategy for integrating additive manufacturing into the Navy’s overall shipbuilding and repair strategy,” says Kurfess. “We just had final approval of our recommendations — we are making a significant impact on the Navy with respect to additive manufacturing.”

Del Toro's visit to Georgia Tech reaffirms the Institute's role as a leader in research and innovation, particularly in areas critical to national security. The collaboration between Georgia Tech and the Department of the Navy continues to drive advancements that ensure the safety and effectiveness of the nation's naval forces.

“Innovation is at the heart of our efforts at Georgia Tech and GTMI,” says Kurfess. “It is an honor to put that effort toward ensuring our country’s safety and national security in partnership with the U.S. Navy.”

“As our department continues to reimagine and refocus our innovation efforts,” said Del Toro, “I encourage all of you — our nation’s scientists, engineers, researchers, and inventors — to join us.”

News Contact

Audra Davidson

Research Communications Program Manager

Georgia Tech Manufacturing Institute

Dec. 05, 2024

As automation and AI continue to transform the manufacturing industry, the need for seamless integration across all production stages has never been greater. Digital integration links the digital design of products, control of the machinery that builds them, and precise data collection at each step. The result is a streamlined manufacturing process with less material waste, lower cost, and shorter production time.

Recently, the Georgia Tech Manufacturing Institute (GTMI) teamed up with OPEN MIND Technologies to host an immersive, weeklong training session on hyperMILL, an advanced manufacturing software package that enables this digital integration.

OPEN MIND, the developer of hyperMILL, has been a longtime supporter of research operations in Georgia Tech’s Advanced Manufacturing Pilot Facility (AMPF). “Our adoption of their software solutions has allowed us to explore the full potential of machines and to make sure we keep forging new paths,” said Steven Ferguson, a principal research scientist at GTMI.

Software like hyperMILL helps plan the most efficient and accurate way to cut, shape, or 3D print materials on different machines, making the process faster and easier. Hosted at the AMPF, the immersive training offered 10 staff members and students a hands-on platform to use the software while practicing machining and additive manufacturing techniques.

“The number of new features and tricks that the software has every year makes it advantageous to stay current and get a refresher course,” said Alan Burl, a Ph.D. student in the George W. Woodruff School of Mechanical Engineering who attended the training session. “More advanced users can learn new tips and tricks while simultaneously exposing new users to the power of a fully featured, computer-aided manufacturing software.”

OPEN MIND Technologies has partnered with Georgia Tech for over five years to support digital manufacturing research, offering biannual training in their latest software to faculty and students.

“Meeting the new graduate students each fall is something that I look forward to,” said Brad Rooks, an application engineer at OPEN MIND and one of the co-leaders of the training session. “This particular group posed questions that were intuitive and challenging to me as a trainer — their inquisitive nature drove me to look at our software from fresh perspectives.”

The company is also a member of GTMI’s Manufacturing 4.0 Consortium, a membership-based group that unites industry, academia, and government to develop and implement advanced manufacturing technologies and train the workforce for the market.

“The strong reputation of GTMI in the manufacturing industry, and more importantly, the reputation of the students, faculty, and researchers who support research within our facilities, enables us to forge strategic partnerships with companies like OPEN MIND,” says Ferguson, who also serves as executive director of the consortium. “These relationships are what makes working with and within GTMI so special.”

News Contact

Audra Davidson

Research Communications Program Manager

Georgia Tech Manufacturing Institute

Dec. 03, 2024

Tequila A.L. Harris, a professor in the George W. Woodruff School of Mechanical Engineering at Georgia Tech, leads energy and manufacturing initiatives at the Strategic Energy Institute. Her research explores the connectivity between the functionality of nano- to macro-level films, components, and systems based on their manufacture or design and their life expectancy, elucidating mechanisms by which performance or durability can be predicted. She uses both simulations and experimentation to better understand this connectivity.

By addressing complex, fundamental problems, Harris aims to make an impact on many industries, in particular energy (e.g., polymer electrolyte membrane fuel cells), flexible electronics (e.g., organic electronics), and clean energy (e.g., water), among others.

Harris has experience in developing systematic design and manufacturing methodologies for complex systems that directly involve material characterization, tooling design and analysis, computational and analytical modeling, experimentation, and system design and optimization. Currently, her research projects focus on investigating the fundamental science associated with fluid transport, materials processing, and design issues for energy/electronic/environmental systems. Below is a brief Q&A with Harris, where she discusses her research and how it influences the energy and manufacturing initiatives at Georgia Tech.

- What is your field of expertise and at what point in your life did you first become interested in this area?

In graduate school, I aimed to become a roboticist but shifted my focus after realizing I was not passionate about coding. This led me to explore manufacturing, particularly scaled manufacturing processes that transform fluids into thin films for applications in energy systems. As a result, my expertise is in coating science and technology and manufacturing system development.

- What questions or challenges sparked your current energy research? What are the big issues facing your research area right now?

We often ask how we can process materials more cost-effectively and create complex architectures that surpass current capabilities. In energy systems, particularly with fuel cells, reducing the number of manufacturing steps is crucial, as each additional step increases costs and complexity. As researchers, we focus on understanding the implications of minimizing these steps and how they affect the properties and performance of the final devices. My group studies these relationships to find innovative manufacturing solutions. A major challenge in the manufacture of materials lies in scaling efficiently while maintaining performance and keeping costs low enough for commercial adoption. This is a pressing issue, especially for enabling technologies such as batteries, fuel cells, and flexible electronics needed for electric vehicles, where the production volumes are on the order of billions per year.

- What interests you the most in leading the research initiative on energy and manufacturing? Why is your initiative important to the development of Georgia Tech’s energy research strategy?

What interests me most is the inherent possibility of advancing energy technologies holistically, from materials sourcing and materials production to public policy. More specifically, my interests are in understanding how we can scale the manufacture of burgeoning technologies for a variety of areas (energy, food, pharmaceuticals, packaging, and flexible electronics, among others) while reducing cost and increasing production yield. In this regard, we aim to incorporate artificial intelligence and machine learning in addition to considering limitations surrounding the production lifecycle. These goals cannot be met in a silo; they require interdisciplinary teams that converge on specific problems. Georgia Tech is uniquely positioned to make significant impacts in the energy and manufacturing ecosystem, thanks to our robust infrastructure and expertise. With many manufacturers relocating to Georgia, particularly in the "energy belt" for EVs, batteries, and recycling facilities, Georgia Tech can serve as a crucial partner in advancing these industries and their technologies.

- What are the broader global and social benefits of the research you and your team conduct on energy and manufacturing?

The global impact of advancing manufacturing technologies is significant for processing at economically relevant scales. To meet such demands, we cannot always rely on existing manufacturing know-how. The Harris group holds the intellectual property on innovative processes that allow for the faster fabrication of individual or multiple materials and that deliver higher yields and better performance than existing methods. Improvements in manufacturing systems often result in reduced waste, which is beneficial to the overall materials development ecosystem. Another global and societal benefit is workforce development. The students on my team are well trained in the manufacture of materials using tools that are amenable to the most advanced and scalable manufacturing platform, roll-to-roll manufacturing, with integrated coating and printing tools. This unique skill set equips our students to thrive and become leaders in their careers.

- What are your plans for engaging a wider Georgia Tech faculty pool with the broader energy community?

By leveraging the new modular pilot-scale roll-to-roll manufacturing facility that integrates slot die coating, gravure/flexography printing, and inkjet printing, I plan to continue reaching out to faculty and industrial partners to find avenues for us to collaborate on a variety of interdisciplinary projects. The goal is to create groups that can help us advance materials development more rapidly by working as a collective from the beginning, versus considering scalable manufacturing pathways as an afterthought. By bringing interdisciplinary groups (chemists, materials scientists, engineers, etc.) together early, we can more efficiently and effectively overcome traditional delays in getting materials to market or, worse, the inability to push materials to market (which is commonly known as the valley of death). This can only be achieved by dismantling barriers that hinder early collaboration. This new facility aims to foster collaborative work among stakeholders, promoting the integrated development and characterization of various materials systems and technologies, and ultimately leading to more efficient manufacturing practices.

- What are your hobbies?

I enjoy cooking and exploring my creativity in this space by combining national and international ingredients to make interesting and often delicious fusion cuisines. I also enjoy roller skating, cycling, and watching movies with my family and friends.

- Who has influenced you the most?

From a professional standpoint, my research team influences me the most. After I present them with a problem, they are encouraged and expected to think beyond our initial starting point. This ability to freely think and conceive of novel solutions sparks many new ideas on which to build future ideas. The best cases have kept me up at night, inspiring me to think about how to approach new problems and funding opportunities. I carry their experiences and challenges with me. Their influence on me is profound and is fundamentally why I am a professor.

News Contact

Priya Devarajan || SEI Communications Program Manager

Nov. 26, 2024

As head of the Department of Energy’s (DOE’s) Office of Science, the nation’s largest federal sponsor of physical sciences research, Under Secretary Geri Richmond understands the vital role of higher education in advancing U.S. science and innovation. On Monday, Nov. 18, she visited Georgia Tech with Chief of Staff in the Office of the Under Secretary for Science and Innovation Ariel Marshall, Ph.D. Chem 14, to meet with students and faculty and discuss future opportunities for collaboration.

During the visit, Richmond and Marshall toured Dr. Thomas Orlando’s electron and photo induced chemistry on surfaces lab; the Invention Studio; Dr. Akanksha Menon’s water-energy research lab; and the AI Maker Space.

Richmond also joined the Women+ in Chemistry student group for a roundtable discussion. An advocate for underrepresented groups in STEM fields, Richmond is the founding director of the Committee on the Advancement of Women Chemists (COACh). COACh is a grassroots organization dedicated to ensuring equal opportunities for all in science.

Georgia Tech’s longstanding partnership with the DOE is centered on research and technology development aimed at advancing energy systems and promoting sustainability. The Institute plays a key role in the DOE’s national initiatives, contributing to transformative work in energy efficiency, renewable energy, nuclear power, and environmental sustainability. Through joint research programs, grants, and initiatives, Georgia Tech continues to drive innovation and push the boundaries of energy solutions for a sustainable future.

Nov. 21, 2024

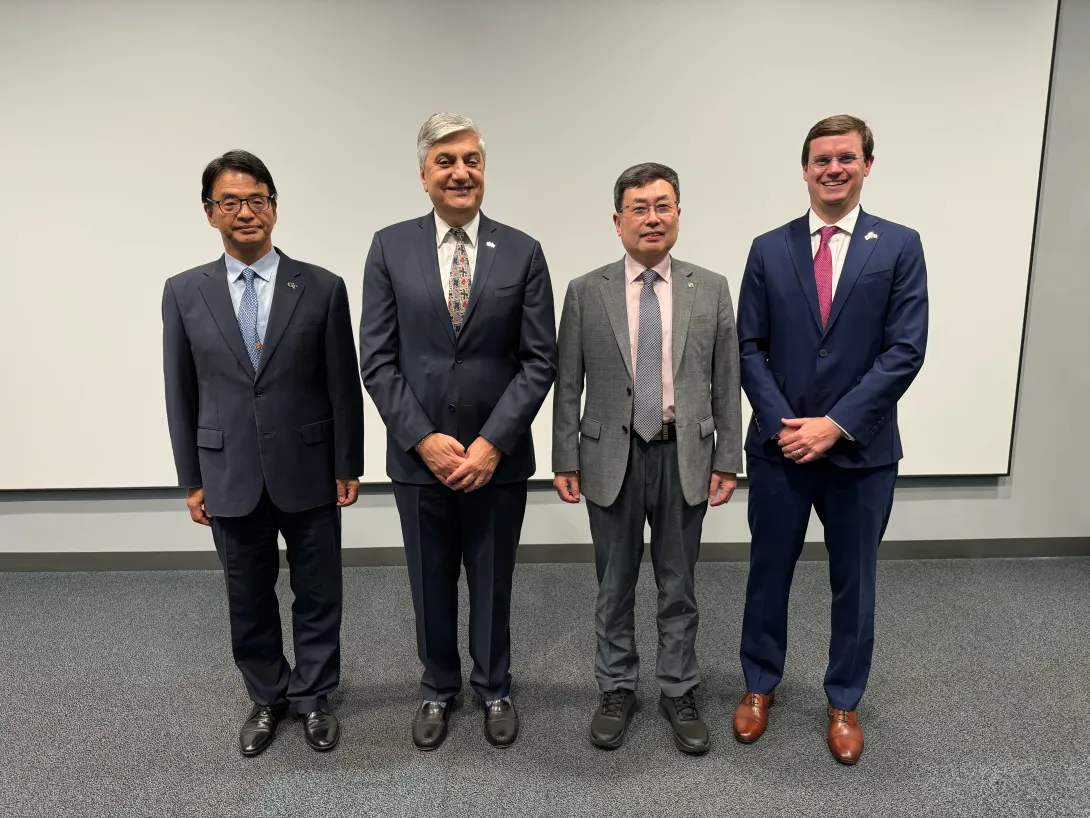

In a significant step towards fostering international collaboration and advancing cutting-edge technologies in manufacturing, Georgia Tech recently signed Memorandums of Understanding (MoUs) with the Korea Institute of Industrial Technology (KITECH) and the Korea Automotive Technology Institute (KATECH). Facilitated by the Georgia Tech Manufacturing Institute (GTMI), this landmark event underscores Georgia Tech’s commitment to global partnerships and innovation in manufacturing and automotive technologies.

“This is a great fit for the institute, the state of Georgia, and the United States, enhancing international cooperation,” said Thomas Kurfess, GTMI executive director and Regents’ Professor in the George W. Woodruff School of Mechanical Engineering (ME). “An MoU like this really gives us an opportunity to bring together a larger team to tackle international problems.”

“An MoU signing between Georgia Tech and entities like KITECH and KATECH signifies a formal agreement to pursue shared goals and explore collaborative opportunities, including joint research projects, academic exchanges, and technological advancements,” said Seung-Kyum Choi, an associate professor in ME and a major contributor in facilitating both partnerships. “Partnering with these influential institutions positions Georgia Tech to expand its global footprint and enhance its impact, particularly in areas like AI-driven manufacturing and automotive technologies.”

The state of Georgia has seen significant growth in investments from Korean companies. Over the past decade, approximately 140 Korean companies have committed around $23 billion to various projects in Georgia, creating over 12,000 new jobs in 2023 alone. This influx of investment underscores the strong economic ties between Georgia and South Korea, further bolstered by partnerships like those with KITECH and KATECH.

“These partnerships not only provide access to new resources and advanced technologies,” said Choi, “but also create opportunities for joint innovation, furthering GTMI’s mission to drive transformative breakthroughs in manufacturing on a global scale.”

The MoUs with KITECH and KATECH are expected to facilitate a wide range of collaborative activities, including joint research projects that leverage the strengths of both institutions, academic exchanges that enrich the educational experiences of students and faculty, and technological advancements that push the boundaries of current manufacturing and automotive technologies.

“My hopes for the future of Georgia Tech’s partnerships with KITECH and KATECH are centered on fostering long-term, impactful collaborations that drive innovation in manufacturing and automotive technologies,” Choi noted. “These partnerships do not just expand our reach; they solidify our leadership in shaping the future of manufacturing, keeping Georgia Tech at the forefront of industry breakthroughs worldwide.”

Georgia Tech has a history of successful collaborations with Korean companies, including a multidecade partnership with Hyundai. Recently, the Institute joined forces with the Korea Institute for Advancement of Technology (KIAT) to establish the KIAT-Georgia Tech Semiconductor Electronics Center to advance semiconductor research, fostering sustainable partnerships between Korean companies and Georgia Tech researchers.

“Partnering with KATECH and KITECH goes beyond just technological innovation,” said Kurfess. “It really enhances international cooperation, strengthens local industry, drives job creation, and boosts Georgia’s economy.”

News Contact

Audra Davidson

Research Communications Program Manager

Georgia Tech Manufacturing Institute

Nov. 19, 2024

In 2017, a long, oddly shaped asteroid passed by Earth. Called ‘Oumuamua, it was the first known interstellar object to visit our solar system, but it wasn’t an isolated incident — less than two years later, in 2019, a second interstellar object (ISO) was discovered.

“‘Oumuamua was found passing just 15 million miles from Earth — that’s much closer than Mars or Venus,” says James Wray. “But it was formed in an entirely different solar system. Studying these objects could give us incredible insight into extrasolar planets, and how our planet fits into the universe.”

Wray, a professor in the School of Earth and Atmospheric Sciences at Georgia Tech, has been awarded a Simons Foundation Pivot Fellowship to do just that. Pivot Fellowships are among the most prestigious sources of funding for cutting-edge research; they support leading researchers who have the deep interest, curiosity, and drive to contribute to a new discipline.

Wray has primarily studied the geoscience of Mars. He will leverage knowledge of nearby planets to understand ISOs and planets much farther away. “I want to understand how planets got to be the way they are, and if they could have ever hosted life,” he explains. “Extrasolar planets give us many more places to ask those questions than our solar system does, but they're too distant to visit with spacecraft. ISOs provide a unique opportunity to explore other solar systems without leaving our own.”

The Fellowship will provide salary support as well as funding for research, travel, and professional development. “Seed funds like this are so valuable,” says Wray. “I’m incredibly grateful to the Simons Foundation. I’d also like to thank Georgia Tech for its support,” he adds, sharing that the Center for Space Technology and Research supported a related research effort at the University of Hawaii earlier this year. “My mentor and I were able to spend some of that time improving our Pivot Fellowship proposal, which played a critical role in securing this Fellowship.”

In search of ISOs

Wray will study small solar system bodies like asteroids and comets to decode the processes of planet formation and space weathering, and will analyze data from the 2017 and 2019 ISOs.

He will also work alongside collaborators including Karen Meech of the University of Hawaii, who led the paper characterizing ‘Oumuamua, to conceptualize what an intercept mission might look like.

“We still have a lot of questions regarding ISOs,” he says. “Hundreds of papers have already been written about them, but we still don't know the answers.” One key mystery is the composition of the bodies: both the 2017 and 2019 objects were compositionally different from those in our solar system.

“Are they inherently different from the bodies in our solar system, or did the long journey to our solar system make them that way? Is our solar system different from others?” Wray asks. “We could answer so many questions with even a simple picture of the next ISO that comes close enough for us to intercept with spacecraft.”

A cosmic timeline

While there is no guarantee that another ISO will be spotted in our solar system, the timing is opportune: upcoming telescope surveys are poised to detect such interstellar objects. “In mid-2025, when I will start this Fellowship, the new Rubin Observatory will begin scanning the entire sky,” Wray says. “It has the potential to discover up to several new ISOs per year.”

“ISO visits are always brief,” he adds, “so the research needs to be in place for when one is spotted.” If an interstellar object is detected, Wray and Meech will be poised to leverage specialized telescopes in Hawaii, along with others worldwide, to better understand it, studying its size, shape, and composition — and potentially sending spacecraft to image it.

“We might never find another ISO — or they might be the key to imminent breakthroughs in understanding our place in the galaxy,” Wray adds. “I'm extremely grateful to the Simons Foundation for the flexibility to pursue this research at whatever pace the cosmos allows.”

News Contact

Written by Selena Langner
