Aug. 14, 2025

This study examines how short-term variability in wind power, known as wind intermittency, affects real-time electricity system imbalances in U.S. regional power markets. The authors, Victoria Godwin and Matthew E. Oliver, are Georgia Institute of Technology researchers and EPIcenter affiliates. They analyze data from four major system operators: Bonneville Power Administration (BPA), New York ISO (NYISO), Southwest Power Pool (SPP), and PJM Interconnection. They focus on Area Control Error (ACE), a real-time metric that grid operators use to measure the mismatch between electricity supply and demand, adjusted for frequency deviations. Keeping ACE near zero is essential for grid stability.
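For readers unfamiliar with ACE, the sketch below follows the general shape of the definition in NERC's balancing standard (BAL-001) for a single balancing area. It takes the deviation of actual from scheduled net interchange, subtracts a frequency-bias term, and subtracts any metering-error correction. This is a simplified illustration, not necessarily the exact formula any of the four operators uses. The Western Interconnection, which includes BPA, adds an automatic time-error-correction term that is omitted here. The function name and the example numbers are hypothetical. A positive result corresponds to over-generation and a negative result to under-generation.

```python
def area_control_error(
    ni_actual_mw: float,
    ni_scheduled_mw: float,
    freq_actual_hz: float,
    freq_scheduled_hz: float,
    bias_mw_per_0p1hz: float,
    meter_error_mw: float = 0.0,
) -> float:
    """Simplified ACE in MW; positive means the area is over-generating.

    bias_mw_per_0p1hz is the frequency bias setting, conventionally a
    negative number expressed in MW per 0.1 Hz (hence the factor of 10).
    """
    interchange_error = ni_actual_mw - ni_scheduled_mw
    frequency_term = 10.0 * bias_mw_per_0p1hz * (freq_actual_hz - freq_scheduled_hz)
    return interchange_error - frequency_term - meter_error_mw


# Hypothetical example: exporting 50 MW more than scheduled at nominal frequency.
print(area_control_error(1050.0, 1000.0, 60.0, 60.0, bias_mw_per_0p1hz=-100.0))  # 50.0
# Same interchange error while frequency sags to 59.98 Hz: part of the extra
# export is the area's expected frequency response, so ACE shrinks to about 30 MW.
print(area_control_error(1050.0, 1000.0, 59.98, 60.0, bias_mw_per_0p1hz=-100.0))
```

The bias term keeps an area from being penalized for helping to arrest a frequency drop elsewhere on the interconnection. Only the interchange error that frequency response does not explain counts as imbalance.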

The authors find that a doubling of hourly wind generation variance increases average hourly ACE by 2% in BPA, 3.7% in NYISO, and 11.4% in SPP—equivalent to 1.2 MW, 1.8 MW, and 9.35 MW increases in system imbalance, respectively. PJM shows no significant effect, likely due to less granular data. They also show that sudden increases in wind generation are more likely to cause oversupply (positive ACE), while sudden drops lead to undersupply (negative ACE), confirming asymmetric operational impacts.
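Each percentage and its MW equivalent together imply the baseline average hourly ACE in that market. The short calculation below backs that baseline out. It assumes both figures refer to the same average hourly ACE. Because the published inputs are rounded, the implied baselines are approximate, and the study does not report them.

```python
# Reported effects of doubling hourly wind-generation variance:
# (fractional increase in average hourly ACE, equivalent increase in MW)
reported = {
    "BPA": (0.020, 1.20),
    "NYISO": (0.037, 1.80),
    "SPP": (0.114, 9.35),
}


def implied_baseline_ace_mw(pct_increase: float, mw_increase: float) -> float:
    """Baseline average ACE implied if mw_increase is pct_increase of it."""
    return mw_increase / pct_increase


for operator, (pct, mw) in reported.items():
    # prints roughly 60.0, 48.6 and 82.0 MW for BPA, NYISO and SPP
    print(f"{operator}: ~{implied_baseline_ace_mw(pct, mw):.1f} MW implied baseline")
```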


News Contact

Gilbert Gonzalez || EPIcenter

Media Contact: Priya Devarajan | Strategic Energy Institute

Jul. 18, 2025

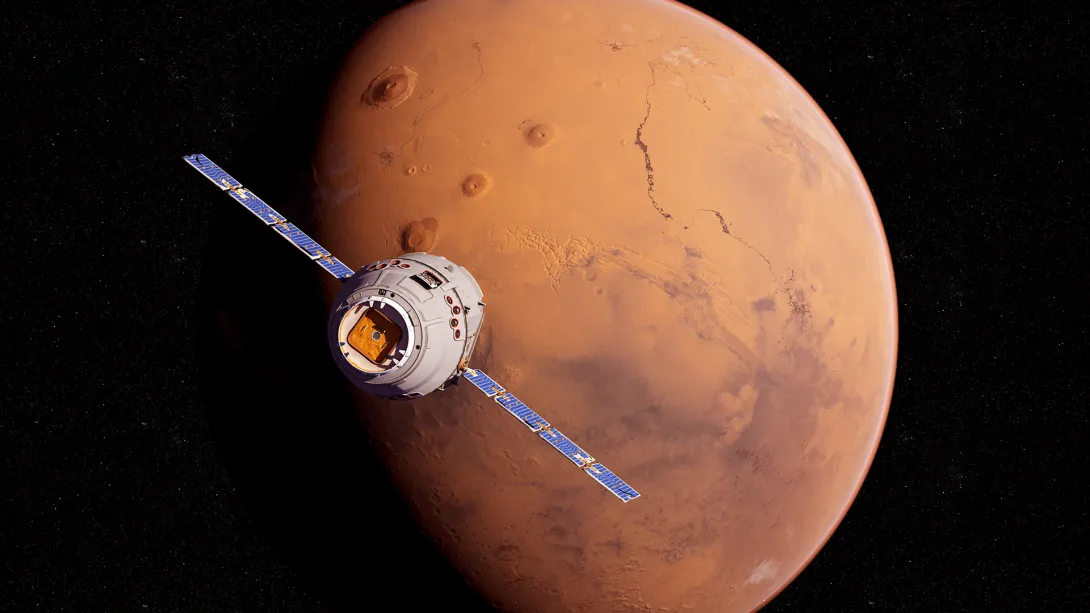

As more satellites launch into space, the satellite industry has sounded the alarm about the danger of collisions in low Earth orbit (LEO). What is less understood is what might happen as more missions head to a more targeted destination: the moon.

According to The Planetary Society, more than 30 missions are slated to launch to the moon between 2024 and 2030, backed by the U.S., China, Japan, India, and various private corporations. That compares to over 40 missions to the moon between 1959 and 1979 and a scant three missions between 1980 and 2000.

A multidisciplinary team at Georgia Tech has found that while collision probabilities in orbits around the moon are very low compared to Earth orbit, spacecraft in lunar orbit will likely need to conduct multiple costly collision avoidance maneuvers each year. The Journal of Spacecraft and Rockets published the Georgia Tech collision-avoidance study in March.

“The number of close approaches in lunar orbit is higher than some might expect, given that there are only tens of satellites, rather than the thousands in low Earth orbit,” says paper co-author Mariel Borowitz, associate professor in the Sam Nunn School of International Affairs in the Ivan Allen College of Liberal Arts.

Borowitz and other researchers attribute these risky approaches in part to spacecraft often choosing a limited number of favorable orbits and the difficulty of monitoring the exact location of spacecraft that are more than 200,000 miles away.

“There is significant uncertainty about the exact location of objects around the moon. This, combined with the high cost associated with lunar missions, means that operators often undertake maneuvers even when the probability is very low — up to one in 10 million,” Borowitz explains.

The Georgia Tech research is the first published study of short- and long-term collision risks in cislunar orbits. The researchers ran a series of Monte Carlo simulations. This method estimates the probability of different outcomes by repeatedly sampling the random variables that make a process hard to predict directly.
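The Monte Carlo idea can be illustrated with a toy conjunction. The sketch samples the uncertain relative position of two spacecraft at closest approach. It then counts how often the samples fall within their combined hard-body radius and compares that fraction with a maneuver threshold, such as the one-in-10-million figure Borowitz cites.

This is a simplified, hypothetical sketch, not the Georgia Tech team's model. It assumes an isotropic Gaussian position error in a 2-D encounter plane. The function names and example values are invented, and the values are chosen only so the estimate converges quickly. For a zero nominal miss distance, the exact answer is 1 - exp(-R^2 / (2 sigma^2)), which provides a check on the sampler.

```python
import math
import random


def collision_probability_mc(miss_x_km, miss_y_km, sigma_km, hard_body_radius_km,
                             n_samples=100_000, seed=0):
    """Estimate collision probability by sampling the relative position.

    Simplifying assumptions (for illustration only): the nominal miss vector
    lies in a 2-D encounter plane, and the position error on each axis is
    independent Gaussian noise with the same standard deviation sigma_km.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    hits = 0
    for _ in range(n_samples):
        dx = miss_x_km + rng.gauss(0.0, sigma_km)
        dy = miss_y_km + rng.gauss(0.0, sigma_km)
        if math.hypot(dx, dy) < hard_body_radius_km:
            hits += 1
    return hits / n_samples


def zero_miss_probability(sigma_km, hard_body_radius_km):
    """Exact result for a zero nominal miss distance (Rayleigh distribution)."""
    return 1.0 - math.exp(-hard_body_radius_km**2 / (2.0 * sigma_km**2))


def should_maneuver(probability, threshold=1e-7):
    """Maneuver if the estimated probability reaches the operator's threshold."""
    return probability >= threshold


estimate = collision_probability_mc(0.0, 0.0, sigma_km=1.0, hard_body_radius_km=1.0)
print(f"Monte Carlo: {estimate:.3f}, exact: {zero_miss_probability(1.0, 1.0):.3f}")
print("maneuver?", should_maneuver(estimate))
```

Resolving probabilities near one in 10 million by brute-force sampling would take vastly more samples than this. Operational screening tools commonly evaluate the same probability integral with analytic approximations instead.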

“Our analysis suggests that satellite operators must perform up to four maneuvers annually for each satellite for a fleet of 50 satellites in low lunar orbit (LLO),” said one of the study’s authors, Brian Gunter, associate professor in the Daniel Guggenheim School of Aerospace Engineering.

He noted that with only 10 satellites in LLO, a satellite might still need a yearly maneuver. This is supported by what current cislunar operators have reported.

Favored Orbits

Most close encounters are expected to occur near the moon’s equator, where the orbital planes of commonly used “frozen” orbits and low lunar orbits, both preferred by many operators, intersect. Congestion could also develop at the Lagrangian points, regions where the gravitational pulls of Earth and the moon, combined with the effects of their orbital motion, balance out. Periodic orbits around these points have names such as halo and Lyapunov orbits.
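To make the balance of forces concrete, the sketch below locates the Earth-moon L1 point in the circular restricted three-body problem. This is the standard simplified model in which Earth and the moon move in circular orbits about their common center of mass. In the rotating frame, L1 is where gravity from both bodies and the centrifugal term cancel along the Earth-moon line. The mass ratio and mean distance are rounded textbook values. This is an illustration only, not anything from the study.

```python
EARTH_MOON_DISTANCE_KM = 384_400.0  # mean Earth-moon distance (rounded)
MU = 0.01215  # moon mass / (Earth mass + moon mass), rounded


def axial_acceleration(x, mu):
    """Net acceleration along the Earth-moon line in the rotating frame.

    Nondimensional units: Earth-moon distance = 1, angular rate = 1,
    Earth at x = -mu, moon at x = 1 - mu.
    """
    r_earth = x + mu          # signed distance from Earth
    r_moon = x - (1.0 - mu)   # signed distance from the moon
    centrifugal = x
    gravity_earth = -(1.0 - mu) * r_earth / abs(r_earth) ** 3
    gravity_moon = -mu * r_moon / abs(r_moon) ** 3
    return centrifugal + gravity_earth + gravity_moon


def find_l1(mu, tol=1e-12):
    """Bisection between Earth and the moon, where the acceleration changes sign once."""
    lo, hi = -mu + 1e-6, 1.0 - mu - 1e-6
    f_lo = axial_acceleration(lo, mu)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = axial_acceleration(mid, mu)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


x_l1 = find_l1(MU)
distance_from_moon_km = (1.0 - MU - x_l1) * EARTH_MOON_DISTANCE_KM
print(f"L1 is about {distance_from_moon_km:,.0f} km from the moon")  # roughly 58,000 km
```

The point is an equilibrium only in this idealized model. Real spacecraft orbiting near it still need occasional station-keeping.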

“Lagrangian points are an interesting place to put a satellite because it can maintain its orbit for long periods with very little maneuvering and thrusting. Frozen orbits, too. Anywhere outside these special areas, you have to spend a lot of fuel to maintain an orbit,” Gunter said.

Gunter and other researchers worry that if operators aren’t coordinated about how they plan lunar missions, opportunities for collision will increase in these popular orbits.

“The close approaches were much more common than I would have intuitively anticipated,” says lead study author Stef Crum.

The 2024 graduate of Georgia Tech’s aerospace engineering doctoral program notes that, considering the small number of satellites in lunar orbit, the need for multiple maneuvers was “really surprising.”

Crum, who in 2024 co-founded Reditus Space, a startup that aims to provide reusable orbital re-entry services, adds that the cislunar environment is so challenging because “it’s incredibly vast.”

His research also examines ways to improve object monitoring in cislunar space. Maintaining continuous custody of these objects is difficult because a target’s position must be monitored over the entire duration of its trajectory.

“That wasn’t feasible for translunar orbits, given the vast volume of cislunar orbit, which stretches multiple millions of kilometers in three dimensions,” he says.

Rather than relying on continuous tracking (a process known as continuous custody), Crum estimated a satellite’s orbit from observed data and constrained its presumed location and direction, which greatly simplified the process.

“You no longer need thousands of satellites or a set of enormous satellites to cover all potential trajectories,” he explains. “Instead, one or a few satellites are required, and operators can lose custody for a time as long as the connection is reacquired later.”

Since the team started their study, interest in the moon and cislunar activity has grown considerably: both NASA and the China National Space Administration are planning to send humans to the moon. In the last two years, India, Japan, the U.S., China, Russia, and four private companies have attempted missions to the moon.

Why the Moon

Spacefaring nations’ intense interest in exploring the lunar surface comes as no surprise, given that the moon offers a variety of resources, including solar power, water, oxygen, and metals like iron, titanium, and uranium. It also contains helium-3, a potential fuel for nuclear fusion, and rare earth metals vital for modern technology. With the recent discovery of water ice, the moon could become a plentiful source of rocket fuel. The ice can be split into hydrogen and oxygen, which can then be liquefied as propellant for deep space missions to destinations like Mars. In February, Georgia Tech announced that researchers have developed new algorithms to help Intuitive Machines’ lunar lander find water ice on the moon.

Commercial space companies like Axiom Space and Redwire Space, as well as space agencies, are actively building lunar infrastructure, from satellite constellations to orbital platforms to support communication, navigation, scientific research, and eventually space tourism.

A key project is the Lunar Gateway, a joint venture of NASA, international space agencies such as ESA, JAXA, and CSA, and commercial partners. Planned as humanity’s first space station around the moon, it will serve as a central hub for human exploration of the moon and is considered a stepping stone for future deep space missions.

Getting Ahead of a Gold Rush to the Moon

All this activity underscores the urgency to get out in front of potential crowding issues — something that hasn’t occurred in LEO, where near-miss collisions, or conjunctions, are frequent. LEO, which is 100 to 1,200 miles above the Earth’s surface, is host to more than 14,000 satellites and 120 million pieces of debris from launches, collisions, and wear and tear, reports Reuters.

“Using the near-Earth environment as an example, the space object population has gone from approximately 6,000 active satellites in the early 2020s to an anticipated 60,000 satellites in the coming decade if the projected number of large satellite constellations currently in the works gets deployed. That poses many challenges in terms of how we can manage that sustainably,” observed Gunter. “If something similar happens in the lunar environment, say if Artemis (NASA’s program to establish the first long-term presence on the moon) is successful and a lunar base is established, and there is discovery of volatiles or water deposits, it could initiate a kind of gold rush effect that might accelerate the number of actors in cislunar space.”

For this reason, Borowitz argues for the need to begin working on coordination, either in the planning of the orbits for future missions or by sharing information about the location of objects operating in lunar orbit. She pointed out that spacecraft outfitted for moon missions are expensive, making a collision highly costly. Also, debris from such a scenario would spread in an unpredictable way, which could be problematic for other objects.

Gunter agreed, noting, “If we’re not careful, we could be putting a lot of things in this same path. We must ensure we build out the cislunar orbital environment in a smart way, where we’re not intentionally putting spacecraft in the same orbital spaces. If we do that, everyone should be able to get what they want and not be in each other’s way.”

Borowitz says some coordination efforts are underway with the UN Committee on the Peaceful Uses of Outer Space and the creation of an action team on lunar activities; however, international diplomacy is a time-consuming process, and it can be a challenge to keep pace with advancements in technology.

She contends that the Georgia Tech study could provide baseline data that “could be helpful for international coordination efforts, helping to ensure that countries better understand potential future risks.”

Gunter and Borowitz say that follow-on research could examine the Lunar Gateway orbit and other special orbits to see how crowded they are likely to become. The team could then use end-to-end simulations of these orbits to determine the most effective way to build them out while avoiding collision risks. Ultimately, they intend to develop guidelines to help ensure that future space actors headed to the moon can operate safely.

Jun. 25, 2025

More than half a century after the United States won the race to the moon, the White House is setting its sights on a new frontier: Mars. In a move reminiscent of the Apollo era, the administration has proposed landing Americans on the red planet by the end of 2026 — a bold initiative that has reignited national ambition and drawn comparisons to the space race of the 20th century.

At Georgia Tech, researchers are already considering the mission’s implications, from engineering challenges to international diplomacy. While the White House has framed the mission as a demonstration of American leadership, experts say its success will depend on collaboration — across disciplines, sectors, and borders.

“This is more than a space race,” said Christos Athanasiou, an assistant professor in the Daniel Guggenheim School of Aerospace Engineering. “Mars isn’t just the next step for space exploration — it’s a stress test for everything we’ve learned about sustainability, resilience, and engineering under uncertainty.”

Engineering for the Red Planet

For Athanasiou, the Mars mission is a test of human ingenuity, creativity, and endurance. Unlike the moon, Mars is months away by spacecraft, with no quick return option. That distance introduces a host of engineering challenges that must be solved before a single boot touches Martian soil.

“Ensuring astronaut safety on such a long-duration mission requires us to understand how the Earth materials we will be using in our mission behave in extraterrestrial conditions,” he said.

In his recent TEDx talk, Athanasiou emphasized that the mission must also consider its environmental impact. Mars may be barren, but it is not immune to contamination. Athanasiou believes that strategies used for environmental remediation on Earth — such as waste recycling, habitat sustainability, and pollution control — can be adapted to protect the Martian environment.

“If we can build structures that survive Mars using recycled materials, AI, and Earth-born ingenuity, we’ll unlock entirely new ways to live — both out there and back here,” he said.

Reading the Martian Landscape

James Wray, a professor in the School of Earth and Atmospheric Sciences, has spent years analyzing Mars’ surface using data from orbiters and rovers. He sees the planet as both a scientific treasure trove and a logistical puzzle.

“Mars has vast lava plains, dust storms, and steep canyons that pose real risks to human settlement,” Wray said.

But beneath the challenges lies opportunity. Mars is home to significant deposits of water ice, especially near the poles and just below the surface in some mid-latitude regions. That water could be used not only for drinking but also for producing oxygen and rocket fuel — critical resources for long-term habitation and return missions.

“The presence of water ice near the surface is a game changer. It could support life, and more importantly, it could support us,” Wray said.

He also noted that Mars’ thin atmosphere — just 1% the density of Earth’s — complicates everything from landing spacecraft to shielding astronauts from cosmic radiation. “We’ve learned a lot from robotic missions. Now it’s time to apply that knowledge to human exploration.”

Diplomacy Beyond Earth

Lincoln Hines, an assistant professor in the Sam Nunn School of International Affairs, says that the Mars mission could have significant diplomatic implications. “The Mars mission has little to no bearing on space security; it has no military value,” he said. However, he noted that international cooperation could still play a valuable role in reducing the financial burden of such a costly endeavor.

Hines warned that shifting U.S. priorities from the moon to Mars could strain the international partnerships built through the Artemis program. He explained that some countries may view the Mars initiative as a distraction from the more immediate and economically promising lunar goals. Political instability in the U.S., he added, could further erode trust in its long-term commitments. “Countries may lose faith that the United States is a reliable partner to cooperate with for its lunar program if Mars seems to be the new priority,” he said.

He also pointed to existing legal frameworks like the Outer Space Treaty, which prohibits sovereign claims on celestial bodies, and the Rescue Agreement, which obliges nations to assist astronauts in distress. While these agreements provide a foundation, Hines emphasized that they don’t fully address the complexities of future Mars missions.

Establishing international norms for Mars exploration, he said, will be challenging. “Norms are really hard to develop,” Hines explained, noting that countries often hesitate to commit to rules without assurance that others will do the same. Still, he suggested that Mars — with its limited material value — might offer a rare opportunity for cooperation, if nations are willing to engage in good faith.

News Contact

Siobhan Rodriguez

Senior Media Relations Representative

Institute Communications

May 13, 2025

Gaurav Doshi, an assistant professor in applied economics and a faculty affiliate of the Georgia Tech Energy Policy and Innovation Center, researches, among other topics, ways to make the benefits of large electrification projects more transparent.

It’s a chicken-and-egg situation. Should renewable energy projects launch first, in the hope that transmission lines to carry the generated power to distant places will follow? Or should the transmission lines be built first to attract investment in renewable energy projects? It’s a question that has intrigued Gaurav Doshi, assistant professor at the School of Economics at Georgia Tech, for a while now. His award-winning paper on this research explores the downstream effects of building power lines.

Doshi earned bachelor’s and master’s degrees in applied economics from the Indian Institute of Technology at Kanpur, then a doctorate in the same field from the University of Wisconsin at Madison in 2023. He explored questions about environmental economics as part of his doctoral work.

“Once I started researching energy markets in the U.S., I kept getting deeper and coming up with new questions,” Doshi says. Among the many questions his work explores: What are the effects of infrastructure policies, and how can they help decarbonization efforts? What unintended consequences do policymakers need to think about?

One of his current research projects has roots in his doctoral work. It explores how to quantify the hard-to-measure benefits of environmental infrastructure projects. Case in point: Decarbonization will likely lead to more electrification from renewable energy resources, which will need power lines to transport this energy to places of demand. The costs of such infrastructure are fairly transparent as part of government project funding, but the benefits are less so, Doshi points out. To develop effective policy, both the costs and benefits need clear visibility. “Otherwise the question arises ‘why should we spend billions of dollars of taxpayer money if we don’t know the benefits?’”

News Contact

Written by: Poornima Apte

Contact: Priya Devarajan || SEI Communications Program Manager

Apr. 30, 2025

The Energy Policy and Innovation Center (EPIcenter) at Georgia Tech has announced the selection of six students for its inaugural Summer Research Program. The doctoral candidates, pursuing degrees in electrical and computer engineering, economics, computer science, and public policy, will be on campus working full-time on their dissertation research throughout the summer semester and present their findings in a final showcase.

EPIcenter will provide a full stipend and tuition for the 2025 summer semester to support the students.

“I look forward to hosting a fantastic cohort of early-career energy scholars this summer,” said Laura Taylor, EPIcenter’s director. “The summer research program will not only help the students advance their research while engaging in interdisciplinary dialogue but also offers professional development opportunities to position them for a strong start to their careers.”

The students will work with EPIcenter staff and be provided with on-campus workshops on written and oral communications. Biweekly meetings over the summer will offer the students an opportunity to share their work, progress, and ideas with each other and the EPIcenter faculty affiliates. In addition, the students will have the opportunity to engage with programs and distinguished guests of the center.

For students interested in presenting their research at a conference, EPIcenter will also provide travel grants of up to $600, provided that the paper or presentation is posted on the EPIcenter website.

"I applied to the Summer Research Program because its structure and community aligned perfectly with my summer plan on dissertation work in energy policy,” said Yifan Liu. “I aim to finalize key dissertation chapters and engage closely with peers and mentors to prepare me for the job market."

The program offers students an opportunity to promote their work through the EPIcenter communication channels including the website, news feeds, blogs, and the SEI newsletter.

“I am very excited to spend my summer at EPIcenter exploring how battery storage entry affects competition in the electricity market,” said Maghfira “Afi” Ramadhani, one of the student affiliates selected for the summer research program. “Specifically, I look at how the rollout of battery storage in the Texas electricity market impacts renewable curtailment, fossil-fuel generator markup, and generator entry and exit.”

With a variety of backgrounds and perspectives on energy, each of the students in the summer program brings something unique to EPIcenter.

La’Darius Thomas: “My project explores the potential of peer-to-peer energy trading systems in promoting decentralized, sustainable energy solutions. I aim to contribute to the development of energy models that empower individuals and communities to directly participate in electricity markets.”

Niraj Palsule: “I intend to gain interdisciplinary insights interfacing energy transition technology and policy developments by participating in the EPIcenter Summer Research Program.”

John Kim: “I believe the EPIcenter Summer Research Program will deepen my investigation of how environmental hazards disproportionately affect vulnerable communities through research on power outage impacts and lead contamination. This summer, I hope to refine my analysis and complete research on the socioeconomic dimensions of power reliability and environmental resilience.”

Mehmet “Akif” Aglar: "I applied to the EPIcenter Summer Research Program because it offers the chance to work alongside and learn from a community of highly qualified researchers across various fields. I believe the opportunity to present my work, receive feedback, and benefit from the structure the program provides will be invaluable for advancing my research."

About EPIcenter

The mission of the Energy Policy and Innovation Center is to conduct rigorous studies and deliver high-impact insights that address critical regional, national, and global energy issues from a Southeastern U.S. perspective. EPIcenter is pioneering a holistic approach that calls upon multidisciplinary expertise to engage the public on the issues that emerge as the energy transformation unfolds. The center operates within Georgia Tech’s Strategic Energy Institute.

News Contact

Priya Devarajan || SEI Communications Program Manager

Apr. 15, 2025

Daniel Molzahn will readily admit he’s a Cheesehead.

Born and brought up in Wisconsin, the associate professor at the School of Electrical and Computer Engineering attended the University of Wisconsin, Madison, for undergraduate and graduate studies. It was also at Madison that he decided to go into the family business: power engineering.

Molzahn’s grandfather was a Navy electrician in World War II and later completed a bachelor’s degree in electrical engineering. He eventually became plant director at a large coal plant in Green Bay. Molzahn’s dad was also a power engineer and worked at a utility company, focusing on nuclear power.

It was not uncommon for family vacations to include a visit to a coal mine or a nuclear power plant. All that exposure to power engineering eventually seeped into Molzahn’s bones. “I remember seeing all the infrastructure that goes into producing energy and it was endlessly fascinating for me,” he says.

That endless fascination has worked its way into Molzahn’s research today—at the intersection of computation and power systems.

News Contact

Written by: Poornima Apte

News Contact: Priya Devarajan || SEI Communications Program Manager

Mar. 03, 2025

Students in Matthew Oliver’s classes on the economics of the environment and international energy markets likely don’t have a clue about his unusual journey to the lectern: “I was bent on being a rock and roll musician from the time I was 16, and so I ended up dropping out of the University of Memphis after just three semesters,” says Oliver, an associate professor in the School of Economics at the Georgia Institute of Technology. “I was on tour for eight years — and I was starting to feel burned out.”

At a crossroads, Oliver decided to end his musical career — a choice he credits with launching him into academia. “I was 28 and wondering what to do with my life, so I reenrolled in college and discovered economics.” With a longtime love of the environment and growing concern for the climate, says Oliver, “I grew fascinated with solar power and other renewables and the new markets emerging around them.”

Today, his work in energy and environmental economics has implications for policies shaping the energy transition, from subsidies for rooftop solar to the expansion of battery storage.

“The current frontier of energy economics is electricity and renewables, and these are areas I am passionate about,” he says.

PVs and amped-up electric use

One of Oliver’s core research thrusts is the solar rebound effect (SRE). This phenomenon involves a quirk of human behavior: When people install solar photovoltaic (PV) panels on the roofs of their homes, they often consume more electricity. “The introduction of solar energy does not perfectly displace grid-supplied energy, but instead reduces demand for grid-supplied energy on a less than one-for-one basis, because the household increases its total electricity consumption,” says Oliver. The bottom line: Solar PV systems may not lead to as much carbon emission reduction as anticipated.
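Oliver’s description implies a simple accounting version of the rebound: the share of solar output that does not show up as lower grid purchases. The sketch below uses hypothetical household numbers and an illustrative function name. Empirical studies estimate the effect statistically, controlling for weather and other factors, rather than from a single before-and-after comparison.

```python
def solar_rebound(solar_kwh, grid_before_kwh, grid_after_kwh):
    """Fraction of solar output offset by higher total consumption.

    0 means grid purchases fell one-for-one with solar output;
    0.2 means 20% of the solar output was absorbed by extra consumption.
    """
    grid_reduction = grid_before_kwh - grid_after_kwh
    return 1.0 - grid_reduction / solar_kwh


# Hypothetical month: 400 kWh of solar, grid purchases fall from 900 to 580 kWh.
rebound = solar_rebound(400.0, 900.0, 580.0)
total_after = 580.0 + 400.0  # grid plus self-generated consumption
print(f"rebound = {rebound:.0%}, total use rose from 900 to {total_after:.0f} kWh")
```

In this example, grid purchases fall by only 320 kWh rather than the full 400 kWh. The missing 80 kWh is the increase in the household’s total consumption.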

News Contact

Written by: Leda Zimmerman

News Contact: Priya Devarajan, SEI Communications Program Manager

Jan. 30, 2025

The "solar rebound effect" is a phenomenon where households with residential solar photovoltaic (PV) systems end up consuming more electricity in response to greater solar energy generation. This outcome arises because the cost savings from generating their own electricity lead to increased usage. A recent study by Matthew E. Oliver from the Georgia Institute of Technology and his co-authors, Juan Moreno-Cruz from the University of Waterloo and Kenneth Gillingham from Yale University, delves into this effect, providing crucial insights for policymakers and researchers.

The study, titled "Microeconomics of the Solar Rebound under Net Metering," explores how different net metering policies influence the solar rebound effect. Net metering allows households to sell excess electricity generated by their solar panels back to the grid, often at the retail rate. This policy makes solar PV systems more financially attractive but also impacts household behavior.

The authors developed a theoretical framework to understand the solar rebound. They found that under classic net metering, the rebound is primarily an income effect. Households feel wealthier due to the savings on their electricity bills and thus consume more electricity. However, under net billing, where excess electricity is compensated at a lower rate, a substitution effect also comes into play. This means households might change their consumption patterns based on the relative costs of electricity from the grid versus their solar panels.
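The difference between the two regimes shows up in the price of one more kilowatt-hour used while the panels are exporting. The stylized sketch below computes bills under each regime, using hypothetical rates and assuming hourly netting for net billing. It also computes the marginal cost of that extra kilowatt-hour. Under classic net metering, the marginal cost equals the retail rate. Under net billing, it falls to the lower export rate, which is the kind of relative-price change behind the substitution effect. Real tariffs differ in netting intervals, fixed charges, and credit carryover. This sketch is not the authors’ model, and the function names are illustrative.

```python
def bill_net_metering(load_kwh, solar_kwh, retail_rate):
    """Classic net metering: all energy netted at the retail rate over the period.
    A negative result is a bill credit."""
    return retail_rate * (sum(load_kwh) - sum(solar_kwh))


def bill_net_billing(load_kwh, solar_kwh, retail_rate, export_rate):
    """Net billing (hourly netting assumed): imports pay retail, exports earn export_rate."""
    if len(load_kwh) != len(solar_kwh):
        raise ValueError("load and solar series must have the same length")
    total = 0.0
    for load, solar in zip(load_kwh, solar_kwh):
        net = load - solar
        total += retail_rate * net if net > 0 else export_rate * net
    return total


def marginal_cost(bill_fn, load_kwh, solar_kwh, hour, **rates):
    """Change in the bill from consuming one extra kWh in the given hour."""
    bumped = list(load_kwh)
    bumped[hour] += 1.0
    return bill_fn(bumped, solar_kwh, **rates) - bill_fn(load_kwh, solar_kwh, **rates)


# Hypothetical four-hour window: the panels export during the two middle hours.
load = [1.0, 1.0, 1.0, 1.0]
solar = [0.0, 3.0, 3.0, 0.0]
nm = marginal_cost(bill_net_metering, load, solar, hour=1, retail_rate=0.15)
nb = marginal_cost(bill_net_billing, load, solar, hour=1, retail_rate=0.15, export_rate=0.05)
print(f"extra sunny-hour kWh costs ${nm:.2f} under net metering, ${nb:.2f} under net billing")
```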

The study also incorporates behavioral economics concepts like moral licensing and warm glow effects. Moral licensing occurs when people justify increased consumption because they feel they are already doing something good, like generating green energy. Warm glow refers to the positive feelings from contributing to environmental sustainability, which can either increase or decrease consumption depending on the household's values.

One of the key takeaways from the study is the importance of the regulatory environment. Policymakers need to design net metering policies carefully, balancing the goal of promoting solar adoption against the possibility that rebound effects may offset desired outcomes such as grid resilience and reduced greenhouse gas emissions. For instance, switching from net metering to net billing might reduce the rebound effect, leading to better environmental outcomes.

The welfare analysis conducted by the authors shows that the solar rebound's impact on social welfare depends on various factors, including the cleanliness of the electricity grid and the external costs of electricity production. In cleaner grids, the rebound might be less detrimental, while in grids reliant on fossil fuels, it could negate some of the environmental benefits of solar adoption.

This research underscores the complexity of energy policy and the need for nuanced approaches that consider both economic and behavioral factors. By understanding the solar rebound effect, stakeholders can make more informed decisions to promote sustainable energy use.

For more detailed insights, you can explore the full study by Matthew E. Oliver and his co-authors. Their work provides a robust foundation for future empirical research and policy development in the field of renewable energy.

This article was written with the assistance of Microsoft Copilot (Jan. 27, 2025) and edited by Georgia Tech EPIcenter's Gilbert X. Gonzalez and Matthew E. Oliver.

News Contact

News Contact: Priya Devarajan || SEI Communications Program Manager

Written by: Gilbert X. Gonzalez, EPIcenter, Matthew Oliver, EPIcenter Faculty Affiliate

Jun. 03, 2024

Georgia Tech's new GROWER VIP is creating the country's most comprehensive real-time power outage tracker for research use. The database will help researchers explore questions about the causes and effects of power outages and how policy interventions can help strengthen grid resilience.

Why now?

This understanding is urgent in the wake of increasingly extreme climate change-driven weather events and natural disasters, as well as the federal government’s investment of more than $15 billion in grid modernization under the Inflation Reduction Act and Bipartisan Infrastructure Law.

The database will help researchers learn more about the causes of outages and their societal impacts, such as on housing prices, business activity, public health, and crime. It will also help them obtain greater insight into which communities experience the most frequent and longest outages and what can be done to help.

How does it work?

- Utility companies report real-time power outages, but the data is fractured across different service territories and states.

- Users can’t download data directly, making the information difficult to use for research and evaluation.

- Because of this, it's hard for researchers and agencies to understand the extent and scope of problems with the energy grid.

To address these challenges, the GROWER team developed algorithms and web scrapers. They use Amazon Web Services to crawl the utility websites every 15 minutes and collect the power outage data for many states in one place.
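The article does not describe GROWER’s code, so the following is only a hypothetical sketch of a harmonization step that such a pipeline would likely need once the pages are fetched. It maps each utility’s differently labeled outage records onto one schema. It then stamps each record with its 15-minute polling slot, so that snapshots from different service territories can be summed. The field names, schema, and example records are invented for illustration. Fetching, scheduling, and the Amazon Web Services deployment are omitted.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

POLL_MINUTES = 15  # polling interval described in the article


@dataclass(frozen=True)
class OutageSnapshot:
    utility: str
    county: str
    customers_out: int
    slot: datetime  # start of the 15-minute polling window


def polling_slot(ts: datetime) -> datetime:
    """Round a scrape timestamp down to the start of its 15-minute slot."""
    return ts.replace(minute=ts.minute - ts.minute % POLL_MINUTES, second=0, microsecond=0)


def normalize(utility: str, raw: dict, scraped_at: datetime) -> OutageSnapshot:
    """Map one raw record (hypothetical field names) onto a common schema."""
    county = raw.get("county") or raw.get("County") or raw.get("area_name", "unknown")
    out = raw.get("customers_out", raw.get("CustomersAffected", 0))
    return OutageSnapshot(utility, str(county).strip().title(), int(out), polling_slot(scraped_at))


def customers_out_by_county(snapshots):
    """Sum customers out across utilities for each (slot, county) pair."""
    totals: dict = {}
    for s in snapshots:
        key = (s.slot, s.county)
        totals[key] = totals.get(key, 0) + s.customers_out
    return totals


ts = datetime(2024, 6, 3, 14, 37, 12, tzinfo=timezone.utc)
records = [
    normalize("Utility A", {"county": "sample county ", "customers_out": "120"}, ts),
    normalize("Utility B", {"County": "Sample County", "CustomersAffected": 30}, ts),
]
print(customers_out_by_county(records))
```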

Who’s Involved?

The Grid Resilience, Outage, Weather, and Emergency Response (GROWER) Lab is a Vertically Integrated Project launched in 2024 by faculty and students in the Ivan Allen College of Liberal Arts and the College of Engineering.

Brian Y. An, an assistant professor in the School of Public Policy, and Constance Crozier, an assistant professor in the School of Industrial and Systems Engineering, lead the project alongside John Kim, the lab manager and a public policy Ph.D. student. The group includes 15 students in computer science, city and regional planning, business, public policy, and industrial and systems engineering programs.

What’s Next?

The GROWER team has already begun applying findings from the dataset to research questions.

They are writing a paper based on data showing that racial and ethnic minorities experience more frequent and longer power outages than other groups and have also begun examining the effects of power outages on crime and medical emergencies.

This summer, they will partner with the Oak Ridge National Laboratory to provide technical assistance to the Department of Energy Grid Deployment Office, which is the lead federal agency administering grid modernization grants.

“It is incredibly rewarding to connect with research groups in and out of Georgia Tech who share this vision with us,” An said. “We’re excited to conduct robust research that will inform real-world policymaking across the country.”

News Contact

Di Minardi

Ivan Allen College of Liberal Arts

May 03, 2024

On April 12, the Energy Policy and Innovation Center (EPIcenter) hosted its second round of the “Friday Lightning Talk Series” at the Scholars Event Network space in the Price Gilbert Library.

Eight multidisciplinary participants from Georgia Tech, including postdoctoral researchers, graduate students, research faculty, and research associates from public policy, economics, electrical and computer engineering, industrial and systems engineering, and EPIcenter, each presented an overview of an energy-related research project during the session.

Laura Taylor, chair of the School of Economics and interim director of EPIcenter, introduced the organization’s new faculty affiliate program, through which affiliates, their students, and postdocs present and share research ideas and receive feedback from the audience.

Topics covered during the session included understanding the social costs of natural gas deregulation, managing EV charging during emergencies, exploring whether daylight saving time saves energy, the green energy workforce, the effects of community solar on household energy use, the Atlanta Energyshed project, clean hydrogen production in Georgia, and household responses to grid emergencies.

The interactive session was well attended, with more than 25 attendees asking thought-provoking questions and suggesting future areas to explore.

The first round was held on March 1 and was such a success that this second round had a full slate of presenters and a full house of audience members. The agendas for both lightning round talks are available below, along with links to presentation slides.

A unit of the Strategic Energy Institute of Georgia Tech, EPIcenter’s mission is to conduct rigorous research and deliver high-impact insights that address the energy needs of the southeastern U.S., while keeping a national and global perspective. EPIcenter calls upon broad, multidisciplinary expertise to engage the public and create solutions for critical emerging issues as our nation’s energy transformation unfolds.

News Contact

Priya Devarajan || SEI Communications Program Manager
