Oct. 05, 2023

The start of the fall semester can be busy for most Georgia Tech students, but this is especially true for Rafael Orozco. The Ph.D. student in Computational Science and Engineering (CSE) is part of a research group that presented at a major conference in August and is now preparing to host a research meeting in November.

We used the lull between events, research, and classes to meet with Orozco and learn more about his background and interests in this Meet CSE profile.

Student: Rafael Orozco

Research Interests: Medical Imaging; Seismic Imaging; Generative Models; Inverse Problems; Bayesian Inference; Uncertainty Quantification

Hometown: Sonora, Mexico

Tell us briefly about your educational background and how you came to Georgia Tech.

I studied in Mexico through high school. Then, I did my first two years of undergrad at the University of Arizona and transferred to Bucknell University. I was attracted to Georgia Tech’s CSE program because it is a unique combination of domain science and computer science. It feels like I am both a programmer and a scientist.

How did you first become interested in computer science and machine learning?

In high school, I saw a video demonstration of a genetic algorithm on the internet and became interested in the technology. My high school in Mexico did not have a computer science class, but a teacher mentored me and helped me compete at the Mexican Informatics Olympiad. When I started at Arizona, I researched the behavior of clouds from a Bayesian perspective. Since then, my research interests have always involved using Bayesian techniques to infer unknowns.

You mentioned your background a few times. Since it is National Hispanic Heritage Month, what does this observance mean to you?

I am quite proud to be a part of this group. In Mexico and the U.S., fellow Hispanics have supported me and my pursuits, so I know firsthand of their kindness and resourcefulness. I think that Hispanic people welcome others, celebrating the joy our culture brings, and they appreciate that our country uses the opportunity to reflect on Hispanic history.

You study in Professor Felix Herrmann’s Seismic Laboratory for Imaging and Modeling (SLIM) group. In your own words, what does this research group do?

We develop techniques and software for imaging Earth’s subsurface structures. These range from highly performant partial differential equation solvers to randomized numerical algebra to generative artificial intelligence (AI) models.

One of the driving goals of each software package we develop is that it needs to be scalable to real-world applications. This entails imaging seismic areas that can be cubic kilometers in volume, typically represented by more than 100,000,000 simulation grid cells. In my medical applications, it entails producing high-resolution images of human brains that can be resolved to less than half a millimeter.

The International Meeting for Applied Geoscience and Energy (IMAGE) is a recent conference where SLIM gave nine presentations. What research did you present here?

The challenge of applying machine learning to seismic imaging is that there are no examples of what the earth looks like. While making high quality reference images of human tissues for supervised machine learning is possible, no one can “cut open” the earth to understand exactly what it looks like.

To address this challenge, I presented an algorithm that combines generative AI with an unsupervised training objective. We essentially trick the generative model into outputting full earth models by making it blind to which part of the Earth we are asking for. This is like when you take an exam where only a few questions will be graded, but you don’t know which ones, so you answer all the questions just in case.

While seismic imaging is the basis of SLIM research, there are other applications for the group’s work. Can you discuss more about this?

The imaging techniques that the energy industry has been using for decades toward imaging Earth’s subsurface can be applied almost seamlessly to create medical images of human sub tissue.

Lately, we have been tackling the particularly difficult modality of using high frequency ultrasound to image through the human skull. In our recent paper, we are exploring a powerful combination between machine learning and physics-based methods that allows us to speed up imaging while adding uncertainty quantification.

We presented the work at this year’s MIDL conference (Medical Imaging with Deep Learning) in July. The medical community was excited with our preliminary results and gave me valuable feedback on how we can help bring this technique closer to clinical viability.

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Sep. 06, 2023

“A stitch in time saves nine,” goes the old saying. For a company in Georgia, that adage became very real when damage to a key piece of machinery threatened its operation. The group helping with the stitch in time was the Georgia Manufacturing Extension Partnership (GaMEP), a program of Georgia Tech's Enterprise Innovation Institute that — for more than 60 years — has been helping small- to medium-sized manufacturers in Georgia stay competitive and grow, boosting economic development across the state.

Silon US, a Peachtree City manufacturer that designs and produces engineered compounds used to create a wide range of products (from automotive applications to building materials, such as PEX piping and wire and cable), was experiencing problems with its extrusion line during a time of increasing customer demand. Problems with the drive mechanism on that extrusion line, a piece of equipment critical to the company’s ability to produce, threatened to shut the company down. With replacement parts several weeks away, was it safe to continue operating? At what throughput rates? How much collateral damage might be incurred if they continued to operate?

That’s when Silon managers turned to GaMEP for help.

After working through ideas with GaMEP’s manufacturing experts, the team installed wireless condition monitoring sensors that provide continuous, real-time insights on their manufacturing assets’ health. With the sensors, Silon was able to find a sweet spot that not only allowed them to continue operating but also kept them from overexerting the equipment, preventing further damage.

The solution to that problem has now become a routine part of Silon’s process, as company technicians continue to use this sensor technology for early detection of any deviations or anomalies in the machinery’s health, allowing the company’s maintenance team to proactively respond by adjusting scheduled maintenance to avoid costly downtime.

GaMEP’s Sean Madhavaraman says, “Silon is more productive than ever and on track for growth. The strong results in this challenge are a great example of the decades-long focus of GaMEP to educate and train managers and employees in best practices, to develop and implement the latest technology, and to work together with businesses to find solutions.”

Daniel Raubenheimer and Matt Gammon, Silon’s general managers, also lauded GaMEP, saying, “GaMEP’s extensive experience within the manufacturing realm has been a great benefit to our company. The wireless condition monitoring sensors allow us to predict future breakdowns and mitigate a potential catastrophe — allowing us to operate in a safe manner, while saving money, time, and effort.”

News Contact

Blair Meeks

Institute Communications

Jul. 26, 2023

This feature supports Georgia Tech's presence at the International Conference on Machine Learning, July 23-29 in Honolulu.

Honolulu Highlights | ICML 2023

Students and faculty have been focused and energized in their efforts this week engaging with the international machine learning community at ICML. See some of those efforts, hear from students themselves in our video series, and read about their latest contributions in #AI.

Georgia Tech’s experts and larger research community are invested in a future where artificial intelligence (AI) solutions can benefit individuals and communities across our planet. Meet the machine learning maestros among Georgia Tech’s faculty at the International Conference on Machine Learning — July 23-29, 2023, in Honolulu — and learn about their work. The faculty in the main program are working with partners across many domains and industries to help invent powerful new ways for technology to benefit all our futures.

One of the experts in Honolulu is Wenjing Liao, an assistant professor in the School of Mathematics. In addition to machine learning, Liao's research interests include imaging, signal processing, and high dimensional data analysis.

Learn more about the Georgia Tech contingent at the ICML here. Read more about machine learning research at Georgia Tech here.

News Contact

Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209

Jun. 28, 2023

Alex Robel is improving how computer models of melting ice sheets incorporate data from field expeditions and satellites by creating a new open-access software package — complete with state-of-the-art tools and paired with ice sheet models that anyone can use, even on a laptop or home computer.

Improving these models is critical: while melting ice sheets and glaciers are top contributors to sea level rise, there are still large uncertainties in sea level projections at 2100 and beyond.

“Part of the problem is that the way that many models have been coded in the past has not been conducive to using these kinds of tools,” Robel, an assistant professor in the School of Earth and Atmospheric Sciences, explains. “It's just very labor-intensive to set up these data assimilation tools — it usually involves someone refactoring the code over several years.”

“Our goal is to provide a tool that anyone in the field can use very easily without a lot of labor at the front end,” Robel says. “This project is really focused around developing the computational tools to make it easier for people who use ice sheet models to incorporate or inform them with the widest possible range of measurements from the ground, aircraft and satellites.”

Now, a $780,000 NSF CAREER grant will help him to do so.

The National Science Foundation Faculty Early Career Development Award is a five-year funding mechanism designed to help promising researchers establish a personal foundation for a lifetime of leadership in their field. Known as CAREER awards, the grants are NSF’s most prestigious funding for untenured assistant professors.

“Ultimately,” Robel says, “this project will empower more people in the community to use these models and to use these models together with the observations that they're taking.”

Ice sheets remember

“Largely, what models do right now is they look at one point in time, and they try their best — at that one point in time — to get the model to match some types of observations as closely as possible,” Robel explains. “From there, they let the computer model simulate what it thinks that ice sheet will do in the future.”

In doing so, the models often assume that the ice sheet starts in a state of balance, and that it is neither gaining nor losing ice at the start of the simulation. The problem with this approach is that ice sheets dynamically change, responding to past events, even ones that happened centuries ago. “We know from models and from decades of theory that the natural response time scale of thick ice sheets is hundreds to thousands of years,” Robel adds.

By informing models with historical records, observations, and measurements, Robel hopes to improve their accuracy. “We have observations being made by satellites, aircraft, and field expeditions,” says Robel. “We also have historical accounts, and can go even further back in time by looking at geological observations or ice cores. These can tell us about the long history of ice sheets and how they've changed over hundreds or thousands of years.”

Robel’s team plans to use a set of techniques called data assimilation to adjust, or ‘nudge’, models. “These data assimilation techniques have been around for a really long time,” Robel explains. “For example, they’re critical to weather forecasting: every weather forecast that you see on your phone was ultimately the product of a weather model that used data assimilation to take many observations and apply them to a model simulation.”
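To make the idea of “nudging” a model concrete, here is a minimal sketch of the simplest form of data assimilation: a scalar, Kalman-style update that blends one model forecast with one observation, weighting each by its uncertainty. This is a textbook illustration, not the method used in Robel’s software; the function name and the ice-thickness numbers are hypothetical.

```python
def assimilate(forecast, forecast_var, observation, obs_var):
    """Blend a model forecast with one observation (scalar Kalman-style update)."""
    # The gain is near 1 when the model is uncertain relative to the
    # observation, and near 0 when the observation is the noisier of the two.
    gain = forecast_var / (forecast_var + obs_var)
    # Nudge the forecast toward the observation by a fraction `gain`.
    analysis = forecast + gain * (observation - forecast)
    # The blended estimate is more certain than the forecast alone.
    analysis_var = (1.0 - gain) * forecast_var
    return analysis, analysis_var


# Hypothetical numbers: the model predicts 1000 m of ice (variance 400 m^2),
# while a survey measures 980 m (variance 100 m^2).
analysis, analysis_var = assimilate(1000.0, 400.0, 980.0, 100.0)
print(analysis, analysis_var)
```

Because the observation is assumed to be more precise than the forecast in this example, the blended estimate lands closer to the measured value than to the model’s original guess.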

“The next part of the project is going to be incorporating this data assimilation capability into a cloud-based computational ice sheet model,” Robel says. “We are planning to build an open source software package in Python that can use this sort of data assimilation method with any kind of ice sheet model.”

Robel hopes it will expand accessibility. “Currently, it's very labor-intensive to set up these data assimilation tools, and while groups have done it, it usually involves someone re-coding and refactoring the code over several years.”

Building software for accessibility

Robel’s team will then apply their software package to a widely used model, which now has an online, browser-based version. “The reason why that is particularly useful is because the place where this model is running is also one of the largest community repositories for data in our field,” Robel says.

Called Ghub, this relatively new repository is designed to be a community-wide place for sharing data on glaciers and ice sheets. “Since this is also a place where the model is living, by adding this capability to this cloud-based model, we'll be able to directly use the data that's already living in the same place that the model is,” Robel explains.

Users won’t need to download data, or have a high-speed computer to access and use the data or model. Researchers collecting data will be able to upload their data to the repository, and immediately see the impact of their observations on future ice sheet melt simulations. Field researchers could use the model to optimize their long-term research plans by seeing where collecting new data might be most critical for refining predictions.

“We really think that it is critical for everyone who's doing modeling of ice sheets to be doing this transient data assimilation to make sure that our simulations across the field are all doing the best possible job to reproduce and match observations,” Robel says. While in the past, the time and labor involved in setting up the tools has been a barrier, “developing this particular tool will allow us to bring transient data assimilation to essentially the whole field.”

Bringing Real Data to Georgia’s K-12 Classrooms

The broad applications and user base extend beyond the scientific community, and Robel is already developing a K-12 curriculum on sea level rise, in partnership with Georgia Tech CEISMC Researcher Jayma Koval. “The students analyze data from real tide gauges and use them to learn about statistics, while also learning about sea level rise using real data,” he explains.

Because the curriculum matches with state standards, teachers can download the curriculum, which is available for free online in partnership with the Southeast Coastal Ocean Observing Regional Association (SECOORA), and incorporate it into their preexisting lesson plans. “We worked with SECOORA to pilot a middle school curriculum in Atlanta and Savannah, and one of the things that we saw was that there are a lot of teachers outside of middle school who are requesting and downloading the curriculum because they want to teach their students about sea level rise, in particular in coastal areas,” Robel adds.

In Georgia, there is a data science class that exists in many high schools that is part of the computer science standards for the state. “Now, we are partnering with a high school teacher to develop a second standards-aligned curriculum that is meant to be taught ideally in a data science class, computer class or statistics class,” Robel says. “It can be taught as a module within that class and it will be the more advanced version of the middle school sea level curriculum.”

The curriculum will guide students through using data analysis tools and coding in order to analyze real sea level data sets, while learning the science behind what causes variations in sea level, what causes sea level rise, and how to predict sea level changes.

“That gets students to think about computational modeling and how computational modeling is an important part of their lives, whether it's to get a weather forecast or play a computer game,” Robel adds. “Our goal is to get students to imagine how all these things are combined, while thinking about the way that we project future sea level rise.”

Jun. 16, 2023

The discovery of nucleic acids is a recent event in the history of science, and there is still much to learn from the enigma that is the genetic code.

Advances in computing techniques, though, have ushered in a new age of understanding the macromolecules that form life as we know it. Now, one Georgia Tech research group is receiving well-deserved accolades for its applications of data science and machine learning to single-cell omics research.

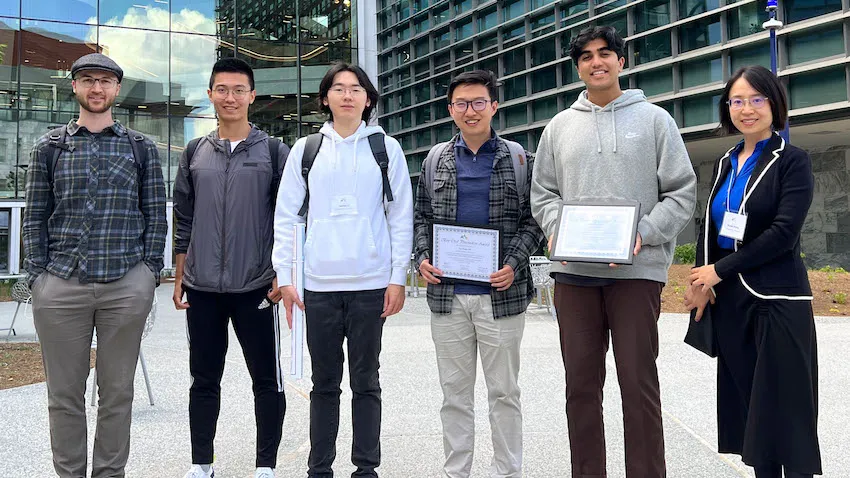

Students studying under Xiuwei Zhang, an assistant professor in the School of Computational Science and Engineering (CSE), received awards in April at the Atlanta Workshop on Single-cell Omics (AWSOM 2023).

School of CSE Ph.D. student Ziqi Zhang received the best oral presentation award, while Mihir Bafna, an undergraduate student majoring in computer science, took the best poster prize.

Along with providing computational tools for biological researchers, the group’s papers presented at AWSOM 2023 could benefit the broader population, as the research could lead to improved disease detection and prevention. The papers can also provide a better understanding of the causes and treatments of cancer and a new ability to accurately simulate cellular processes.

“I am extremely proud of the entire research group and very thankful of their work and our teamwork within our lab,” said Xiuwei Zhang. “These awards are encouraging because it means we are on the right track of developing something that will contribute both to the biology community and the computational community.”

Ziqi Zhang presented the group’s findings on its deep learning framework, scDisInFact, which can carry out multiple key single-cell RNA-sequencing (scRNA-seq) tasks all at once and outperform current models that focus on the same tasks individually.

The group successfully tested scDisInFact on simulated and real Covid-19 datasets, demonstrating applicability in future studies of other diseases.

Bafna’s poster introduced CLARIFY, a tool that connects biochemical signals occurring within a cell and intercellular communication molecules. Previously, the inter- and intra-cell signaling were often studied separately due to the complexity of each problem.

Oncology is one field that stands to benefit from CLARIFY. CLARIFY helps to understand the interactions between tumor cells and immune cells in cancer microenvironments, which is crucial for enabling success of cancer immunotherapy.

At AWSOM 2023, the group presented a third paper on scMultiSim. This simulator generates data found in multi-modal single-cell experiments through modeling various biological factors underlying the generated data. It enables quantitative evaluations of a wide range of computational methods in single-cell genomics. That has been a challenging problem due to lack of ground truth information in biology, Xiuwei Zhang said.

“We want to answer certain basic questions in biology, like how did we get these different cell types like skin cells, bone cells, and blood cells,” she said. “If we understand how things work in normal and healthy cells, and compare that to the data of diseased cells, then we can find the key differences of those two and locate the genes, proteins, and other molecules that cause problems.”

Xiuwei Zhang’s group specializes in applying machine learning and optimization to the analysis of single-cell omics data and scRNA-seq methods. Its main area of interest is studying the mechanisms of cellular differentiation, the process by which young, immature cells mature and take on functional characteristics.

A growing and effective approach to research in molecular biology, scRNA-seq gives insight into the existence and behavior of different types of cells. This helps researchers better understand genetic disorders, detect mechanisms that cause tumors and cancer, and develop new treatments, cures, and drugs.

If microenvironments filled with various macromolecules and genetic material are considered datasets, the need for researchers like Xiuwei Zhang and her group is obvious. These massive, complex datasets present both challenges and opportunities for the group experienced in computational and biological research.

Collaborating authors include School of CSE Ph.D. students Hechen Li and Michael Squires, School of Electrical and Computer Engineering Ph.D. student Xinye Zhao, Wallace H. Coulter Department of Biomedical Engineering Associate Professor Peng Qiu, and Xi Chen, an assistant professor at Southern University of Science and Technology in Shenzhen, China.

The group’s presentations at AWSOM 2023 exhibited how their work makes progress in biomedical research, as well as advancing scientific computing methods in data science, machine learning, and simulation.

scDisInFact is an optimization tool that can perform batch effect removal, condition-associated key gene detection, and perturbation prediction, which is made possible by considering major variation factors in the data. Without considering all these factors, current models can only do these tasks individually, but scDisInFact can do each task better and all at the same time.

CLARIFY delves into how cells employ genetic material to communicate internally, through gene regulatory networks (GRNs), and externally, through cell-cell interactions (CCIs). Many computational methods can infer GRNs, and inference methods have been proposed for CCIs, but until CLARIFY, no method existed to infer GRNs and CCIs in the same model.

scMultiSim simulations perform closer to real-world conditions than current simulators that model only one or two biological factors. This helps researchers more realistically test their computational methods, which can guide directions for future method development.

For computer scientists, biologists, and non-academics alike, the advantage of interdisciplinary and collaborative research, like that of Xiuwei Zhang’s group, is its wide-reaching impact that advances technology to improve the human condition.

“We’re exploring the possibilities that can be realized by advanced computational methods combined with cutting edge biotechnology,” said Xiuwei Zhang. “Since biotechnology keeps evolving very fast and we want to help push this even further by developing computational methods, together we will propel science forward.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Apr. 19, 2023

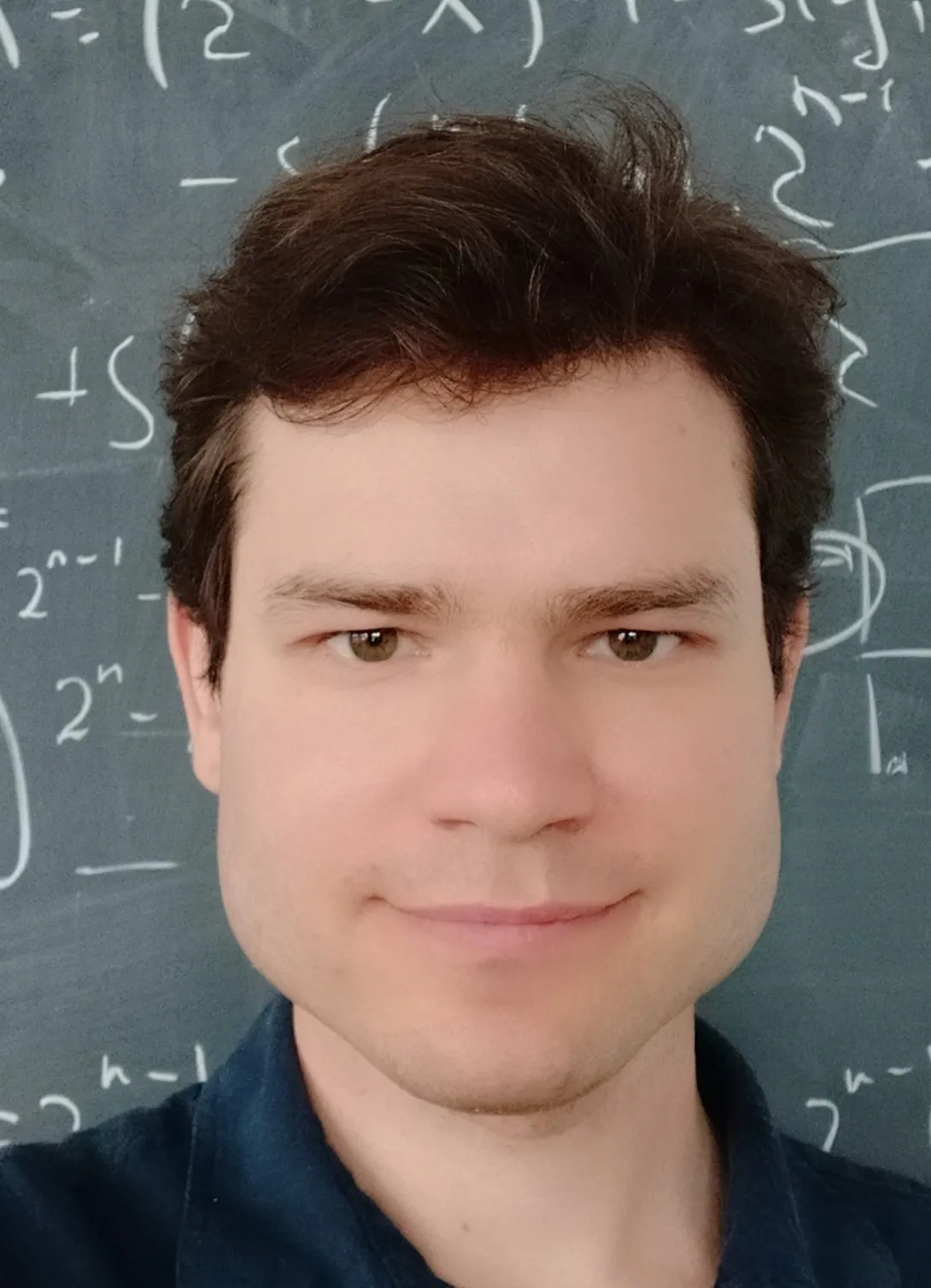

Anton Bernshteyn is forging connections and creating a language to help computer scientists and mathematicians collaborate on new problems — in particular, bridging the gap between solvable, finite problems and more challenging, infinite problems. Now, an NSF CAREER grant will help him achieve that goal.

The National Science Foundation Faculty Early Career Development Award is a five-year grant designed to help promising researchers establish a foundation for a lifetime of leadership in their field. Known as CAREER awards, the grants are NSF’s most prestigious funding for untenured assistant professors.

Bernshteyn, an assistant professor in the School of Mathematics, will focus on “Developing a unified theory of descriptive combinatorics and local algorithms” — connecting concepts and work being done in two previously separate mathematical and computer science fields. “Surprisingly,” Bernshteyn says, “it turns out that these two areas are closely related, and that ideas and results from one can often be applied in the other.”

“This relationship is going to benefit both areas tremendously,” Bernshteyn says. “It significantly increases the number of tools we can use.”

By pioneering this connection, Bernshteyn hopes to connect techniques that mathematicians use to study infinite structures (like dynamic, continuously evolving structures found in nature), with the algorithms computer scientists use to model large – but still limited – interconnected networks and systems (like a network of computers or cell phones).

“The final goal, for certain types of problems,” he continues, “is to take all these questions about complicated infinite objects and translate them into questions about finite structures, which are much easier to work with and have applications in practical large-scale computing.”

Creating a unified theory

It all started with a paper Bernshteyn wrote in 2020, which showed that mathematics and computer science could be used in tandem to develop powerful problem-solving techniques. Since the fields used different terminology, however, it soon became clear that a “dictionary” or a unified theory would need to be created to help specialists communicate and collaborate. Now that dictionary is being built, bringing together two previously-distinct fields: distributed computing (a field of computer science), and descriptive set theory (a field of mathematics).

Computer scientists use distributed computing to study so-called “distributed systems,” which model extremely large networks — like the Internet — that involve millions of interconnected machines that are operating independently (for example, blockchain, social networks, streaming services, and cloud computing systems).

“Crucially, these systems are decentralized,” Bernshteyn says. “Although parts of the network can communicate with each other, each of them has limited information about the network’s overall structure and must make decisions based only on this limited information.” Distributed systems allow researchers to develop strategies, called distributed algorithms, that “enable solving difficult problems with as little knowledge of the structure of the entire network as possible,” he adds.
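A small sketch can illustrate what it means for each part of a network to decide using only limited, local information. The example below simulates a standard randomized graph-coloring procedure in synchronous rounds: each node sees only its own neighbors, yet the network as a whole ends up with no two adjacent nodes sharing a color. This is a generic textbook-style illustration, not an algorithm from Bernshteyn’s work; the function name and the six-node ring are hypothetical, and the loop is a single-machine simulation of what would be independent machines.

```python
import random


def local_coloring(neighbors, seed=0):
    """Color a graph so adjacent nodes differ, using only neighbor information."""
    rng = random.Random(seed)
    color = {}  # final colors of nodes that have already decided
    while len(color) < len(neighbors):
        # Step 1: every undecided node proposes a color not already fixed by
        # a neighbor. A node with d neighbors chooses among d + 1 colors,
        # so at least one color is always available.
        proposal = {}
        for node, nbrs in neighbors.items():
            if node in color:
                continue
            taken = {color[n] for n in nbrs if n in color}
            free = [c for c in range(len(nbrs) + 1) if c not in taken]
            proposal[node] = rng.choice(free)
        # Step 2: a node keeps its proposal only if no undecided neighbor
        # proposed the same color in this round; otherwise it retries.
        for node, c in proposal.items():
            if all(proposal.get(n) != c for n in neighbors[node]):
                color[node] = c
    return color


# Hypothetical network: six machines arranged in a ring.
ring = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
coloring = local_coloring(ring)
print(coloring)
```

No node ever looks beyond its immediate neighbors, which is the sense in which the decision is local: the same rule works unchanged whether the ring has six machines or six million.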

At first, distributed algorithms appear entirely unrelated to the other area Bernshteyn’s work brings together: descriptive set theory, an area of pure mathematics concerned with infinite sets defined by “simple” mathematical formulas.

“Sets that do not have such simple definitions typically have properties that make them unsuitable for applications in other areas of mathematics. For example, they are often non-measurable – meaning that it is impossible, even in principle, to determine their length, area, or volume,” Bernshteyn says.

Because undefinable sets are difficult to work with, descriptive set theory aims to understand which problems have “definable”— and therefore more widely applicable— solutions. Recently, a new subfield called descriptive combinatorics has emerged. “Descriptive combinatorics focuses specifically on problems inspired by the ways collections of discrete, individual objects can be organized,” Bernshteyn explains. “Although the field is quite young, it has already found a number of exciting applications in other areas of math.”

The key connection? Since the algorithms used by computer scientists in distributed computing are designed to perform well on extremely large networks, they can also be used by mathematicians interested in infinite problems.

Solving infinite problems

Infinite problems often occur in nature, and the field of descriptive combinatorics has been particularly successful in helping to understand dynamical systems: structures that evolve with time according to specified laws (such as the flow of water in a river or the movement of planets in the Solar System). “Most mathematicians work with continuous, infinite objects, and hence they may benefit from the insight contributed by descriptive set theory,” Bernshteyn adds.

However, while infinite problems are common, they are also notoriously difficult to solve. “In infinite problems, there is no software that can tell you if the problem is solvable or not. There are infinitely many things to try, so it is impossible to test all of them. But if we can make our problems finite, we can sometimes determine which ones can and cannot be solved efficiently,” Bernshteyn says. “We may be able to determine which combinatorial problems can be solved in the infinite setting and get an explicit solution.”

“It turns out that, with some work, it is possible to implement the algorithms used in distributed computing on infinite networks, providing definable solutions to various combinatorial problems,” Bernshteyn says. “Conversely, in certain limited settings it is possible to translate definable solutions to problems on infinite structures into efficient distributed algorithms — although this part of the story is yet to be fully understood.”

A new frontier

As a recently emerged field, descriptive combinatorics is rapidly evolving, putting Bernshteyn and his research on the cutting edge of discovery. “There’s this new communication between separate fields of math and computer science—this huge synergy right now—it’s incredibly exciting,” Bernshteyn says.

Introducing new researchers to descriptive combinatorics, especially graduate students, is another priority for Bernshteyn. His CAREER grant funds will be especially dedicated to training graduate students who might not have had prior exposure to descriptive set theory. Bernshteyn also aims to design a suite of materials, including textbooks, lecture notes, instructional videos, workshops, and courses, to support students and scholars as they enter this new field.

“There’s so much knowledge that’s been acquired,” Bernshteyn says. “There’s work being done by people within computer science, set theory, and so on. But researchers in these fields speak different languages, so to say, and a lot of effort needs to go into creating a way for them to understand each other. Unifying these fields will ultimately allow us to understand them all much better than we did before. Right now we’re only starting to glimpse what’s possible.”

News Contact

Written by Selena Langner

Apr. 18, 2023

Our world is powered by chemical reactions. From new medicines and biotechnology to sustainable energy solutions, developing and understanding the chemical reactions behind innovations is a critical first step in pioneering new advances. And a key part of developing new chemistries is discovering how the rates of those chemical reactions can be accelerated or changed.

For example, even an everyday chemical reaction, like toasting bread, can substantially change in speed and outcome — by increasing the heat, the speed of the reaction increases, toasting the bread faster. Adding another chemical ingredient — like buttering the bread before frying it — also changes the outcome of the reaction: the bread might brown and crisp rather than toast. The lesson? Certain chemical reactions can be accelerated or changed by adding or altering key variables, and understanding those factors is crucial when trying to create the desired reaction (like avoiding burnt toast!).
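The link between heat and reaction speed is commonly summarized by the Arrhenius equation, k = A · exp(−Ea / (R·T)), where k is the rate constant, A is a prefactor, Ea is the activation energy, R is the gas constant, and T is the absolute temperature. The short sketch below evaluates that relation for two temperatures. The activation energy and temperatures are hypothetical values chosen for illustration only; they are not measurements for toasting bread or figures from McDaniel’s research.

```python
import math

GAS_CONSTANT = 8.314  # J/(mol*K)


def rate_constant(prefactor, activation_energy, temperature):
    """Arrhenius rate constant: k = A * exp(-Ea / (R * T))."""
    return prefactor * math.exp(-activation_energy / (GAS_CONSTANT * temperature))


# Hypothetical reaction with Ea = 100 kJ/mol, compared at 420 K and 450 K.
# The prefactor cancels in the ratio, so it is set to 1.0 here.
cooler = rate_constant(1.0, 100_000.0, 420.0)
hotter = rate_constant(1.0, 100_000.0, 450.0)
ratio = hotter / cooler
print(f"The reaction runs about {ratio:.1f} times faster at the higher temperature.")
```

Under these assumed values, a 30-kelvin increase speeds the reaction up severalfold, which is why a small change in a toaster setting can make a large difference in the result.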

Chemists currently use quantum chemistry techniques to predict the rates and energies of chemical reactions, but the method is limited: predictions can usually only be made for up to a few hundred atoms. In order to scale the predictions to larger systems, and predict the environmental effects of reactions, a new framework needs to be developed.

Jesse McDaniel (School of Chemistry and Biochemistry) is creating that framework by leveraging computer modeling techniques. Now, a new NSF CAREER grant will help him do so. The National Science Foundation Faculty Early Career Development Award is a five-year grant designed to help promising researchers establish a foundation for a lifetime of leadership in their field. Known as CAREER awards, the grants are NSF’s most prestigious funding for untenured assistant professors.

“I am excited about the CAREER research because we are really focusing on fundamental questions that are central to all of chemistry,” McDaniel says about the project.

Pioneering a new framework

“Chemical reactions are inherently quantum mechanical in nature,” McDaniel explains. “Electrons rearrange as chemical bonds are broken and formed.” While this type of quantum chemistry can allow scientists to predict the rates and energies of different reactions, these predictions are limited to only tens or hundreds of atoms. That’s where McDaniel’s team comes in. They’re developing modeling techniques based on quantum chemistry that could function over multiple scales, using computer models to scale the predictions. They hope this will help predict environmental effects on chemical reaction rates.

By developing modeling techniques that can be applied to reactions at multiple scales, McDaniel aims to expand scientists’ ability to predict and model chemical reactions and how they interact with their environments. “Our goal is to understand the microscopic mechanisms and intermolecular interactions through which chemical reactions are accelerated within unique solvation environments such as microdroplets, thin films, and heterogenous interfaces,” McDaniel says. He hopes that it will allow for computational modeling of chemical reactions in much larger systems.

Interdisciplinary research

As a theoretical and computational chemist, McDaniel’s chemistry experiments don’t take place in a typical chemistry lab — rather, they take place in a computer lab, where Georgia Tech’s robust computer science and software development community functions as a key resource.

“We run computer simulations on high performance computing clusters,” McDaniel explains. “In this regard, we benefit from the HPC infrastructure at Georgia Tech, including the Partnership for an Advanced Computing Environment (PACE) team, as well as the computational resources provided in the new CODA building.”

“Software is also a critical part of our research,” he continues. “My colleague Professor David Sherrill and his group are lead developers of the Psi4 quantum chemistry software, and this software comprises a core component of our multi-scale modeling efforts.”

In this respect, McDaniel is eager to involve the next generation of chemists and computer scientists, showcasing the connection between these different fields. McDaniel’s team will partner with regional high school teachers, collaborating to integrate software and data science tools within the high school educational curriculum.

“One thing I like about this project,” McDaniel says, “is that all types of chemists — organic, inorganic, analytical, bio, physical, etc. — care about how chemical reactions happen, and how reactions are influenced by their surroundings.”

News Contact

Written by Selena Langner

Apr. 04, 2023

Georgia Tech Battery Day opened with a full house on March 30, 2023, at the Global Learning Center in the heart of Midtown Atlanta. More than 230 energy researchers and industry participants convened to discuss and advance energy storage technologies via lightning talks, panel discussions, student poster sessions, and networking sessions throughout the day. Matt McDowell, associate professor in the Woodruff School of Mechanical Engineering and the School of Materials Science and Engineering as well as the initiative lead for energy storage at the Strategic Energy Institute and the Institute of Materials, started the day with an overview of the relevant research at Georgia Tech. His talk shed light on Georgia becoming the epicenter of the battery belt of the Southeast with recent key industry investments and the robust energy-storage research community present at Georgia Tech.

According to the Metro Atlanta Chamber of Commerce, since 2020, Georgia has had $21 billion invested or announced in EV-related projects with 26,700 jobs created. With investments in alternate energy technologies growing exponentially in the nation, McDowell said Georgia Tech is well-positioned to make an impact on next-generation energy storage technologies and extended an open invitation to industry members to partner with researchers. As one of the most research-intensive academic institutions in the nation, Georgia Tech has more than $1.3 billion in research and other sponsored funds and produces the highest number of engineering doctoral graduates in the nation.

“More than half of Georgia Tech’s strategic initiatives are focused on improving the efficiency and sustainability of energy storage, supporting clean energy sources, and mitigating climate change,” said Chaouki Abdallah, executive vice president for research at Georgia Tech. “As a leader in battery technologies research, we are bringing together engineers, scientists, and researchers in academia and industry to conduct innovative research to address humanity’s most urgent and complex challenges, and to advance technology and improve the human condition.”

Rich Simmons, director of research and studies at the Strategic Energy Institute, moderated the first panel discussion, which included industry panelists from Panasonic, Cox Automotive, Bluebird Corp., Delta Airlines and Hyundai Kia. The panelists analyzed the opportunities and challenges in the electric transportation sector and explained their current focus areas in energy storage. The panel affirmed that while EVs have been around for more than three decades, the industry is still in its infancy and there is huge potential to advance technology in all areas of the EV sector.

The discussion also brought forth important factors like safety, lifecycle, and sustainability in driving innovations in the energy storage sector. The attendees also discussed supply chain issues, a hot topic in almost all sectors of the nation, and the need to develop a diversity of resources for more resilient systems. The industry panelists affirmed a strong interest in partnering on research and development projects as well as gaining access to university talent.

Gleb Yushin, professor in the School of Materials Science and Engineering and co-founder of Sila Nanotechnologies Inc., presented his battery research and development success story at Georgia Tech. Sila, a Georgia Tech start-up founded in 2011, produced the world’s first commercially available high-silicon-content anode for lithium-ion batteries in 2021. Materials manufactured in its U.S. facilities will power electric vehicles starting with the Mercedes-Benz G-class series in 2023.

The program included lightning talks on cutting-edge research in battery materials, specifically solid-state electrolytes and plastic crystal embedded elastomer electrolytes (PCEEs) by Seung Woo Lee, associate professor in the George W. Woodruff School of Mechanical Engineering. Santiago Grijalva, professor in the School of Electrical and Computer Engineering, discussed the challenges and opportunities for the successful use of energy storage for the grid.

Tequila Harris, initiative lead for Energy and Manufacturing and professor in the George W. Woodruff School of Mechanical Engineering, spoke about energy materials and carbon-neutral applications. Presenting a case for roll-to-roll manufacturing of battery materials, Harris said that the need for quick, high-yield manufacturing processes and for alternative materials and structures was an important consideration for the industry.

Materials, manufacturing, and market opportunities were the topic of the next panel, which was moderated by McDowell and included panelists from Albemarle, Novelis, Solvay, Truist Securities, and Energy Impact Partners. Analyzing the current challenges, the panelists brought up hiring and workforce development, increasing capacity and building the ecosystem, decarbonizing existing processes, and understanding federal policies and regulations.

Lightning talks later in the afternoon by researchers at Georgia Tech touched on the latest developments in the cross-disciplinary research bridging mechanical engineering, chemical engineering, AI manufacturing, and material science in energy storage research. Topics included safe rechargeable batteries with water-based electrolytes (Nian Liu, assistant professor, School of Chemical & Biomolecular Engineering), AI-accelerated manufacturing (Aaron Stebner, associate professor, School of Materials Science and Engineering), battery recycling (Hailong Chen, associate professor, School of Materials Science and Engineering), and parametric life-cycle models for a solid-state battery circular economy (Ilan Stern, research scientist from GTRI).

Another industry panel, on grid, infrastructure, and communities, was moderated by Faisal Alamgir, professor in the School of Materials Science and Engineering, and included panelists from Southern Company, Stryten Energy, and the Metro Atlanta Chamber of Commerce. Improving the grid resiliency and storage capacity; proximity to the energy source; optimizing and implementing new technology in an equitable way; standardization of the evolving business models; economic development and resource building through skilled workforce; educating the consumer; and getting larger portions of the grid with renewable energy were top of mind with the panelists.

“Energy-storage-related R&D efforts at Georgia Tech are extensive and include next-gen battery chemistry development, battery characterization, recycling, and energy generation and distribution,” said McDowell. “There is a tremendous opportunity to leverage the broad expertise we bring to advance energy storage systems. Battery Day has been hugely successful in not only bringing this expertise to the forefront, but also in affirming the need for continued interaction with the companies engaged in this arena. Our mission is to serve as a centralized focal point for research interactions between companies in the battery/EV space and faculty members on campus.”

News Contact

Priya Devarajan | SEI Communications Manager

Jan. 03, 2023

Though it is a cornerstone of virtually every process that occurs in living organisms, the proper folding and transport of biological proteins is a notoriously difficult and time-consuming process to experimentally study.

In a new paper published in eLife, researchers in the School of Biological Sciences and the School of Computer Science have shown that AF2Complex may be able to lend a hand.

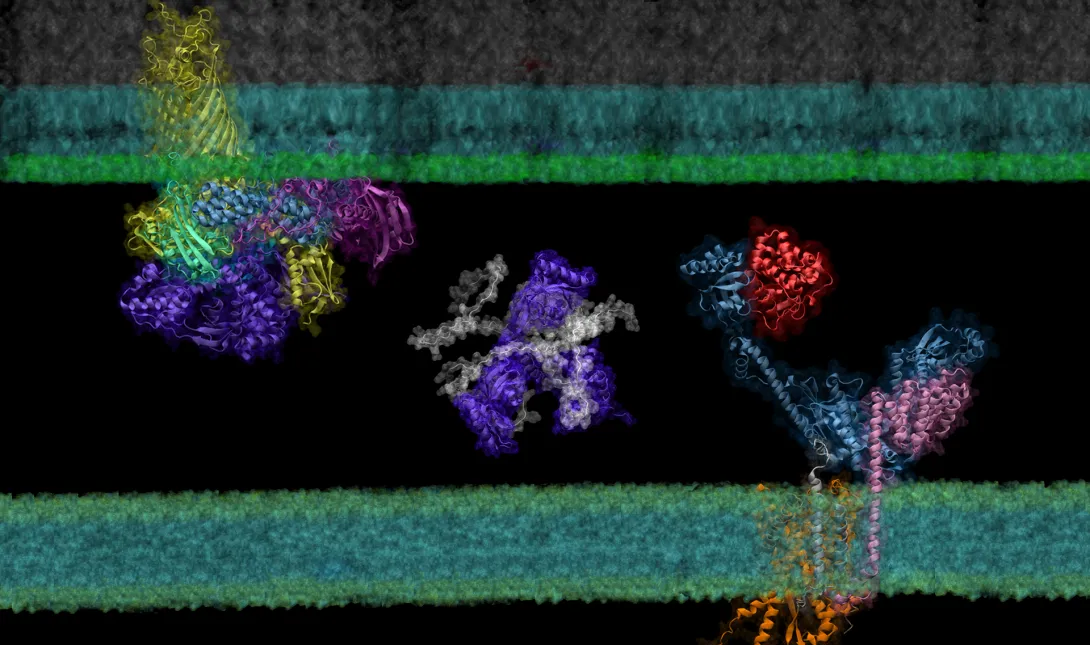

Building on the models of DeepMind’s AlphaFold 2, a machine learning tool able to predict the detailed three-dimensional structures of individual proteins, AF2Complex — short for AlphaFold 2 Complex — is a deep learning tool designed to predict the physical interactions of multiple proteins. With these predictions, AF2Complex is able to calculate which proteins are likely to interact with each other to form functional complexes in unprecedented detail.

“We essentially conduct computational experiments that try to figure out the atomic details of supercomplexes (large interacting groups of proteins) important to biological functions,” explained Jeffrey Skolnick, Regents’ Professor and Mary and Maisie Gibson Chair in the School of Biological Sciences, and one of the corresponding authors of the study. With AF2Complex, which was developed last year by the same research team, it’s “like using a computational microscope powered by deep learning and supercomputing.”

In their latest study, the researchers used this ‘computational microscope’ to examine a complicated protein synthesis and transport pathway, hoping to clarify how proteins in the pathway interact to ultimately transport a newly synthesized protein from the interior to the outer membrane of the bacteria — and identify players that experiments might have missed. Insights into this pathway may identify new targets for antibiotic and therapeutic design while providing a foundation for using AF2Complex to computationally expedite this type of biology research as a whole.

Computing complexes

Created by London-based artificial intelligence lab DeepMind, AlphaFold 2 is a deep learning tool able to generate accurate predictions about the three-dimensional structure of single proteins using just their building blocks, amino acids. Taking things a step further, AF2Complex uses these structures to predict the likelihood that proteins are able to interact to form a functional complex, what aspects of each structure are the likely interaction sites, and even what protein complexes are likely to pair up to create even larger functional groups called supercomplexes.

“The successful development of AF2Complex earlier this year makes us believe that this approach has tremendous potential in identifying and characterizing the set of protein-protein interactions important to life,” shared Mu Gao, a senior research scientist at Georgia Tech. “To further convince the broad molecular biology community, we [had to] demonstrate it with a more convincing, high impact application.”

The researchers chose to apply AF2Complex to a pathway in Escherichia coli (E. coli), a model organism in life sciences research commonly used for experimental DNA manipulation and protein production due to its relative simplicity and fast growth.

To demonstrate the tool’s power, the team examined the synthesis and transport of proteins that are essential for exchanging nutrients and responding to environmental stressors: outer membrane proteins, or OMPs for short. These proteins reside on the outermost membrane of gram-negative bacteria, a large family of bacteria characterized by the presence of inner and outer membranes, like E. coli. However, the proteins are created inside the cell and must be transported to their final destinations.

“After more than two decades of experimental studies, researchers have identified some of the protein complexes of key players, but certainly not all of them,” Gao explained. AF2Complex “could enable us to discover some novel and interesting features of the OMP biogenesis pathway that were missed in previous experimental studies.”

New insights

Using the Summit supercomputer at the Oak Ridge National Laboratory, the team, which included computer science undergraduate Davi Nakajima An, put AF2Complex to the test. They compared a few proteins known to be important in the synthesis and transport of OMPs to roughly 1,500 other proteins — all of the known proteins in E. coli’s cell envelope — to see which pairs the tool computed as most likely to interact, and which of those pairs were likely to form supercomplexes.

To determine if AF2Complex’s predictions were correct, the researchers compared the tool’s predictions to known experimental data. “Encouragingly,” said Skolnick, “among the top hits from computational screening, we found previously known interacting partners.” Even within those protein pairs known to interact, AF2Complex was able to highlight structural details of those interactions that explain data from previous experiments, lending additional confidence to the tool’s accuracy.

In addition to known interactions, AF2Complex predicted several unknown pairs. Digging further into these unexpected partners revealed details on what aspects of the pairs might interact to form larger groups of functional proteins, likely active configurations of complexes that have previously eluded experimentalists, and new potential mechanisms for how OMPs are synthesized and transported.

“Since the outer membrane pathway is both vital and unique to gram-negative bacteria, the key proteins involved in this pathway could be novel targets for new antibiotics,” said Skolnick. “As such, our work that provides molecular insights about these new drug targets might be valuable to new therapeutic design.”

Beyond this pathway, the researchers are hopeful that AF2Complex could mean big things for biology research.

“Unlike predicting structures of a single protein sequence, predicting the structural model of a supercomplex can be very complicated, especially when the components or stoichiometry of the complex is unknown,” Gao noted. “In this regard, AF2Complex could be a new computational tool for biologists to conduct trial experiments of different combinations of proteins,” potentially expediting and increasing the efficiency of this type of biology research as a whole.

AF2Complex is an open-source tool that is available to the public for download.

This work was supported in part by the DOE Office of Science, Office of Biological and Environmental Research (DOE DE-SC0021303) and the Division of General Medical Sciences of the National Institutes of Health (NIH R35GM118039). DOI: https://doi.org/10.7554

News Contact

Writer: Audra Davidson

Communications Officer

College of Sciences at Georgia Tech

Editor: Jess Hunt-Ralston

Director of Communications

College of Sciences at Georgia Tech

Sep. 28, 2022

Advancement in technology brings about plenty of benefits for everyday life, but it also provides cyber criminals and other potential adversaries with new opportunities to cause chaos for their own benefit.

As researchers begin to shape the future of artificial intelligence in manufacturing, Georgia Tech recognizes the potential risks to this technology once it is implemented on an industrial scale. That’s why Associate Professor Saman Zonouz will begin researching ways to protect the nation’s newest investment in manufacturing.

The project is part of the $65 million grant from the U.S. Department of Commerce’s Economic Development Administration to develop the Georgia AI Manufacturing (GA-AIM) Technology Corridor. While the main purpose of the grant is to develop ways of integrating artificial intelligence into manufacturing, it will also help advance cybersecurity research, educational outreach, and workforce development in the field.

“When introducing new capabilities, we don’t know about its cybersecurity weaknesses and landscape,” said Zonouz. “In the IT world, the potential cybersecurity vulnerabilities and corresponding mitigation are clear, but when it comes to artificial intelligence in manufacturing, the best practices are uncertain. We don’t know what all could go wrong.”

Zonouz will work alongside other Georgia Tech researchers in the new Advanced Manufacturing Pilot Facility (AMPF) to pinpoint where those inevitable attacks will come from and how they can be repelled. Along with a team of Ph.D. students, Zonouz will create a roadmap for future researchers, educators, and industry professionals to use when detecting and responding to cyberattacks.

“As we increasingly rely on computing and artificial intelligence systems to drive innovation and competitiveness, there is a growing recognition that the security of these systems is of paramount importance if we are to realize the anticipated gains,” said Michael Bailey, Inaugural Chair of the School of Cybersecurity and Privacy (SCP). “Professor Zonouz is an expert in the security of industrial control systems and will be a vital member of the new coalition as it seeks to provide leadership in manufacturing automation.”

Before coming to Georgia Tech, Zonouz worked with the School of Electrical and Computer Engineering (ECE) and the College of Engineering on protecting and studying the cyber-physical systems of manufacturing. He worked with Raheem Beyah, Dean of the College of Engineering and ECE professor, on several research papers, including two that were published at the 26th USENIX Security Symposium and the Network and Distributed System Security Symposium.

“As Georgia Tech continues to position itself as a leader in artificial intelligence manufacturing, interdisciplinary collaboration is not only an added benefit, it is fundamental,” said Arijit Raychowdhury, Steve W. Chaddick School Chair and Professor of ECE. “Saman’s cybersecurity expertise will play a crucial role in the overall protection and success of GA-AIM and AMPF. ECE is proud to have him representing the school on this important project.”

The research is expected to take five years, which is typical for a project of this scale. Apart from research, the GA-AIM program will include workforce development and educational outreach components. The cyber testbed developed by Zonouz and his team will live in the 24,000-square-foot AMPF.

News Contact

JP Popham

Communications Officer | School of Cybersecurity and Privacy

Georgia Institute of Technology

jpopham3@gatech.edu | scp.cc.gatech.edu
