Mar. 19, 2024

Computer science educators will soon gain valuable insights from computational epidemiology courses, like one offered at Georgia Tech.

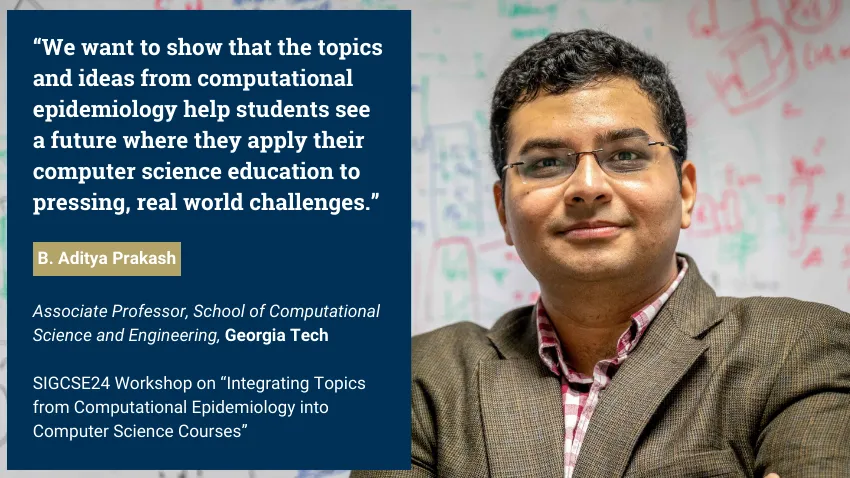

B. Aditya Prakash is part of a research group that will host a workshop on how topics from computational epidemiology can enhance computer science classes.

These lessons would produce computer science graduates with improved skills in data science, modeling, simulation, artificial intelligence (AI), and machine learning (ML).

Because epidemics transcend the sphere of public health, these topics would groom computer scientists versed in issues from social, financial, and political domains.

The group’s virtual workshop takes place on March 20 at the technical symposium for the Special Interest Group on Computer Science Education (SIGCSE). SIGCSE is one of 38 special interest groups of the Association for Computing Machinery (ACM). ACM is the world’s largest scientific and educational computing society.

“We decided to do a tutorial at SIGCSE because we believe that computational epidemiology concepts would be very useful in general computer science courses,” said Prakash, an associate professor in the School of Computational Science and Engineering (CSE).

“We want to give an introduction to concepts, like what computational epidemiology is, and how topics, such as algorithms and simulations, can be integrated into computer science courses.”

Prakash kicks off the workshop with an overview of computational epidemiology. He will use examples from his CSE 8803: Data Science for Epidemiology course to introduce basic concepts.

This overview includes a survey of models used to describe the behavior of diseases. Models serve as the foundation for simulations, which test hypotheses and make predictions about disease spread and impact.

Prakash will explain the different kinds of models used in epidemiology, such as traditional mechanistic models and more recent ML- and AI-based models.
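A classic example of a traditional mechanistic model is the SIR (susceptible-infected-recovered) compartmental model. The minimal sketch below is not taken from Prakash's course materials; it assumes a closed population, made-up transmission and recovery rates (`beta`, `gamma`), and simple forward-Euler time stepping.

```python
def simulate_sir(s0, i0, r0, beta, gamma, days, dt=0.1):
    """Integrate the SIR equations with forward Euler.

    s0, i0, r0: initial population fractions (should sum to 1)
    beta: transmission rate per day (illustrative assumption)
    gamma: recovery rate per day (illustrative assumption)
    Returns a list of (day, S, I, R) tuples, one per simulated day.
    """
    s, i, r = s0, i0, r0
    steps_per_day = round(1 / dt)
    history = [(0, s, i, r)]
    for day in range(1, days + 1):
        for _ in range(steps_per_day):
            new_infections = beta * s * i * dt  # dS/dt = -beta * S * I
            new_recoveries = gamma * i * dt     # dR/dt = gamma * I
            s -= new_infections
            i += new_infections - new_recoveries
            r += new_recoveries
        history.append((day, s, i, r))
    return history


if __name__ == "__main__":
    # beta / gamma = 3 here, a made-up basic reproduction number.
    trajectory = simulate_sir(0.99, 0.01, 0.0, beta=0.3, gamma=0.1, days=160)
    peak_day, _, peak_i, _ = max(trajectory, key=lambda row: row[2])
    print(f"peak infections: {peak_i:.1%} of population on day {peak_day}")
```

Each step moves people from S to I to R, so the three fractions always sum to one; fitting `beta` and `gamma` to surveillance data is where data science enters the picture.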

Prakash’s discussion includes modeling used in recent epidemics like Covid-19, Zika, H1N1 influenza, and Ebola. He will also cover examples from the 19th and 20th centuries to illustrate how epidemiology has advanced using data science and computation.

“I strongly believe that data and computation have a very important role to play in the future of epidemiology, and public health is computational,” Prakash said.

“My course and these workshops give that viewpoint, and provide a broad framework of data science and computational thinking that can be useful.”

While humankind has studied disease transmission for millennia, computational epidemiology is a new approach to understanding how diseases can spread throughout communities.

The Covid-19 pandemic helped bring computational epidemiology to the forefront of public awareness. This exposure has increased demand for applying the field in computer science education.

Prakash joins Baltazar Espinoza and Natarajan Meghanathan in the workshop presentation. Espinoza is a research assistant professor at the University of Virginia. Meghanathan is a professor at Jackson State University.

The group is connected through Global Pervasive Computational Epidemiology (GPCE). GPCE is a partnership of 13 institutions aimed at advancing computational foundations, engineering principles, and technologies of computational epidemiology.

The National Science Foundation (NSF) supports GPCE through the Expeditions in Computing program. Prakash is also principal investigator on other NSF-funded grants, and material from those projects appears in his workshop presentation.

Outreach and broadening participation in computing are core tenets for Prakash and GPCE because epidemics can reach so widely. The SIGCSE workshop is one way the group uses educational programs to train the next generation of scientists around the globe.

“Algorithms, machine learning, and other topics are fundamental in graduate and undergraduate computer science courses nowadays,” Prakash said.

“Using examples like projects, homework questions, and data sets, we want to show that the topics and ideas from computational epidemiology help students see a future where they apply their computer science education to pressing, real world challenges.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Mar. 14, 2024

Schmidt Sciences has selected Kai Wang as one of 19 researchers to receive this year’s AI2050 Early Career Fellowship. In doing so, Wang becomes the first AI2050 fellow to represent Georgia Tech.

“I am excited about this fellowship because there are so many people at Georgia Tech using AI to create social impact,” said Wang, an assistant professor in the School of Computational Science and Engineering (CSE).

“I feel so fortunate to be part of this community and to help Georgia Tech bring more impact on society.”

AI2050 has allocated up to $5.5 million to support the cohort. Fellows receive up to $300,000 over two years and will join the Schmidt Sciences network of experts to advance their research in artificial intelligence (AI).

Wang’s AI2050 project centers on leveraging decision-focused AI to address challenges facing health and environmental sustainability. His goal is to strengthen and deploy decision-focused AI in collaboration with stakeholders to solve broad societal problems.

Wang’s approach to decision-focused AI integrates machine learning with optimization to train models based on the quality of the decisions they produce. These models borrow knowledge from decision-making processes in high-stakes domains to improve overall performance.
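Why train on decision quality instead of prediction accuracy? The toy sketch below, using made-up numbers and a hypothetical "pick the single most valuable option" decision, shows that a prediction with lower mean squared error can still lead to a worse decision. Decision-focused learning uses a decision-quality measure such as regret as its training signal; this example only illustrates that measure and is not Wang's training method.

```python
def mse(pred, true):
    """Mean squared prediction error."""
    return sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true)


def choose_best(values):
    """Hypothetical downstream decision: pick the index with the highest value."""
    return max(range(len(values)), key=lambda k: values[k])


def regret(pred, true):
    """Decision quality: value lost by acting on predictions instead of the truth."""
    return true[choose_best(true)] - true[choose_best(pred)]


# Made-up true values of three options and two competing predictions.
true_values = [3.0, 2.0, 1.0]
pred_a = [2.0, 3.0, 1.0]  # lower MSE, but ranks the wrong option first
pred_b = [4.0, 1.0, 2.0]  # higher MSE, but picks the right option

if __name__ == "__main__":
    for name, pred in [("A", pred_a), ("B", pred_b)]:
        print(name, "MSE =", round(mse(pred, true_values), 3),
              "regret =", regret(pred, true_values))
```

Prediction A has the lower error (about 0.667 versus 1.0) yet loses 1.0 unit of value, while B loses nothing. A model trained only to minimize error would prefer A; a decision-focused objective prefers B.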

Part of Wang’s approach is to work closely with non-profit and non-governmental organizations. This collaboration helps Wang better understand problems at the point of need and gain knowledge from domain experts to custom-build AI models.

“It is very important to me to see my research impacting human lives and society,” Wang said. “That reinforces my interest and motivation in using AI for social impact.”

This year’s cohort is only the second in the fellowship’s history. Wang joins a class that spans four countries, six disciplines, and seventeen institutions.

AI2050 commits $125 million over five years to identify and support talented individuals seeking solutions to ensure society benefits from AI. Last year’s AI2050 inaugural class of 15 early career fellows received $4 million.

AI2050 takes its name from the central motivating question that fellows answer through their projects:

It’s 2050. AI has turned out to be hugely beneficial to society. What happened? What are the most important problems we solved and the opportunities and possibilities we realized to ensure this outcome?

AI2050 encourages young researchers to pursue bold and ambitious work on difficult challenges and promising opportunities in AI. These projects involve research that is multidisciplinary, risky, and hard to fund through traditional means.

Schmidt Sciences, LLC is a 501(c)(3) non-profit organization supported by philanthropists Eric and Wendy Schmidt. Schmidt Sciences aims to accelerate and deepen understanding of the natural world and develop solutions to real-world challenges for public benefit.

Schmidt Sciences identifies under-supported or unconventional areas of exploration and discovery with potential for high impact. Focus areas include AI and advanced computing, astrophysics and space, biosciences, climate, and cross-science.

“I am most grateful for the advice from my mentors, colleagues, and collaborators, and of course AI2050 for choosing me for this prestigious fellowship,” Wang said. “The School of CSE has given me so much support, including career advice from junior and senior level faculty.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Mar. 05, 2024

Computer graphic simulations can represent natural phenomena such as tornadoes, underwater vortices, and liquid foams more accurately thanks to an advancement in creating artificial intelligence (AI) neural networks.

Working with a multi-institutional team of researchers, Georgia Tech Assistant Professor Bo Zhu combined computer graphic simulations with machine learning models to create enhanced simulations of known phenomena. The new benchmark could lead to researchers constructing representations of other phenomena that have yet to be simulated.

Zhu co-authored the paper Fluid Simulation on Neural Flow Maps. The Association for Computing Machinery’s Special Interest Group in Computer Graphics and Interactive Technology (SIGGRAPH) gave it a best paper award in December at the SIGGRAPH Asia conference in Sydney, Australia.

The authors say the advancement could be as significant to computer graphic simulations as the introduction of neural radiance fields (NeRFs) was to computer vision in 2020. Introduced by researchers at the University of California, Berkeley, the University of California, San Diego, and Google, NeRFs are neural networks that turn a collection of 2D images into a navigable 3D scene.

NeRFs have become a benchmark among computer vision researchers. Zhu and his collaborators hope their creation, neural flow maps, can do the same for simulation researchers in computer graphics.

“A natural question to ask is, can AI fundamentally overcome the traditional method’s shortcomings and bring generational leaps to simulation as it has done to natural language processing and computer vision?” Zhu said. “Simulation accuracy has been a significant challenge to computer graphics researchers. No existing work has combined AI with physics to yield high-end simulation results that outperform traditional schemes in accuracy.”

In computer graphics, simulation pipelines are the equivalent of neural networks and allow simulations to take shape. They are traditionally constructed through mathematical equations and numerical schemes.

Zhu said researchers have tried to design simulation pipelines with neural representations to construct more robust simulations. However, efforts to achieve higher physical accuracy have fallen short.

Zhu attributes the problem to the difficulty of exploiting the full capacity of AI algorithms within the structure of traditional simulation pipelines. To solve the problem and give machine learning real influence, Zhu and his collaborators proposed a new framework that redesigns the simulation pipeline.

They named these new pipelines neural flow maps. The maps use machine learning models to store spatiotemporal data more efficiently. The researchers then align these models with their mathematical framework to achieve a higher accuracy than previous pipeline simulations.

Zhu said he does not believe machine learning should be used to replace traditional numerical equations. Rather, it should complement them to unlock new, advantageous paradigms.

“Instead of trying to deploy modern AI techniques to replace components inside traditional pipelines, we co-designed the simulation algorithm and machine learning technique in tandem,” Zhu said.

“Numerical methods are not optimal because of their limited computational capacity. Recent AI-driven capacities have uplifted many of these limitations. Our task is redesigning existing simulation pipelines to take full advantage of these new AI capacities.”

In the paper, the authors state that these once-unattainable algorithmic designs could unlock new research possibilities in computer graphics.

Neural flow maps offer “a new perspective on the incorporation of machine learning in numerical simulation research for computer graphics and computational sciences alike,” the paper states.

“The success of Neural Flow Maps is inspiring for how physics and machine learning are best combined,” Zhu added.

News Contact

Nathan Deen, Communications Officer

Georgia Tech School of Interactive Computing

nathan.deen@cc.gatech.edu

Mar. 05, 2024

Georgia Tech is developing a new artificial intelligence (AI) based method to automatically find and stop threats to renewable energy and local generators for energy customers across the nation’s power grid.

The research will concentrate on protecting distributed energy resources (DER), which are most often used on low-voltage portions of the power grid. They can include rooftop solar panels, controllable electric vehicle chargers, and battery storage systems.

The cybersecurity concern is that an attacker could compromise these systems and use them to cause problems across the electrical grid, such as overloaded components and voltage fluctuations. These issues pose a national security risk and could cause massive customer disruptions through blackouts and equipment damage.

“Cyber-physical critical infrastructures provide us with core societal functionalities and services such as electricity,” said Saman Zonouz, Georgia Tech associate professor and lead researcher for the project.

“Our multi-disciplinary solution, DerGuard, will leverage device-level cybersecurity, system-wide analysis, and AI techniques for automated vulnerability assessment, discovery, and mitigation in power grids with emerging renewable energy resources.”

The project’s long-term outcome will be a secure, AI-enabled power grid solution that can find and protect the DERs on its network from cyberattacks.

“First, we will identify sets of critical DERs that, if compromised, would allow the attacker to cause the most trouble for the power grid,” said Daniel Molzahn, assistant professor at Georgia Tech.

“These DERs would then be prioritized for analysis and patching any identified cyber problems. Identifying the critical sets of DERs would require information about the DERs themselves (like size or location) and the power grid. This way, the utility company or other aggregator would be in the best position to use this tool.”
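The point that the *set* of compromised DERs matters, not just each device on its own, can be illustrated with a toy brute-force search. Everything here is hypothetical: the device names, feeder assignments, and capacities are invented, and the impact score (squared compromised capacity per feeder, so that concentrated attacks count more) is a stand-in assumption, not DerGuard's actual grid model.

```python
from itertools import combinations

# Hypothetical DER inventory: name -> (feeder, capacity in kW).
DERS = {
    "A": ("feeder1", 5.0),
    "B": ("feeder2", 6.0),
    "C": ("feeder1", 4.0),
    "D": ("feeder3", 3.0),
}


def impact(subset):
    """Stand-in impact score (an assumption): sum over feeders of the squared
    capacity an attacker controls there, so compromised devices concentrated
    on one feeder score higher than the same capacity spread out."""
    per_feeder = {}
    for name in subset:
        feeder, capacity = DERS[name]
        per_feeder[feeder] = per_feeder.get(feeder, 0.0) + capacity
    return sum(total ** 2 for total in per_feeder.values())


def most_critical_set(k):
    """Exhaustively score every k-device subset and return the worst case."""
    return max(combinations(sorted(DERS), k), key=impact)


if __name__ == "__main__":
    best = most_critical_set(2)
    print(best, impact(best))
```

Under this made-up metric, the two largest devices (B and A) score 61, while A and C, which share a feeder, score 81, so ranking devices one at a time would miss the most critical pair. Exhaustive search grows combinatorially, so it only suits tiny examples like this one.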

Additionally, the team will establish a testbed with industry partners. They will then develop and evaluate technology applications to better understand the interactions among people, devices, and network performance.

Along with Zonouz and Molzahn, Wenke Lee, Georgia Tech professor and John P. Imlay Jr. Chair in Software, will also lead the team of researchers from across the country.

The researchers are collaborating with the University of Illinois at Urbana-Champaign, the Department of Energy’s National Renewable Energy Lab, the Idaho National Labs, the National Rural Electric Cooperative Association, and Fortiphyd Logic. Industry partners Network Perception, Siemens, and PSE&G will advise the researchers.

The work will be carried out at Georgia Tech’s Cyber-Physical Security Lab (CPSec) within the School of Cybersecurity and Privacy (SCP) and the School of Electrical and Computer Engineering (ECE).

The U.S. Department of Energy (DOE) announced a $45 million investment at the end of February for 16 cybersecurity initiatives. The projects will identify new cybersecurity tools and technologies designed to reduce cyber risks for energy infrastructure, followed by technology-transfer initiatives. The DOE’s Office of Cybersecurity, Energy Security, and Emergency Response (CESER) awarded $4.2 million for the Institute’s DerGuard project.

News Contact

JP Popham, Communications Officer II

Georgia Tech School of Cybersecurity & Privacy

john.popham@cc.gatech.edu

Feb. 05, 2024

Scientists are always looking for better computer models that simulate the complex systems that define our world. To meet this need, a Georgia Tech workshop held Jan. 16 illustrated how new artificial intelligence (AI) research could usher in the next generation of scientific computing.

The workshop focused on applying AI to the optimization of complex systems. Presentations on climatological and electromagnetic simulations showed that these techniques yield more efficient and accurate computer models. The workshop also advanced AI research itself, since AI models are typically not well suited to optimization tasks.

The School of Computational Science and Engineering (CSE) and the Institute for Data Engineering and Science jointly sponsored the workshop.

School of CSE Assistant Professors Peng Chen and Raphaël Pestourie led the workshop’s organizing committee and moderated the workshop’s two panel discussions. The duo also pitched their own research, highlighting the potential of scientific AI.

Chen shared his work on derivative-informed neural operators (DINOs). DINOs are a class of neural networks that use derivative information to approximate solutions of partial differential equations. The derivative enhancement results in neural operators that are more accurate and efficient.
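A toy analog of why derivative information helps: the sketch below fits a cubic polynomial to a function using both its values and its derivatives at just two sample points. With values alone, two points give two equations for four unknown coefficients, so the fit is underdetermined; derivative data supplies the two missing equations. This is ordinary derivative-augmented fitting (here an exactly determined cubic Hermite fit), not a neural operator, and the target `sin(x)` and sample points are arbitrary choices for illustration.

```python
import math


def solve_linear(a, b):
    """Solve a square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_cubic_with_derivatives(xs, f, df):
    """Fit c0 + c1*x + c2*x^2 + c3*x^3 to values f(x) AND slopes df(x)."""
    rows, rhs = [], []
    for x in xs:
        rows.append([1.0, x, x ** 2, x ** 3])       # value equation
        rhs.append(f(x))
        rows.append([0.0, 1.0, 2 * x, 3 * x ** 2])  # derivative equation
        rhs.append(df(x))
    return solve_linear(rows, rhs)


def cubic(c, x):
    """Evaluate the fitted cubic at x."""
    return c[0] + c[1] * x + c[2] * x ** 2 + c[3] * x ** 3


if __name__ == "__main__":
    coeffs = fit_cubic_with_derivatives([0.0, 1.0], math.sin, math.cos)
    for x in (0.25, 0.5, 0.75):
        print(f"x={x}: fit={cubic(coeffs, x):.4f}  sin={math.sin(x):.4f}")
```

Each sample now contributes two equations instead of one, which offers one intuition for how derivative information can reduce the amount of training data a surrogate needs.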

During his talk, Chen showed how DINOs make better predictions with reliable derivatives. These could help solve data assimilation problems in weather and flooding prediction. Other applications include allocating sensors for early tsunami warnings and designing new self-assembly materials.

All these models contain elements of uncertainty where data is unknown, noisy, or changes over time. Not only are DINOs powerful tools for quantifying uncertainty, but they also require little training data to become functional.

“Recent advances in AI tools have become critical in enhancing societal resilience and quality, particularly through their scientific uses in environmental, climatic, material, and energy domains,” Chen said.

“These tools are instrumental in driving innovation and efficiency in these and many other vital sectors.”

One challenge in studying complex systems is that they require many simulations to generate enough data to learn from and make better predictions. But with limited data on hand, it is costly to run enough simulations to produce new data.

At the workshop, Pestourie presented his physics-enhanced deep surrogates (PEDS) as a solution to this optimization problem.

PEDS employs scientific AI to make efficient use of available data while demanding fewer computational resources. PEDS proved up to three times more accurate than models using neural networks while needing at least 100 times less training data.

PEDS yielded these results in tests on diffusion, reaction-diffusion, and electromagnetic scattering models. PEDS performed well in these experiments geared toward physics-based applications because it combines a physics simulator with a neural network generator.

“Scientific AI makes it possible to systematically leverage models and data simultaneously,” Pestourie said. “The more adoption of scientific AI there will be by domain scientists, the more knowledge will be created for society.”

Studying and developing AI applications at these scales requires the most powerful computers available. The workshop invited speakers from national laboratories who showcased the supercomputing capabilities available at their facilities. These included Oak Ridge National Laboratory, Sandia National Laboratories, and Pacific Northwest National Laboratory.

The workshop hosted Georgia Tech faculty who represented the Colleges of Computing, Design, Engineering, and Sciences. Among these were workshop co-organizers Yan Wang and Ebeneser Fanijo. Wang is a professor in the George W. Woodruff School of Mechanical Engineering, and Fanijo is an assistant professor in the School of Building Construction.

The workshop welcomed academics outside of Georgia Tech to share research occurring at their institutions. These speakers hailed from Emory University, Clemson University, and the University of California, Berkeley.

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Jan. 04, 2024

While increasing numbers of people are seeking mental health care, mental health providers are facing critical shortages. Now, an interdisciplinary team of investigators at Georgia Tech, Emory University, and Penn State aims to develop an interactive AI system that can provide key insights and feedback to help these professionals improve and provide higher-quality care, while satisfying the increasing demand for highly trained, effective mental health professionals.

A new $2,000,000 grant from the National Science Foundation (NSF) will support the research.

The research builds on previous collaboration between Rosa Arriaga, an associate professor in the College of Computing, and Andrew Sherrill, an assistant professor in the Department of Psychiatry and Behavioral Sciences at Emory University, who worked together on a computational system for PTSD therapy.

Arriaga and Christopher Wiese, an assistant professor in the School of Psychology, will lead the Georgia Tech team; Saeed Abdullah, an assistant professor in the College of Information Sciences and Technology, will lead the Penn State team; and Sherrill will serve as overall project lead and Emory team lead.

The grant, for “Understanding the Ethics, Development, Design, and Integration of Interactive Artificial Intelligence Teammates in Future Mental Health Work,” will allocate $801,660 to the Georgia Tech team to support four years of research.

“The initial three years of our project are dedicated to understanding and defining what functionalities and characteristics make an AI system a 'teammate' rather than just a tool,” Wiese says. “This involves extensive research and interaction with mental health professionals to identify their specific needs and challenges. We aim to understand the nuances of their work, their decision-making processes, and the areas where AI can provide meaningful support. In the final year, we plan to implement a trial run of this AI teammate philosophy with mental health professionals.”

While the project focuses on mental health workers, the impacts of the project range far beyond. “AI is going to fundamentally change the nature of work and workers,” Arriaga says. “And, as such, there’s a significant need for research to develop best practices for integrating worker, work, and future technology.”

The team underscores that sectors like business, education, and customer service could easily apply this research. The ethics protocol the team will develop will also provide a critical framework for best practices. The team also hopes that their findings could inform policymakers and stakeholders making key decisions regarding AI.

“The knowledge and strategies we develop have the potential to revolutionize how AI is integrated into the broader workforce,” Wiese adds. “We are not just exploring the intersection of human and synthetic intelligence in the mental health profession; we are laying the groundwork for a future where AI and humans collaborate effectively across all areas of work.”

Collaborative project

The project aims to develop an AI coworker called TEAMMAIT (short for “the Trustworthy, Explainable, and Adaptive Monitoring Machine for AI Team”). Rather than functioning as a tool, as many AI systems currently do, TEAMMAIT will act more as a human teammate would, providing constructive feedback and helping mental healthcare workers develop and learn new skills.

“Unlike conventional AI tools that function as mere utilities, an AI teammate is designed to work collaboratively with humans, adapting to their needs and augmenting their capabilities,” Wiese explains. “Our approach is distinctively human-centric, prioritizing the needs and perspectives of mental health professionals… it’s important to recognize that this is a complex domain and interdisciplinary collaboration is necessary to create the most optimal outcomes when it comes to integrating AI into our lives.”

With both technical and human health aspects to the research, the project will leverage an interdisciplinary team of experts spanning clinical psychology, industrial-organizational psychology, human-computer interaction, and information science.

“We need to work closely together to make sure that the system, TEAMMAIT, is useful and usable,” adds Arriaga. “Chris (Wiese) and I are looking at two types of challenges: those associated with the organization, as Chris is an industrial organizational psychology expert — and those associated with the interface, as I am a computer scientist that specializes in human computer interaction.”

Long-term timeline

The project’s long-term timeline reflects the unique challenges that it faces.

“A key challenge is in the development and design of the AI tools themselves,” Wiese says. “They need to be user-friendly, adaptable, and efficient, enhancing the capabilities of mental health workers without adding undue complexity or stress. This involves continuous iteration and feedback from end-users to refine the AI tools, ensuring they meet the real-world needs of mental health professionals.”

The team plans to deploy TEAMMAIT in diverse settings in the fourth year of development, and incorporate data from these early users to create development guidelines for Worker-AI teammates in mental health work, and to create ethical guidelines for developing and using this type of system.

“This will be a crucial phase where we test the efficacy and integration of the AI in real-world scenarios,” Wiese says. “We will assess not just the functional aspects of the AI, such as how well it performs specific tasks, but also how it impacts the work environment, the well-being of the mental health workers, and ultimately, the quality of care provided to patients.”

Assessing the psychological impacts on workers, including how TEAMMAIT affects their day-to-day work, will be crucial to ensuring that TEAMMAIT has a positive impact on healthcare workers’ skills and well-being.

“We’re interested in understanding how mental health clinicians interact with TEAMMAIT and the subsequent impact on their work,” Wiese adds. “How long does it take for clinicians to become comfortable and proficient with TEAMMAIT? How does their engagement with TEAMMAIT change over the year? Do they feel like they are more effective when using TEAMMAIT? We’re really excited to begin answering these questions.”

News Contact

Written by Selena Langner

Contact: Jess Hunt-Ralston

Dec. 20, 2023

A new machine learning method could help engineers detect leaks in underground reservoirs earlier, mitigating risks associated with geological carbon storage (GCS). Further study could advance machine learning capabilities while improving the safety and efficiency of GCS.

The feasibility study by Georgia Tech researchers explores using conditional normalizing flows (CNFs) to convert seismic data into usable information and observable images. This capability could make monitoring underground storage sites more practical and studying the behavior of carbon dioxide plumes easier.

The 2023 Conference on Neural Information Processing Systems (NeurIPS 2023) accepted the group’s paper for presentation. They presented their study on Dec. 16 at the conference’s workshop on Tackling Climate Change with Machine Learning.

“One area where our group excels is that we care about realism in our simulations,” said Professor Felix Herrmann. “We worked on a real-sized setting with the complexities one would experience when working in real-life scenarios to understand the dynamics of carbon dioxide plumes.”

CNFs are generative models that use data to produce images. They can also fill in the blanks, making predictions that complete an image despite missing or noisy data. This ability is well suited to this application because data streaming from GCS reservoirs is often noisy, meaning incomplete, outdated, or unstructured.
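To make the idea of a conditional normalizing flow concrete, the sketch below implements the simplest possible one: a single affine transform in one dimension whose shift and scale depend on a conditioning value `y`. Sampling pushes standard normal noise through the transform, and the change-of-variables formula gives an exact log-density. The conditioner functions `shift` and `log_scale` are hypothetical placeholders; in practice, conditioners are typically learned neural networks, many such layers are stacked, and the condition would be observed data such as seismic measurements. This toy does not attempt any of that.

```python
import math
import random


def shift(y):
    """Hypothetical conditioner: location as a function of the condition y."""
    return 2.0 * y


def log_scale(y):
    """Hypothetical conditioner: log of the scale, keeping the scale positive."""
    return 0.1 * y


def sample(y, rng):
    """Forward pass: z ~ N(0, 1), then x = shift(y) + exp(log_scale(y)) * z."""
    z = rng.gauss(0.0, 1.0)
    return shift(y) + math.exp(log_scale(y)) * z


def log_prob(x, y):
    """Inverse pass plus change of variables:
    z = (x - shift(y)) / scale, log p(x | y) = log N(z; 0, 1) - log_scale(y)."""
    z = (x - shift(y)) * math.exp(-log_scale(y))
    log_base = -0.5 * z * z - 0.5 * math.log(2.0 * math.pi)
    return log_base - log_scale(y)


if __name__ == "__main__":
    rng = random.Random(0)
    y = 1.5  # conditioning value (stand-in for observed data)
    draws = [sample(y, rng) for _ in range(10_000)]
    print("sample mean:", sum(draws) / len(draws), "expected:", shift(y))
    print("log p(3.0 | y):", log_prob(3.0, y))
```

Because the transform is invertible, the same model can both generate samples for a given condition and score how likely an observation is, which is what lets flows represent a distribution of plausible images rather than a single guess.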

In 36 test samples, the group found that CNFs could use seismic data to infer scenarios both with and without leakage. In simulations with leakage, the models generated images that were 96% similar to the ground truth. In cases with no leakage, CNFs produced images that were 97% similar to the ground truth.

This CNF-based method also improves on current techniques, which struggle to provide accurate information on the spatial extent of leakage. Conditioning CNFs on samples that change over time allows them to describe and predict the behavior of carbon dioxide plumes.

This study is part of the group’s broader effort to produce digital twins for seismic monitoring of underground storage. A digital twin is a virtual model of a physical object. Digital twins are commonplace in manufacturing, healthcare, environmental monitoring, and other industries.

“There are very few digital twins in earth sciences, especially based on machine learning,” Herrmann explained. “This paper is just a prelude to building an uncertainty aware digital twin for geological carbon storage.”

Herrmann holds joint appointments in the Schools of Earth and Atmospheric Sciences (EAS), Electrical and Computer Engineering, and Computational Science and Engineering (CSE).

School of EAS Ph.D. student Abhinav Prakash Gahlot is the paper’s first author. Ting-Ying (Rosen) Yu (B.S. ECE 2023) started the research as an undergraduate group member. School of CSE Ph.D. students Huseyin Tuna Erdinc, Rafael Orozco, and Ziyi (Francis) Yin co-authored with Gahlot and Herrmann.

NeurIPS 2023 took place Dec. 10-16 in New Orleans. Occurring annually, it is one of the largest conferences in the world dedicated to machine learning.

Over 130 Georgia Tech researchers presented more than 60 papers and posters at NeurIPS 2023. One-third of CSE’s faculty represented the School at the conference. Along with Herrmann, these faculty included Ümit Çatalyürek, Polo Chau, Bo Dai, Srijan Kumar, Yunan Luo, Anqi Wu, and Chao Zhang.

“In the field of geophysics, inverse problems and statistical solutions of these problems are known, but no one has been able to characterize these statistics in a realistic way,” Herrmann said.

“That’s where these machine learning techniques come into play, and we can do things now that you could never do before.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Nov. 29, 2023

The National Institutes of Health (NIH) has awarded Yunan Luo a grant for more than $1.8 million to use artificial intelligence (AI) to advance protein research.

New AI models produced through the grant will lead to new methods for the design and discovery of functional proteins. This could yield novel drugs and vaccines, personalized treatments against diseases, and other advances in biomedicine.

“This project provides a new paradigm to analyze proteins’ sequence-structure-function relationships using machine learning approaches,” said Luo, an assistant professor in Georgia Tech’s School of Computational Science and Engineering (CSE).

“We will develop new, ready-to-use computational models for domain scientists, like biologists and chemists. They can use our machine learning tools to guide scientific discovery in their research.”

Luo’s proposal builds on datasets made possible by AlphaFold and other recent breakthroughs. His AI algorithms would integrate these datasets and craft new models for practical application.

One of Luo’s goals is to develop machine learning methods that learn statistical representations from the data. These representations reveal relationships between proteins’ sequence, structure, and function, which scientists could then use to characterize how sequence and structure determine a protein’s function.

Next, Luo wants to make accurate and interpretable predictions about protein functions. His plan is to create biology-informed deep learning frameworks. These frameworks could predict a protein’s function from knowledge of its sequence and structure, and they could also account for variables like mutations.

In the end, Luo would have the data and tools to assist in the discovery of functional proteins. He will use these to build a computational platform of AI models, algorithms, and frameworks that ‘invent’ proteins. The platform would determine the sequence and structure needed to give a designed protein its desired functions and characteristics.

“My students play a very important part in this research because they are the driving force behind various aspects of this project at the intersection of computational science and protein biology,” Luo said.

“I think this project provides a unique opportunity to train our students in CSE to learn the real-world challenges facing scientific and engineering problems, and how to integrate computational methods to solve those problems.”

The $1.8 million grant is funded through the Maximizing Investigators’ Research Award (MIRA). The National Institute of General Medical Sciences (NIGMS) manages the MIRA program. NIGMS is one of 27 institutes and centers under NIH.

MIRA is oriented toward launching the research endeavors of early-career faculty. The grant provides researchers with more stability and flexibility through five years of funding. This enhances scientific productivity and improves the chances for important breakthroughs.

Luo becomes the second School of CSE faculty member to receive the MIRA grant. NIH awarded the grant to Xiuwei Zhang in 2021. Zhang is the J.Z. Liang Early-Career Assistant Professor in the School of CSE.

“After NIH, of course, I first thanked my students because they laid the groundwork for what we seek to achieve in our grant proposal,” said Luo.

“I would like to thank my colleague, Xiuwei Zhang, for her mentorship in preparing the proposal. I also thank our school chair, Haesun Park, for her help and support while starting my career.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Nov. 09, 2023

Members of the Georgia Artificial Intelligence in Manufacturing (Georgia AIM) team from the Georgia Institute of Technology met with local partners, manufacturers, and business leaders in Thomasville last week to discuss how investments from the $65 million statewide federal grant can accelerate the transition to automation in manufacturing in South Georgia. The meeting was held at Southern Regional Technical College (SRTC), one of the Georgia AIM partners.

“This grant is an investment in a better and brighter future for communities all across the state including Thomasville,” said Danyelle Larkin, educational outreach manager with the Center for Education Integrating Science, Mathematics, and Computing (CEISMC) at Georgia Tech. “By harnessing the power of AI, we can open up new, better-paying manufacturing jobs while preparing workers and students with the skills they need to succeed in an increasingly high-tech world.”

The meeting highlighted one of the recent developments of the Georgia AIM project: A future lab at Southern Regional Technical College dedicated to manufacturing technology. CEISMC is providing instructional support and curricula, thanks to the program’s expertise in STEM education, while collaborations with other experts at Georgia Tech and the Southwest Georgia community are identifying new technologies and opportunities for jobs in the area.

At the meeting, Aaron Stebner, co-director of Georgia AIM and associate professor of mechanical engineering and materials science and engineering, talked about the potential for AI to revitalize the economy in areas of the country that have struggled for decades.

“The reason a lot of the manufacturers are coming back and growing in the U.S. is because the automation and the AI creates a logistics model that makes it advantageous again to manufacture in the U.S. instead of overseas,” he said. Stebner also talked about how AI is automating many jobs “that humans just don’t want to do anymore and creates more space for the creative jobs that tend to create better internal motivation and higher pay.”

In addition to talking with local manufacturers and touring their facilities, Stebner participated in the Thomasville-Thomas County Chamber Connects panel discussion “Scary Smart: How AI Can Drive Your Business” with Jason Jones, president/CEO of S&L Integrated, and Haile McCollum, founder and creative director of Fountaine Maury. The panel was hosted by Katie Chastan of TiskTask, a local workforce development company that is a partner in the Georgia AIM project.

During the meeting, SRTC announced the creation of a new Precision Machining and Manufacturing Lab on its Thomasville campus, with an anticipated opening in fall 2024. The lab will host two new programs: Precision Machining & Manufacturing and Manufacturing Engineering Technology. The Georgia AIM grant provided $499,000 in funding for the lab, as well as staffing support.

“A lab for precision manufacturing at Southern Regional Technical College breathes innovation into Thomasville’s existing industry, fueling their growth and ensuring they stay at the cutting edge of technology and competitiveness,” said Shelley Zorn, executive director of the Thomasville Payroll Development Authority.

“The result is a stronger industry base and higher paying jobs for Thomas County citizens and the region,” Zorn said. “It is also a wonderful recruiting tool for new advanced manufacturing partners.”

The lab could lead to new jobs in the region that reflect the kinds of roles AI automation can create.

“As we heard from the industries gathered at the table, there is a big need for predictive and prescriptive maintenance from our industries,” added Vic Burke, vice president of academic affairs at Southern Regional Technical College. “Our manufacturers are automating more processes, which means fewer low-paying assembly jobs and more higher paying technician jobs.”

--Randy Trammell, CEISMC Communications

Nov. 09, 2023

Generative AI tools have taken the world by storm. ChatGPT reached 100 million monthly users faster than any internet application in history. The potential benefits of efficiency and productivity gains for knowledge-intensive firms are clear, and companies in industries such as professional services, health care, and finance are investing billions in adopting the technologies.

But the benefits for individual knowledge workers can be less clear. When technology can do many tasks that only humans could do in the past, what does it mean for knowledge workers? Generative AI can and will automate some of the tasks of knowledge workers, but that doesn’t necessarily mean it will replace all of them. Generative AI can also help knowledge workers find more time to do meaningful work, and improve performance and productivity. The difference is in how you use the tools.

In this article, we aim to explain how to do that well: first, by helping employees and managers understand ways that generative AI can support knowledge work, and second, by identifying steps that managers can take to help employees realize the potential benefits.

What Is Knowledge Work?

Knowledge work primarily involves cognitive processing of information to generate value-added outputs. It differs from manual labor in the materials used and the types of conversion processes involved. Knowledge work is typically dependent on advanced training and specialization in specific domains, gained over time through learning and experience. It includes both structured and unstructured tasks. Structured tasks are those with well-defined and well-understood inputs and outputs, as well as prespecified steps for converting inputs to outputs. Examples include payroll processing or scheduling meetings. Unstructured tasks are those where inputs, conversion procedures, or outputs are mostly ill-defined, underspecified, or unknown a priori. Examples include resolving interpersonal conflict, designing a product, or negotiating a salary.

Very few jobs are purely one or the other. Jobs consist of many tasks, some of which are structured and others of which are unstructured. Some tasks are necessary but repetitive. Some are more creative or interesting. Some can be done alone, while others require working with other people. Some are common to everything the worker does, while others happen only for exceptions. As a knowledge worker, your job, then, is to manage this complex set of tasks to achieve your goals.

Computers have traditionally been good at performing structured tasks, but there are many tasks that only humans can do. Generative AI is changing the game, moving the boundaries of what computers can do and shrinking the sphere of tasks that remain as purely human activity. While it can be worrisome to think about generative AI encroaching on knowledge work, we believe that the benefits can far outweigh the costs for most knowledge workers. But realizing the benefits requires taking action now to learn how to leverage generative AI in support of knowledge work.

Continue reading: How Generative AI Will Transform Knowledge Work

Reprinted from the Harvard Business Review, November 7, 2023.

- Maryam Alavi is the Elizabeth D. & Thomas M. Holder Chair & Professor of IT Management, Scheller College of Business, Georgia Institute of Technology.

- George Westerman is a Senior Lecturer at MIT Sloan School of Management and founder of the Global Opportunity Forum in MIT’s Office of Open Learning.

News Contact

Lorrie Burroughs