Oct. 16, 2024

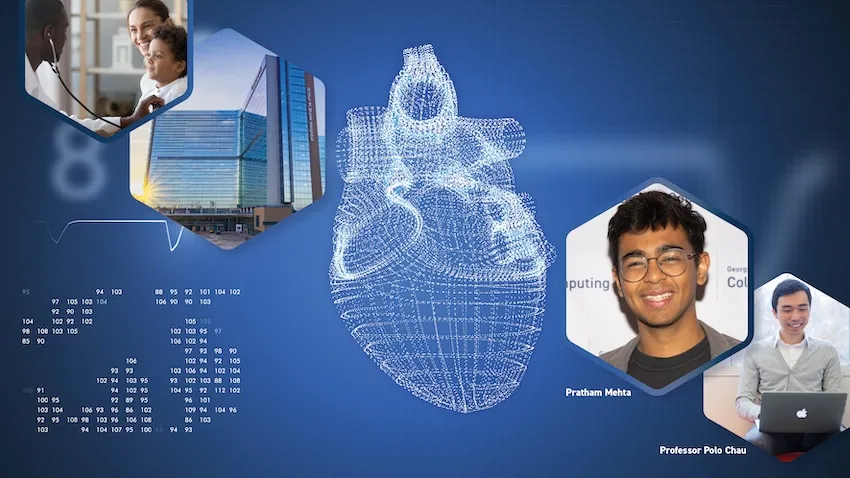

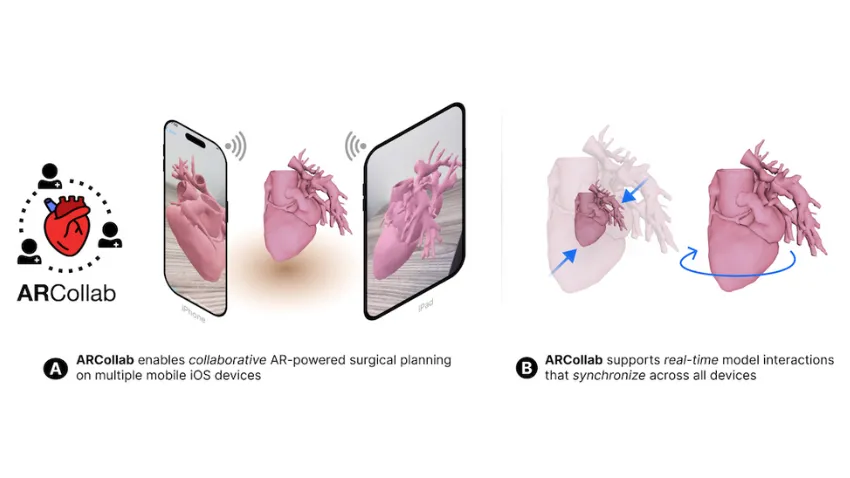

A new surgery planning tool powered by augmented reality (AR) is in development for doctors who need closer collaboration when planning heart operations. Promising results from a recent usability test have moved the platform one step closer to everyday use in hospitals worldwide.

Georgia Tech researchers partnered with medical experts from Children’s Healthcare of Atlanta (CHOA) to develop and test ARCollab. The iOS-based app uses advanced AR technologies to let doctors collaborate and interact with a patient’s 3D heart model when planning surgeries.

The usability evaluation demonstrates the app’s effectiveness, finding that ARCollab is easy to use and understand, fosters collaboration, and improves surgical planning.

“This tool is a step toward easier collaborative surgical planning. ARCollab could reduce the reliance on physical heart models, saving hours and even days of time while maintaining the collaborative nature of surgical planning,” said M.S. student Pratham Mehta, the app’s lead researcher.

“Not only can it benefit doctors when planning for surgery, it may also serve as a teaching tool to explain heart deformities and problems to patients.”

Two cardiologists and three cardiothoracic surgeons from CHOA tested ARCollab. The two-day study ended with the doctors taking a 14-question survey assessing the app’s usability. The survey also solicited general feedback and asked participants to name the app’s top features.

The Georgia Tech group determined from the open-ended feedback that:

- ARCollab enables new collaboration capabilities that are easy to use and facilitate surgical planning.

- Anchoring the model to a physical space is important for better interaction.

- Portability and real-time interaction are crucial for collaborative surgical planning.

Users rated each of the 14 questions on a 7-point Likert scale, with one being “strongly disagree” and seven being “strongly agree.” The 14 questions were organized into five categories: overall, multi-user, model viewing, model slicing, and saving and loading models.

The multi-user category attained the highest rating with an average of 6.65. This included a unanimous 7.0 rating that it was easy to identify who was controlling the heart model in ARCollab. The scores also showed it was easy for users to connect with devices, switch between viewing and slicing, and view other users’ interactions.

The model slicing category received the lowest, but still strong, average of 5.5. These questions assessed how easy the finger gestures were to use and understand, and how useful it was to toggle the slice direction.

Based on feedback, the researchers will explore adding support for remote collaboration. This would assist doctors in collaborating when not in a shared physical space. Another improvement is extending the save feature to support multiple states.

“The surgeons and cardiologists found it extremely beneficial for multiple people to be able to view the model and collaboratively interact with it in real-time,” Mehta said.

The user study took place in a CHOA classroom. CHOA also provided a 3D heart model for the test using anonymous medical imaging data. Georgia Tech’s Institutional Review Board (IRB) approved the study and the group collected data in accordance with Institute policies.

The five test participants regularly perform cardiovascular surgical procedures and are employed by CHOA.

The Georgia Tech group provided each participant with an iPad Pro with the latest iOS version and the ARCollab app installed. Using commercial devices and software aligns with the group’s intention to make the tool universally available and deployable.

“We plan to continue iterating ARCollab based on the feedback from the users,” Mehta said.

“The participants suggested the addition of a ‘distance collaboration’ mode, enabling doctors to collaborate even if they are not in the same physical environment. This allows them to facilitate surgical planning sessions from home or otherwise.”

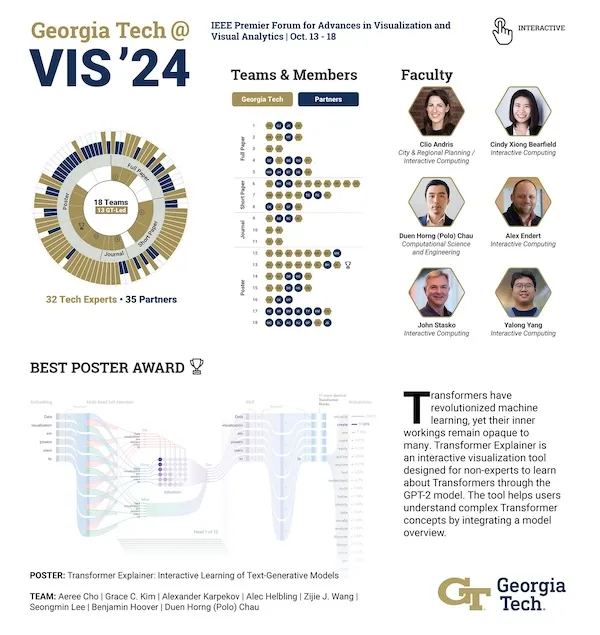

The Georgia Tech researchers are presenting ARCollab and the user study results at IEEE VIS 2024, the Institute of Electrical and Electronics Engineers (IEEE) visualization conference.

IEEE VIS is the world’s most prestigious conference for visualization research and the second-highest rated conference for computer graphics. It takes place virtually Oct. 13-18, moved from its venue in St. Pete Beach, Florida, due to Hurricane Milton.

The ARCollab research group's presentation at IEEE VIS comes months after they shared their work at the Conference on Human Factors in Computing Systems (CHI 2024).

Undergraduate student Rahul Narayanan and alumni Harsha Karanth (M.S. CS 2024) and Haoyang (Alex) Yang (CS 2022, M.S. CS 2023) co-authored the paper with Mehta. They study under Polo Chau, a professor in the School of Computational Science and Engineering.

The Georgia Tech group partnered with Dr. Timothy Slesnick and Dr. Fawwaz Shaw from CHOA on ARCollab’s development and user testing.

"I'm grateful for these opportunities since I get to showcase the team's hard work," Mehta said.

“I can meet other like-minded researchers and students who share these interests in visualization and human-computer interaction. There is no better form of learning.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Aug. 30, 2024

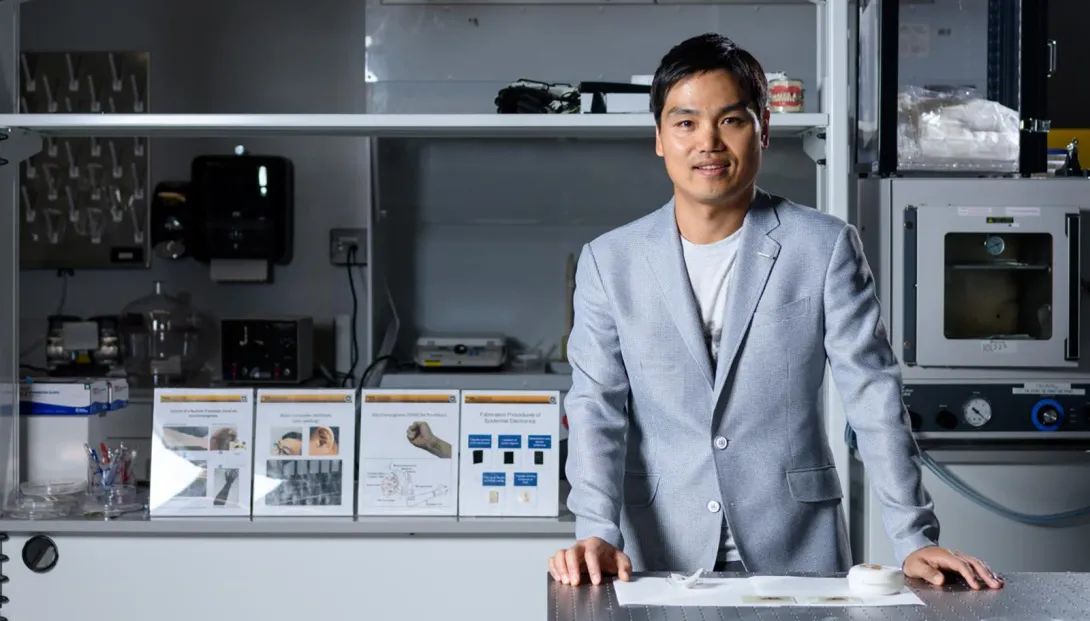

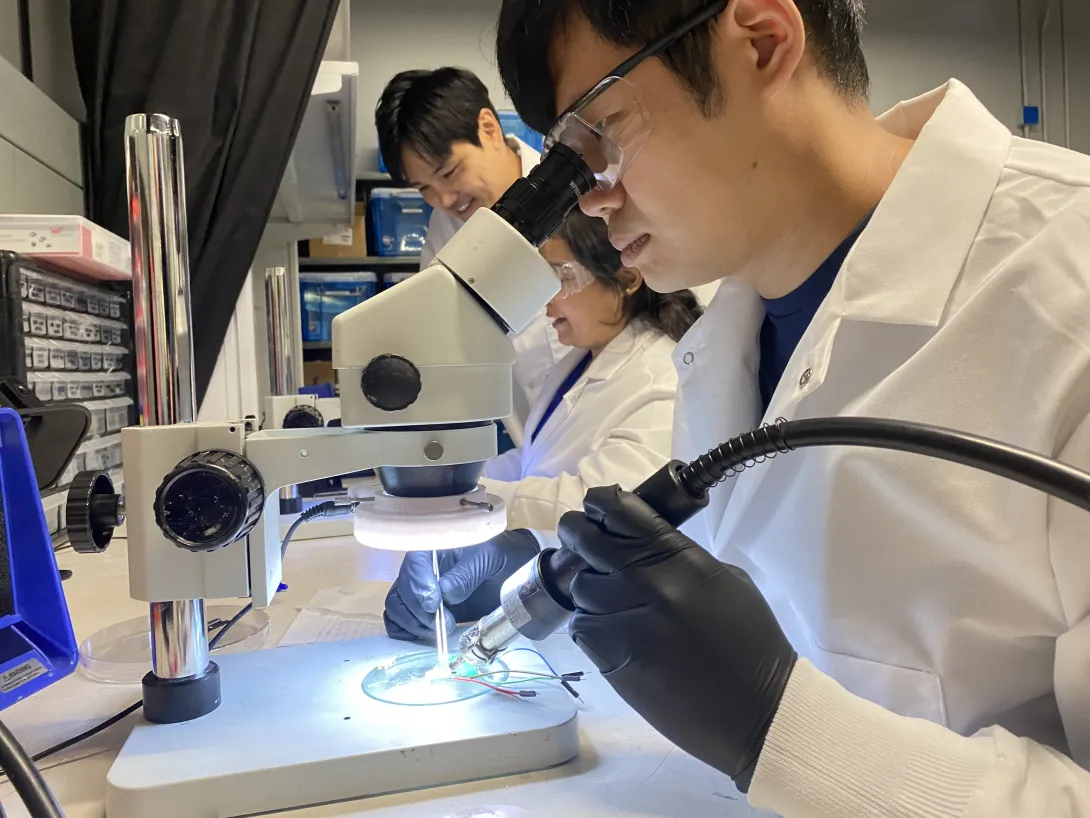

Georgia Tech researcher W. Hong Yeo has been awarded a $3 million grant to help develop a new generation of engineers and scientists in the field of sustainable medical devices.

“The workforce that will emerge from this program will tackle a global challenge through sustainable innovations in device design and manufacturing,” said Yeo, Woodruff Faculty Fellow and associate professor in the George W. Woodruff School of Mechanical Engineering and the Wallace H. Coulter Department of Biomedical Engineering at Georgia Tech and Emory University.

The funding, from the National Science Foundation (NSF) Research Training (NRT) program, will address the environmental impacts resulting from the mass production of medical devices, including the increase in material waste and greenhouse gas emissions.

Under Yeo’s leadership, the Georgia Tech team comprises multidisciplinary faculty: Andrés García (bioengineering), HyunJoo Oh (industrial design and interactive computing), Lewis Wheaton (biology), and Josiah Hester (sustainable computing). Together, they’ll train 100 graduate students, including 25 NSF-funded trainees, who will develop reusable, reliable medical devices for a range of uses.

“We plan to educate students on how to develop medical devices using biocompatible and biodegradable materials and green manufacturing processes using low-cost printing technologies,” said Yeo. “These wearable and implantable devices will enhance disease diagnosis, therapeutics, rehabilitation, and health monitoring.”

Students in the program will be challenged by a comprehensive, multidisciplinary curriculum, with deep dives into bioengineering, public policy, physiology, industrial design, interactive computing, and medicine. And they’ll get real-world experience through collaborations with clinicians and medical product developers, working to create devices that meet the needs of patients and care providers.

The Georgia Tech NRT program aims to attract students from various backgrounds, fostering a diverse, inclusive environment in the classroom — and ultimately in the workforce.

The program will also introduce a new Ph.D. concentration in smart medical devices as part of Georgia Tech's bioengineering program, and a new M.S. program in the sustainable development of medical devices. Yeo also envisions an academic impact that extends beyond the Tech campus.

“Collectively, this NRT program's curriculum, combining methods from multiple domains, will help establish best practices in many higher education institutions for developing reliable and personalized medical devices for healthcare,” he said. “We’d like to broaden students' perspectives, move past the current technology-first mindset, and reflect the needs of patients and healthcare providers through sustainable technological solutions.”

News Contact

Jerry Grillo

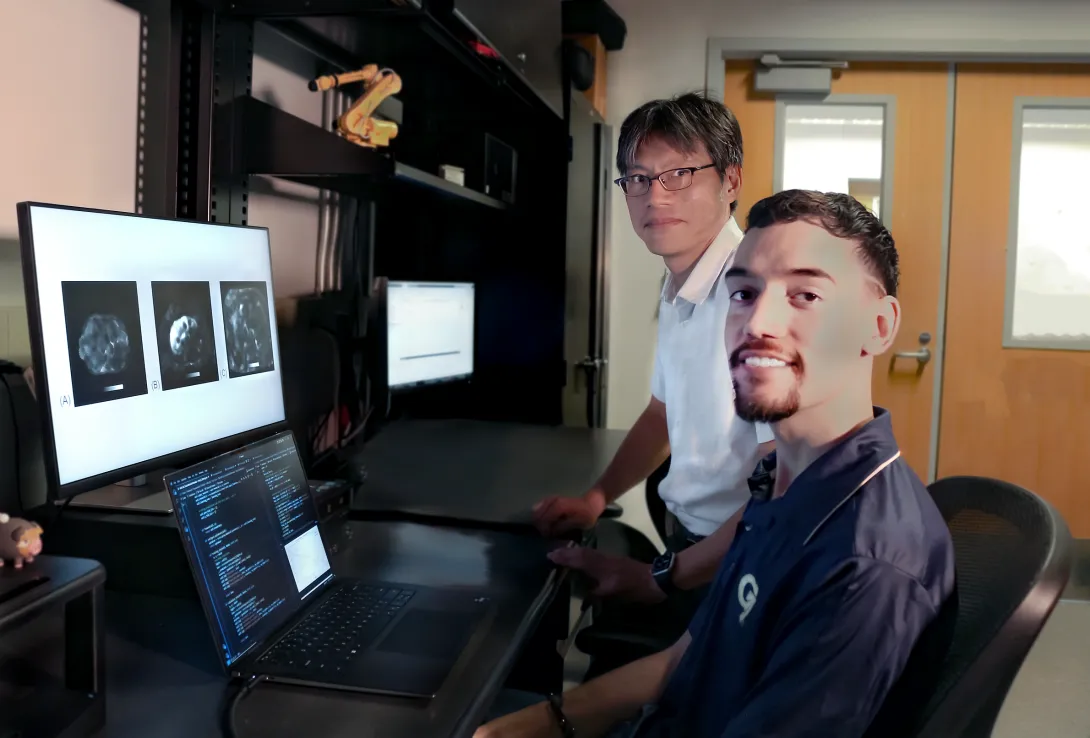

Jul. 15, 2024

Hepatic, or liver, disease affects more than 100 million people in the U.S. About 4.5 million adults (1.8%) have been diagnosed with liver disease, but it is estimated that between 80 and 100 million adults in the U.S. have undiagnosed fatty liver disease in varying stages. Over time, undiagnosed and untreated hepatic diseases can lead to cirrhosis, a severe scarring of the liver that cannot be reversed.

Most hepatic diseases are chronic conditions that will be present over the life of the patient, but early detection improves overall health and the ability to manage specific conditions over time. Additionally, assessing patients over time allows for effective treatments to be adjusted as necessary. The standard protocol for diagnosis, as well as follow-up tissue assessment, is a biopsy after the return of an abnormal blood test, but biopsies are time-consuming and pose risks for the patient. Several non-invasive imaging techniques have been developed to assess the stiffness of liver tissue, an indication of scarring, including magnetic resonance elastography (MRE).

MRE combines elements of ultrasound and MRI to create a visual map showing gradients of stiffness throughout the liver and is increasingly used to diagnose hepatic issues. MRE exams, however, can fail for many reasons, including patient motion, patient physiology, imaging issues, and mechanical issues such as improper wave generation or propagation in the liver. Determining the success of MRE exams depends on visual inspection by technologists and radiologists. With increasing work demands and workforce shortages, providing an accurate, automated way to classify image quality will create a streamlined approach and reduce the need for repeat scans.

Professor Jun Ueda in the George W. Woodruff School of Mechanical Engineering and robotics Ph.D. student Heriberto Nieves, working with a team from the Icahn School of Medicine at Mount Sinai, have successfully applied deep learning techniques for accurate, automated quality control image assessment. The research, “Deep Learning-Enabled Automated Quality Control for Liver MR Elastography: Initial Results,” was published in the Journal of Magnetic Resonance Imaging.

Using five deep learning models, the best-performing ensemble achieved 92% accuracy on retrospective MRE images of patients with varied liver stiffnesses. The team also returned the analyzed data within seconds. This rapid image quality feedback allows the technician to focus on adjusting hardware or patient orientation for a re-scan in a single session, rather than requiring patients to return for costly and time-consuming re-scans due to low-quality initial images.
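As a rough illustration of how an ensemble can turn several models' outputs into one quality decision, the sketch below averages per-model probabilities (soft voting). The article does not say how the five models were combined, so the voting rule, the function name `ensemble_predict`, the 0.5 threshold, and the example probabilities are all assumptions made for illustration, not the team's method or data.

```python
from statistics import mean


def ensemble_predict(model_probs, threshold=0.5):
    """Soft voting: average each model's probability that an image is
    of diagnostic quality, then compare the average to a threshold.

    The voting rule and threshold are illustrative assumptions.
    """
    avg = mean(model_probs)
    label = "diagnostic" if avg >= threshold else "non-diagnostic"
    return label, avg


# Made-up probabilities from five hypothetical models for a single image.
example_probs = [0.91, 0.84, 0.77, 0.95, 0.62]
label, avg = ensemble_predict(example_probs)
print(label, round(avg, 3))
```

Averaging probabilities rather than counting hard votes lets a confident model outweigh an uncertain one, which is one common reason ensembles are built this way.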

This new research is a step toward streamlining the review pipeline for MRE using deep learning techniques, which remain relatively unexplored for MRE compared with other medical imaging modalities. The research also provides a helpful baseline for future avenues of inquiry, such as assessing the health of the spleen or kidneys. It may also be applied to automated image quality control for monitoring non-hepatic conditions, such as breast cancer or muscular dystrophy, in which tissue stiffness is an indicator of initial health and disease progression. Ueda, Nieves, and their team hope to test these models on Siemens Healthineers magnetic resonance scanners within the next year.

Publication

Nieves-Vazquez, H.A., Ozkaya, E., Meinhold, W., Geahchan, A., Bane, O., Ueda, J. and Taouli, B. (2024), Deep Learning-Enabled Automated Quality Control for Liver MR Elastography: Initial Results. J Magn Reson Imaging. https://doi.org/10.1002/jmri.29490

Prior Work

Robotically Precise Diagnostics and Therapeutics for Degenerative Disc Disorder

Related Material

Editorial for “Deep Learning-Enabled Automated Quality Control for Liver MR Elastography: Initial Results”

News Contact

Christa M. Ernst

Research Communications Program Manager

Topic Expertise: Robotics, Data Sciences, Semiconductor Design & Fab

Jul. 11, 2024

A new machine learning (ML) model created at Georgia Tech is helping neuroscientists better understand communications between brain regions. Insights from the model could lead to personalized medicine, better brain-computer interfaces, and advances in neurotechnology.

The Georgia Tech group combined two current ML methods into their hybrid model called MRM-GP (Multi-Region Markovian Gaussian Process).

Neuroscientists who use MRM-GP learn more about communications and interactions within the brain. This in turn improves understanding of brain functions and disorders.

“Clinically, MRM-GP could enhance diagnostic tools and treatment monitoring by identifying and analyzing neural activity patterns linked to various brain disorders,” said Weihan Li, the study’s lead researcher.

“Neuroscientists can leverage MRM-GP for its robust modeling capabilities and efficiency in handling large-scale brain data.”

MRM-GP reveals where and how communication travels across brain regions.

The group tested MRM-GP using spike trains and local field potential recordings, two kinds of measurements of brain activity. These tests produced representations that illustrated directional flow of communication among brain regions.

Experiments also disentangled brainwaves, called oscillatory interactions, into organized frequency bands. MRM-GP’s hybrid configuration allows it to model frequencies and phase delays within the latent space of neural recordings.

MRM-GP combines the strengths of two existing methods: the Gaussian process (GP) and linear dynamical systems (LDS). The researchers say that MRM-GP is essentially an LDS that mirrors a GP.

LDS is a computationally efficient and cost-effective method, but it lacks the power to produce representations of the brain. GP-based approaches boost LDS's power, facilitating the discovery of variables in frequency bands and communication directions in the brain.

Converting GP outputs into an LDS is a difficult task in ML. The group overcame this challenge by instilling separability in the model’s multi-region kernel. Separability establishes a connection between the kernel and LDS while modeling communication between brain regions.
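The general idea of writing a GP as an LDS can be seen in a textbook one-dimensional case, which is far simpler than the multi-region kernel described here and is not the authors' model. A GP with an exponential (Ornstein-Uhlenbeck) kernel, sampled at evenly spaced times, has the same covariance as a first-order linear recursion with Gaussian noise. The sketch below checks that numerically; the function names, lengthscale, and step size are illustrative assumptions.

```python
import math


def ou_kernel(tau, variance=1.0, lengthscale=2.0):
    """Exponential (Ornstein-Uhlenbeck) GP kernel: k(tau) = v * exp(-|tau| / l)."""
    return variance * math.exp(-abs(tau) / lengthscale)


def lds_covariance(n_steps, dt, variance=1.0, lengthscale=2.0):
    """Covariance matrix implied by the linear dynamical system
    x[t+1] = a * x[t] + w[t],  w[t] ~ N(0, q),  x[0] ~ N(0, variance),
    with a and q chosen to match the kernel above.
    """
    a = math.exp(-dt / lengthscale)
    q = variance * (1.0 - a * a)
    # Marginal variance of each state, propagated through the recursion.
    var = [variance]
    for _ in range(n_steps - 1):
        var.append(a * a * var[-1] + q)
    # For t >= s, Cov(x[s], x[t]) = a^(t - s) * Var(x[s]).
    return [
        [(a ** abs(t - s)) * var[min(s, t)] for t in range(n_steps)]
        for s in range(n_steps)
    ]


dt = 0.5
n = 6
cov = lds_covariance(n, dt)
max_gap = max(
    abs(cov[s][t] - ou_kernel((t - s) * dt)) for s in range(n) for t in range(n)
)
print("largest difference between LDS and GP covariance:", max_gap)
```

The practical appeal of the LDS form is that inference can run step by step through time instead of working with one large covariance matrix over all time points.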

Through this approach, MRM-GP overcomes two challenges facing both neuroscience and ML fields. The model helps solve the mystery of interregional brain communication. It does so by bridging a gap between GP and LDS, a feat not previously accomplished in ML.

“The introduction of MRM-GP provides a useful tool to model and understand complex brain region communications,” said Li, a Ph.D. student in the School of Computational Science and Engineering (CSE).

“This marks a significant advancement in both neuroscience and machine learning.”

Fellow doctoral students Chengrui Li and Yule Wang co-authored the paper with Li. School of CSE Assistant Professor Anqi Wu advises the group.

Each MRM-GP student pursues a different Ph.D. degree offered by the School of CSE. W. Li studies computer science, C. Li studies computational science and engineering, and Wang studies machine learning. The school also offers Ph.D. degrees in bioinformatics and bioengineering.

Wu is a 2023 recipient of the Sloan Research Fellowship for neuroscience research. Her work straddles two of the School’s five research areas: machine learning and computational bioscience.

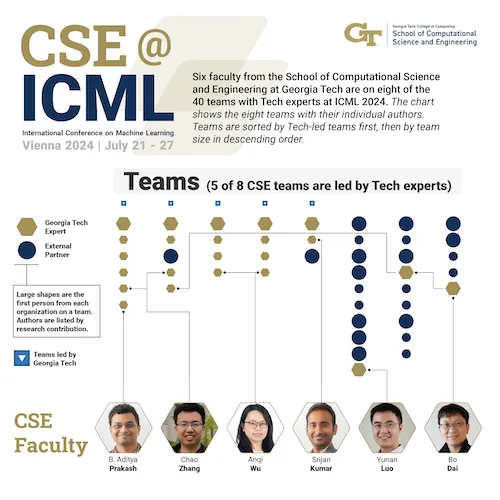

MRM-GP will be featured at the world’s top conference on ML and artificial intelligence. The group will share their work at the International Conference on Machine Learning (ICML 2024), which will be held July 21-27 in Vienna.

ICML 2024 also accepted for presentation a second paper from Wu’s group intersecting neuroscience and ML. The same authors will present A Differentiable Partially Observable Generalized Linear Model with Forward-Backward Message Passing.

Twenty-four Georgia Tech faculty from the Colleges of Computing and Engineering will present 40 papers at ICML 2024. Wu is one of six faculty representing the School of CSE who will present eight total papers.

The group’s ICML 2024 presentations exemplify Georgia Tech’s focus on neuroscience research as a strategic initiative.

Wu is an affiliated faculty member with the Neuro Next Initiative, a new interdisciplinary program at Georgia Tech that will lead research in neuroscience, neurotechnology, and society. The University System of Georgia Board of Regents recently approved a new neuroscience and neurotechnology Ph.D. program at Georgia Tech.

“Presenting papers at international conferences like ICML is crucial for our group to gain recognition and visibility, facilitates networking with other researchers and industry professionals, and offers valuable feedback for improving our work,” Wu said.

“It allows us to share our findings, stay updated on the latest developments in the field, and enhance our professional development and public speaking skills.”

Visit https://sites.gatech.edu/research/icml-2024 for news and coverage of Georgia Tech research presented at ICML 2024.

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Jun. 12, 2024

Adoptive T-cell therapy has revolutionized medicine. A patient’s T-cells, a type of white blood cell that is part of the body’s immune system, are extracted and modified in a lab and then infused back into the body to seek and destroy infection or cancer cells.

Now Georgia Tech bioengineer Ankur Singh and his research team have developed a method to improve this pioneering immunotherapy.

Their solution involves using nanowires to deliver therapeutic miRNA to T-cells. This new modification process retains the cells’ naïve state, which means they’ll be even better disease fighters when they’re infused back into a patient.

“By delivering miRNA in naïve T cells, we have basically prepared an infantry, ready to deploy,” Singh said. “And when these naïve cells are stimulated and activated in the presence of disease, it’s like they’ve been converted into samurais.”

Lean and Mean

Currently in adoptive T-cell therapy, the cells become stimulated and preactivated in the lab when they are modified, losing their naïve state. Singh’s new technique overcomes this limitation. The approach is described in a new study published in the journal Nature Nanotechnology.

“Naïve T-cells are more useful for immunotherapy because they have not yet been preactivated, which means they can be more easily manipulated to adopt desired therapeutic functions,” said Singh, the Carl Ring Family Professor in the Woodruff School of Mechanical Engineering and the Wallace H. Coulter Department of Biomedical Engineering.

The raw recruits of the immune system, naïve T-cells are white blood cells that haven’t been tested in battle yet. But these cellular recruits are robust, impressionable, and adaptable — ready and eager for programming.

“This process creates a well-programmed naïve T-cell ideal for enhancing immune responses against specific targets, such as tumors or pathogens,” said Singh.

The precise programming that naïve T-cells receive lays the foundation for a more successful disease-fighting future than preactivated cells can offer.

Giving Fighter Cells a Boost

Within the body, naïve T-cells become activated when they receive a danger signal from antigens. Antigens are parts of disease-causing pathogens, and they signal T-cells to activate the immune system.

Adoptive T-cell therapy is used against aggressive diseases that overwhelm the body’s defense system. Scientists give the patient’s T-cells a therapeutic boost in the lab, loading them up with additional medicine and chemically preactivating them.

That’s when the cells lose their naïve state. When infused back into the patient, these modified T-cells are an effective infantry against disease — but they are prone to becoming exhausted. They aren’t samurai. Naïve T-cells, though, being the young, programmable recruits that they are, could be.

The question for Singh and his team was: How do we give cells that therapeutic boost without preactivating them, thereby losing that pristine, highly suggestible naïve state? Their answer: Nanowires.

NanoPrecision: The Pointed Solution

Singh wanted to enhance naïve T-cells with a dose of miRNA. miRNA is a molecule that, when used as a therapeutic, works as a kind of volume knob for genes, turning their activity up or down to keep infection and cancer in check. The miRNA for this study was developed in part by the study’s co-author, Andrew Grimson of Cornell University.

“If we could find a way to forcibly enter the cells without damaging them, we could achieve our goal to deliver the miRNA into naïve T cells without preactivating them,” Singh explained.

Traditional modification in the lab involves binding immune receptors to T-cells, enabling the uptake of miRNA or any genetic material (which results in loss of the naïve state). “But nanowires do not engage receptors and thus do not activate cells, so they retain their naïve state,” Singh said.

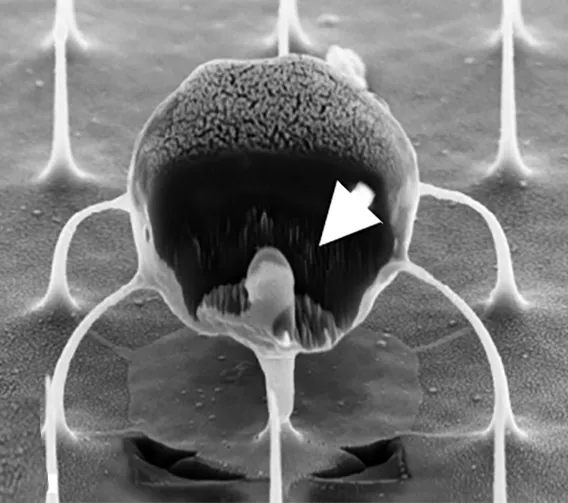

The nanowires, silicon wafers made with specialized tools at Georgia Tech’s Institute for Electronics and Nanotechnology, form a fine needle bed. Cells are placed on the nanowires, which easily penetrate the cells and deliver their miRNA over several hours. Then the cells with miRNA are flushed out from the tops of the nanowires, activated, and eventually infused back into the patient. These programmed cells can kill enemies efficiently over an extended time period.

“We believe this approach will be a real gamechanger for adoptive immunotherapies, because we now have the ability to produce T-cells with predictable fates,” says Brian Rudd, a professor of immunology at Cornell University, and co-senior author of the study with Singh.

The researchers tested their work in two separate infectious disease animal models at Cornell for this study, and Singh described the results as “a robust performance in infection control.”

In the next phase of study, the researchers will up the ante, moving from infectious disease to testing their cellular super soldiers against cancer and working toward translation to the clinical setting. New funding from the Georgia Clinical & Translational Science Alliance is supporting Singh’s research.

CITATION: Kristel J. Yee Mon, Sungwoong Kim, Zhonghao Dai, Jessica D. West, Hongya Zhu, Ritika Jain, Andrew Grimson, Brian D. Rudd, Ankur Singh. “Functionalized nanowires for miRNA-mediated therapeutic programming of naïve T cells,” Nature Nanotechnology.

FUNDING: Curci Foundation, NSF (EEC-1648035, ECCS-2025462, ECCS-1542081), NIH (5R01AI132738-06, 1R01CA266052-01, 1R01CA238745-01A1, U01CA280984-01, R01AI110613 and U01AI131348).

News Contact

Jerry Grillo

May 15, 2024

Georgia Tech researchers say non-English speakers shouldn’t rely on chatbots like ChatGPT to provide valuable healthcare advice.

A team of researchers from the College of Computing at Georgia Tech has developed a framework for assessing the capabilities of large language models (LLMs).

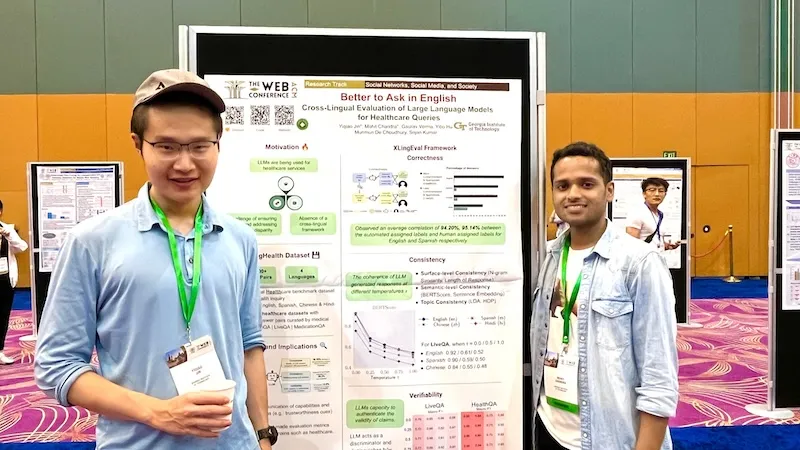

Ph.D. students Mohit Chandra and Yiqiao (Ahren) Jin are the co-lead authors of the paper Better to Ask in English: Cross-Lingual Evaluation of Large Language Models for Healthcare Queries.

Their paper’s findings reveal a gap between LLMs and their ability to answer health-related questions. Chandra and Jin point out the limitations of LLMs for users and developers but also highlight their potential.

Their XLingEval framework cautions non-English speakers against using chatbots as alternatives to doctors for advice. However, models can improve by deepening the data pool with multilingual source material such as their proposed XLingHealth benchmark.

“For users, our research supports what ChatGPT’s website already states: chatbots make a lot of mistakes, so we should not rely on them for critical decision-making or for information that requires high accuracy,” Jin said.

“Since we observed this language disparity in their performance, LLM developers should focus on improving accuracy, correctness, consistency, and reliability in other languages,” Jin said.

Using XLingEval, the researchers found chatbots are less accurate in Spanish, Chinese, and Hindi compared to English. By focusing on correctness, consistency, and verifiability, they discovered:

- Correctness decreased by 18% when the same questions were asked in Spanish, Chinese, and Hindi.

- Answers in non-English languages were 29% less consistent than their English counterparts.

- Non-English responses were 13% less verifiable overall.

XLingHealth contains question-answer pairs that chatbots can reference, which the group hopes will spark improvement within LLMs.

The HealthQA dataset uses specialized healthcare articles from the popular healthcare website Patient. It includes 1,134 health-related question-answer pairs as excerpts from original articles.

LiveQA is a second dataset containing 246 question-answer pairs constructed from frequently asked questions (FAQs) platforms associated with the U.S. National Institutes of Health (NIH).

For drug-related questions, the group built a MedicationQA component. This dataset contains 690 questions extracted from anonymous consumer queries submitted to MedlinePlus. The answers are sourced from medical references, such as MedlinePlus and DailyMed.

In their tests, the researchers asked over 2,000 medical-related questions to ChatGPT-3.5 and MedAlpaca. MedAlpaca is a healthcare question-answer chatbot trained on medical literature. Yet, more than 67% of its responses to non-English questions were irrelevant or contradictory.
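The “over 2,000” figure is consistent with the dataset sizes reported above, assuming it refers to the three XLingHealth datasets combined (the article does not state this explicitly). A quick check:

```python
# Question-answer pair counts as reported in the article.
dataset_sizes = {
    "HealthQA": 1134,
    "LiveQA": 246,
    "MedicationQA": 690,
}

total = sum(dataset_sizes.values())
print(total)  # 2070
```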

“We see far worse performance in the case of MedAlpaca than ChatGPT,” Chandra said.

“The majority of the data for MedAlpaca is in English, so it struggled to answer queries in non-English languages. GPT also struggled, but it performed much better than MedAlpaca because it had some sort of training data in other languages.”

Ph.D. student Gaurav Verma and postdoctoral researcher Yibo Hu co-authored the paper.

Jin and Verma study under Srijan Kumar, an assistant professor in the School of Computational Science and Engineering, and Hu is a postdoc in Kumar’s lab. Chandra is advised by Munmun De Choudhury, an associate professor in the School of Interactive Computing.

The team will present their paper at The Web Conference, occurring May 13-17 in Singapore. The annual conference focuses on the future direction of the internet. The group’s presentation is a fitting match, considering the conference's location.

English and Chinese are the most common languages in Singapore. The group tested Spanish, Chinese, and Hindi because they are the world’s most spoken languages after English. Personal curiosity and background played a part in inspiring the study.

“ChatGPT was very popular when it launched in 2022, especially for us computer science students who are always exploring new technology,” said Jin. “Non-native English speakers, like Mohit and I, noticed early on that chatbots underperformed in our native languages.”

School of Interactive Computing communications officer Nathan Deen and School of Computational Science and Engineering communications officer Bryant Wine contributed to this report.

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Nathan Deen, Communications Officer

ndeen6@cc.gatech.edu

May 14, 2024

The call from his mom is still vivid 20 years later. Moments this big and this devastating can define lives, and for Hong Yeo, today a Georgia Tech mechanical engineer, this call certainly did. Yeo was a 21-year-old in college studying car design when his mom called to tell him his father had died in his sleep. A heart attack claimed the life of the 49-year-old high school English teacher who had no history of heart trouble and no signs of his growing health threat. For the family, it was a crushing blow that altered each of their paths.

“It was an uncertain time for all of us,” said Yeo. “This loss changed my focus.”

For Yeo, thoughts and dreams of designing cars for Hyundai in Korea turned instead toward medicine. The shock of his father going from no signs of illness to gone forever developed into a quest for medical answers that might keep other families from experiencing the pain and loss his family did, or at least make it less likely to happen.

Yeo’s own research and schooling in college pointed out a big problem when it comes to issues with sleep and how our bodies’ systems perform — data. He became determined to invent a way to give medical doctors better information that would allow them to spot a problem like his father’s before it became life-threatening.

His answer: a type of wearable sleep data system. Now very close to being commercially available, Yeo’s device comes after years of working on the materials and electronics for an easy-to-wear, comfortable mask that can gather data about sleep over multiple days or even weeks, allowing doctors to catch sporadic heart problems or other issues. Different from some of the bulky devices with straps and cords currently available for at-home heart monitoring, it offers the bonuses of ease of use and comfort, ensuring little to no alteration to users’ bedtime routine or wear. This means researchers can collect data from sleep patterns that are as close to normal sleep as possible.

“Most of the time now, gathering sleep data means the patient must come to a lab or hospital for sleep monitoring. Of course, it’s less comfortable than home, and the devices patients must wear make it even less so. Also, the process is expensive, so it’s rare to get multiple nights of data,” says Audrey Duarte, University of Texas human memory researcher.

Duarte has been working with Yeo on this system for more than 10 years. She says there are so many mental and physical health outcomes tied to sleep that good, long-term data has the potential to have tremendous impact.

“The results we’ve seen are incredibly encouraging, related to many things —from heart issues to areas I study more closely like memory and Alzheimer’s,” said Duarte.

Yeo’s device may not have caught the arrhythmia that caused his father’s heart attack, but nights or weeks of data would have made effective medical intervention much more likely.

Inspired by his own family’s loss, Yeo’s life’s work has become a tool of hope for others.

May 06, 2024

Cardiologists and surgeons could soon have a new mobile augmented reality (AR) tool to improve collaboration in surgical planning.

ARCollab is an iOS AR application designed for doctors to interact with patient-specific 3D heart models in a shared environment. It is the first surgical planning tool that uses multi-user mobile AR in iOS.

The application’s collaborative feature overcomes limitations in traditional surgical modeling and planning methods. This offers patients better, personalized care from doctors who plan and collaborate with the tool.

Georgia Tech researchers partnered with Children’s Healthcare of Atlanta (CHOA) in ARCollab’s development. Pratham Mehta, a computer science major, led the group’s research.

“We have conducted two trips to CHOA for usability evaluations with cardiologists and surgeons. The overall feedback from ARCollab users has been positive,” Mehta said.

“They all enjoyed experimenting with it and collaborating with other users. They also felt like it had the potential to be useful in surgical planning.”

ARCollab’s collaborative environment is the tool’s most novel feature. It allows surgical teams to study and plan together in a virtual workspace, regardless of location.

ARCollab supports a toolbox of features for doctors to inspect and interact with their patients' AR heart models. With a few finger gestures, users can scale and rotate, “slice” into the model, and modify a slicing plane to view omnidirectional cross-sections of the heart.

Developing ARCollab on iOS serves two purposes. First, it streamlines deployment and accessibility by making the app available on the iOS App Store and Apple devices. Second, building ARCollab on Apple’s peer-to-peer network framework ensures the functionality of the AR components. It also lessens the learning curve, especially for experienced AR users.

ARCollab overcomes the drawbacks of the traditional practice of planning surgeries with physical heart models. Producing physical models is time-consuming, resource-intensive, and irreversible compared to digital models. It is also difficult for surgical teams to plan together since they are limited to studying a single physical model.

Digital and AR modeling is growing as an alternative to physical models. CardiacAR is one such tool the group has already created.

However, digital platforms lack multi-user features essential for surgical teams to collaborate during planning. ARCollab’s multi-user workspace advances the technology’s potential as a mass replacement for physical modeling.

“Over the past year and a half, we have been working on incorporating collaboration into our prior work with CardiacAR,” Mehta said.

“This involved completely changing the codebase, rebuilding the entire app and its features from the ground up in a newer AR framework that was better suited for collaboration and future development.”

Its interactive and visualization features, along with its novelty and innovation, led the Conference on Human Factors in Computing Systems (CHI 2024) to accept ARCollab for presentation. The conference occurs May 11-16 in Honolulu.

CHI is considered the most prestigious conference for human-computer interaction and one of the top-ranked conferences in computer science.

M.S. student Harsha Karanth and alumnus Alex Yang (CS 2022, M.S. CS 2023) co-authored the paper with Mehta. They study under Polo Chau, an associate professor in the School of Computational Science and Engineering.

The Georgia Tech group partnered with Timothy Slesnick and Fawwaz Shaw from CHOA on ARCollab’s development.

“Working with the doctors and having them test out versions of our application and give us feedback has been the most important part of the collaboration with CHOA,” Mehta said.

“These medical professionals are experts in their field. We want to make sure to have features that they want and need, and that would make their job easier.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Mar. 18, 2024

Researchers with Georgia Tech and Emory University are field testing a new device that could help protect people who work outside from heat-related injury. It’s a skin patch you can wear while working that sends detailed information to a smartphone or other device about important health markers like skin hydration and body temperature. The device takes different measurements than health wearables currently on the market and will be paired with an artificial intelligence program to predict health hazards. The team is calling the device BioPatch, and it’s being put to the test with landscaping crews. Researchers hope use of the device can guide better decisions about working in the heat.

The project involves collaboration between principal investigators Vicki Hertzberg from Emory University, W. Hong Yeo from Georgia Tech, and Li Xiong from Emory University. Their expertise spans statistics, mechanical and biomedical engineering, and computer science, respectively. Roxana Chicas of the Emory School of Nursing and Jeff Sands of the Emory School of Medicine, along with members of the Farmworker Association of Florida, are also part of the team. This video shows the device and data collection during a key component of testing during the summer.

Jan. 04, 2024

While increasing numbers of people are seeking mental health care, mental health providers are facing critical shortages. Now, an interdisciplinary team of investigators at Georgia Tech, Emory University, and Penn State aims to develop an interactive AI system that can provide key insights and feedback to help these professionals improve and provide higher-quality care, while satisfying the increasing demand for highly trained, effective mental health professionals.

A new $2,000,000 grant from the National Science Foundation (NSF) will support the research.

The research builds on previous collaboration between Rosa Arriaga, an associate professor in the College of Computing, and Andrew Sherrill, an assistant professor in the Department of Psychiatry and Behavioral Sciences at Emory University, who worked together on a computational system for PTSD therapy.

Arriaga and Christopher Wiese, an assistant professor in the School of Psychology, will lead the Georgia Tech team; Saeed Abdullah, an assistant professor in the College of Information Sciences and Technology, will lead the Penn State team; and Sherrill will serve as overall project lead and Emory team lead.

The grant, for “Understanding the Ethics, Development, Design, and Integration of Interactive Artificial Intelligence Teammates in Future Mental Health Work,” will allocate $801,660 of support to the Georgia Tech team, supporting four years of research.

“The initial three years of our project are dedicated to understanding and defining what functionalities and characteristics make an AI system a 'teammate' rather than just a tool,” Wiese says. “This involves extensive research and interaction with mental health professionals to identify their specific needs and challenges. We aim to understand the nuances of their work, their decision-making processes, and the areas where AI can provide meaningful support. In the final year, we plan to implement a trial run of this AI teammate philosophy with mental health professionals.”

While the project focuses on mental health workers, the impacts of the project range far beyond. “AI is going to fundamentally change the nature of work and workers,” Arriaga says. “And, as such, there’s a significant need for research to develop best practices for integrating worker, work, and future technology.”

The team underscores that sectors like business, education, and customer service could easily apply this research. The ethics protocol the team will develop will also provide a critical framework for best practices. The team also hopes that their findings could inform policymakers and stakeholders making key decisions regarding AI.

“The knowledge and strategies we develop have the potential to revolutionize how AI is integrated into the broader workforce,” Wiese adds. “We are not just exploring the intersection of human and synthetic intelligence in the mental health profession; we are laying the groundwork for a future where AI and humans collaborate effectively across all areas of work.”

Collaborative project

The project aims to develop an AI coworker called TEAMMAIT (short for “the Trustworthy, Explainable, and Adaptive Monitoring Machine for AI Team”). Rather than functioning as a tool, as many AI systems currently do, TEAMMAIT will act more as a human teammate would, providing constructive feedback and helping mental healthcare workers develop and learn new skills.

“Unlike conventional AI tools that function as mere utilities, an AI teammate is designed to work collaboratively with humans, adapting to their needs and augmenting their capabilities,” Wiese explains. “Our approach is distinctively human-centric, prioritizing the needs and perspectives of mental health professionals… it’s important to recognize that this is a complex domain and interdisciplinary collaboration is necessary to create the most optimal outcomes when it comes to integrating AI into our lives.”

With both technical and human health aspects to the research, the project will leverage an interdisciplinary team of experts spanning clinical psychology, industrial-organizational psychology, human-computer interaction, and information science.

“We need to work closely together to make sure that the system, TEAMMAIT, is useful and usable,” adds Arriaga. “Chris (Wiese) and I are looking at two types of challenges: those associated with the organization, as Chris is an industrial-organizational psychology expert, and those associated with the interface, as I am a computer scientist who specializes in human-computer interaction.”

Long-term timeline

The project’s long-term timeline reflects the unique challenges that it faces.

“A key challenge is in the development and design of the AI tools themselves,” Wiese says. “They need to be user-friendly, adaptable, and efficient, enhancing the capabilities of mental health workers without adding undue complexity or stress. This involves continuous iteration and feedback from end-users to refine the AI tools, ensuring they meet the real-world needs of mental health professionals.”

The team plans to deploy TEAMMAIT in diverse settings in the fourth year of development and to incorporate data from these early users into two sets of guidelines: development guidelines for Worker-AI teammates in mental health work, and ethical guidelines for developing and using this type of system.

“This will be a crucial phase where we test the efficacy and integration of the AI in real-world scenarios,” Wiese says. “We will assess not just the functional aspects of the AI, such as how well it performs specific tasks, but also how it impacts the work environment, the well-being of the mental health workers, and ultimately, the quality of care provided to patients.”

Assessing the psychological impacts on workers, including how TEAMMAIT affects their day-to-day work, will be crucial in ensuring TEAMMAIT has a positive impact on healthcare workers’ skills and wellbeing.

“We’re interested in understanding how mental health clinicians interact with TEAMMAIT and the subsequent impact on their work,” Wiese adds. “How long does it take for clinicians to become comfortable and proficient with TEAMMAIT? How does their engagement with TEAMMAIT change over the year? Do they feel like they are more effective when using TEAMMAIT? We’re really excited to begin answering these questions.”

News Contact

Written by Selena Langner

Contact: Jess Hunt-Ralston
