Nov. 22, 2024

The Department of Commerce has granted the Semiconductor Research Corporation (SRC), its partners, and Georgia Institute of Technology $285 million to establish and operate the 18th Manufacturing USA Institute. The Semiconductor Manufacturing and Advanced Research with Twins (SMART USA) Institute will focus on using digital twins to accelerate the development and deployment of microelectronics. SMART USA, with more than 150 expected partner entities representing industry, academia, and the full spectrum of supply chain design and manufacturing, will span more than 30 states and have combined funding totaling $1 billion.

This is the first-of-its-kind CHIPS Manufacturing USA Institute.

“Georgia Tech’s role in the SMART USA Institute amplifies our trailblazing chip and advanced packaging research and leverages the strengths of our interdisciplinary research institutes,” said Tim Lieuwen, interim executive vice president for Research. “We believe innovation thrives where disciplines and sectors intersect. And the SMART USA Institute will help us ensure that the benefits of our semiconductor and advanced packaging discoveries extend beyond our labs, positively impacting the economy and quality of life in Georgia and across the United States.”

The 3D Systems Packaging Research Center (PRC), directed by School of Electrical and Computer Engineering Dan Fielder Professor Muhannad Bakir, played an integral role in developing the winning proposal. Georgia Tech will be designated as the Digital Innovation Semiconductor Center (DISC) for the Southeastern U.S.

“We are honored to collaborate with SRC and their team on this new Manufacturing USA Institute. Our partnership with SRC spans more than two decades, and we are thrilled to continue this collaboration by leveraging the Institute’s wide range of semiconductor and advanced packaging expertise,” said Bakir.

Through the Institute for Matter and Systems’ core facilities, housed in the Marcus Nanotechnology Building, DISC will accelerate semiconductor and advanced packaging development.

“The awarding of the Digital Twin Manufacturing USA Institute is a culmination of more than three years of work with the Semiconductor Research Corporation and other valued team members who share a similar vision of advancing U.S. leadership in semiconductors and advanced packaging,” said George White, senior director for strategic partnerships at Georgia Tech.

“As a founding member of the SMART USA Institute, Georgia Tech values this long-standing partnership. Its industry and academic partners, including the HBCU CHIPS Network, stand ready to make significant contributions to realize the goals and objectives of the SMART USA Institute,” White added.

Georgia Tech also plans to capitalize on the supply chain and optimization strengths of the No. 1-ranked H. Milton Stewart School of Industrial and Systems Engineering (ISyE). ISyE experts will help develop supply-chain digital twins to optimize and streamline manufacturing and operational efficiencies.

David Henshall, SRC vice president of Business Development, said, “The SMART USA Institute will advance American digital twin technology and apply it to the full semiconductor supply chain, enabling rapid process optimization, predictive maintenance, and agile responses to chips supply chain disruptions. These efforts will strengthen U.S. global competitiveness, ensuring our country reaps the rewards of American innovation at scale.”

News Contact

Amelia Neumeister | Research Communications Program Manager

Nov. 11, 2024

A first-of-its-kind algorithm developed at Georgia Tech is helping scientists study interactions between electrons. This innovation in modeling technology can lead to discoveries in physics, chemistry, materials science, and other fields.

The new algorithm is faster than existing methods while remaining highly accurate. The solver surpasses the limits of current models by demonstrating scalability across chemical system sizes ranging from small to large.

Computer scientists and engineers benefit from the algorithm’s ability to balance processor loads. This work allows researchers to tackle larger, more complex problems without the prohibitive costs associated with previous methods.

Its ability to solve block linear systems drives the algorithm’s ingenuity. According to the researchers, their approach is the first known use of a block linear system solver to calculate electronic correlation energy.

The Georgia Tech team won’t need to travel far to share their findings with the broader high-performance computing community. They will present their work in Atlanta at the 2024 International Conference for High Performance Computing, Networking, Storage and Analysis (SC24).

“The combination of solving large problems with high accuracy can enable density functional theory simulation to tackle new problems in science and engineering,” said Edmond Chow, professor and associate chair of Georgia Tech’s School of Computational Science and Engineering (CSE).

Density functional theory (DFT) is a modeling method for studying electronic structure in many-body systems, such as atoms and molecules.

An important concept that DFT models is electronic correlation, the interaction between electrons in a quantum system. Electron correlation energy measures how much the movement of one electron is influenced by the presence of all other electrons.

Random phase approximation (RPA) is used to calculate electron correlation energy. While RPA is very accurate, it becomes computationally more expensive as the size of the system being calculated increases.

Georgia Tech’s algorithm enhances electronic correlation energy computations within the RPA framework. The approach circumvents inefficiencies and achieves faster solution times, even for small-scale chemical systems.

The group integrated the algorithm into existing work on SPARC, a real-space electronic structure software package for accurate, efficient, and scalable solutions of DFT equations. School of Civil and Environmental Engineering Professor Phanish Suryanarayana is SPARC’s lead researcher.

The group tested the algorithm on small chemical systems of silicon crystals numbering as few as eight atoms. The method achieved faster calculation times and scaled to larger system sizes than direct approaches.

“This algorithm will enable SPARC to perform electronic structure calculations for realistic systems with a level of accuracy that is the gold standard in chemical and materials science research,” said Suryanarayana.

RPA is expensive because its computational cost scales quartically with system size. When the size of a chemical system is doubled, the computational cost increases by a factor of 16.

Instead, Georgia Tech’s algorithm scales cubically by solving block linear systems. This capability makes it feasible to solve larger problems at less expense.
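The scaling figures above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes cost is a pure power law in system size (fourth power for quartic, third power for cubic) and ignores constant factors; the function name `cost_growth` is made up for illustration.

```python
def cost_growth(size_factor: float, exponent: int) -> float:
    """Factor by which cost grows when the system grows by size_factor,
    assuming cost is proportional to size ** exponent (a simplification)."""
    return size_factor ** exponent


quartic_doubling = cost_growth(2, 4)  # quartic scaling, system size doubled
cubic_doubling = cost_growth(2, 3)    # cubic scaling, system size doubled

print(f"Quartic: doubling the system multiplies cost by {quartic_doubling}")
print(f"Cubic:   doubling the system multiplies cost by {cubic_doubling}")
print(f"Quartic-to-cubic cost ratio after doubling: {quartic_doubling / cubic_doubling}")
```

Under this simplified model, the gap between the two approaches widens in proportion to the system size, which is why the difference matters most for large systems.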

Solving block linear systems involves a challenging trade-off in choosing the block size. While larger blocks reduce the number of steps the solver takes, each step demands more computation on the processors.

Tech’s solution is a dynamic block size selection solver. The solver allows each processor to independently select block sizes to calculate. This solution further assists in scaling, and improves processor load balancing and parallel efficiency.
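To make the idea of block sizes concrete, here is a toy sketch. It assumes that a "block" means a group of right-hand-side columns solved together against the same matrix, and it uses plain Gauss-Jordan elimination rather than the iterative solver the researchers describe. The function names `solve_block` and `solve_in_blocks` are hypothetical. The only point it illustrates is that the way the columns are partitioned into blocks does not change the answer, which is what leaves each processor free to pick its own block sizes.

```python
from fractions import Fraction


def solve_block(a, rhs_block):
    """Solve A X = B for one block of right-hand-side columns
    using Gauss-Jordan elimination with exact rational arithmetic."""
    n = len(a)
    # Build the augmented matrix [A | B].
    aug = [[Fraction(v) for v in a[i]] + [Fraction(v) for v in rhs_block[i]]
           for i in range(n)]
    for col in range(n):
        # Find a row with a nonzero pivot (raises StopIteration if A is singular).
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def solve_in_blocks(a, b, block_sizes):
    """Split the columns of B into blocks of the given sizes,
    solve each block separately, and stitch the solutions together."""
    assert sum(block_sizes) == len(b[0])
    x = [[] for _ in range(len(a))]
    start = 0
    for size in block_sizes:
        block = [row[start:start + size] for row in b]
        for i, sol_row in enumerate(solve_block(a, block)):
            x[i].extend(sol_row)
        start += size
    return x


a = [[4, 1], [1, 3]]
b = [[1, 2, 3], [4, 5, 6]]
# One large block versus several smaller ones: same solution either way.
print(solve_in_blocks(a, b, [3]) == solve_in_blocks(a, b, [1, 2]))
```

In this direct solver the block size affects only how the work is grouped. In an iterative block solver, as the text notes, it also affects how many steps are needed and how much each step costs.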

“The new algorithm has many forms of parallelism, making it suitable for immense numbers of processors,” Chow said. “The algorithm works in a real-space, finite-difference DFT code. Such a code can scale efficiently on the largest supercomputers.”

Georgia Tech alumni Shikhar Shah (Ph.D. CSE 2024), Hua Huang (Ph.D. CSE 2024), and Ph.D. student Boqin Zhang led the algorithm’s development. The project was the culmination of work for Shah and Huang, who completed their degrees this summer. John E. Pask, a physicist at Lawrence Livermore National Laboratory, joined the Tech researchers on the work.

Shah, Huang, Zhang, Suryanarayana, and Chow are among more than 50 students, faculty, research scientists, and alumni affiliated with Georgia Tech who are scheduled to give more than 30 presentations at SC24. The experts will present their research through papers, posters, panels, and workshops.

SC24 takes place Nov. 17-22 at the Georgia World Congress Center in Atlanta.

“The project’s success came from combining expertise from people with diverse backgrounds ranging from numerical methods to chemistry and materials science to high-performance computing,” Chow said.

“We could not have achieved this as individual teams working alone.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Oct. 24, 2024

The U.S. Department of Energy (DOE) has awarded Georgia Tech researchers a $4.6 million grant to develop improved cybersecurity protection for renewable energy technologies.

Associate Professor Saman Zonouz will lead the project and leverage the latest artificial intelligence (AI) to create Phorensics. The new tool will anticipate cyberattacks on critical infrastructure and provide analysts with an accurate reading of what vulnerabilities were exploited.

“This grant enables us to tackle one of the crucial challenges facing national security today: our critical infrastructure resilience and post-incident diagnostics to restore normal operations in a timely manner,” said Zonouz.

“Together with our amazing team, we will focus on cyber-physical data recovery and post-mortem forensics analysis after cybersecurity incidents in emerging renewable energy systems.”

As the integration of renewable energy technology into national power grids increases, so does their vulnerability to cyberattacks. These threats put energy infrastructure at risk and pose a significant danger to public safety and economic stability. The AI behind Phorensics will allow analysts and technicians to scale security efforts to keep up with a growing power grid that is becoming more complex.

This effort is part of the Security of Engineering Systems (SES) initiative at Georgia Tech’s School of Cybersecurity and Privacy (SCP). SES has three pillars: research, education, and testbeds, with multiple ongoing large, sponsored efforts.

“We had a successful hiring season for SES last year and will continue filling several open tenure-track faculty positions this upcoming cycle,” said Zonouz.

“With top-notch cybersecurity and engineering schools at Georgia Tech, we have begun the SES journey with a dedicated passion to pursue building real-world solutions to protect our critical infrastructures, national security, and public safety.”

Zonouz is the director of the Cyber-Physical Systems Security Laboratory (CPSec) and is jointly appointed by Georgia Tech’s School of Cybersecurity and Privacy (SCP) and the School of Electrical and Computer Engineering (ECE).

The three Georgia Tech researchers joining him on this project are Brendan Saltaformaggio, associate professor in SCP and ECE; Taesoo Kim, jointly appointed professor in SCP and the School of Computer Science; and Animesh Chhotaray, research scientist in SCP.

Katherine Davis, associate professor at the Texas A&M University Department of Electrical and Computer Engineering, has partnered with the team to develop Phorensics. The team will also collaborate with the National Renewable Energy Laboratory (NREL) and industry partners for technology transfer and commercialization initiatives.

The Energy Department defines renewable energy as energy from unlimited, naturally replenished resources, such as the sun, tides, and wind. Renewable energy can be used for electricity generation, space and water heating and cooling, and transportation.

News Contact

John Popham

Communications Officer II

College of Computing | School of Cybersecurity and Privacy

Aug. 21, 2024

- Written by Benjamin Wright -

As Georgia Tech establishes itself as a national leader in AI research and education, some researchers on campus are putting AI to work to help meet sustainability goals in a range of areas including climate change adaptation and mitigation, urban farming, food distribution, and life cycle assessments while also focusing on ways to make sure AI is used ethically.

Josiah Hester, interim associate director for Community-Engaged Research in the Brook Byers Institute for Sustainable Systems (BBISS) and associate professor in the School of Interactive Computing, sees these projects as wins from both a research standpoint and for the local, national, and global communities they could affect.

“These faculty exemplify Georgia Tech's commitment to serving and partnering with communities in our research,” he says. “Sustainability is one of the most pressing issues of our time. AI gives us new tools to build more resilient communities, but the complexities and nuances in applying this emerging suite of technologies can only be solved by community members and researchers working closely together to bridge the gap. This approach to AI for sustainability strengthens the bonds between our university and our communities and makes lasting impacts due to community buy-in.”

Flood Monitoring and Carbon Storage

Peng Chen, assistant professor in the School of Computational Science and Engineering in the College of Computing, focuses on computational mathematics, data science, scientific machine learning, and parallel computing. Chen is combining these areas of expertise to develop algorithms to assist in practical applications such as flood monitoring and carbon dioxide capture and storage.

He is currently working on a National Science Foundation (NSF) project with colleagues in Georgia Tech’s School of City and Regional Planning and from the University of South Florida to develop flood models in the St. Petersburg, Florida area. As a low-lying state with more than 8,400 miles of coastline, Florida is one of the states most at risk from sea level rise and flooding caused by extreme weather events sparked by climate change.

Chen’s novel approach to flood monitoring takes existing high-resolution hydrological and hydrographical mapping and uses machine learning to incorporate real-time updates from social media users and existing traffic cameras to run rapid, low-cost simulations using deep neural networks. Current flood monitoring software is resource- and time-intensive. Chen’s goal is to produce live modeling that can be used to warn residents and allocate emergency response resources as conditions change. That information would be available to the general public through a portal his team is working on.

“This project focuses on one particular community in Florida,” Chen says, “but we hope this methodology will be transferable to other locations and situations affected by climate change.”

In addition to the flood-monitoring project in Florida, Chen and his colleagues are developing new methods to improve the reliability and cost-effectiveness of storing carbon dioxide in underground rock formations. The process is plagued with uncertainty about the porosity of the bedrock, the optimal distribution of monitoring wells, and the rate at which carbon dioxide can be injected without over-pressurizing the bedrock, leading to collapse. The new simulations are fast, inexpensive, and minimize the risk of failure, which also decreases the cost of construction.

“Traditional high-fidelity simulation using supercomputers takes hours and lots of resources,” says Chen. “Now we can run these simulations in under one minute using AI models without sacrificing accuracy. Even when you factor in AI training costs, this is a huge savings in time and financial resources.”

Flood monitoring and carbon capture are passion projects for Chen, who sees an opportunity to use artificial intelligence to increase the pace and decrease the cost of problem-solving.

“I’m very excited about the possibility of solving grand challenges in the sustainability area with AI and machine learning models,” he says. “Engineering problems are full of uncertainty, but by using this technology, we can characterize the uncertainty in new ways and propagate it throughout our predictions to optimize designs and maximize performance.”

Urban Farming and Optimization

Yongsheng Chen works at the intersection of food, energy, and water. As the Bonnie W. and Charles W. Moorman Professor in the School of Civil and Environmental Engineering and director of the Nutrients, Energy, and Water Center for Agriculture Technology, Chen is focused on making urban agriculture technologically feasible, financially viable, and, most importantly, sustainable. To do that he’s leveraging AI to speed up the design process and optimize farming and harvesting operations.

Chen’s closed-loop hydroponic system uses anaerobically treated wastewater for fertilization and irrigation by extracting and repurposing nutrients as fertilizer before filtering the water through polymeric membranes with nano-scale pores. Advancing filtration and purification processes depends on finding the right membrane materials to selectively separate contaminants, including antibiotics and per- and polyfluoroalkyl substances (PFAS). Chen and his team are using AI and machine learning to guide membrane material selection and fabrication to make contaminant separation as efficient as possible. Similarly, AI and machine learning are assisting in developing carbon capture materials such as ionic liquids that can retain carbon dioxide generated during wastewater treatment and redirect it to hydroponics systems, boosting food productivity.

“A fundamental angle of our research is that we do not see municipal wastewater as waste,” explains Chen. “It is a resource we can treat and recover components from to supply irrigation, fertilizer, and biogas, all while reducing the amount of energy used in conventional wastewater treatment methods.”

In addition to aiding in materials development, which reduces design time and production costs, Chen is using machine learning to optimize the growing cycle of produce, maximizing nutritional value. His USDA-funded vertical farm uses autonomous robots to measure critical cultivation parameters and take pictures without destroying plants. This data helps determine optimum environmental conditions, fertilizer supply, and harvest timing, resulting in a faster-growing, optimally nutritious plant with less fertilizer waste and lower emissions.

Chen’s work has received considerable federal funding. As the Urban Resilience and Sustainability Thrust Leader within the NSF-funded AI Institute for Advances in Optimization (AI4OPT), he has received additional funding to foster international collaboration in digital agriculture with colleagues across the United States and in Japan, Australia, and India.

Optimizing Food Distribution

At the other end of the agricultural spectrum is postdoc Rosemarie Santa González in the H. Milton Stewart School of Industrial and Systems Engineering, who is conducting her research under the supervision of Professor Chelsea White and Professor Pascal Van Hentenryck, the director of Georgia Tech’s AI Hub as well as the director of AI4OPT.

Santa González is working with the Wisconsin Food Hub Cooperative to help traditional farmers get their products into the hands of consumers as efficiently as possible to reduce hunger and food waste. Preventing food waste is a priority for both the EPA and USDA. Current estimates are that 30 to 40% of the food produced in the United States ends up in landfills. That wastes the land, water, and chemicals used in production as well as the resources needed for disposal, not to mention the impact of the greenhouse gases released when wasted food decays.

To tackle this problem, Santa González and the Wisconsin Food Hub are helping small-scale farmers access refrigeration facilities and distribution chains. As part of her research, she is helping to develop AI tools that can optimize the logistics of the small-scale farmer supply chain while also making local consumers in underserved areas aware of what’s available so food doesn’t end up in landfills.

“This solution has to be accessible,” she says. “Not just in the sense that the food is accessible, but that the tools we are providing to them are accessible. The end users have to understand the tools and be able to use them. It has to be sustainable as a resource.”

Making AI accessible to people in the community is a core goal of the NSF’s AI Institute for Intelligent Cyberinfrastructure with Computational Learning in the Environment (ICICLE), one of the partners involved with the project.

“A large segment of the population we are working with, which includes historically marginalized communities, has a negative reaction to AI. They think of machines taking over, or data being stolen. Our goal is to democratize AI in these decision-support tools as we work toward the UN Sustainable Development Goal of Zero Hunger. There is so much power in these tools to solve complex problems that have very real results. More people will be fed and less food will spoil before it gets to people’s homes.”

Santa González hopes the tools they are building can be packaged and customized for food co-ops everywhere.

AI and Ethics

Like Santa González, Joe Bozeman III is also focused on the ethical and sustainable deployment of AI and machine learning, especially among marginalized communities. The assistant professor in the School of Civil and Environmental Engineering is an industrial ecologist committed to fostering ethical climate change adaptation and mitigation strategies. His SEEEL Lab works to make sure researchers understand the consequences of decisions before they move from academic concepts to policy decisions, particularly those that rely on data sets involving people and communities.

“With the administration of big data, there is a human tendency to assume that more data means everything is being captured, but that's not necessarily true,” he cautions. “More data could mean we're just capturing more of the data that already exists, while new research shows that we’re not including information from marginalized communities that have historically not been brought into the decision-making process. That includes underrepresented minorities, rural populations, people with disabilities, and neurodivergent people who may not interface with data collection tools.”

Bozeman is concerned that overlooking marginalized communities in data sets will result in decisions that at best ignore them and at worst cause them direct harm.

“Our lab doesn't wait for the negative harms to occur before we start talking about them,” explains Bozeman, who holds a courtesy appointment in the School of Public Policy. “Our lab forecasts what those harms will be so decision-makers and engineers can develop technologies that consider these things.”

He focuses on urbanization, the food-energy-water nexus, and the circular economy. He has found that much of the research in those areas is conducted in a vacuum without consideration for human engagement and the impact it could have when implemented.

Bozeman is lobbying for built-in tools and safeguards to mitigate the potential for harm from researchers using AI without appropriate consideration. He already sees a disconnect between the academic world and the public. Bridging that trust gap will require ethical uses of AI.

“We have to start rigorously including their voices in our decision-making to begin gaining trust with the public again. And with that trust, we can all start moving toward sustainable development. If we don't do that, I don't care how good our engineering solutions are, we're going to miss the boat entirely on bringing along the majority of the population.”

BBISS Support

Moving forward, Hester is excited about the impact the Brook Byers Institute for Sustainable Systems can have on AI and sustainability research through a variety of support mechanisms.

“BBISS continues to invest in faculty development and training in community-driven research strategies, including the Community Engagement Faculty Fellows Program (with the Center for Sustainable Communities Research and Education), while empowering multidisciplinary teams to work together to solve grand engineering challenges with AI by supporting the AI+Climate Faculty Interest Group, as well as partnering with and providing administrative support for community-driven research projects.”

News Contact

Brent Verrill, Research Communications Program Manager, BBISS

Aug. 20, 2024

For three days, a cybercriminal unleashed a crippling ransomware attack on the futuristic city of Northbridge. The attack shut down the city’s infrastructure and severely impacted public services, until Georgia Tech cybersecurity experts stepped in to stop it.

This scenario played out this weekend at the DARPA AI Cyber Challenge (AIxCC) semi-final competition held at DEF CON 32 in Las Vegas. Team Atlanta, which included the Georgia Tech experts, was among the contest’s winners.

Team Atlanta will now compete against six other teams in the final round that takes place at DEF CON 33 in August 2025. The finalists will keep their AI system and improve it over the next 12 months using the $2 million semi-final prize.

The AI systems in the finals must be open sourced and ready for immediate, real-world launch. The AIxCC final competition will award a $4 million grand prize to the ultimate champion.

Team Atlanta is made up of past and present Georgia Tech students and was put together with the help of SCP Professor Taesoo Kim. Not only did the team secure a spot in the final competition, they found a zero-day vulnerability in the contest.

“I am incredibly proud to announce that Team Atlanta has qualified for the finals in the DARPA AIxCC competition,” said Taesoo Kim, professor in the School of Cybersecurity and Privacy and a vice president of Samsung Research.

“This achievement is the result of exceptional collaboration across various organizations, including the Georgia Tech Research Institute (GTRI), industry partners like Samsung, and international academic institutions such as KAIST and POSTECH.”

After noticing discrepancies in the competition scoreboard, the team discovered and reported a bug in the competition itself. The type of vulnerability they discovered is known as a zero-day vulnerability, because vendors have zero days to fix the issue.

While this didn’t earn Team Atlanta additional points, the competition organizer acknowledged the team and their finding during the closing ceremony.

“Our team, deeply rooted in Atlanta and largely composed of Georgia Tech alumni, embodies the innovative spirit and community values that define our city,” said Kim.

“With over 30 dedicated students and researchers, we have demonstrated the power of cross-disciplinary teamwork in the semi-final event. As we advance to the finals, we are committed to pushing the boundaries of cybersecurity and artificial intelligence, and I firmly believe the resulting systems from this competition will transform the security landscape in the coming year!”

The team tested their cyber reasoning system (CRS), dubbed Atlantis, on software used for data management, website support, healthcare systems, supply chains, electrical grids, transportation, and other critical infrastructures.

Atlantis is a next-generation, bug-finding and fixing system that can hunt bugs in multiple coding languages. The system immediately issues accurate software patches without any human intervention.

AIxCC is a Pentagon-backed initiative that was announced in August 2023 and will award up to $20 million in prize money throughout the competition. Team Atlanta was among the 42 teams that qualified for the semi-final competition earlier this year.

News Contact

John Popham

Communications Officer II at the School of Cybersecurity and Privacy

Apr. 17, 2024

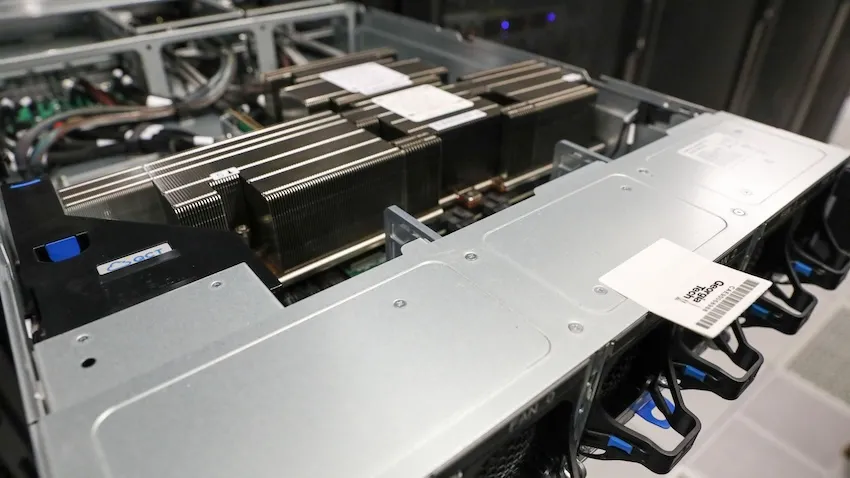

Computing research at Georgia Tech is getting faster thanks to a new state-of-the-art processing chip named after a female computer programming pioneer.

Tech is one of the first research universities in the country to receive the GH200 Grace Hopper Superchip from NVIDIA for testing, study, and research.

Designed for large-scale artificial intelligence (AI) and high-performance computing applications, the GH200 is intended for large language model (LLM) training, recommender systems, graph neural networks, and other tasks.

Alexey Tumanov and Tushar Krishna procured Georgia Tech’s first pair of Grace Hopper chips. Spencer Bryngelson attained four more GH200s, which will arrive later this month.

“We are excited about this new design that puts everything onto one chip and accessible to both processors,” said Will Powell, a College of Computing research technologist.

“The Superchip’s design increases computation efficiency where data doesn’t have to move as much and all the memory is on the chip.”

A key feature of the new processing chip is that the central processing unit (CPU) and graphics processing unit (GPU) are on the same board.

NVIDIA’s NVLink Chip-2-Chip (C2C) interconnect joins the two units together. C2C delivers up to 900 gigabytes per second of total bandwidth, seven times the bandwidth of the PCIe Gen5 connections used in newer accelerated systems.

As a result, the two components share memory and process data with more speed and better power efficiency. This feature is one that the Georgia Tech researchers want to explore most.
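To put the bandwidth figures above in perspective, the following back-of-the-envelope sketch estimates ideal transfer times. It takes the 900 gigabytes per second figure and the sevenfold ratio from the text. The 60 GB payload (roughly a 30-billion-parameter model stored at 2 bytes per parameter) is an assumption chosen for illustration, and real transfers would not sustain peak bandwidth.

```python
NVLINK_C2C_GB_PER_S = 900.0                      # total bandwidth quoted in the text
PCIE_GEN5_GB_PER_S = NVLINK_C2C_GB_PER_S / 7     # implied by the sevenfold comparison


def transfer_seconds(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Ideal time to move payload_gb gigabytes at a given peak bandwidth."""
    return payload_gb / bandwidth_gb_per_s


# Hypothetical payload: 30 billion parameters * 2 bytes each, expressed in GB.
payload_gb = 30e9 * 2 / 1e9

t_c2c = transfer_seconds(payload_gb, NVLINK_C2C_GB_PER_S)
t_pcie = transfer_seconds(payload_gb, PCIE_GEN5_GB_PER_S)

print(f"NVLink-C2C:          {t_c2c:.3f} s")
print(f"PCIe Gen5 (implied): {t_pcie:.3f} s")
```

The absolute numbers depend entirely on the assumed payload; the sevenfold ratio between the two times is the part that follows from the text.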

Tumanov, an assistant professor in the School of Computer Science, and his Ph.D. student Amey Agrawal, are testing machine learning (ML) and LLM workloads on the chip. Their work with the GH200 could lead to more sustainable computing methods that keep up with the exponential growth of LLMs.

The advent of household LLMs, like ChatGPT and Gemini, pushes the limit of current architectures based on GPUs. The chip’s design overcomes known CPU-GPU bandwidth limitations. Tumanov’s group will put that design to the test through their studies.

Krishna is an associate professor in the School of Electrical and Computer Engineering and associate director of the Center for Research into Novel Computing Hierarchies (CRNCH).

His research focuses on optimizing data movement in modern computing platforms, including AI/ML accelerator systems. Ph.D. student Hao Kang uses the GH200 to analyze LLMs exceeding 30 billion parameters. This study will enable labs to explore deep learning optimizations with the new chip.

Bryngelson, an assistant professor in the School of Computational Science and Engineering, will use the chip to compute and simulate fluid and solid mechanics phenomena. His lab can use the CPU to reorder memory and perform disk writes while the GPU does parallel work. This capability is expected to significantly reduce the computational burden for some applications.

“Traditional CPU to GPU communication is slower and introduces latency issues because data passes back and forth over a PCIe bus,” Powell said. “Since they can access each other’s memory and share in one hop, the Superchip’s architecture boosts speed and efficiency.”

Grace Hopper is the inspirational namesake for the chip. She pioneered many developments in computer science that formed the foundation of the field today.

Hopper invented the first compiler, a program that translates computer source code into a target language. She also helped develop some of the earliest programming languages, including COBOL, which is still used today in data processing.

Hopper joined the U.S. Navy Reserve during World War II, tasked with programming the Mark I computer. She retired as a rear admiral in August 1986 after 42 years of military service.

Georgia Tech researchers hope to honor Hopper’s legacy by using the technology that bears her name, and her spirit of innovation, to make new discoveries.

“NVIDIA and other vendors show no sign of slowing down refinement of this kind of design, so it is important that our students understand how to get the most out of this architecture,” said Powell.

“Just having all these technologies isn’t enough. People must know how to build applications in their coding that actually benefit from these new architectures. That is the skill.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Mar. 05, 2024

Computer graphic simulations can represent natural phenomena such as tornados, underwater vortices, and liquid foams more accurately thanks to an advancement in creating artificial intelligence (AI) neural networks.

Working with a multi-institutional team of researchers, Georgia Tech Assistant Professor Bo Zhu combined computer graphic simulations with machine learning models to create enhanced simulations of known phenomena. The new benchmark could lead to researchers constructing representations of other phenomena that have yet to be simulated.

Zhu co-authored the paper Fluid Simulation on Neural Flow Maps. The Association for Computing Machinery’s Special Interest Group in Computer Graphics and Interactive Technology (SIGGRAPH) gave it a best paper award in December at the SIGGRAPH Asia conference in Sydney, Australia.

The authors say the advancement could be as significant to computer graphic simulations as the introduction of neural radiance fields (NeRFs) was to computer vision in 2020. Introduced by researchers at the University of California, Berkeley, the University of California, San Diego, and Google, NeRFs are neural networks that easily convert 2D images into 3D navigable scenes.

NeRFs have become a benchmark among computer vision researchers. Zhu and his collaborators hope their creation, neural flow maps, can do the same for simulation researchers in computer graphics.

“A natural question to ask is, can AI fundamentally overcome the traditional method’s shortcomings and bring generational leaps to simulation as it has done to natural language processing and computer vision?” Zhu said. “Simulation accuracy has been a significant challenge to computer graphics researchers. No existing work has combined AI with physics to yield high-end simulation results that outperform traditional schemes in accuracy.”

In computer graphics, simulation pipelines are the equivalent of neural networks and allow simulations to take shape. They are traditionally constructed through mathematical equations and numerical schemes.

Zhu said researchers have tried to design simulation pipelines with neural representations to construct more robust simulations. However, efforts to achieve higher physical accuracy have fallen short.

Zhu attributes the problem to the inability of traditional simulation pipelines to accommodate the capacities of AI algorithms within their existing structures. To solve the problem and allow machine learning to have influence, Zhu and his collaborators proposed a new framework that redesigns the simulation pipeline.

They named these new pipelines neural flow maps. The maps use machine learning models to store spatiotemporal data more efficiently. The researchers then align these models with their mathematical framework to achieve a higher accuracy than previous pipeline simulations.

Zhu said he does not believe machine learning should be used to replace traditional numerical equations. Rather, it should complement them to unlock new, advantageous paradigms.

“Instead of trying to deploy modern AI techniques to replace components inside traditional pipelines, we co-designed the simulation algorithm and machine learning technique in tandem,” Zhu said.

“Numerical methods are not optimal because of their limited computational capacity. Recent AI-driven capacities have uplifted many of these limitations. Our task is redesigning existing simulation pipelines to take full advantage of these new AI capacities.”

In the paper, the authors state the once unattainable algorithmic designs could unlock new research possibilities in computer graphics.

Neural flow maps offer “a new perspective on the incorporation of machine learning in numerical simulation research for computer graphics and computational sciences alike,” the paper states.

“The success of Neural Flow Maps is inspiring for how physics and machine learning are best combined,” Zhu added.

News Contact

Nathan Deen, Communications Officer

Georgia Tech School of Interactive Computing

nathan.deen@cc.gatech.edu

Feb. 29, 2024

One of the hallmarks of humanity is language, but now, powerful new artificial intelligence tools also compose poetry, write songs, and have extensive conversations with human users. Tools like ChatGPT and Gemini are widely available at the tap of a button — but just how smart are these AIs?

A new multidisciplinary research effort co-led by Anna (Anya) Ivanova, assistant professor in the School of Psychology at Georgia Tech, alongside Kyle Mahowald, an assistant professor in the Department of Linguistics at the University of Texas at Austin, is working to uncover just that.

Their results could lead to innovative AIs that are more similar to the human brain than ever before — and also help neuroscientists and psychologists who are unearthing the secrets of our own minds.

The study, “Dissociating Language and Thought in Large Language Models,” is published this week in the scientific journal Trends in Cognitive Sciences. The work is already making waves in the scientific community: an earlier preprint of the paper, released in January 2023, has already been cited more than 150 times by fellow researchers. The research team has continued to refine the research for this final journal publication.

“ChatGPT became available while we were finalizing the preprint,” Ivanova explains. “Over the past year, we've had an opportunity to update our arguments in light of this newer generation of models, now including ChatGPT.”

Form versus function

The study focuses on large language models (LLMs), which include AIs like ChatGPT. LLMs are text prediction models that create writing by predicting which word comes next in a sentence, much as a cell phone or an email service like Gmail might suggest the next word you want to write. However, while this type of language learning is extremely effective at creating coherent sentences, that doesn’t necessarily signify intelligence.
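To make the idea of next-word prediction concrete, here is a minimal sketch of the simplest possible text predictor: it counts which word most often follows each word in a sample text. This is only an illustration. Real LLMs use neural networks trained on vastly more data, and the function names and toy corpus below are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy corpus, invented for this illustration.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram_model(corpus)

# "cat" follows "the" twice, more often than "mat" or "sofa".
print(predict_next_word(model, "the"))
```

A predictor like this can produce plausible word sequences without any grasp of what they mean, which is the gap between formal and functional competence that the study describes.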

Ivanova’s team argues that formal competence — creating a well-structured, grammatically correct sentence — should be differentiated from functional competence — answering the right question, communicating the correct information, or appropriately communicating. They also found that while LLMs trained on text prediction are often very good at formal skills, they still struggle with functional skills.

“We humans have the tendency to conflate language and thought,” Ivanova says. “I think that’s an important thing to keep in mind as we're trying to figure out what these models are capable of, because using that ability to be good at language, to be good at formal competence, leads many people to assume that AIs are also good at thinking — even when that's not the case.

“It's a heuristic that we developed when interacting with other humans over thousands of years of evolution, but now in some respects, that heuristic is broken,” Ivanova explains.

The distinction between formal and functional competence is also vital in rigorously testing an AI’s capabilities, Ivanova adds. Evaluations often don’t distinguish formal and functional competence, making it difficult to assess what factors are determining a model’s success or failure. The need to develop distinct tests is one of the team’s more widely accepted findings, and one that some researchers in the field have already begun to implement.

Creating a modular system

While the human tendency to conflate functional and formal competence may have hindered understanding of LLMs in the past, our human brains could also be the key to unlocking more powerful AIs.

Leveraging the tools of cognitive neuroscience while a postdoctoral associate at Massachusetts Institute of Technology (MIT), Ivanova and her team studied brain activity in neurotypical individuals via fMRI, and used behavioral assessments of individuals with brain damage to test the causal role of brain regions in language and cognition — both conducting new research and drawing on previous studies. The team’s results showed that human brains use different regions for functional and formal competence, further supporting this distinction in AIs.

“Our research shows that in the brain, there is a language processing module and separate modules for reasoning,” Ivanova says. This modularity could also serve as a blueprint for how to develop future AIs.

“Building on insights from human brains — where the language processing system is sharply distinct from the systems that support our ability to think — we argue that the language-thought distinction is conceptually important for thinking about, evaluating, and improving large language models, especially given recent efforts to imbue these models with human-like intelligence,” says Ivanova’s former advisor and study co-author Evelina Fedorenko, a professor of brain and cognitive sciences at MIT and a member of the McGovern Institute for Brain Research.

Developing AIs in the pattern of the human brain could help create more powerful systems — while also helping them dovetail more naturally with human users. “Generally, differences in a mechanism’s internal structure affect behavior,” Ivanova says. “Building a system that has a broad macroscopic organization similar to that of the human brain could help ensure that it might be more aligned with humans down the road.”

In the rapidly developing world of AI, these systems are ripe for experimentation. After the team’s preprint was published, OpenAI announced their intention to add plug-ins to their GPT models.

“That plug-in system is actually very similar to what we suggest,” Ivanova adds. “It takes a modularity approach where the language model can be an interface to another specialized module within a system.”

While the OpenAI plug-in system will include features like booking flights and ordering food, rather than cognitively inspired features, it demonstrates that “the approach has a lot of potential,” Ivanova says.

The future of AI — and what it can tell us about ourselves

While our own brains might be the key to unlocking better, more powerful AIs, these AIs might also help us better understand ourselves. “When researchers try to study the brain and cognition, it's often useful to have some smaller system where you can actually go in and poke around and see what's going on before you get to the immense complexity,” Ivanova explains.

However, since human language is unique, model or animal systems are more difficult to relate. That's where LLMs come in.

“There are lots of surprising similarities between how one would approach the study of the brain and the study of an artificial neural network” like a large language model, she adds. “They are both information processing systems that have biological or artificial neurons to perform computations.”

In many ways, the human brain is still a black box, but openly available AIs offer a unique opportunity to see a synthetic system's inner workings, modify variables, and explore these corresponding systems like never before.

“It's a really wonderful model that we have a lot of control over,” Ivanova says. “Neural networks — they are amazing.”

Along with Anna (Anya) Ivanova, Kyle Mahowald, and Evelina Fedorenko, the research team also includes Idan Blank (University of California, Los Angeles), as well as Nancy Kanwisher and Joshua Tenenbaum (Massachusetts Institute of Technology).

DOI: https://doi.org/10.1016/j.tics.2024.01.011

Researcher Acknowledgements

For helpful conversations, we thank Jacob Andreas, Alex Warstadt, Dan Roberts, Kanishka Misra, students in the 2023 UT Austin Linguistics 393 seminar, the attendees of the Harvard LangCog journal club, the attendees of the UT Austin Department of Linguistics SynSem seminar, Gary Lupyan, John Krakauer, members of the Intel Deep Learning group, Yejin Choi and her group members, Allyson Ettinger, Nathan Schneider and his group members, the UT NLL Group, attendees of the KUIS AI Talk Series at Koç University in Istanbul, Tom McCoy, attendees of the NYU Philosophy of Deep Learning conference and his group members, Sydney Levine, organizers and attendees of the ILFC seminar, and others who have engaged with our ideas. We also thank Aalok Sathe for help with document formatting and references.

Funding sources

Anna (Anya) Ivanova was supported by funds from the Quest Initiative for Intelligence. Kyle Mahowald acknowledges funding from NSF Grant 2104995. Evelina Fedorenko was supported by NIH awards R01-DC016607, R01-DC016950, and U01-NS121471 and by research funds from the Brain and Cognitive Sciences Department, McGovern Institute for Brain Research, and the Simons Foundation through the Simons Center for the Social Brain.

News Contact

Written by Selena Langner

Editor and Press Contact:

Jess Hunt-Ralston

Director of Communications

College of Sciences

Georgia Tech

Feb. 05, 2024

Scientists are always looking for better computer models that simulate the complex systems that define our world. To meet this need, a Georgia Tech workshop held Jan. 16 illustrated how new artificial intelligence (AI) research could usher in the next generation of scientific computing.

The workshop focused on applying AI technology to the optimization of complex systems. Presentations of climatological and electromagnetic simulations showed these techniques resulted in more efficient and accurate computer modeling. The workshop also advanced AI research itself, since AI models typically are not well-suited for optimization tasks.

The School of Computational Science and Engineering (CSE) and Institute for Data Engineering and Science jointly sponsored the workshop.

School of CSE Assistant Professors Peng Chen and Raphaël Pestourie led the workshop’s organizing committee and moderated the workshop’s two panel discussions. The duo also pitched their own research, highlighting the potential of scientific AI.

Chen shared his work on derivative-informed neural operators (DINOs). DINOs are a class of neural networks that use derivative information to approximate solutions of partial differential equations. The derivative enhancement results in neural operators that are more accurate and efficient.
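One way to picture the derivative enhancement is as an extra term in the training objective. The sketch below is an assumed, simplified form rather than the exact formulation from Chen's work, and every symbol in it is introduced here for illustration: q(m) stands for the true solution map for input m, f_w(m) for the neural operator with weights w, and the gradients are taken with respect to the input m.

```latex
\mathcal{L}(w) \;=\; \mathbb{E}_{m}\!\left[
    \underbrace{\left\| q(m) - f_w(m) \right\|^{2}}_{\text{match the outputs}}
    \;+\;
    \underbrace{\left\| \nabla_m q(m) - \nabla_m f_w(m) \right\|_{F}^{2}}_{\text{match the derivatives}}
\right]
```

Under this reading, each training sample supplies derivative information as well as an output value, which is consistent with the claim that the approach needs little training data.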

During his talk, Chen showed how DINOs make better predictions with reliable derivatives. These have potential to solve data assimilation problems in weather and flooding prediction. Other applications include allocating sensors for early tsunami warnings and designing new self-assembly materials.

All these models contain elements of uncertainty where data is unknown, noisy, or changes over time. Not only are DINOs a powerful tool to quantify uncertainty, but they also require little training data to become functional.

“Recent advances in AI tools have become critical in enhancing societal resilience and quality, particularly through their scientific uses in environmental, climatic, material, and energy domains,” Chen said.

“These tools are instrumental in driving innovation and efficiency in these and many other vital sectors.”

[Related: Machine Learning Key to Proposed App that Could Help Flood-prone Communities]

One challenge in studying complex systems is that it requires many simulations to generate enough data to learn from and make better predictions. But with limited data on hand, it is costly to run enough simulations to produce new data.

At the workshop, Pestourie presented his physics-enhanced deep surrogates (PEDS) as a solution to this optimization problem.

PEDS employs scientific AI to make efficient use of available data while demanding fewer computational resources. PEDS proved to be up to three times more accurate than models using neural networks while needing less training data by at least a factor of 100.

PEDS yielded these results in tests on diffusion, reaction-diffusion, and electromagnetic scattering models. PEDS performed well in these experiments geared toward physics-based applications because it combines a physics simulator with a neural network generator.

“Scientific AI makes it possible to systematically leverage models and data simultaneously,” Pestourie said. “The more adoption of scientific AI there will be by domain scientists, the more knowledge will be created for society.”

[Related: Technique Could Efficiently Solve Partial Differential Equations for Numerous Applications]

Study and development of AI applications at these scales require use of the most powerful computers available. The workshop invited speakers from national laboratories who showcased supercomputing capabilities available at their facilities. These included Oak Ridge National Laboratory, Sandia National Laboratories, and Pacific Northwest National Laboratory.

The workshop hosted Georgia Tech faculty who represented the Colleges of Computing, Design, Engineering, and Sciences. Among these were workshop co-organizers Yan Wang and Ebeneser Fanijo. Wang is a professor in the George W. Woodruff School of Mechanical Engineering, and Fanijo is an assistant professor in the School of Building Construction.

The workshop welcomed academics outside of Georgia Tech to share research occurring at their institutions. These speakers hailed from Emory University, Clemson University, and the University of California, Berkeley.

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Oct. 03, 2023

Wenjing Liao, an associate professor in the School of Mathematics, has been awarded a Department of Energy (DOE) Early Career Award for her research into how deep learning might be leveraged to make mathematical advances toward more efficient modeling techniques.

Liao was selected as one of the 93 early career scientists from across the country who are receiving a combined $135 million in DOE funding. The awards aim to support the next generation of STEM leaders, and identify early-career scientists whose research will have global impacts.

Earlier this year, Liao was also selected for a National Science Foundation (NSF) Faculty Early Career Development Program (CAREER) Award, one of the most prestigious grants that a scientist can receive early in their profession.

“Supporting America’s scientists and researchers early in their careers will ensure the U.S. remains at the forefront of scientific discovery and develops the solutions to our most pressing challenges,” said U.S. Secretary of Energy Jennifer M. Granholm, adding that the funding “will allow the recipients the freedom to find the answers to some of the most complex questions as they establish themselves as experts in their fields.”

Model simplification; complex problems

Real-world applications of computer modeling often call for large, complex data simulations, which can be time-consuming and expensive, limiting their applications. Liao’s project “Model Reduction by Deep Learning: Interpretability and Mathematical Advances” focuses on a technique called model reduction, which allows researchers to reduce the size of problems computer models must solve to smaller ones that computers can efficiently solve.

Liao notes that while traditional model-reduction methods have been successful, the technique is mostly limited to low-dimensional linear models, or those with fewer important features that the model can include. However, many problems found in nature are the opposite: high-dimensional and nonlinear. Liao hopes that by identifying the underlying nonlinear structures in natural problems, she can broaden the application of model-reduction techniques.
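For readers unfamiliar with the traditional linear case, the sketch below shows the basic idea on a toy problem: two-dimensional data that actually lies along a line can be stored with a single number per point. It uses principal component analysis as a stand-in for linear model reduction in general. It is not Liao's method, and the data and function names are invented for this illustration.

```python
import math


def leading_direction(points, iterations=100):
    """Find the mean and dominant direction of 2D points via power iteration."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]
    # Entries of the 2x2 covariance matrix of the centered data.
    cxx = sum(x * x for x, _ in centered) / n
    cxy = sum(x * y for x, y in centered) / n
    cyy = sum(y * y for _, y in centered) / n
    vx, vy = 1.0, 1.0
    for _ in range(iterations):
        wx = cxx * vx + cxy * vy
        wy = cxy * vx + cyy * vy
        norm = math.hypot(wx, wy)
        vx, vy = wx / norm, wy / norm
    return (mean_x, mean_y), (vx, vy)


def reduce_point(point, mean, direction):
    """Compress a 2D point to one coordinate along the dominant direction."""
    return (point[0] - mean[0]) * direction[0] + (point[1] - mean[1]) * direction[1]


def reconstruct_point(coord, mean, direction):
    """Expand a reduced coordinate back into an approximate 2D point."""
    return (mean[0] + coord * direction[0], mean[1] + coord * direction[1])


# Hypothetical data lying exactly on the line y = 2x + 1.
points = [(float(x), 2.0 * x + 1.0) for x in range(5)]
mean, direction = leading_direction(points)
reduced = [reduce_point(p, mean, direction) for p in points]
restored = [reconstruct_point(c, mean, direction) for c in reduced]
max_error = max(math.dist(p, r) for p, r in zip(points, restored))
print(max_error)
```

The reduction is lossless here only because the data is perfectly linear. When the underlying structure is curved, a single straight direction cannot capture it, which is the limitation that motivates looking for nonlinear structures with deep learning.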

To do so, her research will focus on three key questions. First, she will investigate how to leverage deep neural networks to extract low-dimensional nonlinear structures in data sets. Next, Liao will investigate how to use the nonlinear structures in model reduction. Finally, in order to better harness deep learning, Liao aims to develop new deep learning-based model reduction methods.

“This project has the potential to drive significant advances in scientific machine learning,” Liao says in her abstract. “The proposed model-reduction methods can be used to analyze large datasets and simulate complex phenomena in physics, biology, and engineering.”

News Contact

Written by Selena Langner
