Dec. 09, 2025

When we check the weather forecast, that information comes from satellites. When we FaceTime a friend, that call could come via satellites. From cellphone networks to national security systems, satellites are vital to our connected globe. Yet regulating how satellites function across borders is almost as complicated as the technology that launches them into space. Researchers in Georgia Tech’s Space Research Institute are shaping how satellites operate, both scientifically and politically.

Nov. 20, 2025

Georgia Institute of Technology has been ranked 7th in the world in the 2026 Times Higher Education Interdisciplinary Science Rankings, in association with Schmidt Science Fellows. This designation underscores Georgia Tech’s leadership in research that solves global challenges.

“Interdisciplinary research is at the heart of Georgia Tech’s mission,” said Tim Lieuwen, executive vice president for Research. “Our faculty, students, and research teams work across disciplines to create transformative solutions in areas such as healthcare, energy, advanced manufacturing, and artificial intelligence. This ranking reflects the strength of our collaborative culture and the impact of our research on society.”

As a top R1 research university, Georgia Tech is shaping the future of basic and applied research by pursuing inventive solutions to the world’s most pressing problems. Whether discovering cancer treatments or developing new methods to power our communities, work at the Institute focuses on improving the human condition.

Teams from all seven Georgia Tech colleges, 11 interdisciplinary research institutes, the Georgia Tech Research Institute, Enterprise Innovation Institute, and hundreds of research labs and centers work together to transform ideas into real results.

News Contact

Angela Ayers

Nov. 18, 2025

Viral videos abound of humanoid robots performing amazing feats of acrobatics and dance, but videos of a humanoid robot performing a common household task or traversing a new multi-terrain environment easily, and without human control, are much rarer. This is because training humanoid robots to perform these seemingly simple functions requires simulation training data, and existing data often lack the complex dynamics and degrees of freedom of motion inherent in humanoid robots.

To achieve better training outcomes with faster deployment results, Fukang Liu and Feiyang Wu, graduate students under Professor Ye Zhao of the Woodruff School of Mechanical Engineering, a faculty member of the Institute for Robotics and Intelligent Machines (IRIM), have published a pair of papers in IEEE Robotics and Automation Letters. The work is a collaboration with three other IRIM-affiliated faculty members, Profs. Danfei Xu, Yue Chen, and Sehoon Ha, as well as Prof. Anqi Wu from the School of Computational Science and Engineering.

To enable humanoid robots to perform complex whole-body movements in the real world, Fukang led a team that developed Opt2Skill, a hybrid robot learning framework that combines model-based trajectory optimization with reinforcement learning (RL). The framework integrates dynamics and contacts into the trajectory planning process and generates high-quality, dynamically feasible datasets, which result in more reliable motion learning and improved position tracking and task success rates. This approach shows a promising way to improve the performance and generalization of humanoid RL policies using dynamically feasible motion datasets. Incorporating torque data also improved motion stability and force tracking in contact-rich scenarios, demonstrating that torque information plays a key role in learning physically consistent humanoid behaviors.

“While other datasets, such as inverse kinematics or human demonstrations, are valuable, they don’t always capture the dynamics needed for reliable whole-body humanoid control,” said Fukang Liu. “With our Opt2Skill framework, we combine trajectory optimization with reinforcement learning to generate and leverage high-quality, dynamically feasible motion data. This integrated approach gives robots a richer and more physically grounded training process, enabling them to learn these complex tasks more reliably and safely for real-world deployment.”

In another line of humanoid research, Feiyang established a one-stage training framework that allows humanoid robots to learn locomotion more efficiently and with greater environmental adaptability. Their framework, Learn-to-Teach (L2T), unlike traditional two-stage “teacher-student” approaches, which first train an expert in simulation and then retrain a limited-perception student, teaches both simultaneously, sharing knowledge and experiences in real time. The result of this two-way training is a 50% reduction in training data and time, while maintaining or surpassing state-of-the-art performance in humanoid locomotion. The lightweight policy learned through this process enables the lab’s humanoid robot to traverse more than a dozen real-world terrains—grass, gravel, sand, stairs, and slopes—without retraining or depth sensors.

“By training an expert and a deployable controller together, we can turn rich simulation feedback into a lightweight policy that runs on real hardware, letting our humanoid adapt to uneven, unstructured terrain with far less data and hand-tuning than traditional methods,” said Feiyang Wu.

By applying these training processes, the team hopes to speed the development of deployable humanoid robots for home use, manufacturing, defense, and search and rescue assistance in dangerous environments. These methods also support advances in embodied intelligence, enabling robots to learn richer, more context-aware behaviors. Additionally, the training data process can be applied to research that improves the functionality and adaptability of human assistive devices for medical and therapeutic uses.

“As humanoid robots move from controlled labs into messy, unpredictable real-world environments, the key is developing embodied intelligence, the ability for robots to sense, adapt, and act through their physical bodies,” said Professor Ye Zhao. “The innovations from our students push us closer to robots that can learn robust skills, navigate diverse terrains, and ultimately operate safely and reliably alongside people.”

Author - Christa M. Ernst

Citations

Liu F, Gu Z, Cai Y, Zhou Z, Jung H, Jang J, Zhao S, Ha S, Chen Y, Xu D, Zhao Y. Opt2skill: Imitating dynamically-feasible whole-body trajectories for versatile humanoid loco-manipulation. IEEE Robotics and Automation Letters. 2025 Oct 13.

Wu F, Nal X, Jang J, Zhu W, Gu Z, Wu A, Zhao Y. Learn to teach: Sample-efficient privileged learning for humanoid locomotion over real-world uneven terrain. IEEE Robotics and Automation Letters. 2025 Jul 23.

Sep. 18, 2025

Maintaining balance while walking may seem automatic — until suddenly it isn’t. Gait impairment, or difficulty with walking, is a major liability for stroke and Parkinson’s patients. Not only do gait issues slow a person down, but they are also one of the top causes of falls. And solutions are often limited to time-intensive and costly physical therapy.

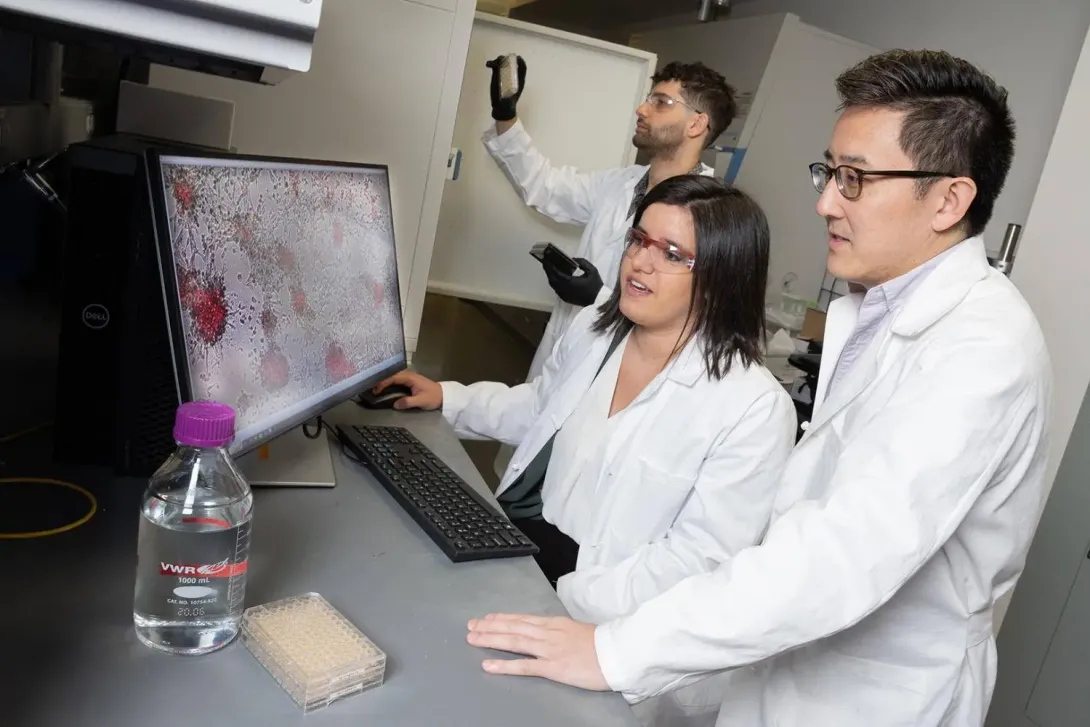

A new wearable electronic device that can be inserted inside any shoe may be able to address this challenge. The device, developed by Georgia Tech researchers, is made of more than 170 thin, flexible sensors that measure foot pressure — a key metric for determining whether someone is off-balance. The sensor collects pressure data, which the researchers could eventually use to predict which changes lead to falls.
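As an illustration of how readings from many pressure sensors can be condensed into a single balance-related number, here is a minimal sketch that computes a center of pressure, a commonly used summary of plantar pressure. The article does not describe how the researchers process their data, so the function name, the four-sensor layout, the coordinates, and the pressure values below are all hypothetical.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) sensor position on the insole, in cm


def center_of_pressure(
    positions: Sequence[Point], pressures: Sequence[float]
) -> Optional[Point]:
    """Return the pressure-weighted average sensor position.

    Returns None when there is no load at all (for example, the foot
    is off the ground), because the average is undefined in that case.
    """
    if len(positions) != len(pressures):
        raise ValueError("need exactly one pressure reading per sensor")
    total = sum(pressures)
    if total <= 0:
        return None
    x = sum(p * pos[0] for pos, p in zip(positions, pressures)) / total
    y = sum(p * pos[1] for pos, p in zip(positions, pressures)) / total
    return (x, y)


# Hypothetical layout: heel, two forefoot sensors, and toe.
positions = [(0.0, 0.0), (-2.0, 15.0), (2.0, 15.0), (0.0, 22.0)]

# Hypothetical readings (arbitrary pressure units).
even_load = center_of_pressure(positions, [10.0, 10.0, 10.0, 10.0])
heel_heavy = center_of_pressure(positions, [40.0, 5.0, 5.0, 0.0])
```

In this toy layout, shifting weight toward the heel moves the computed point toward the heel sensor. A real insole with more than 170 sensors would use its own sensor map and calibration.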

The researchers presented their work in the paper, “Flexible Smart Insole and Plantar Pressure Monitoring Using Screen-Printed Nanomaterials and Piezoresistive Sensors.” It was the cover paper in the August edition of ACS Applied Materials & Interfaces.

Pressure Points

Smart footwear isn’t new, but making it both functional and affordable has been nearly impossible. W. Hong Yeo’s lab has made its reputation on creating malleable medical devices. The researchers use screen-printing, a common commercial practice in electronics manufacturing, to print sensors. They realized they could apply this printing technique to address walking difficulties.

“Screen-printing is advantageous for developing medical devices because it's low-cost and scalable,” said Yeo, the Peterson Professor and Harris Saunders Jr. Professor in the George W. Woodruff School of Mechanical Engineering. “So, when it comes to thinking about commercialization and mass production, screen-printing is a really good platform because it's already been used in the electronics industry.”

Making the device accessible to the everyday user was paramount for Yeo’s team. A key innovation was making sure the wearable is thin enough to be comfortable for the wearer and easy to integrate with other assistive technologies. The device uses Bluetooth, enabling a smartphone to collect data and offer the future possibility of integrating with existing health monitoring applications.

Possibilities for real-world adaptation are promising, thanks to these innovations. Lightweight and small, the wearable could be paired with robotics devices to help stroke and Parkinson’s patients and the elderly walk. The high number of sensors could make it easier for researchers to apply a machine learning algorithm that could predict falls. The device could even enable professional athletes to analyze their performance.

Regardless of how the device is used, Yeo intends to keep its cost under $100. So far, with funding from the National Science Foundation, the researchers have tested the device on healthy subjects. They hope to expand the study to people with gait impairments and, eventually, make the device commercially available.

“I'm trying to bridge the gap between the lack of available devices in hospitals or medical practices and the lab-scale devices,” Yeo said. “We want these devices to be ready now — not in 10 years.”

With its low-cost, wireless design and potential for real-time feedback, this smart insole could transform how we monitor and manage walking difficulties — not just in clinical settings, but in everyday life.

News Contact

Tess Malone, Senior Research Writer/Editor

tess.malone@gatech.edu

Sep. 12, 2025

Robotic systems are currently deployed in sectors ranging from industrial manufacturing to healthcare to agriculture, adding benefits in production times, patient outcomes, and yields. This trend toward greater automation and human-robot collaborative work environments, while providing great opportunities, also highlights a critical gap in cybersecurity research. These systems rely on network communication to coordinate movement, so a security breach could cause a robot to act in ways that endanger people and property.

Current cybersecurity approaches have been shown to be insufficient in blocking sophisticated attacks aimed at networked robotic motion-control systems.

To address this gap, Jun Ueda, Professor and ASME Fellow in the George W. Woodruff School of Mechanical Engineering at Georgia Tech, has been awarded approximately $700,000 by the National Science Foundation to establish methods to enhance cybersecurity for networked motion-control systems. The research will focus on the unique geometric vulnerabilities in networked robotic systems and on stealthy false data injection attacks that exploit geometric coordinate transformations to maintain mathematical consistency in robotic dynamics while altering physical-world behavior.

Using an interdisciplinary approach that will combine research methodology from system dynamics, control, communication, differential geometry and cybersecurity engineering, Ueda hopes to establish new mathematical tools for analyzing robotic security and develop safer networked robotic systems that successfully repel system intrusion, manipulation attacks, and attacks that mislead operators.

This article refers to NSF Program Foundational Research in Robotics (FRR) Award # 2112793

A Geometric Approach for Generalized Encrypted Control of Networked Dynamical Systems

Aug. 21, 2025

A new study explains how tiny water bugs use fan-like propellers to zip across streams at speeds up to 120 body lengths per second. The researchers then created a similar fan structure and used it to propel and maneuver an insect-sized robot.

The discovery offers new possibilities for designing small machines that could operate during floods or other challenging situations.

Instead of relying on their muscles, the insects about the size of a grain of rice use the water’s surface tension and elastic forces to morph the ribbon-shaped fans on the end of their legs to slice the water surface and change directions.

Once they understood the mechanism, the team built a self-deployable, one-milligram fan and installed it into an insect-sized robot capable of accelerating, braking, and maneuvering right and left.

The study is featured on the cover of the journal Science.

Read the entire story and see the robot in action on the College of Engineering website.

News Contact

Jason Maderer

College of Engineering

maderer@gatech.edu

Aug. 08, 2025

Research into tailored assistive and rehabilitative devices has advanced recently, but the goal remains out of reach due to the sparsity of data on how humans learn complex balance tasks. To address this gap, an interdisciplinary team of faculty from Florida State University and Georgia Tech has been awarded approximately $798,000 by the NSF to launch a study to better understand human motor learning and human-robot interaction dynamics during the learning process.

The project is led by principal investigator Taylor Higgins, assistant professor in the FAMU-FSU Department of Mechanical Engineering, with co-principal investigators Shreyas Kousik, assistant professor in Georgia Tech’s George W. Woodruff School of Mechanical Engineering, and Brady DeCouto, assistant professor in FSU’s Anne Spencer Daves College of Education, Health, and Human Sciences. The research will use participants’ acquisition of unicycle-riding skill to better understand human motor learning in tasks requiring balance and complex movement in space. Although it might sound a bit odd, most people don’t know how to ride a unicycle, and riding one requires balance, so the data will cover the learning process from novice to skilled across the participant pool.

Using data acquired from human participants, the team will develop a “robotics assistive unicycle” that will be used to train the next pool of novice unicycle riders. The aim is to gauge whether, and how rapidly, human motor learning outcomes improve with the assistive unicycle. The participants who engage with the robotic unicycle will also give valuable insight into developing effective human-robot collaboration strategies.

The fact that deciding to get on a unicycle requires a bit of bravery might not be great for the participants, but it’s great for the research team. The project will also allow exploration into the interconnection between anxiety and human motor learning to discover possible alleviation strategies, thus increasing the likelihood of positive outcomes for future patients and consumers of these devices.

Author - Christa M. Ernst

This article refers to NSF Award # 2449160

Jul. 16, 2025

The National Science Foundation (NSF) has awarded Georgia Tech and its partners $20 million to build a powerful new supercomputer that will use artificial intelligence (AI) to accelerate scientific breakthroughs.

Called Nexus, the system will be one of the most advanced AI-focused research tools in the U.S. Nexus will help scientists tackle urgent challenges such as developing new medicines, advancing clean energy, understanding how the brain works, and driving manufacturing innovations.

“Georgia Tech is proud to be one of the nation’s leading sources of the AI talent and technologies that are powering a revolution in our economy,” said Ángel Cabrera, president of Georgia Tech. “It’s fitting we’ve been selected to host this new supercomputer, which will support a new wave of AI-centered innovation across the nation. We’re grateful to the NSF, and we are excited to get to work.”

Designed from the ground up for AI, Nexus will give researchers across the country access to advanced computing tools through a simple, user-friendly interface. It will support work in many fields, including climate science, health, aerospace, and robotics.

“The Nexus system's novel approach combining support for persistent scientific services with more traditional high-performance computing will enable new science and AI workflows that will accelerate the time to scientific discovery,” said Katie Antypas, National Science Foundation director of the Office of Advanced Cyberinfrastructure. “We look forward to adding Nexus to NSF's portfolio of advanced computing capabilities for the research community.”

Nexus Supercomputer — In Simple Terms

- Built for the future of science: Nexus is designed to power the most demanding AI research — from curing diseases, to understanding how the brain works, to engineering quantum materials.

- Blazing fast: Nexus can crank out over 400 quadrillion operations per second — the equivalent of everyone in the world continuously performing 50 million calculations every second.

- Massive brain plus memory: Nexus combines the power of AI and high-performance computing with 330 trillion bytes of memory to handle complex problems and giant datasets.

- Storage: Nexus will feature 10 quadrillion bytes of flash storage, equivalent to about 10 billion reams of paper. Stacked, that’s a column reaching 500,000 km high — enough to stretch from Earth to the moon and a third of the way back.

- Supercharged connections: Nexus will have lightning-fast connections to move data almost instantaneously, so researchers do not waste time waiting.

- Open to U.S. researchers: Scientists from any U.S. institution can apply to use Nexus.
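The comparisons in the list above can be checked with some back-of-the-envelope arithmetic. The sketch below uses the figures quoted in the list. The ream thickness (about 5 cm), the 500 sheets per ream, and the average Earth-moon distance (about 384,400 km) are assumptions that the article does not state.

```python
# Figures quoted in the list above.
OPS_PER_SECOND = 400 * 10**15        # 400 quadrillion operations per second
CALCS_PER_PERSON = 50 * 10**6        # 50 million calculations per person per second
FLASH_BYTES = 10 * 10**15            # 10 quadrillion bytes of flash storage
REAMS = 10 * 10**9                   # 10 billion reams of paper

# Assumptions, not taken from the article.
REAM_THICKNESS_M = 0.05              # one ream is roughly 5 cm thick
SHEETS_PER_REAM = 500
EARTH_MOON_KM = 384_400              # average Earth-moon distance

# How many people would need to compute in parallel to match Nexus?
people_needed = OPS_PER_SECOND // CALCS_PER_PERSON

# How much data does each ream, and each sheet, stand for?
bytes_per_ream = FLASH_BYTES // REAMS
bytes_per_sheet = bytes_per_ream // SHEETS_PER_REAM

# How tall is the stack, and how far back from the moon does it reach?
stack_height_km = REAMS * REAM_THICKNESS_M / 1000
fraction_of_return_trip = (stack_height_km - EARTH_MOON_KM) / EARTH_MOON_KM

print(f"people needed: {people_needed:,}")
print(f"bytes per ream: {bytes_per_ream:,} (about {bytes_per_sheet:,} per sheet)")
print(f"stack height: {stack_height_km:,.0f} km")
print(f"fraction of the way back: {fraction_of_return_trip:.2f}")
```

Under these assumptions, the speed comparison corresponds to about 8 billion people, roughly the world population, and the stack reaches the moon and about 30% of the way back, consistent with "a third of the way back."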

Why Now?

AI is rapidly changing how science is investigated. Researchers use AI to analyze massive datasets, model complex systems, and test ideas faster than ever before. But these tools require powerful computing resources that — until now — have been inaccessible to many institutions.

This is where Nexus comes in. It will make state-of-the-art AI infrastructure available to scientists all across the country, not just those at top tech hubs.

“This supercomputer will help level the playing field,” said Suresh Marru, principal investigator of the Nexus project and director of Georgia Tech’s new Center for AI in Science and Engineering (ARTISAN). “It’s designed to make powerful AI tools easier to use and available to more researchers in more places.”

Srinivas Aluru, Regents’ Professor and senior associate dean in the College of Computing, said, “With Nexus, Georgia Tech joins the league of academic supercomputing centers. This is the culmination of years of planning, including building the state-of-the-art CODA data center and Nexus’ precursor supercomputer project, HIVE.”

Like Nexus, HIVE was supported by NSF funding. Both Nexus and HIVE are supported by a partnership between Georgia Tech’s research and information technology units.

A National Collaboration

Georgia Tech is building Nexus in partnership with the National Center for Supercomputing Applications at the University of Illinois Urbana-Champaign, which runs several of the country’s top academic supercomputers. The two institutions will link their systems through a new high-speed network, creating a national research infrastructure.

“Nexus is more than a supercomputer — it’s a symbol of what’s possible when leading institutions work together to advance science,” said Charles Isbell, chancellor of the University of Illinois and former dean of Georgia Tech’s College of Computing. “I'm proud that my two academic homes have partnered on this project that will move science, and society, forward.”

What’s Next

Georgia Tech will begin building Nexus this year, with its expected completion in spring 2026. Once Nexus is finished, researchers can apply for access through an NSF review process. Georgia Tech will manage the system, provide support, and reserve up to 10% of its capacity for its own campus research.

“This is a big step for Georgia Tech and for the scientific community,” said Vivek Sarkar, the John P. Imlay Dean of Computing. “Nexus will help researchers make faster progress on today’s toughest problems — and open the door to discoveries we haven’t even imagined yet.”

News Contact

Siobhan Rodriguez

Senior Media Relations Representative

Institute Communications

Jul. 01, 2025

Georgia Tech has launched two new Interdisciplinary Research Institutes (IRIs): the Institute for Neuroscience, Neurotechnology, and Society (INNS) and the Space Research Institute (SRI).

The new institutes focus on expanding breakthroughs in neuroscience and space, two areas where research and federal funding are anticipated to remain strong. Both fields are poised to influence research in everything from healthcare and ethics to exploration and innovation. This expansion of Georgia Tech’s research enterprise represents the Institute’s commitment to research that will shape the future.

“At Georgia Tech, innovation flourishes where disciplines converge. With the launch of the Space Research Institute and the Institute for Neuroscience, Neurotechnology, and Society, we’re uniting experts across fields to take on some of humanity’s most profound questions. Even as we are tightening our belts in anticipation of potential federal R&D budget actions, we also are investing in areas where non-federal funding sources will grow and where big impacts are possible,” said Executive Vice President for Research Tim Lieuwen. "These institutes are about advancing knowledge — and using it to improve lives, inspire future generations, and help shape a better future for us all.”

Both INNS and SRI grew out of faculty-led initiatives shaped by a strategic planning process and campus-wide collaboration. Their evolution into formal institutes underscores the strength and momentum of Georgia Tech’s interdisciplinary research enterprise.

Georgia Tech’s 11 IRIs support collaboration between researchers and students across the Institute’s seven colleges, the Georgia Tech Research Institute (GTRI), national laboratories, and corporate entities to tackle critical topics of strategic significance for the Institute as well as for local, state, national, and international communities.

"IRIs bring together Georgia Tech researchers making them more competitive and successful in solving research challenges, especially across disciplinary boundaries,” said Julia Kubanek, vice president of interdisciplinary research. “We're making these new investments in neuro- and space-related fields to publicly showcase impactful discoveries and developments led by Georgia Tech faculty, attract new partners and collaborators, and pursue alternative funding strategies at a time of federal funding uncertainty."

The Space Research Institute

The Space Research Institute will connect faculty, students, and staff who share a passion for space exploration and discovery. They will investigate a wide variety of space-related topics, exploring how space influences and intersects with the human experience. The SRI fosters a collaborative community including scientific, engineering, cultural, and commercial research that pursues broadly integrated, innovative projects.

SRI is the hub for all things space-related at Georgia Tech. It connects the Institute’s schools, colleges, research institutes, and labs to lead conversations about space in the state of Georgia and the world. Working in partnership with academics, business partners, philanthropists, students, and governments, Georgia Tech is committed to staying at the forefront of space-related innovation.

The SRI will build upon the collaborative work of the Space Research Initiative, the first step in formalizing Georgia Tech’s broad interdisciplinary space research community. The Initiative brought together researchers from across campus and was guided by input from Georgia Tech stakeholders and external partners. It was led by an executive committee including Glenn Lightsey, John W. Young Chair Professor in the Daniel Guggenheim School of Aerospace Engineering; Mariel Borowitz, associate professor in the Sam Nunn School of International Affairs; and Jennifer Glass, associate professor in the School of Earth and Atmospheric Sciences. Beginning July 1, W. Jud Ready, a principal research engineer in GTRI’s Electro-Optical Systems Laboratory, will serve as the inaugural executive director of the Space Research Institute.

To receive the latest updates on space research and innovation at Georgia Tech, join the SRI mailing list.

The Institute for Neuroscience, Neurotechnology, and Society

The Institute for Neuroscience, Neurotechnology, and Society (INNS) is dedicated to advancing neuroscience and neurotechnology to improve society through discovery, innovation, and engagement. INNS brings together researchers from neuroscience, engineering, computing, ethics, public policy, and the humanities to explore the brain and nervous system while addressing the societal and ethical dimensions of neuro-related research.

INNS builds on a foundation established over a decade ago, which first led to the GT-Neuro Initiative and later evolved into the Neuro Next Initiative. Over the past two years, this effort has culminated in the development of a comprehensive plan for an IRI, guided by an executive committee composed of faculty and staff from across Georgia Tech. The committee included Simon Sponberg, Dunn Family Associate Professor in the School of Physics and the School of Biological Sciences; Christopher Rozell, Julian T. Hightower Chaired Professor in the School of Electrical and Computer Engineering; Jennifer Singh, associate professor in the School of History and Sociology; and Sarah Peterson, Neuro Next Initiative program manager. Their leadership shaped the vision for a research community both scientifically ambitious and socially responsive.

INNS will serve as a dynamic hub for interdisciplinary collaboration across the full spectrum of brain-related research — from biological foundations to behavior and cognition, and from fundamental research to medical innovations that advance human flourishing. Research areas will encompass the foundations of human intelligence and movement, bio-inspired design and neurotechnology development, and the ethical dimensions of a neuro-connected future.

By integrating technical innovation with human-centered inquiry, INNS is committed to ensuring that advances in neuroscience and neurotechnology are developed and applied ethically and responsibly. Through fostering innovation, cultivating interdisciplinary expertise, and engaging with the public, the institute seeks to shape a future where advancements in neuroscience and neurotechnology serve the greater good. INNS also aims to deepen Georgia Tech’s collaborations with clinical, academic, and industry partners, creating new pathways for translational research and real-world impact.

An internal search for INNS’s inaugural executive director is in the final stages, with an announcement expected soon.

Join our mailing list to receive the latest updates on everything neuro at Georgia Tech.

News Contact

Laurie Haigh

Research Communications

![Hong Yeo holds shoe insert.](/sites/default/files/styles/wide/public/news/2025-12/DSC_0589.jpeg.webp?itok=D__uUB8q)

Hong Yeo holds the wearable electronic device made of more than 170 thin, flexible sensors that measure foot pressure, a key metric for determining whether someone is off-balance. [Photos by Joya Chapman]