Oct. 20, 2023

In keeping with a strong strategic focus on AI for the 2023-2024 Academic Year, the Institute for Data Engineering and Science (IDEaS) has announced the winners of its 2023 Seed Grants for Thematic Events in AI and Cyberinfrastructure Resource Grants to support research in AI requiring secure, high-performance computing capabilities. Thematic event award recipients will receive $8K to support their proposed workshop or series, and Cyberinfrastructure winners will receive research support consisting of 600,000 CPU hours on the AMD Genoa Server as well as 36,000 hours of NVIDIA DGX H-100 GPU server usage and 172 TB of secure storage.

Congratulations to the award winners listed below!

Thematic Events in AI Awards

Proposed Workshop: “Foundation of scientific AI (Artificial Intelligence) for Optimization of Complex Systems”

Primary PI: Peng Chen, Assistant Professor, School of Computational Science and Engineering

Proposed Series: “Guest Lecture Seminar Series on Generative Art and Music”

Primary PI: Gil Weinberg, Professor, School of Music

Cyberinfrastructure Resource Awards

Title: Human-in-the-Loop Musical Audio Source Separation

Topics: Music Informatics, Machine Learning

Primary PI: Alexander Lerch, Associate Professor, School of Music

Co-PIs: Karn Watcharasupat, Music Informatics Group | Yiwei Ding, Music Informatics Group | Pavan Seshadri, Music Informatics Group

Title: Towards A Multi-Species, Multi-Region Foundation Model for Neuroscience

Topics: Data-Centric AI, Neuroscience

Primary PI: Eva Dyer, Assistant Professor, Biomedical Engineering

Title: Multi-point Optimization for Building Sustainable Deep Learning Infrastructure

Topics: Energy Efficient Computing, Deep Learning, AI Systems Optimization

Primary PI: Divya Mahajan, Assistant Professor, School of Electrical and Computer Engineering, School of Computer Science

Title: Neutrons for Precision Tests of the Standard Model

Topics: Nuclear/Particle Physics, Computational Physics

Primary PI: Aaron Jezghani, OIT-PACE

Title: Continual Pretraining for Egocentric Video

Primary PI: Zsolt Kira, Assistant Professor, School of Interactive Computing

Co-PI: Shaunak Halbe, Ph.D. Student, Machine Learning

Title: Training More Trustworthy LLMs for Scientific Discovery via Debating and Tool Use

Topics: Trustworthy AI, Large-Language Models, Multi-Agent Systems, AI Optimization

Primary PIs: Chao Zhang, School of Computational Science and Engineering & Bo Dai, College of Computing

Title: Scaling up Foundation AI-based Protein Function Prediction with IDEaS Cyberinfrastructure

Topics: AI, Biology

Primary PI: Yunan Luo, Assistant Professor, School of Computational Science and Engineering

- Christa M. Ernst

News Contact

Christa M. Ernst - Research Communications Program Manager

Robotics | Data Engineering | Neuroengineering

Oct. 20, 2023

The Institute for Data Engineering and Science, in conjunction with several Interdisciplinary Research Institutes (IRIs) at Georgia Tech, has awarded seven teams of researchers from across the Institute a total of $105,000 in seed funding geared to better position Georgia Tech to perform world-class interdisciplinary research in data science and artificial intelligence development and deployment.

The goals of the funded proposals include identifying prominent emerging research directions on the topic of AI, shaping IDEaS' future strategy in the initiative area, building an inclusive and active community of Georgia Tech researchers in the field that potentially includes external collaborators, and identifying and preparing groundwork for competing in large-scale grant opportunities in AI and its use in other research fields.

Below are the 2023 recipients and the co-sponsoring IRIs:

Proposal Title: “AI for Chemical and Materials Discovery” + “AI in Microscopy Thrust”

PI: Victor Fung, CSE | Vida Jamali, ChBE | Pan Li, ECE | Amirali Aghazadeh Mohandesi, ECE

Award: $20k (co-sponsored by IMat)

Overview: The goal of this initiative is to bring together expertise in machine learning/AI, high-throughput computing, computational chemistry, and experimental materials synthesis and characterization to accelerate material discovery. Computational chemistry and materials simulations are critical for developing new materials and understanding their behavior and performance, as well as aiding in experimental synthesis and characterization. Machine learning and AI play a pivotal role in accelerating material discovery through data-driven surrogate models, as well as high-throughput and automated synthesis and characterization.

Proposal Title: “AI + Quantum Materials”

PI: Zhigang Jiang, Physics | Martin Mourigal, Physics

Award: $20k (co-sponsored by IMat)

Overview: Zhigang Jiang is currently leading an initiative within IMat entitled “Quantum responses of topological and magnetic matter” to nurture multi-PI projects. By crosscutting the IMat initiative with this IDEaS call, we propose to support and feature the applications of AI on predictive and inverse problems in quantum materials. Understanding the limits and capabilities of AI methodologies is a huge barrier to entry for Physics students, because researchers in that field already need heavy training in quantum mechanics, low-temperature physics, and chemical synthesis. Our most pressing need is for our AI-inclined quantum materials students to find a broader community to engage with and learn from. This is the primary problem we aim to solve with this initiative.

PI: Jeffrey Skolnick, Bio Sci | Chao Zhang, CSE

Proposal Title: “Harnessing Large Language Models for Targeted and Effective Small Molecule Library Design in Challenging Disease Treatment”

Award: $15k (co-sponsored by IBB)

Overview: Our objective is to use large language models (LLMs) in conjunction with AI algorithms to identify effective driver proteins, develop screening algorithms that target appropriate binding sites while avoiding deleterious ones, and consider bioavailability and drug resistance factors. LLMs can rapidly analyze vast amounts of information from literature and bioinformatics tools, generating hypotheses and suggesting molecular modifications. By bridging multiple disciplines such as biology, chemistry, and pharmacology, LLMs can provide valuable insights from diverse sources, assisting researchers in making informed decisions. Our aim is to establish a first-in-class, LLM-driven research initiative at Georgia Tech that focuses on designing highly effective small molecule libraries to treat challenging diseases. This initiative will go beyond existing AI approaches to molecule generation, which often only consider simple properties like hydrogen bonding or rely on a limited set of proteins to train the LLM and therefore lack generalizability. As a result, this initiative is expected to consistently produce safe and effective disease-specific molecules.

PI: Yiyi He, School of City & Regional Planning | Jun Rentschler, World Bank

Proposal Title: “AI for Climate Resilient Energy Systems”

Award: $15k (co-sponsored by SEI)

Overview: We are committed to building a team of interdisciplinary and transdisciplinary researchers and practitioners with a shared goal: developing a new framework that models future climatic variations and the interconnected and interdependent energy infrastructure network as complex systems. To achieve this, we will harness the power of cutting-edge climate model outputs, sourced from the Coupled Model Intercomparison Project (CMIP), and integrate approaches from Machine Learning and Deep Learning models. This strategic amalgamation of data and techniques will enable us to gain profound insights into the intricate web of future climate-change-induced extreme weather conditions and their immediate and long-term ramifications on energy infrastructure networks. The seed grant from IDEaS stands as the crucial catalyst for kick-starting this ambitious endeavor. It will empower us to form a collaborative and inclusive community of GT researchers hailing from various domains, including City and Regional Planning, Earth and Atmospheric Science, Computer Science and Electrical Engineering, Civil and Environmental Engineering, etc. By drawing upon the wealth of expertise and perspectives from these diverse fields, we aim to foster an environment where innovative ideas and solutions can flourish. In addition to our internal team, we also have plans to collaborate with external partners, including the World Bank, the Stanford Doerr School of Sustainability, and the Berkeley AI Research Initiative, who share our vision of addressing the complex challenges at the intersection of climate and energy infrastructure.

PI: Jian Luo, Civil & Environmental Eng | Yi Deng, EAS

Proposal Title: “Physics-informed Deep Learning for Real-time Forecasting of Urban Flooding”

Award: $15k (co-sponsored by BBISS)

Overview: Our research team envisions a significant trend in the exploration of AI applications for urban flooding hazard forecasting. Georgia Tech possesses a wealth of interdisciplinary expertise, positioning us to make a pioneering contribution to this burgeoning field. We aim to harness the combined strengths of Georgia Tech's experts in civil and environmental engineering, atmospheric and climate science, and data science to chart new territory in this emerging trend. Furthermore, we envision the potential extension of our research efforts towards the development of a real-time hazard forecasting application. This application would incorporate adaptation and mitigation strategies in collaboration with local government agencies, emergency management departments, and researchers in computer engineering and social science studies. Such a holistic approach would address the multifaceted challenges posed by urban flooding. To the best of our knowledge, Georgia Tech currently lacks a dedicated team focused on the fusion of AI and climate/flood research, making this initiative even more pioneering and impactful.

Proposal Title: “AI for Recycling and Circular Economy”

PI: Valerie Thomas, ISyE and PubPoly | Steven Balakirsky, GTRI

Award: $15k (co-sponsored by BBISS)

Overview: Most asset management and recycling use technology that has not changed for decades. The use of bar codes and RFID has provided some benefits, such as for retail returns management. Automated sorting of recyclables using magnets, eddy currents, and laser plastics identification has improved municipal recycling. Yet the overall field has been challenged by not-quite-easy-enough identification of products in use or at end of life. AI approaches, including computer vision, data fusion, and machine learning, provide the additional capability to make asset management and product recycling easy enough to be nearly autonomous. Georgia Tech is well suited to lead in the development of this application. With its strength in machine learning, robotics, sustainable business, supply chains and logistics, and technology commercialization, Georgia Tech has the multi-disciplinary capability to make this concept a reality, in research and in commercial application.

Proposal Title: “Data-Driven Platform for Transforming Subjective Assessment into Objective Processes for Artistic Human Performance and Wellness”

PI: Milka Trajkova, Research Scientist/School of Literature, Media, Communication | Brian Magerko, School of Literature, Media, Communication

Award: $15k (co-sponsored by IPaT)

Overview: Artistic human movement at large stands at the precipice of a data-driven renaissance. By leveraging novel tools, we can usher in a transparent, data-driven, and accessible training environment. The potential ramifications extend beyond dance. As sports analytics have reshaped our understanding of athletic prowess, a similar approach to dance could redefine our comprehension of human movement, with implications spanning healthcare, construction, rehabilitation, and active aging. Georgia Tech, with its prowess in AI, HCI, and biomechanics, is primed to lead this exploration. To actualize this vision, we propose the following research questions with ballet as a prime example of one of the most complex types of artistic movements: 1) What kinds of data (real-time kinematic, kinetic, biomechanical, etc.) captured through accessible off-the-shelf technologies are essential for effective AI assessment in ballet education for young adults?; 2) How can we design and develop an end-to-end ML architecture that assesses artistic and technical performance?; 3) What feedback elements (combination of timing, communication mode, feedback nature, polarity, visualization) are most effective for AI-based dance assessment?; and 4) How does AI-assisted feedback enhance physical wellness, artistic performance, and the learning process in young athletes compared to traditional methods?

- Christa M. Ernst

News Contact

Christa M. Ernst | Research Communications Program Manager

Robotics | Data Engineering | Neuroengineering

christa.ernst@research.gatech.edu

Oct. 19, 2023

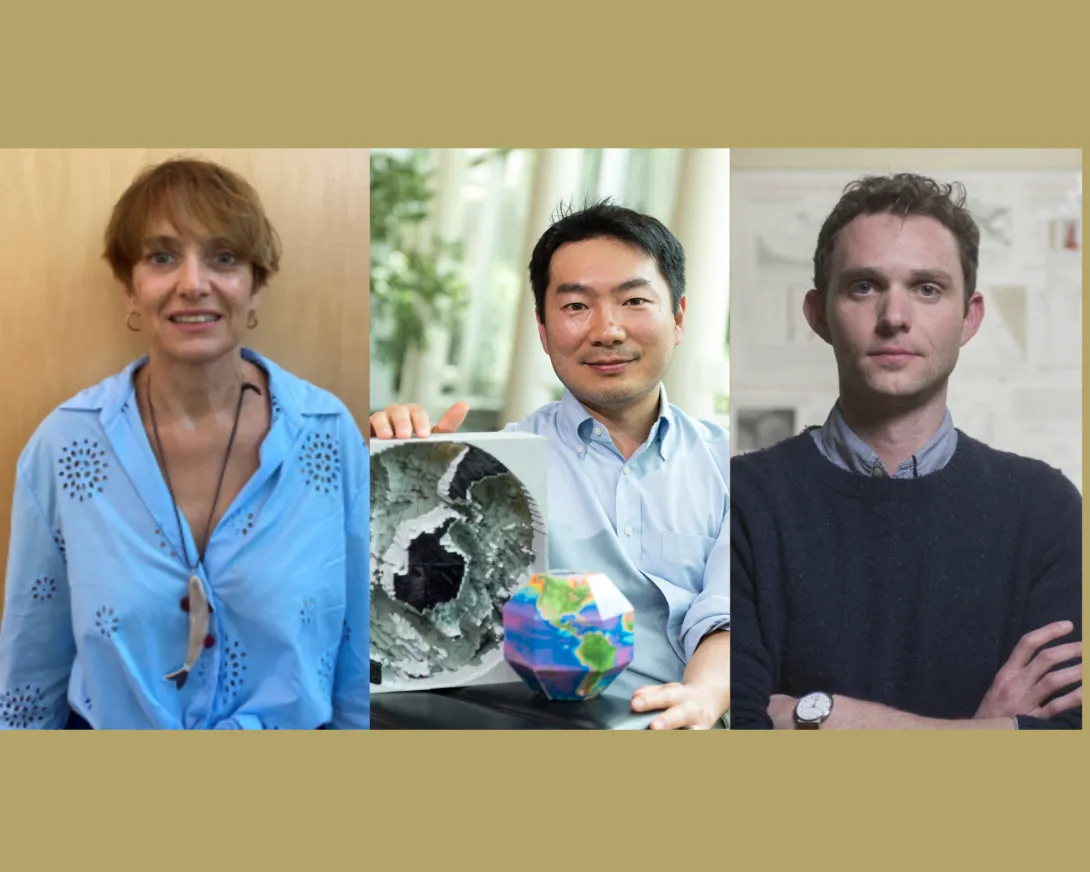

Three Georgia Tech School of Earth and Atmospheric Sciences researchers — Professor and Associate Chair Annalisa Bracco, Professor Taka Ito, and Georgia Power Chair and Associate Professor Chris Reinhard — will join colleagues from Princeton, Texas A&M, and Yale University for an $8 million Department of Energy (DOE) grant that will build an “end-to-end framework” for studying the impact of carbon dioxide removal efforts for land, rivers, and seas.

The proposal is one of 29 DOE Energy Earthshot Initiatives projects recently granted funding, and among several led by and involving Georgia Tech investigators across the Sciences and Engineering.

Overall, DOE is investing $264 million to develop solutions for the scientific challenges underlying the Energy Earthshot goals. The 29 projects also include establishing 11 Energy Earthshot Research Centers led by DOE National Laboratories.

The Energy Earthshots connect the Department of Energy's basic science and energy technology offices to accelerate breakthroughs towards more abundant, affordable, and reliable clean energy solutions — seeking to revolutionize many sectors across the U.S., and relying on fundamental science and innovative technology to be successful.

Carbon Dioxide Removal

The School of Earth and Atmospheric Sciences project, “Carbon Dioxide Removal and High-Performance Computing: Planetary Boundaries of Earth Shots,” is part of the agency’s Science Foundations for the Energy Earthshots program. Its goal is to create a publicly accessible computer modeling system that will track progress in two key carbon dioxide removal (CDR) processes: enhanced earth weathering and global ocean alkalinization.

In enhanced earth weathering, carbon dioxide is converted into bicarbonate by spreading minerals like basalt on land, which traps rainwater containing CO2. That gets washed out by rivers into oceans, where it is trapped on the ocean floor. If used at scale, these nature-based climate solutions could remove atmospheric carbon dioxide and alleviate ocean acidification.

The research team notes that there is currently “no end-to-end framework to assess the impacts of enhanced weathering or ocean alkalinity enhancement — which are likely to be pursued at the same time.”

“The proposal is for a three-year effort, but our hope is that the foundation we lay down in that time will represent a major step forward in our ability to track carbon from land to sea,” says Reinhard, the Georgia Power Chair who is a co-investigator on the grant.

“Like many folks interested in better understanding how climate interventions might impact the Earth system across scales, we are in some ways building the plane in midair,” he adds. “We need to develop and validate the individual pieces of the system — soils, rivers, the coastal ocean — but also wire them up and prove from observations on the ground how a fully integrated model works.”

That will involve the use of several existing computer models, along with Georgia Tech’s PACE supercomputers, Professor Ito explains. “We will use these models as a tool to better understand how the added alkalinity, carbon and weathering byproducts from the soils and rivers will eventually affect the cycling of nutrients, alkalinity, carbon and associated ecological processes in the ocean,” Ito adds. “After the model passes the quality check and we have confidence in our output, we can start to ask many questions about assessment of different carbon sequestration approaches or downstream impacts on ecosystem processes.”

Professor Bracco, whose recent research has focused on rising ocean heat levels, says CDR is needed just to keep ocean systems from warming about 2 degrees Celsius.

“Ninety percent of the excess heat caused by greenhouse gas emissions is in the oceans,” Bracco shares, “and even if we stop emitting altogether tomorrow, that change we imprinted will continue to impact the climate system for many hundreds of years to come. So in terms of ocean heat, CDRs will help in not making the problem worse, but we will not see an immediate cooling effect on ocean temperatures. Stabilizing them, however, would be very important.”

Bracco and co-investigators will study the soil-river-ocean enhanced weathering pipeline “because it’s definitely cheaper and closer to scale-up.” Reverse weathering can also happen on the ocean floor, with new clays chemically formed from ocean and marine sediments, and CO2 is included in that process. “The cost, however, is higher at the moment. Anything that has to be done in the ocean requires ships and oil to begin,” she adds.

Reinhard hopes any tools developed for the DOE project would be used by farmers and other land managers to make informed decisions on how and when to manage their soil, while giving them data on the downstream impacts of those practices.

“One of our key goals will also be to combine our data from our model pipeline with historical observational data from the Mississippi watershed and the Gulf of Mexico,” Reinhard says. “This will give us some powerful new insights into the impacts large-scale agriculture in the U.S. has had over the last half-century, and will hopefully allow us to accurately predict how business-as-usual practices and modified approaches will play out across scales.”

News Contact

Writer: Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209

Editor: Jess Hunt-Ralston

Oct. 12, 2023

Sean Castillo is in the win-win business. As an industrial hygienist in the Georgia Tech Enterprise Innovation Institute (EI2), his job is to ensure that employees are safe in their workspaces, and when he does that, he simultaneously improves a company’s performance.

That’s been a theme for Castillo and his colleagues in the Safety, Health, Environmental Services (SHES) program and their partners in the Georgia Manufacturing Extension Partnership (GaMEP), part of EI2’s suite of programs aimed at helping Georgia businesses thrive.

“A healthier workforce is healthy for business,” said Castillo, part of the SHES team of consultants who often work closely with their GaMEP counterparts to improve safety while also maximizing productivity.

This team of experts from EI2 assists companies trying to reach that critical intersection of both, combining smart ergonomics and safety enhancements with lean manufacturing practices. This can solve human performance gaps due to fatigue, heat, or some other environmental stressor, while helping businesses continue to improve their production processes and, ultimately, their bottom line.

These stressors cost U.S. industry billions of dollars each year — fatigue, for example, is responsible for about $136 billion in lost productivity.

“Protecting your employee — investing in safety now — saves a lot of money later,” Castillo said. “It equates to less money spent on workers compensation and less employee turnover, which means less time training new employees, and that ideally leads to a more efficient process in the workplace.”

It takes careful and intentional collaboration to bring those moving pieces together, and inextricably linked programs like SHES and GaMEP can help orchestrate all of that.

Ensuring Safe Workspaces

SHES is staffed by safety consultants, like Castillo, who provide a free and essential service to Georgia businesses. They help companies ensure that they meet or exceed the standards set by the federal Occupational Safety and Health Administration (OSHA), mainly through SHES’ flagship OSHA 21(d) Consultation Program.

“Our job is to ensure that workspaces and processes are designed so that anybody can perform the work safely,” said Trey Sawyers, a safety, health, and ergonomics consultant on the SHES team, aiding small and mid-sized businesses in Georgia. When a company reaches out to SHES to apply for the free, confidential OSHA consultation program, a consultant like Sawyers gets assigned to the task, “based on our area of expertise,” said Sawyers, an expert in ergonomics, which is the science of designing and adapting a workspace to efficiently suit the physical and mental needs and limitations of workers.

“If a company is having ergonomic issues — maybe they’re experiencing a lot of strains and sprains — then I might get the call because of my knowledge and understanding of anthropometry, and then I’ll go take a close look at the facility,” Sawyers said. Anthropometry is the scientific study of a human’s size, form, and functional capacity.

SHES consultants can identify potential workplace hazards, provide guidance on how to comply with OSHA standards, and establish or improve safety and health programs in the company.

“The caveat is the company has to correct any serious hazards that we find,” said Castillo, who visits a wide range of workspaces in his role. For instance, his job will take him to construction and manufacturing sites, gun ranges, even office settings. “We do noise and air monitoring at all different types of workplaces. I was at a primary care clinic the other day. And over the past few years, we’ve had a significant emphasis on stone fabricators, looking for overexposures to respirable crystalline silica.”

Silica, which is dust residue from the process of creating marble and quartz slabs, can lead to a lung disease called silicosis. OSHA established new rules that cut the permissible exposure limit in half, and that has kept the SHES consultants busy as Georgia manufacturers try to achieve and maintain compliance.

Keeping Companies Cool

Another area of growing emphasis for Georgia Tech’s consultants is heat-related stress in the workplace.

“Currently, there are no standards to address this,” Castillo said. “For example, there are no rules that say a construction site worker should drink this much water. There are suggested guidelines and emphasis programs for inspections for targeted industries where heat stress may be prevalent — but no standards, though that is coming.”

The SHES team is trying to stay ahead of what will likely be new federal rules for heat mitigation. To help develop safe standards and better understand the effects of heat on workers, consultants like Castillo are going to construction sites, plant nurseries, and warehouses, and enlisting volunteers in field studies. Using heat stress monitor armbands, they’re monitoring data on workers’ core body temperatures and heart rates.

“These tools are great because we’re not only gathering some good data, but we can use them proactively to prevent heat events such as heat exhaustion and heatstroke, which can be fatal if left untreated,” Castillo said.

To further help educate Georgia companies about the risks of heat-related problems, SHES applied for and recently won a Susan Harwood Training Grant from the U.S. Department of Labor. The $160,000 award will support SHES consultants’ efforts to further their work in heat stress education so that “companies and workers will understand the warning signs and the potential effects of heat stress, and how they can stay safe,” Castillo said. “We’re sure this will all become part of OSHA standards eventually, and we’d like to help our clients stay ahead of the curve to protect their employees.”

OSHA standards are the law, and while larger corporations routinely hire consulting firms to keep them on the straight and narrow, SHES is providing the same level of expertise for its smaller business clients for free. Most of those clients apply for help through SHES’ online request form. And others find the help they need through the guidance of process improvement specialist Katie Hines and her colleagues in GaMEP.

Lean and Safe

Hines came to her appreciation of ergonomics naturally. After graduating from Auburn University, she entered the workforce as a manufacturing engineer for a building materials company, where “it was just part of our day-to-day work life in that manufacturing environment, on the production floor,” she said.

It took grad school and a deeper focus on lean and continuous improvement processes to formalize that appreciation.

While working toward her master’s degree in chemical engineering at Auburn, Hines earned a certificate in occupational safety and ergonomics (like Sawyers, her SHES colleague). At the same time, Hines was helping to guide her company’s lean and continuous improvement program. And when she joined Procter & Gamble after completing her degree, “The lean concept and safety best practices were fully ingrained, part of the daily discussion there,” she said.

All those hands-on manufacturing production floor experiences managing people and systems prepared Hines well for her current role as a project manager on GaMEP’s Operational Excellence team, where her focus is entirely on lean and continuous improvement work — that is, helping companies reduce waste and improve production while also enhancing safety and ergonomics.

Hines uses her expertise in knowing how manufacturing processes and people should look when everyone is safe and also productive. She can walk into a GaMEP client’s facility and drive the process improvements and solutions that will help them achieve a leaner, more efficient form of production. And then, when she sees the need, Hines will recommend the client contact SHES, “the people who have their fingers on the data and the expertise to improve safety.”

These were concepts that, for a long time, seemed to be working against each other — the very idea of maximizing production and improving profits while also emphasizing worker safety and comfort.

“But you can have both,” Castillo said. “You should have both.”

News Contact

Writer: Jerry Grillo

Sep. 21, 2023

For the 10th Demo Day, the Tech community came out in droves to support 75 Georgia Tech startups created by students, alumni, and faculty. In booths spread out in Exhibition Hall, they displayed their products, which ranged from AI and robotic training gear to fungi fashion, and more. Over four hours, more than 1,500 people filed in and out of the hall. Founders pitched their innovations to business and community leaders, as well as students and the public, eager to witness groundbreaking innovations across various industries.

Kiandra Peart, co-founder of Reinvend, said the number of people surprised her.

“After the first VIP session was over, hundreds of people were just flooding through the door at all times,” she said. “We had to give the pitch a million times to explain it to a lot of different people, but they seemed really, really engaged, and we were also able to get a few interactions.”

Reinvend is working through a potential deal with Tech Dining on using their vending machines, which would expand food options for students after dining halls close.

Demo Day is the culmination of the 12-week summer accelerator, Startup Launch, where founders learn about entrepreneurship and build out their businesses with the support of mentors. Along with guidance from experts in business, teams receive $5,000 in optional funding and $30,000 of in-kind services. This year, the program had over 100 startups and 250 founders, continuing the growth trend for CREATE-X. The program aims to eventually support the launch of 300 startups per year.

Peart said the experience taught the team how to better pitch to potential clients and formulate a call to action after a successful interaction.

Since its inception in 2014, CREATE-X has had more than 5,000 participants in its programming, which is segmented into three areas: Learn, Make, and Launch. Besides providing resources, the program also helps founders through its rich entrepreneurial ecosystem.

“We want to increase access to entrepreneurship. That’s the heart of the program, and it’s the goal to have everyone in the Tech community to have entrepreneurial confidence. The energy and passion of our founders to solve real-world problems — it’s palpable at Demo Day. I’d say it’s the best place to see what we’re about and understand what this program offers,” said Rahul Saxena, director of CREATE-X, who also reminded founders that the connections they make here would last for years.

At its core, CREATE-X is a community geared toward innovation. Participants were at the forefront of integrating OpenAI's GPT-3 when it was not yet widely adopted. They share their insights with each other, and the program has mentors coming back from even the very first cohort. Starting with eight teams, CREATE-X has now launched more than 400 startup teams, with founders representing 38 academic majors. Its total startup portfolio valuation is above $1.9 billion.

Peart compared CREATE-X to an energy drink.

“After going through the program, I was really able to refine my ideas, talk with other people, and now that the program is over, I feel energized,” she said. “I think that having an accelerator right at home allows students who may have never considered starting a company, or didn't have access to an accelerator, to actually utilize their resources from their school and their own community to get their companies started.”

Although Demo Day just ended, CREATE-X is already gearing up for the next cohort. Applications for Startup Launch opened Aug. 31, the same day as Demo Day.

“Consider interning for yourself next summer,” said Saxena. “We know you have ideas about solutions to address global challenges. You’re at Tech; you have the talent. Let us help you with the resources and support system.”

Georgia Tech students, alumni, and faculty can apply to GT Startup Launch now. The priority deadline is Nov. 6. To learn more about CREATE-X, find CREATE-X events to build a startup team, or learn more about entrepreneurship, visit the CREATE-X website.

News Contact

Breanna Durham

Marketing Strategist

Sep. 19, 2023

In front of a standing-room-only crowd inside the John Lewis Student Center's Atlantic Theater, global leaders from the Hyundai Motor Group and Georgia Tech signed a memorandum of understanding, creating a transformative partnership focused on sustainable mobility, the hydrogen economy, and workforce development.

As the automaker continues to construct its Metaplant America site in Bryan County — the cornerstone of Hyundai's $12 billion investment into electric vehicles and battery production across the state of Georgia — today's signing ceremony symbolizes the vision that Hyundai and Georgia Tech share on the road to advancing technology and improving the human condition.

"As a leading public technological research university, we believe we have the opportunity and the responsibility to serve society, and that technology and the science and policy that support it must change our world for the better. These are responsibilities and challenges that we boldly accept. And we know we can't get there alone. On the contrary, we need travel partners, like-minded innovators, and partners with whom we can go farther, and today's partnership with Hyundai is a perfect example of what that means," Georgia Tech President Ángel Cabrera said.

The state of Georgia and the Institute have positioned themselves as leaders in the electrification of the automotive industry. Hyundai is among the top sellers of electric vehicles in the United States, and the company aims to produce up to 500,000 vehicles annually at the $7 billion Savannah plant once production begins in 2025. The plant will create 8,500 jobs, and the company's total investments are projected to inject tens of billions of dollars into the state economy while spurring the creation of up to 40,000 jobs.

"It's clear, we are in the right place with the right partners," Jay Chang, president and CEO of Hyundai Motor Company, said. "When our executive chairman first decided on [the site of] the metaplant, one of the first things he said was, 'Make sure we collaborate with Georgia Tech.' Hyundai and Georgia Tech have a lot in common. We have proud histories. We celebrate excellence, and we have very high standards. What we love about Georgia Tech is the vision to be a leading research university that addresses global challenges and develops exceptional leaders from all backgrounds."

Spearheading new opportunities for students, the partnership will create technical training and leadership development programming for Hyundai employees and initiate engagement activities to stimulate interest in STEM degrees among students.

José Muñoz, president and global COO of Hyundai Motor Company and president and CEO of Hyundai and Genesis Motor North America, says the company quickly realized the potential impact of the newly forged partnership with Georgia Tech.

"Proximity to institutions like Georgia Tech was one of the many reasons Hyundai selected Georgia for our new EV manufacturing facility. Imagine zero-emissions, hydrogen-powered vehicles here on campus, advanced air mobility shuttling people to Hartsfield-Jackson Atlanta International Airport, or riding hands-free and stress-free in autonomous vehicles during rush hour on I-75 and I-85. Together, Georgia Tech and Hyundai have the resources to fundamentally improve how people and goods move," he said.

In pursuit of sustainability, Hyundai has invested heavily in hydrogen and plans to lean on the Institute's expertise to explore the potential of the alternative fuel source, primarily for commercial vehicles. Hyundai has deployed its hydrogen-powered XCIENT rigs to transport materials in five countries.

University System of Georgia Chancellor Sonny Perdue was on hand for Tuesday’s ceremony. Reflecting on his visits to the company's global headquarters in South Korea prior to the construction of the West Point, Georgia, Kia plant, he praised the company's values and world-class engineering ability.

"This is a relationship built on mutual trust and respect. It's a company, a family atmosphere, and a culture that I respect and admire for the way they do business and honor progress, innovation, and creativity. That is why I am so excited about this partnership between the Hyundai Motor Group and the Georgia Institute of Technology because that will only enhance that," Perdue said.

Owned by Hyundai, Kia recently invested an additional $200 million into its West Point facility to prepare for the production of the all-electric 2024 EV9 SUV. The plant currently manufactures more than 40% of all Kia models sold in the U.S.

The partnership also includes field-naming recognition at Bobby Dodd Stadium, which is now known as Bobby Dodd Stadium at Hyundai Field, and provides student-athletes and teams with the resources needed to compete at the highest levels, both athletically and academically.

Sep. 06, 2023

“A stitch in time saves nine,” goes the old saying. For a company in Georgia, that adage became very real when damage to a key piece of machinery threatened its operation. The group helping with the stitch in time was the Georgia Manufacturing Extension Partnership (GaMEP), a program of Georgia Tech's Enterprise Innovation Institute that — for more than 60 years — has been helping small- to medium-sized manufacturers in Georgia stay competitive and grow, boosting economic development across the state.

Silon US, a Peachtree City manufacturer that designs and produces engineered compounds used to create a wide range of products (from automotive applications to building materials such as PEX piping and wire and cable), was experiencing problems with its extrusion line during a time of increasing customer demand. Problems with the drive mechanism on that extrusion line, a piece of equipment critical to the company’s ability to produce, threatened to shut the company down. With replacement parts several weeks away, was it safe to continue operating? At what throughput rates? How much collateral damage might be incurred if they continued to operate?

That’s when Silon managers turned to GaMEP for help.

After working through ideas with GaMEP’s manufacturing experts, the team installed wireless condition monitoring sensors that provide continuous, real-time insights on their manufacturing assets’ health. With the sensors, Silon was able to find a sweet spot that not only allowed them to continue operating but also kept them from overexerting the equipment, preventing further damage.

The solution to that problem has now become a routine part of Silon’s process, as company technicians continue to use this sensor technology for early detection of any deviations or anomalies in the machinery’s health, allowing the company’s maintenance team to proactively respond by adjusting scheduled maintenance to avoid costly downtime.

GaMEP’s Sean Madhavaraman says, “Silon is more productive than ever and on track for growth. The strong results in this challenge are a great example of the decades-long focus of GaMEP to educate and train managers and employees in best practices, to develop and implement the latest technology, and to work together with businesses to find solutions.”

Daniel Raubenheimer and Matt Gammon, Silon’s general managers, also lauded GaMEP, saying, “GaMEP’s extensive experience within the manufacturing realm has been a great benefit to our company. The wireless condition monitoring sensors allow us to predict future breakdowns and mitigate a potential catastrophe — allowing us to operate in a safe manner, while saving money, time, and effort.”

News Contact

Blair Meeks

Institute Communications

Aug. 31, 2023

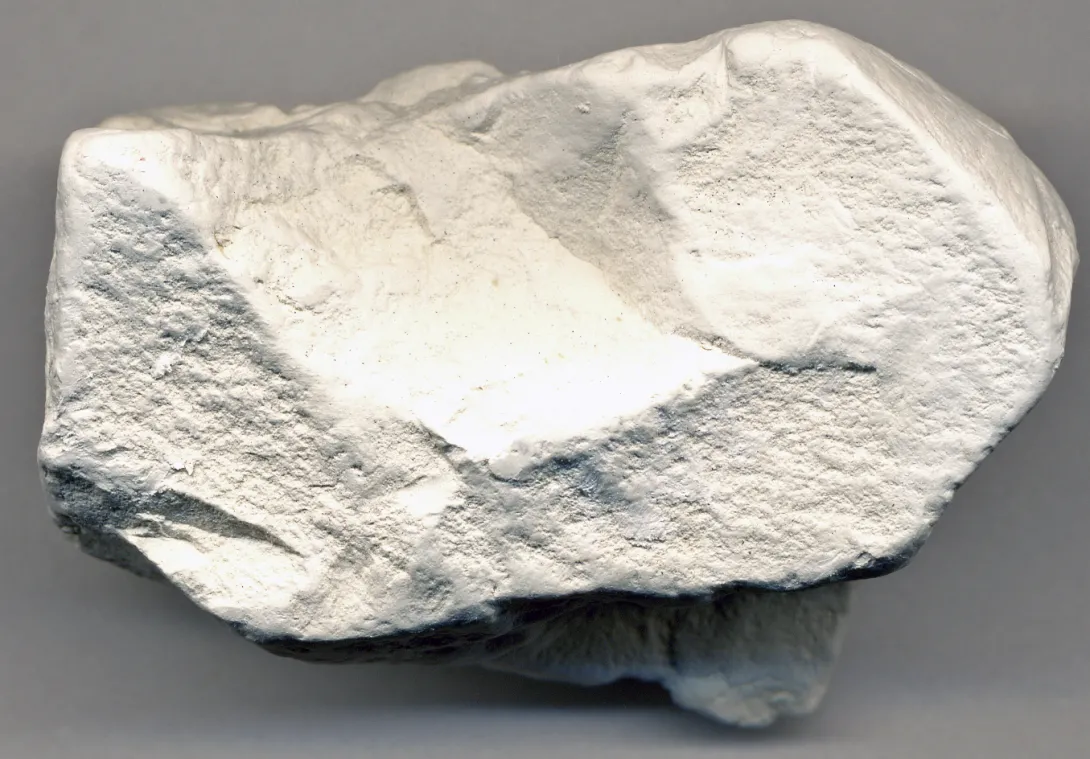

Yuanzhi Tang has received a National Science Foundation grant to see if areas along the middle and coastal plains of Georgia that produce a highly sought-after clay are also home to large amounts of rare earth elements (REEs) needed for a wide range of industries, including rapidly evolving clean energy efforts.

Tang is an associate professor in the School of Earth and Atmospheric Sciences at Georgia Tech. She is joined by Crawford Elliott, associate professor at Georgia State University, on their proposal, “The occurrences of the rare earth elements in highly weathered sedimentary rocks, Georgia kaolins,” funded by the NSF Division of Earth Sciences.

All about REEs

REEs such as cerium, terbium, neodymium, and yttrium are critical minerals used in many industrial technology components, including semiconductors, permanent magnets, rechargeable batteries (smartphones, computers), phosphors (flat screen TVs, light-emitting diodes), and catalysts (fuel combustion, auto emissions controls, water purification). They impact a wide range of industries such as health care, transportation, power generation (including wind turbines), petroleum refining, and consumer electronics.

“With the increasing global demand for green and sustainable technologies, REE demand is projected to increase rapidly in the U.S. and globally,” Tang says. “Yet currently the domestic REE production is very low, and the U.S. relies heavily on imports. The combination of growing demand and high dependence on international supplies has prompted the U.S. to explore new resources and develop environmentally friendly extraction and processing technologies.”

Georgia geology

Kaolin is a white, aluminosilicate clay mineral used in making paper, plastics, rubber, paints, and many other products. More than $1 billion worth of kaolin is mined from Georgia’s kaolin deposits every year, more than in any other state.

Tang and Elliott say considerable amounts of the REEs have been found in the waste residues generated from Georgia kaolin mining.

“These occurrences have high REE contents and might add significantly to domestic resources,” Tang says. “By understanding the geological and geochemical processes controlling the occurrence and distribution of REEs in these weathered environments, we might be able to provide fundamental information for the identification of REE resources, and the design of efficient and green extraction technologies.”

“The new work with Dr. Tang has the potential to advance our fundamental understanding of the occurrences, mineralogical speciation, and distribution of the REEs in bauxite and kaolin ore,” Elliott says. “I am thrilled to be working with Dr. Tang on this project.”

Laterite thinking

The Department of Energy notes the 17 rare earth elements are found in highly weathered environments, such as the laterites, a type of soil and rock located in eastern and southeastern China, which currently comprises around 80 percent of the world’s REE reserves. To promote domestic production of REEs, the NSF sought proposals to explore natural unconventional element resources located in highly weathered sedimentary/regolith (loose rocky material covering bedrock) settings in the U.S. Georgia’s kaolin deposits and mines extend in the state from southwest to northeast, paralleling the state’s ‘fall line’ that separates the Piedmont Plateau from the coastal plains.

With the NSF grant, Tang and Elliott will find out more about the geochemical factors and processes controlling REE mobility, distribution, and fractionation (enrichment of light REE versus heavy REE) in these environments, which can provide the foundation for identifying domestic resources and for the rational design of extraction technologies.

Community connections

The proposed work will also integrate research with education, combining student training with undergraduate education and research, as well as K-12 and community outreach emphasizing the participation of underrepresented groups in geological sciences.

The grant relates to Tang’s work at two Georgia Tech interdisciplinary research institutes dedicated to sustainability, energy, and climate: the Strategic Energy Institute and the Brook Byers Institute for Sustainable Systems (BBISS), where she is a co-lead with Hailong Chen, an associate professor in the School of Materials Science and Engineering. Tang and Chen’s BBISS project is “Sustainable Resources for Clean Energy.” Tang also serves as an SEI/BBISS initiative lead on sustainable resources.

“The state of Georgia has already been experiencing rapid and exciting developments in the clean energy industry,” Tang says. “We hope to bridge an important link in this space. We hope to help identify and explore regional critical resources for clean energy development by both understanding the geological/geochemical fundamentals, and developing sustainable extraction technologies.”

Georgia Tech is also investing in the community outreach and social aspects of energy research, not just in science and engineering, Tang adds. “Collaboration with Georgia State University also gives exciting opportunities for the engagement with underrepresented student groups, especially in geological sciences, which will serve in the long term for workforce development.”

News Contact

Writer: Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209

Editor: Jess Hunt-Ralston

Aug. 30, 2023

Twenty years ago, when strangers would ask Hermann Fritz about his job and he told them he was a tsunami expert, he got plenty of quizzical looks in response.

“The 2004 Indian Ocean earthquake changed everything,” said Fritz, a young researcher whose Ph.D. was barely two years old at the time, recalling the powerful undersea megathrust earthquake off the Indonesian coast that caused the ocean floor to rise on Boxing Day. It triggered a massive, deadly tsunami with 100-foot waves that killed nearly 230,000 people in 14 countries, the farthest of them 5,000 miles from the earthquake epicenter.

“Overnight, everyone in the world knew about tsunamis,” said Fritz, now a professor in the School of Civil and Environmental Engineering at Georgia Tech. He worked post-disaster reconnaissance operations in countries devastated by that 2004 tsunami.

In the years since, he has led or participated in at least a dozen other such scientific missions, in the wake of tsunamis, hurricanes, landslides, and earthquakes. His wide-ranging research centers on fluid dynamics in these natural (and human-made) disasters, but also on their mitigation and coastal protection. That’s where his latest research, published in the journal Physics of Fluids, is aimed.

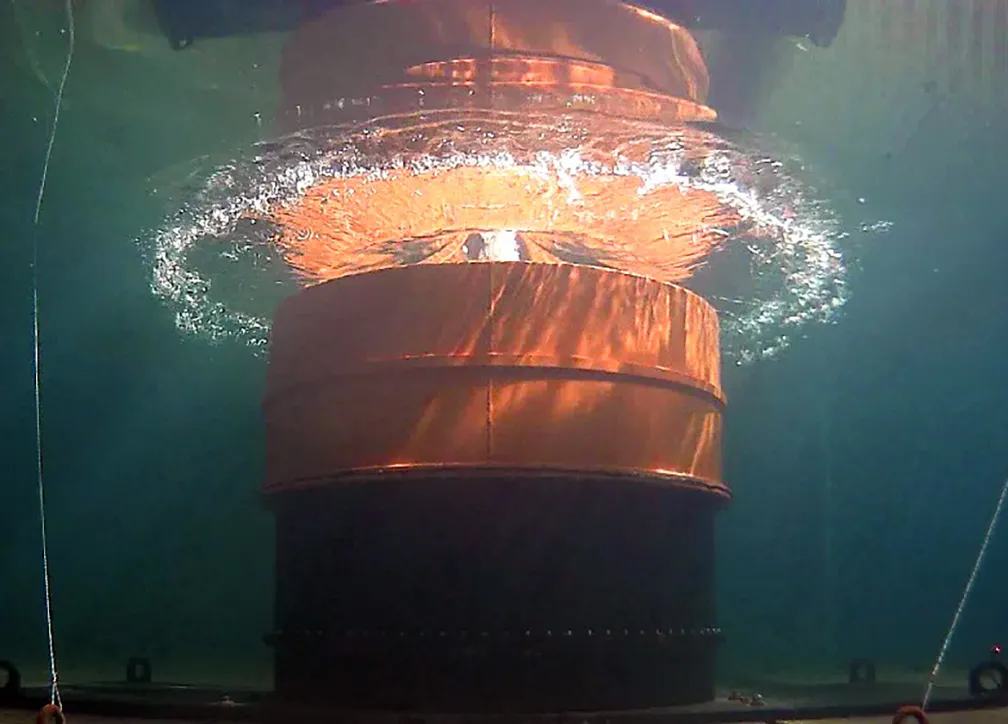

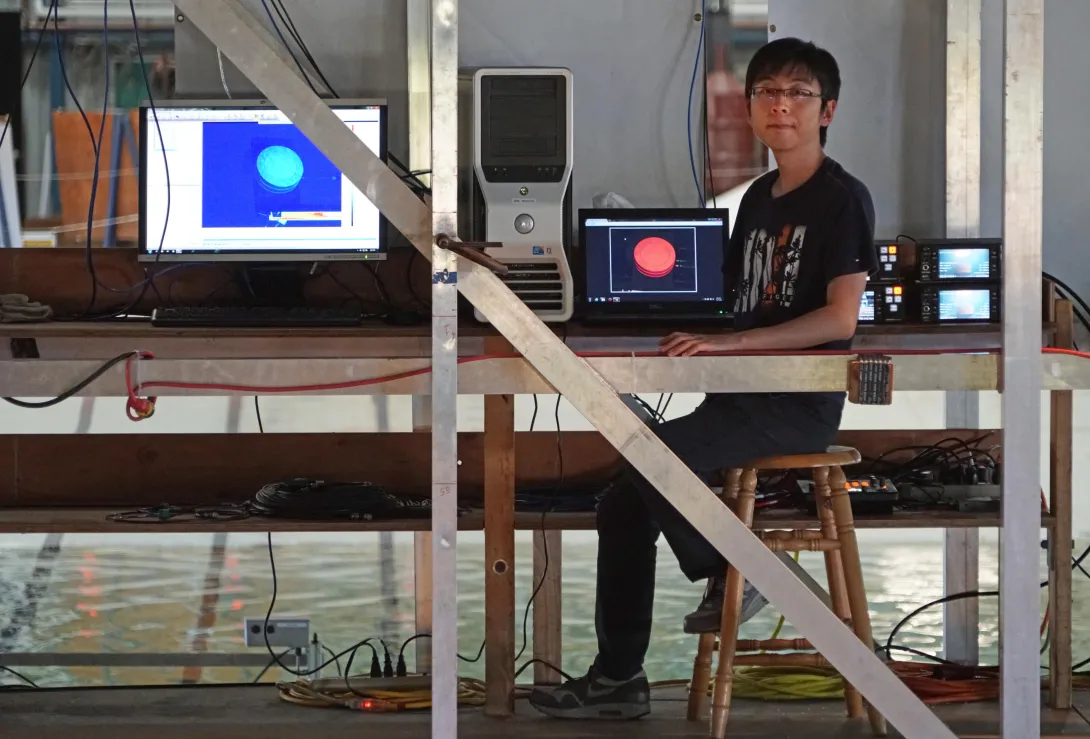

Fritz and his former graduate student, Yibin Liu, are interested in a specific kind of tsunami: those caused by underwater volcanic eruptions and landslides. So, they built a volcanic tsunami generator in a wave basin (essentially a large lab-in-a-tank for studying wave behavior) at the O.H. Hinsdale Wave Research Laboratory, a National Science Foundation-supported Natural Hazards Engineering Research Infrastructure facility at Oregon State University.

“The recent underwater volcanic eruptions of 2018 at Anak Krakatau and the 2022 Tonga event caused tsunami hazards with extensive casualties and economic impacts,” Liu said. “That’s what motivated this project — the limited scientific understanding and field data of tsunami generation mechanisms.”

Making Waves

The tsunami generation process is the transfer of mechanical and thermal energy from volcanic activities into the surrounding body of water. To mimic this behavior for their observation, the researchers built a pneumatic volcanic tsunami generator (VTG). Tsunami waves are generated by sending a vertical column of water up through the water surface. The water surface deformation is then measured with cameras and wave gauges.

“This design, with multiple pneumatic cylinders, can reach a wide spectrum of motion patterns to simulate various types of underwater volcanic eruptions,” said Liu.

The VTG, which they installed on the basin floor at Oregon State, is essentially the next generation of technology they’d used in previous studies.

“We had studied tsunamis from non-tectonic sources, such as submarine landslides, for decades, and we had previously used pneumatics to drive gravel landslides and were familiar with the pneumatic controls and rapid accelerations,” Fritz said. “Ultimately it came down to developing a volcanic tsunami generator that would allow for safe, controlled, and reproducible experiments of an isolated volcanic tsunami generation mechanism.”

They conducted more than 300 experiments on the simulator, recreating different volcanic conditions. The 3D data they gathered will ideally lead to better tsunami models for future events, which can be very unpredictable. The Anak Krakatau event in 2018 is a great example.

“It had basically been announcing a potential tsunami hazard by erupting for six months before collapsing and triggering a tsunami that still took more than 400 lives,” Fritz said.

He added, “The beauty of physical modeling is that it allows us to safely study details of volcanic tsunami generation at reduced scale and fill gaps in rare, real-world observations of volcanic tsunamis. Ultimately, the broader impacts of this research are to raise tsunami awareness, educate, and contribute to saving lives.”

Video: Volcanic Tsunami Generator at work

Citation: Hermann Fritz and Yibin Liu, “Physical modeling of spikes during the volcanic tsunami generation,” Physics of Fluids. doi.org/10.1063/5.0147970

Aug. 24, 2023

CREATE-X’s Startup Launch will introduce its 10th cohort of talented startup founders on Demo Day, Aug. 31, 5 – 7 p.m., in the Exhibition Hall. Last year, the event drew more than 1,500 people, including business and community leaders, to view new products from a wide range of industries. All of the startups are developed through the creative work of Georgia Tech’s faculty, alumni, and students. With these products, CREATE-X founders aim to address global problems head-on with the latest technology and ingenuity.

At the event, attendees will be able to explore the products of over 100 newly minted startups, from consumer apps to deep tech, and engage with more than 250 founders about their entrepreneurial journeys. In 2021, CREATE-X startups were at the frontier of the current AI revolution, integrating OpenAI's GPT-3 well ahead of mainstream adoption.

CREATE-X began in 2014 as a Georgia Tech initiative to instill entrepreneurial confidence in students launching real startups. Its signature program is the 12-week Startup Launch accelerator, in which students and alumni intern for their own companies. Participants attend sessions, team socials, and pitch practices and receive coaching and mentorship from experienced entrepreneurs and notable Tech alumni. Demo Day is the finale of the program, a vibrant exhibition that is free and open to the public.

The inaugural cohort had eight teams. Several companies among the first six cohorts are valued above $100 million, and one company is valued at $1.3 billion. The program has worked with nearly 450 startup teams, with a total portfolio valuation of over $1.9 billion, and has produced more than 1,100 founders launching startups. CREATE-X Director Rahul Saxena said the program hopes to eventually produce 300 startups a year.

“CREATE-X has a rich entrepreneurial ecosystem that will support students as they launch real startups. In every cohort, I remind participants that the connections they make in the program will carry after, and that they’re surrounded by talent,” Saxena said. “We want every Georgia Tech student to have this advantage when starting their business.”

He noted, “From consumer apps revolutionizing everyday life to sustainable fashion brands paving the way toward responsible consumption — there's something here for everyone. CREATE-X founders are a testament to tomorrow’s possibilities, and we invite you to see it for yourself.”

Registration is open now for Demo Day 2023. For more information, visit the CREATE-X website.

News Contact

Breanna Durham

Marketing Strategist
