Sep. 06, 2024

New research shows that an effort to improve wintertime air quality in Fairbanks, Alaska — particularly in frigid conditions around 40 below zero Fahrenheit — may not be as effective as intended.

A team of University of Alaska Fairbanks and Georgia Tech researchers, including School of Earth and Atmospheric Sciences Professor Rodney Weber, published the latest findings in Science Advances.

In the study, the team used state-of-the-art thermodynamic tools from global air quality models to better understand how reducing the amount of primary sulfate in the atmosphere might affect air quality in sub-zero conditions.

The study stems from the 2022 Alaskan Layered Pollution and Chemical Analysis project, or ALPACA, an international effort funded by the National Science Foundation, the National Oceanic and Atmospheric Administration and European sources. ALPACA is part of an international air quality effort called Pollution in the Arctic: Climate Environment and Societies.

Read the full story in the University of Alaska Fairbanks newsroom.

News Contact

Jess Hunt-Ralston

Director of Communications

College of Sciences

Georgia Institute of Technology

Rod Boyce

University of Alaska Fairbanks

Sep. 03, 2024

Across Georgia Tech, researchers are exploring the universe — its origins, possible futures, and humanity and Earth’s place in it. These investigations are the efforts of hundreds of astrobiologists, astrophysicists, aerospace engineers, astronomers, and experts in space policy and science fiction — and all of this work is brought together under the Institute’s new Space Research Initiative (SRI).

The SRI is the hub of all things space-related at Georgia Tech. It connects research institutes, labs, facilities, Schools, and Colleges to foster the conversation about space across Georgia and beyond. As a budding Interdisciplinary Research Institute (IRI), the SRI currently encompasses three core centers that contribute distinct interdisciplinary perspectives to space exploration.

Center for Space Technology and Research

The Center for Space Technology and Research (CSTAR) is a hub dedicated to furthering the expansion of Georgia’s aerospace industry, which is already the state’s No. 1 economic driver. The center's team at Georgia Tech conducts cutting-edge research in fields such as astrophysics, Earth science, planetary science, robotics, space policy, space technology, materials science, and space systems engineering.

CSTAR boasts a collaborative network of more than 100 Georgia Tech faculty members and research staff, supported by annual funding exceeding $20 million. Its contribution to space research is highlighted by its active multiyear research grants totaling over $100 million. Each year, CSTAR also contributes to the academic community with around 100 peer-reviewed journal articles and provides mentorship to dozens of graduate and undergraduate students, shaping the next generation of space research.

Members of CSTAR have contributed to a variety of spaceflight projects, from observing the atmosphere of Jupiter, to creating carbon nanotube-based technology on CubeSats, to building an innovative, dual-use antenna that is simultaneously a critical life-saving handrail and a radio emitter inside an airlock on the International Space Station. Several examples of this research will soon be part of a new permanent display in the National Air and Space Museum in Washington, D.C.

“The work done by the Georgia Tech research community in space is phenomenal,” said CSTAR Director Jud Ready. “We have worked on the International Space Station, launched numerous free-flying CubeSats in low Earth orbit, as well as our current crowning achievement, the Lunar Flashlight CubeSat, which is the world’s only heliocentric spacecraft currently owned and operated by an academic institution that recently demonstrated planetary optical navigation techniques for the first time, by any organization — including NASA.” Future missions include materials demonstrations on a lunar lander, as well as additional orbital activities of both the Earth and moon.

“The SRI will increase our reach and impact over and above these prior activities by at least an order of magnitude,” he said. “I am excited for what the future holds for Georgia Tech students, faculty, and research partners as a result of this new organization.”

Director: Jud Ready

Associate Directors: Morris Cohen and Jennifer Glass

Center for Relativistic Astrophysics

The Center for Relativistic Astrophysics (CRA) is housed within the College of Sciences’ School of Physics. The center’s mission is to provide students with education and training in the key research areas of astroparticle physics, theoretical astrophysics, and gravitational wave astrophysics.

CRA researchers study the breadth of space, ranging from the early universe’s large-scale structure to particle interactions. They also study black holes and the merger of compact objects, the potential outcome of the evolution of stellar binary systems, and — closer to home — exoplanets and stars found in the Milky Way. Of particular strength are computational astrophysics and multi-messenger astrophysical studies with neutrinos, photons, and gravitational waves.

In addition, CRA researchers actively participate in major international collaborations, such as the operations and development of existing and future detectors, including the IceCube Neutrino Observatory, the LIGO and LISA gravitational wave observatories, X-ray observatories NuSTAR and Athena, and gamma-ray detectors VERITAS and CTA.

“Bringing together all space research under a single umbrella will be a huge boon to the CRA’s research efforts and visibility,” said John Wise, CRA director. “I am excited about the opportunities the SRI will bring forth within such a collaborative environment, especially the prospect of Georgia Tech leading a space mission that can test the theoretical work performed within the CRA.”

Director: John Wise

Associate Director: Tamara Bogdanović

Georgia Tech Astrobiology

Astrobiology research at Georgia Tech, which includes experts in biochemistry, physics, aerospace engineering, planetary science, and astronomy, as well as others, seeks to answer these age-old questions: What is the origin of life? Does life exist on other worlds?

Georgia Tech’s astrobiology community includes students, staff, and faculty across campus, the educational curriculum, the Exploring Origins student-run group, an astrobiology fellows program, and keystone events.

Many globally recognized researchers in this field are at Georgia Tech, and their recent discoveries hint at the potential for life on Mars and ocean worlds like Europa. Astrobiology at Tech brings together these faculty with scholars in the humanities and social sciences to share their research with the public and give it a broader cultural context.

The Georgia Tech Astrobiology Graduate Certificate Program, an interdisciplinary initiative across several Schools and Colleges, is designed to broaden student participation in astrobiology. An undergraduate minor is in development. The purpose of these programs is to expand opportunities for both undergraduate and graduate students in the interdisciplinary field of astrobiology.

“One of the main reasons I came to Georgia Tech in 2020 is its vibrant astrobiology program,” said Christopher E. Carr, co-director of Georgia Tech Astrobiology. “It’s a true pleasure to have such amazing colleagues.”

Co-directors: Frances Rivera Hernández and Christopher E. Carr

News Contact

Laurie Haigh

Research Communications

Sep. 03, 2024

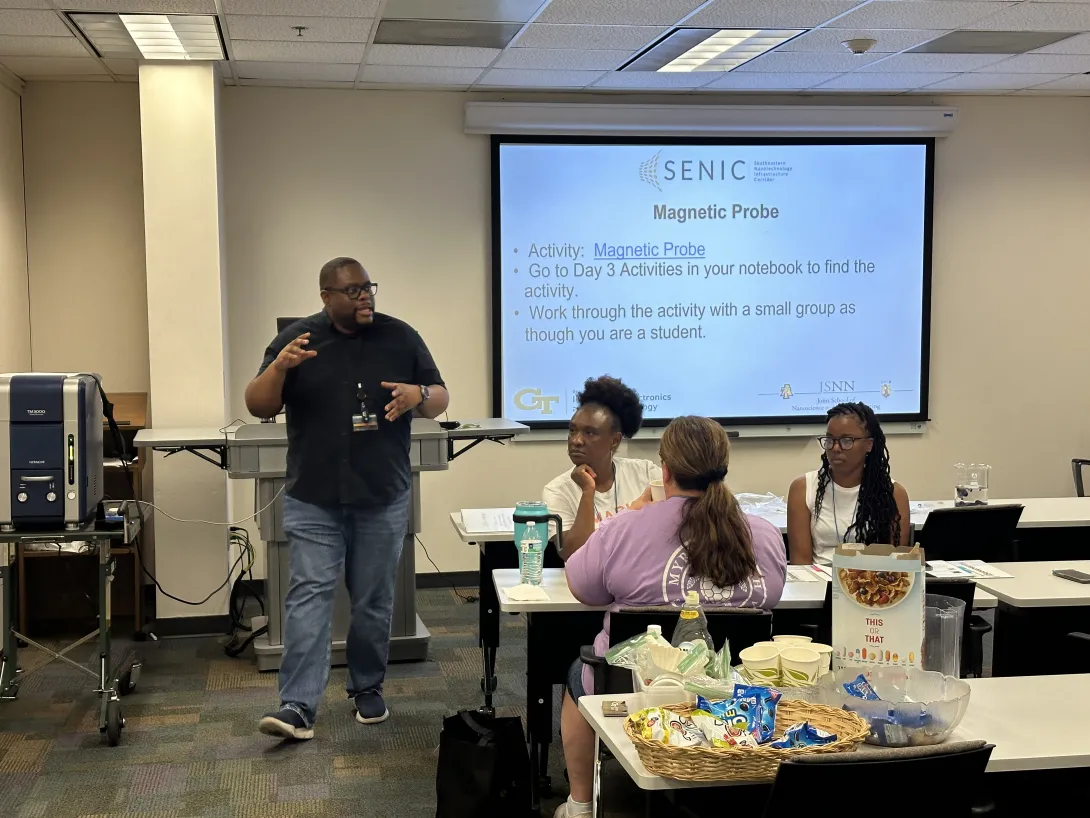

The Institute for Matter and Systems (IMS) has received $700,000 in funding from the National Science Foundation (NSF) for two education and outreach programs.

The awards will support the Research Experience for Undergraduates (REU) and Research Experience for Teachers (RET) programs at Georgia Tech. The REU summer internship program provides undergraduate students from two- and four-year programs the chance to perform cutting-edge research at the forefront of nanoscale science and engineering. The RET program for high school teachers and technical college faculty offers a paid opportunity to experience the excitement of nanotechnology research and to share this experience in their classrooms.

“This NSF funding allows us to be able to do more with the programs,” said Mikkel Thomas, associate director for education and outreach. “These are programs that have existed in the past, but we haven’t had external funding for the last three years. The NSF support allows us to do more — bring more students into the program or increase the RET stipends.”

In addition to the REU and RET programs, IMS offers short courses and workshops focused on professional development, instructional labs for undergraduate and graduate students, a certificate for veterans in microelectronics and nano-manufacturing, and community engagement activities such as the Atlanta Science Festival.

News Contact

Amelia Neumeister | Communications Program Manager

Aug. 30, 2024

The Cloud Hub, a key initiative of the Institute for Data Engineering and Science (IDEaS) at Georgia Tech, recently concluded a successful Call for Proposals focused on advancing the field of Generative Artificial Intelligence (GenAI). This initiative, made possible by a generous gift from Microsoft, aims to push the boundaries of GenAI research by supporting projects that explore both foundational aspects and innovative applications of this cutting-edge technology.

Call for Proposals: A Gateway to Innovation

Launched in early 2024, the Call for Proposals invited researchers from across Georgia Tech to submit their innovative ideas on GenAI. The scope was broad, encouraging proposals that spanned foundational research, system advancements, and novel applications in various disciplines, including arts, sciences, business, and engineering. A special emphasis was placed on projects that addressed responsible and ethical AI use.

The response from the Georgia Tech research community was overwhelming, with 76 proposals submitted by teams eager to explore this transformative technology. After a rigorous selection process, eight projects were selected for support. Each awarded team will also benefit from access to Microsoft’s Azure cloud resources.

Recognizing Microsoft’s Generous Contribution

This successful initiative was made possible through the generous support of Microsoft, whose contribution of research resources has empowered Georgia Tech researchers to explore new frontiers in GenAI. By providing access to Azure’s advanced tools and services, Microsoft has played a pivotal role in accelerating GenAI research at Georgia Tech, enabling researchers to tackle some of the most pressing challenges and opportunities in this rapidly evolving field.

Looking Ahead: Pioneering the Future of GenAI

The awarded projects, set to commence in Fall 2024, represent a diverse array of research directions, from improving the capabilities of large language models to innovative applications in data management and interdisciplinary collaborations. These projects are expected to make significant contributions to the body of knowledge in GenAI and are poised to have a lasting impact on the industry and beyond.

IDEaS and the Cloud Hub are committed to supporting these teams as they embark on their research journeys. The outcomes of these projects will be shared through publications and highlighted on the Cloud Hub web portal, ensuring visibility for the groundbreaking work enabled by this initiative.

Congratulations to the Fall 2024 Winners

- Annalisa Bracco | EAS “Modeling the Dispersal and Connectivity of Marine Larvae with GenAI Agents” [proposal co-funded with support from the Brook Byers Institute for Sustainable Systems]

- Yunan Luo | CSE “Designing New and Diverse Proteins with Generative AI”

- Kartik Goyal | IC “Generative AI for Greco-Roman Architectural Reconstruction: From Partial Unstructured Archaeological Descriptions to Structured Architectural Plans”

- Victor Fung | CSE “Intelligent LLM Agents for Materials Design and Automated Experimentation”

- Noura Howell | LMC “Applying Generative AI for STEM Education: Supporting AI literacy and community engagement with marginalized youth”

- Neha Kumar | IC “Towards Responsible Integration of Generative AI in Creative Game Development”

- Maureen Linden | Design “Best Practices in Generative AI Used in the Creation of Accessible Alternative Formats for People with Disabilities”

- Surya Kalidindi | ME & MSE “Accelerating Materials Development Through Generative AI Based Dimensionality Expansion Techniques”

- Tuo Zhao | ISyE “Adaptive and Robust Alignment of LLMs with Complex Rewards”

News Contact

Christa M. Ernst - Research Communications Program Manager

christa.ernst@research.gatech.edu

Aug. 30, 2024

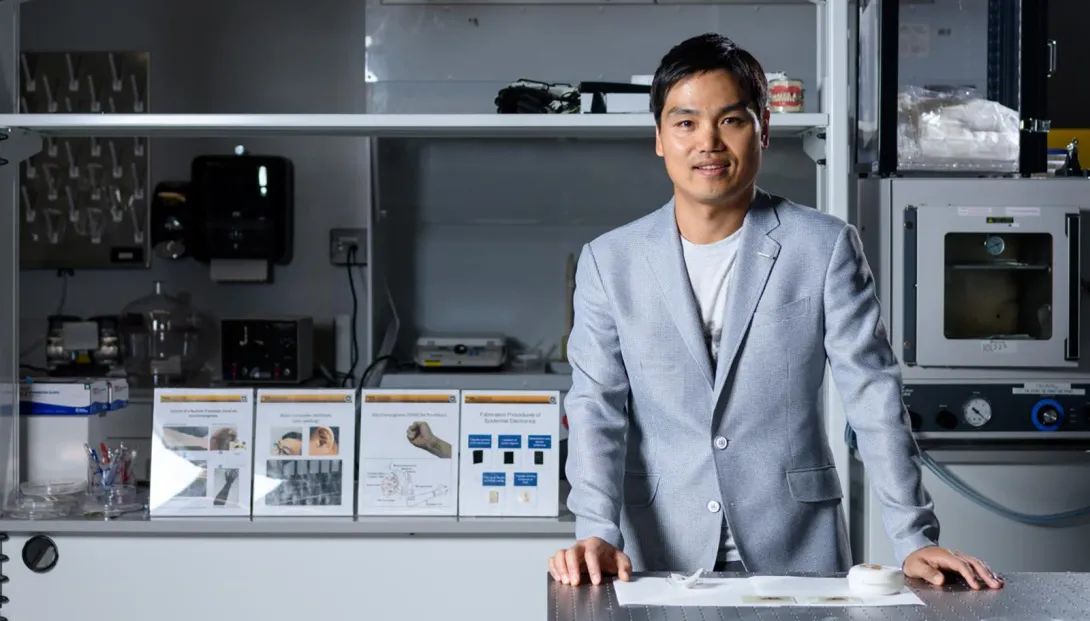

Georgia Tech researcher W. Hong Yeo has been awarded a $3 million grant to help develop a new generation of engineers and scientists in the field of sustainable medical devices.

“The workforce that will emerge from this program will tackle a global challenge through sustainable innovations in device design and manufacturing,” said Yeo, Woodruff Faculty Fellow and associate professor in the George W. Woodruff School of Mechanical Engineering and the Wallace H. Coulter Department of Biomedical Engineering at Georgia Tech and Emory University.

The funding, from the National Science Foundation (NSF) Research Training (NRT) program, will address the environmental impacts resulting from the mass production of medical devices, including the increase in material waste and greenhouse gas emissions.

Under Yeo’s leadership, the Georgia Tech team comprises multidisciplinary faculty: Andrés García (bioengineering), HyunJoo Oh (industrial design and interactive computing), Lewis Wheaton (biology), and Josiah Hester (sustainable computing). Together, they’ll train 100 graduate students, including 25 NSF-funded trainees, who will develop reusable, reliable medical devices for a range of uses.

“We plan to educate students on how to develop medical devices using biocompatible and biodegradable materials and green manufacturing processes using low-cost printing technologies,” said Yeo. “These wearable and implantable devices will enhance disease diagnosis, therapeutics, rehabilitation, and health monitoring.”

Students in the program will be challenged by a comprehensive, multidisciplinary curriculum, with deep dives into bioengineering, public policy, physiology, industrial design, interactive computing, and medicine. And they’ll get real-world experience through collaborations with clinicians and medical product developers, working to create devices that meet the needs of patients and care providers.

The Georgia Tech NRT program aims to attract students from various backgrounds, fostering a diverse, inclusive environment in the classroom — and ultimately in the workforce.

The program will also introduce a new Ph.D. concentration in smart medical devices as part of Georgia Tech's bioengineering program, and a new M.S. program in the sustainable development of medical devices. Yeo also envisions an academic impact that extends beyond the Tech campus.

“Collectively, this NRT program's curriculum, combining methods from multiple domains, will help establish best practices in many higher education institutions for developing reliable and personalized medical devices for healthcare,” he said. “We’d like to broaden students' perspectives, move past the current technology-first mindset, and reflect the needs of patients and healthcare providers through sustainable technological solutions.”

News Contact

Jerry Grillo

Aug. 29, 2024

Between revitalized investments in America’s manufacturing infrastructure and an increased focus on AI and automation, the U.S. is experiencing a manufacturing renaissance. A key focus of this resurgence lies in improving the resiliency of supply chains in the U.S., particularly in crucial sectors like defense.

“If we were to suddenly have a seismic shift in defense manufacturing needs,” asks Aaron Stebner, professor and Eugene C. Gwaltney Jr. Chair in Manufacturing in the George W. Woodruff School of Mechanical Engineering, “do we have the supply chain and manufacturers who could meet that sudden increase in demand? How do we do that in a way that’s sustainable for long periods of time as a nation if that need arises?”

The Georgia Tech Manufacturing Institute (GTMI) officially launched the Manufacturing 4.0 Consortium in 2023 to address that need. Designed to form a network of engaged manufacturers from across the country, the Consortium serves as a key connection point between Georgia Tech and industry partners — and as fertile ground for collaborative innovation.

“By bringing us all together,” says Stebner, who serves on the board of the Consortium, “we can do bigger, more meaningful things and find unique ways and opportunities to get money flowing back to the companies and Georgia Tech.”

With over 25 founding company members, the Consortium celebrated its first official year of operation in August.

Creating a Resilient Network

The Manufacturing 4.0 Consortium originally grew out of an 18-month pilot project funded by the Department of Defense Office of Local Community Cooperation aiming to increase defense supply chain resilience, assist Georgia manufacturers in adopting new technologies, and foster collaboration by connecting manufacturers across Georgia.

Those goals and more are tackled by the Consortium’s focus on “networking, engagement, and collaboration,” says Stebner. “It's not just a consortium for Georgia Tech to take money from industry and do stuff with their money — the goal is to create new resources that enable us to collaborate in bigger ways than we could otherwise.”

To join the Consortium, industry members pay up to $10,000 annually to access its network, intellectual property, and facilities. With a 10% membership discount for Georgia businesses and a 75% discount for small businesses, the Consortium especially aims to promote growth for small Georgia manufacturers.

“Memberships come with time at the Advanced Manufacturing Pilot Facility, which we’re expanding to be this test bed for autonomous maturation of research and development,” says Stebner. “The fact that we have what’s going to be an almost $60 million facility behind us as a mechanism and a playground for all these companies is unique.”

“Having a shared use facility that is fully equipped to solve manufacturing’s most interesting challenges is not only a perk of Consortium memberships,” said Executive Director Steven Ferguson, “but it also serves as a hub for innovation in manufacturing.”

Industry Innovation

Many consortiums founded by academic institutions are primarily focused on academic research.

“The Manufacturing 4.0 consortium has an industry focus,” said Branden Kappes, founder and president of Consortium member company Contextualize LLC. “It's more about how we take this capability that, at the moment, is trapped in a lab and transition from a wonderful concept into a wonderful product.”

The Consortium achieves that translation through shared intellectual property agreements, collaborative research initiatives, and an emphasis on creating an engaged and open network of members.

“I see camaraderie inside the Manufacturing 4.0 Consortium,” says Kappes. “I see companies that overlap and compete in some areas, are complementary in others, and are willing to build a bridge to advance the capabilities of both sides and the community as a whole. That type of mentality is very exciting.”

“This is one of the most highly engaged groups I have interacted with in a professional setting,” said John Flynn, vice president of Sales at Consortium member company Endeavor 3D. “It is an incredibly dynamic melting pot of all the different facets of industry 4.0 and digital manufacturing, bringing everyone together from that part of the supply chain to create what I know will be important and value-added projects, ultimately resulting in intellectual property.”

“We are able to connect Consortium members with subject matter experts at Georgia Tech and within the Consortium who have ‘been there and done that,’” said Ferguson. “At the same time, we are working with manufacturers to create novel solutions to complex problems through research engagements. Blending all of those activities into one organization is part of the magic that is the Consortium.”

News Contact

Audra Davidson

Communications Manager

Georgia Tech Manufacturing Institute

Aug. 28, 2024

The National Science Foundation has awarded $2 million to Clark Atlanta University in partnership with the HBCU CHIPS Network, a collaborative effort involving historically black colleges and universities (HBCUs), government agencies, academia, and industry that will serve as a national resource for semiconductor research and education.

“This is an exciting time for the HBCU CHIPS Network,” said George White, senior director for Strategic Partnerships at Georgia Tech. “This funding, and the support of Georgia Tech Executive Vice President for Research Chaouki Abdallah, is integral for the successful launch of the CHIPS Network.”

The HBCU CHIPS Network works to cultivate a diverse and skilled workforce that supports the national semiconductor industry. The student research and internship opportunities, along with the development of specialized curricula in semiconductor design, fabrication, and related fields, will expand the microelectronics workforce. As part of the network, Georgia Tech will optimize the packaging of chips into systems.

News Contact

Georgia Tech Contact:

Amelia Neumeister | Research Communications Program Manager

Clark Atlanta University Contact:

Frances Williams

Aug. 19, 2024

Nylon, Teflon, Kevlar. These are just a few familiar polymers — large-molecule chemical compounds — that have changed the world. From Teflon-coated frying pans to 3D printing, polymers are vital to creating the systems that make the world function better.

Finding the next groundbreaking polymer is always a challenge, but now Georgia Tech researchers are using artificial intelligence (AI) to shape and transform the future of the field. Rampi Ramprasad’s group develops and adapts AI algorithms to accelerate materials discovery.

This summer, two papers published in the Nature family of journals highlight the significant advancements and success stories emerging from years of AI-driven polymer informatics research. The first, featured in Nature Reviews Materials, showcases recent breakthroughs in polymer design across critical and contemporary application domains: energy storage, filtration technologies, and recyclable plastics. The second, published in Nature Communications, focuses on the use of AI algorithms to discover a subclass of polymers for electrostatic energy storage, with the designed materials undergoing successful laboratory synthesis and testing.

“In the early days of AI in materials science, propelled by the White House’s Materials Genome Initiative over a decade ago, research in this field was largely curiosity-driven,” said Ramprasad, a professor in the School of Materials Science and Engineering. “Only in recent years have we begun to see tangible, real-world success stories in AI-driven accelerated polymer discovery. These successes are now inspiring significant transformations in the industrial materials R&D landscape. That’s what makes this review so significant and timely.”

AI Opportunities

Ramprasad’s team has developed groundbreaking algorithms that can instantly predict polymer properties and formulations before they are physically created. The process begins by defining application-specific target property or performance criteria. Machine learning (ML) models train on existing material-property data to predict these desired outcomes. Additionally, the team can generate new polymers, whose properties are forecasted with ML models. The top candidates that meet the target property criteria are then selected for real-world validation through laboratory synthesis and testing. The results from these new experiments are integrated with the original data, further refining the predictive models in a continuous, iterative process.
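
The iterative process described above can be illustrated with a toy sketch. This is not the team's algorithm or software: the one-number "descriptor" for each candidate, the `true_property` stand-in for a lab measurement, the nearest-neighbor `predict` model, and every parameter value are assumptions made up for illustration. It only shows the shape of the loop: predict, pick top candidates, test them, and fold the results back into the data.

```python
import random

def true_property(x):
    """Hypothetical stand-in for a lab measurement of a candidate described by x."""
    return 1.0 - (x - 0.7) ** 2

def predict(x, data):
    """Toy surrogate model: average the measured values of the two nearest known candidates."""
    nearest = sorted(data, key=lambda d: abs(d[0] - x))[:2]
    return sum(y for _, y in nearest) / len(nearest)

def design_loop(rounds=5, pool_size=200, top_k=3, seed=0):
    rng = random.Random(seed)
    # Existing material-property data as (descriptor, measured property) pairs.
    data = [(x, true_property(x)) for x in (0.1, 0.5, 0.9)]
    for _ in range(rounds):
        # Generate new candidates and rank them by the model's forecast.
        pool = [rng.random() for _ in range(pool_size)]
        ranked = sorted(pool, key=lambda x: predict(x, data), reverse=True)
        # "Synthesize and test" the top candidates, then add the results to the data
        # so the next round's predictions use them.
        for x in ranked[:top_k]:
            data.append((x, true_property(x)))
    best = max(data, key=lambda d: d[1])
    return best, len(data)

if __name__ == "__main__":
    best, n_measured = design_loop()
    print(f"best candidate so far: x={best[0]:.3f}, property={best[1]:.3f}, measurements={n_measured}")
```

In a real workflow the surrogate would be a trained ML model and the measurement step would be laboratory synthesis and testing; the sketch keeps both trivial so the feedback loop itself is easy to see.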

While AI can accelerate the discovery of new polymers, it also presents unique challenges. The accuracy of AI predictions depends on the availability of rich, diverse, extensive initial data sets, making quality data paramount. Additionally, designing algorithms capable of generating chemically realistic and synthesizable polymers is a complex task.

The real challenge begins after the algorithms make their predictions: proving that the designed materials can be made in the lab and function as expected and then demonstrating their scalability beyond the lab for real-world use. Ramprasad’s group designs these materials, while their fabrication, processing, and testing are carried out by collaborators at various institutions, including Georgia Tech. Professor Ryan Lively from the School of Chemical and Biomolecular Engineering frequently collaborates with Ramprasad’s group and is a co-author of the paper published in Nature Reviews Materials.

"In our day-to-day research, we extensively use the machine learning models Rampi’s team has developed,” Lively said. “These tools accelerate our work and allow us to rapidly explore new ideas. This embodies the promise of ML and AI because we can make model-guided decisions before we commit time and resources to explore the concepts in the laboratory."

Using AI, Ramprasad’s team and their collaborators have made significant advancements in diverse fields, including energy storage, filtration technologies, additive manufacturing, and recyclable materials.

Polymer Progress

One notable success, described in the Nature Communications paper, involves the design of new polymers for capacitors, which store electrostatic energy. These devices are vital components in electric and hybrid vehicles, among other applications. Ramprasad’s group worked with researchers from the University of Connecticut.

Current capacitor polymers offer either high energy density or thermal stability, but not both. By leveraging AI tools, the researchers determined that insulating materials made from polynorbornene and polyimide polymers can simultaneously achieve high energy density and high thermal stability. The polymers can be further enhanced to function in demanding environments, such as aerospace applications, while maintaining environmental sustainability.

“The new class of polymers with high energy density and high thermal stability is one of the most concrete examples of how AI can guide materials discovery,” said Ramprasad. “It is also the result of years of multidisciplinary collaborative work with Greg Sotzing and Yang Cao at the University of Connecticut and sustained sponsorship by the Office of Naval Research.”

Industry Potential

The potential for real-world translation of AI-assisted materials development is underscored by industry participation in the Nature Reviews Materials article. Co-authors of this paper also include scientists from Toyota Research Institute and General Electric. To further accelerate the adoption of AI-driven materials development in industry, Ramprasad co-founded Matmerize Inc., a software startup company recently spun out of Georgia Tech. Their cloud-based polymer informatics software is already being used by companies across various sectors, including energy, electronics, consumer products, chemical processing, and sustainable materials.

“Matmerize has transformed our research into a robust, versatile, and industry-ready solution, enabling users to design materials virtually with enhanced efficiency and reduced cost,” Ramprasad said. “What began as a curiosity has gained significant momentum, and we are entering an exciting new era of materials by design.”

News Contact

Tess Malone, Senior Research Writer/Editor

tess.malone@gatech.edu

Aug. 09, 2024

The federally funded Industrial Assessment Center (IAC) program provides small to mid-sized industrial facilities in the region with free assessments for energy, productivity, and waste, while also supporting workforce development, recruitment, and training.

“This IAC is a great example of the ways in which Georgia Tech is serving all of Georgia and the Southeast,” said Tim Lieuwen, executive director of Georgia Tech’s Strategic Energy Institute (SEI) and Regents’ Professor and holder of the David S. Lewis, Jr. Chair in the Daniel Guggenheim School of Aerospace Engineering.

“We support numerous small and medium-sized enterprises in rural, suburban, and urban areas, bringing the technical expertise of Georgia Tech to bear in solving real-world problems faced by our small businesses.”

Georgia Tech’s IAC, which serves Georgia, South Carolina, and North Florida, is administered jointly by the George W. Woodruff School of Mechanical Engineering and the Georgia Manufacturing Extension Partnership (GaMEP), part of the Enterprise Innovation Institute (EI2). The organization has performed thousands of assessments since its inception in the 1980s – usually at the rate of 15 to 20 per year – and typically identifies upwards of 10% in energy savings for clients.

The assessment team, overseen by IAC associate director Kelly Grissom, comprises faculty and student engineers from Georgia Tech and the Florida A&M University/Florida State University College of Engineering.

In addition, Georgia Tech leads the Southeastern IACs Center of Excellence, which partners the institution with fellow University System of Georgia (USG) entity Kennesaw State University, local HBCU Clark Atlanta University, and neighboring state capital HBCU Florida A&M University.

Although mechanical engineering has historically been the chief area of concentration for IAC’s interns, the program currently accepts students across a range of disciplines. “Increased diversity from that standpoint enriches the potential of the recommendations we can make,” said Grissom.

Students are integral to the program, as is Grissom’s role in facilitating their experiences with client engagement and technical recommendations.

“Kelly is the reason our program has been recognized,” said Randy Green, energy and sustainability services group manager at GaMEP. “He works tirelessly to ensure that assessments are accomplished with success for our manufacturers and students.”

“We also recognize our partnership with the Woodruff School of Mechanical Engineering and with IAC program lead Comas Haynes, Ph.D., who works diligently to keep us on track and connected with our sponsors at the U.S. Department of Energy,” Green added.

The DoE accolade represents “a ‘one Georgia Tech’ win,” symbolic of the synergistic relationships forged across the Institute, said Haynes, who also serves as the Hydrogen Initiative Lead at Georgia Tech’s Strategic Energy Institute (SEI) and Energy branch head in the Intelligent Sustainable Technologies Division at the Georgia Tech Research Institute. Haynes specifically cited Green’s “technical prowess and managerial oversight” as another key to the IAC program’s success.

Said Devesh Ranjan, Eugene C. Gwaltney, Jr. School Chair and professor in the George W. Woodruff School of Mechanical Engineering, “It is truly an honor for Georgia Tech to be named the Department of Energy Industrial (Training and) Assessment Center of the Year. Clean energy and manufacturing have been a focus for the Institute and the Woodruff School for a long time, and GTRI, EI2, and SEI have collaboratively done phenomenal work in helping manufacturers save energy, improve productivity, and reduce waste.”

To check eligibility and apply for assistance from Georgia Tech’s IAC, click here.

News Contact

Eve Tolpa

eve.tolpa@innovate.gatech.edu

Jul. 30, 2024

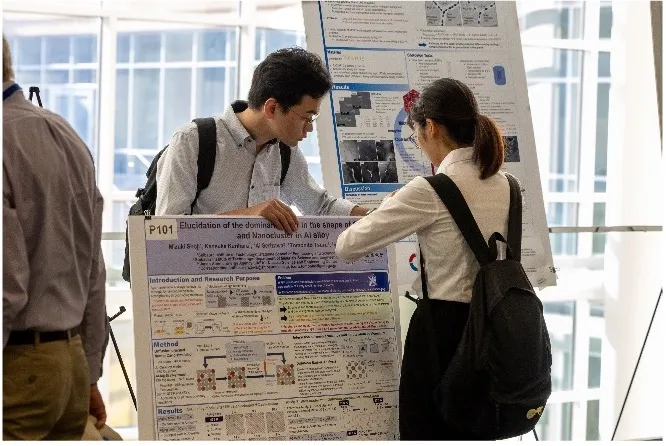

From airplanes to soda cans, aluminum is a crucial — not to mention, an incredibly sustainable — material in manufacturing. Since 2019, Georgia Tech has partnered with Novelis, a global leader in aluminum rolling and recycling, through the Novelis Innovation Hub to advance research and business opportunities in aluminum manufacturing.

Novelis and the Georgia Institute of Technology recently co-hosted the 19th International Conference on Aluminum Alloys (ICAA19). Held on Georgia Tech's campus, this event brought together the brightest minds in aluminum technology for four days of intensive learning and networking.

Since its inception in 1986, ICAA has been the premier global forum for aluminum manufacturing innovations. This year, the conference attracted over 300 participants from 19 countries, including representatives from academia, research organizations, and industry leaders.

“The diverse mix of attendees created a rich tapestry of knowledge and experience, fostering a robust exchange of ideas,” said Naresh Thadhani, conference co-chair and professor in the School of Materials Science and Engineering.

ICAA19 featured 12 symposia topics and over 250 technical presentations, delving into critical themes such as sustainability, future mobility, and next-generation manufacturing. Keynote addresses from leaders at the Aluminum Association, Airbus, and Coca-Cola set the stage for insightful discussions. Novelis Chief Technology Officer Philippe Meyer and Georgia Tech Executive Vice President for Research Chaouki Abdallah headlined the event, underscoring the importance of Novelis’ partnership with Georgia Tech.

Marking the fifth anniversary of the Novelis Innovation Hub at Georgia Tech, Hub Executive Director Shreyes Melkote says that “ICAA19 represents a prime example of the close collaboration between Novelis and the Institute, enabled by the Novelis Innovation Hub.” Melkote, a professor in the George W. Woodruff School of Mechanical Engineering, also serves as the associate director of the Georgia Tech Manufacturing Institute.

“This unique center for research, development, and technology has been instrumental in advancing aluminum innovations, exemplifying the power of partnerships in driving industry progress,” says Meyer. “As we reflect on the success of ICAA19, we remain committed to strengthening our existing partnerships and forging new alliances to accelerate innovation. The collaborative spirit showcased at the conference is a testament to our dedication to leading the aluminum industry into a more sustainable future.”

News Contact

Audra Davidson

Research Communications Program Manager

Georgia Tech Manufacturing Institute
