May 14, 2025

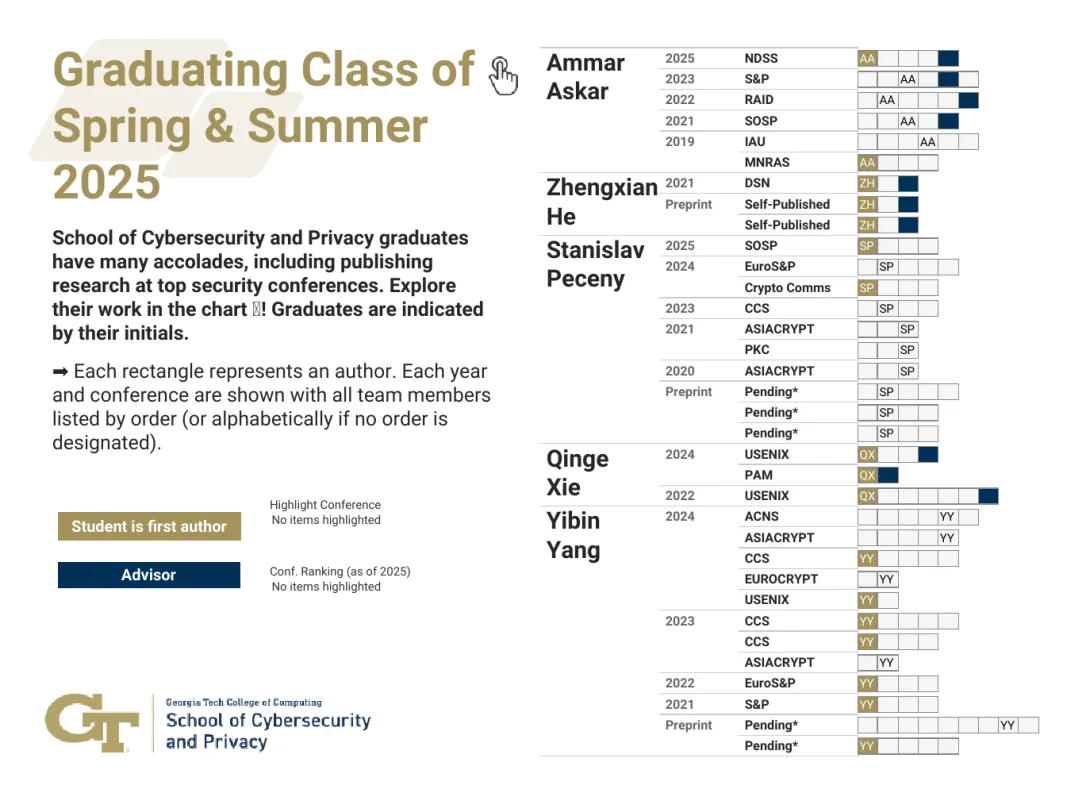

The School of Cybersecurity and Privacy at Georgia Tech is proud to recognize the accomplishments of five doctoral students who finished their doctoral programs in Spring 2025. These scholars have advanced critical research in software security, cryptography, and privacy, collectively publishing 34 papers, most of which appear in top-tier venues.

Ammar Askar developed new tools for software security in multi-language systems, including a concolic execution engine powered by large language models. He named DEF CON 2021, which he attended with the Systems Software and Security Lab (SSLab), as a favorite memory.

Zhengxian He persevered through the pandemic to lead a major project with an industry partner, achieving strong research outcomes. He will be joining Amazon and fondly remembers watching sunsets from the CODA building.

Stanislav Peceny focused on secure multiparty computation (MPC), designing high-performance cryptographic protocols that improve efficiency by up to 1000x. He’s known for his creativity in both research and life, naming avocado trees after famous mathematicians and enjoying research discussions on the CODA rooftop.

Qinge Xie impressed faculty with her adaptability across multiple domains. Her advisor praised her independence and technical range, noting her ability to pivot seamlessly between complex research challenges.

Yibin Yang contributed to the advancement of zero-knowledge proofs and MPC, building toolchains that are faster and more usable than existing systems. His work earned a Distinguished Paper Award at ACM CCS 2023, and he also served as an RSAC Security Scholar. Yang enjoyed teaching and engaging with younger students, especially through events like Math Kangaroo.

Faculty mentors included Regents’ Entrepreneur Mustaque Ahamad, Professors Taesoo Kim and Vladimir Kolesnikov, and Assistant Professor Frank Li, who played vital roles in guiding the graduates’ research journeys.

Learn more about the graduates and their mentors on the 2025 Ph.D. graduate microsite.

News Contact

JP Popham, Communications Officer II

College of Computing | School of Cybersecurity and Privacy

Apr. 02, 2025

Kinaxis, a global leader in supply chain orchestration, and the NSF AI Institute for Advances in Optimization (AI4OPT) at Georgia Tech today announced a new co-innovation partnership. This partnership will focus on developing scalable artificial intelligence (AI) and optimization solutions to address the growing complexity of global supply chains. AI4OPT operates under Tech AI, Georgia Tech’s AI hub, bringing together interdisciplinary expertise to advance real-world AI applications.

This particular collaboration builds on a multi-year relationship between Kinaxis and Georgia Tech, strengthening their shared commitment to turn academic innovation into real-world supply chain impact. The collaboration will span joint research, real-world applications, thought leadership, guest lectures, and student internships.

“In collaboration with AI4OPT, Kinaxis is exploring how the fusion of machine learning and optimization may bring a step change in capabilities for the next generation of supply chain management systems,” said Pascal Van Hentenryck, the A. Russell Chandler III Chair and professor at Georgia Tech, and director of AI4OPT and Tech AI at Georgia Tech.

Kinaxis’ AI-infused supply chain orchestration platform, Maestro™, combines proprietary technologies and techniques to deliver real-time transparency, agility, and decision-making across the entire supply chain — from multi-year strategic orchestration to last-mile delivery. As global supply chains face increasing disruptions from tariffs, pandemics, extreme weather, and geopolitical events, the Kinaxis–AI4OPT partnership will focus on developing AI-driven strategies to enhance companies’ responsiveness and resilience.

“At Kinaxis, we recognize the vital role that academic research plays in shaping the future of supply chain orchestration,” said Chief Technology Officer Gelu Ticala. “By partnering with world-class institutions like Georgia Tech, we’re closing the gap between AI innovation and implementation, bringing cutting-edge ideas into practice to solve the industry’s most pressing challenges.”

With more than 40 years of supply chain leadership, Kinaxis supports some of the world’s most complex industries, including high-tech, life sciences, industrial, mobility, consumer products, chemical, and oil and gas. Its customers include Unilever, P&G, Ford, Subaru, Lockheed Martin, Raytheon, Ipsen, and Santen.

About Kinaxis

Kinaxis is a global leader in modern supply chain orchestration, powering complex global supply chains and supporting the people who manage them, in service of humanity. Our powerful, AI-infused supply chain orchestration platform, Maestro™, combines proprietary technologies and techniques that provide full transparency and agility across the entire supply chain — from multi-year strategic planning to last-mile delivery. We are trusted by renowned global brands to provide the agility and predictability needed to navigate today’s volatility and disruption. For more news and information, please visit kinaxis.com or follow us on LinkedIn.

About AI4OPT

The NSF AI Institute for Advances in Optimization (AI4OPT) is one of the 27 National Artificial Intelligence Research Institutes set up by the National Science Foundation to conduct use-inspired research and realize the potential of AI. AI4OPT focuses on AI for Engineering and conducts cutting-edge research at the intersection of learning, optimization, and generative AI to transform decision making at massive scales, driven by applications in supply chains, energy systems, chip design and manufacturing, and sustainable food systems. AI4OPT brings together over 80 faculty and students from Georgia Tech, UC Berkeley, University of Southern California, UC San Diego, Clark Atlanta University, and the University of Texas at Arlington, working together with industrial partners that include Intel, Google, UPS, Ryder, Keysight, Southern Company, and Los Alamos National Laboratory. To learn more, visit ai4opt.org.

About Tech AI

Tech AI is Georgia Tech's hub for artificial intelligence research, education, and responsible deployment. With over $120 million in active AI research funding, including more than $60 million in NSF support for five AI Research Institutes, Tech AI drives innovation through cutting-edge research, industry partnerships, and real-world applications. With over 370 papers published at top AI conferences and workshops, Tech AI is a leader in advancing AI-driven engineering, mobility, and enterprise solutions. Through strategic collaborations, Tech AI bridges the gap between AI research and industry, optimizing supply chains, enhancing cybersecurity, advancing autonomous systems, and transforming healthcare and manufacturing. Committed to workforce development, Tech AI provides AI education across all levels, from K-12 outreach to undergraduate and graduate programs, as well as specialized certifications. These initiatives equip students with hands-on experience, industry exposure, and the technical expertise needed to lead in AI-driven industries. Bringing AI to the world through innovation, collaboration, and partnerships. Visit tech.ai.gatech.edu.

News Contact

Angela Barajas Prendiville | Director of Media Relations

aprendiville@gatech.edu

Mar. 21, 2025

March 20, 2025 – Tech AI, the AI hub at Georgia Tech, will host Tech AI Fest 2025 from March 26 to 28 at the Historic Academy of Medicine in Atlanta. This major AI event will bring together experts, researchers, industry professionals, policymakers, and students to explore the latest advancements and applications of artificial intelligence.

A Hub for AI Conversations

Tech AI Fest 2025 will bring together over 40 experts from top institutions and leading companies, including:

- Academic Institutions: Georgia Tech, Carnegie Mellon University, Harvard University, the University of Texas at Austin, Washington State University, and Columbia University.

- Leading Companies: J.P. Morgan, NVIDIA, Juniper Networks, Microsoft, OpenAI, Bosch USA, Kinaxis, MindsDB, Verdant Technologies, and Dandelion Science Corp.

Event Highlights

- Days 1 – 2 (March 26 – 27) will focus on how AI is being used in industry, government, and research. Sessions will discuss AI's role in technological progress, economic growth, and policy development.

- Day 3 (March 28) will showcase Georgia Tech's AI projects, including groundbreaking research, student presentations, and the launch of the Tech AI Alumni Group to strengthen industry-academic connections.

Commitment to AI Progress

Tech AI Fest 2025 shows Georgia Tech's dedication to advancing AI research, fostering innovation, and building meaningful partnerships across different sectors. The event is a platform for sharing knowledge, networking, and collaboration, putting attendees at the forefront of the AI revolution.

“AI is more than just an academic pursuit; it’s a force that’s reshaping industries, redefining education, and creating solutions for some of the biggest challenges we face today,” said Pascal Van Hentenryck, the A. Russell Chandler III Chair and professor in Georgia Tech’s H. Milton Stewart School of Industrial and Systems Engineering, director of the NSF Artificial Intelligence Institute for Advances in Optimization (AI4OPT), and director of Tech AI.

Registration and Participation

General admission is $25, but Georgia Tech students can attend for free while seats are available. Due to high demand, registration has reached capacity. Interested individuals can join the waiting list by contacting Josh Tullis at josh@corporate.gatech.edu.

For more information, visit the official event page.

About Tech AI

Tech AI is Georgia Tech’s hub for artificial intelligence research, education, and responsible deployment. It drives real-world AI solutions through innovation, collaboration, and industry partnerships. With over $120 million in active AI research funding and more than 370 publications at top AI conferences, Tech AI is ranked No. 5 in the U.S. for AI research and leads the way in AI-driven engineering and applied research. Supported by over $60 million in National Science Foundation funding, Tech AI plays a critical role in developing the next generation of AI leaders through world-class degree programs and specialized certifications. By bridging the gap between research and real-world applications, Tech AI collaborates with industry and government partners to optimize supply chains, enhance cybersecurity, advance autonomous systems, revolutionize healthcare, improve energy efficiency, and drive sustainable manufacturing.

News Contact

Georgia Tech Media Relations

Feb. 17, 2025

Men and women in California put their lives on the line battling wildfires every year, but a future may come in which machines powered by artificial intelligence, not firefighters, are on the front lines.

However, this new generation of self-thinking robots would need security protocols to ensure they aren’t susceptible to hackers. To integrate such robots into society, they must come with assurances that they will behave safely around humans.

It raises the question: can you guarantee the safety of something that doesn’t exist yet? Assistant Professor Glen Chou hopes to do just that by developing algorithms that will enable autonomous systems to learn and adapt while acting with safety and security assurances.

He plans to launch research initiatives, in collaboration with the School of Cybersecurity and Privacy and the Daniel Guggenheim School of Aerospace Engineering, to secure this new technological frontier as it develops.

“To operate in uncertain real-world environments, robots and other autonomous systems need to leverage and adapt a complex network of perception and control algorithms to turn sensor data into actions,” he said. “To obtain realistic assurances, we must do a joint safety and security analysis on these sensors and algorithms simultaneously, rather than one at a time.”

This end-to-end method would proactively look for flaws in the robot’s systems rather than wait for them to be exploited. This would lead to intrinsically robust robotic systems that can recover from failures.

Chou said this research will be useful in other domains, including advanced space exploration. If a space rover is sent to one of Saturn’s moons, for example, it needs to be able to act and think independently of scientists on Earth.

Aside from fighting fires and exploring space, this technology could perform maintenance in nuclear reactors, automatically maintain the power grid, and make autonomous surgery safer. It could also bring assistive robots into the home, enabling higher standards of care.

In-home assistance is a challenging domain where safety, security, and privacy concerns are paramount because of frequent, close contact with humans.

This work will begin in the newly established Trustworthy Robotics Lab at Georgia Tech, which Chou directs. He and his Ph.D. students will design principled algorithms that enable general-purpose robots and autonomous systems to operate capably, safely, and securely with humans while remaining resilient to real-world failures and uncertainty.

Chou earned dual bachelor’s degrees in electrical engineering and computer sciences and in mechanical engineering from the University of California, Berkeley, in 2017, and a master’s and Ph.D. in electrical and computer engineering from the University of Michigan in 2019 and 2022, respectively. He was a postdoctoral researcher at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) before joining Georgia Tech in November 2024. He is a recipient of a National Defense Science and Engineering Graduate (NDSEG) Fellowship and an NSF Graduate Research Fellowship, and he was named a Robotics: Science and Systems Pioneer in 2022.

News Contact

John (JP) Popham

Communications Officer II

College of Computing | School of Cybersecurity and Privacy

Nov. 15, 2024

The Automatic Speech Recognition (ASR) models that power voice assistants like Amazon Alexa may have difficulty transcribing English speakers with minority dialects.

A study by Georgia Tech and Stanford researchers compared the transcribing performance of leading ASR models for people using Standard American English (SAE) and three minority dialects — African American Vernacular English (AAVE), Spanglish, and Chicano English.

Interactive Computing Ph.D. student Camille Harris is the lead author of a paper accepted to the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP), held this week in Miami.

Harris recruited people who spoke each dialect and had them read from a Spotify podcast dataset, which includes podcast audio and metadata. She then used three ASR models (wav2vec 2.0, HuBERT, and Whisper) to transcribe the audio and compared their performance.
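The article does not say which accuracy metric the study used. A common way to compare ASR transcriptions is word error rate (WER): the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and a model's output, divided by the number of words in the reference. The minimal Python sketch below assumes WER as the metric; the function names and the sample sentences and group labels are hypothetical, not taken from the study.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dp[i][j] = fewest edits turning the first i reference words
    # into the first j hypothesis words (word-level Levenshtein distance)
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution (or exact match)
            )
    return dp[len(ref)][len(hyp)] / len(ref)


def mean_wer_by_group(samples):
    """Average per-utterance WER for each speaker group.

    samples: iterable of (group, reference, hypothesis) tuples (hypothetical format).
    """
    totals = {}
    for group, ref, hyp in samples:
        wer_sum, count = totals.get(group, (0.0, 0))
        totals[group] = (wer_sum + word_error_rate(ref, hyp), count + 1)
    return {group: wer_sum / count for group, (wer_sum, count) in totals.items()}


if __name__ == "__main__":
    samples = [
        ("group_a", "the show starts at nine", "the show starts at nine"),
        ("group_b", "the show starts at nine", "the show start at night"),
    ]
    print(mean_wer_by_group(samples))  # {'group_a': 0.0, 'group_b': 0.4}
```

A lower WER means a more accurate transcription, so "SAE outperformed each minority dialect" would correspond to a lower group WER for SAE speakers. Averaging per-utterance WER, as above, is one design choice; pooling all edits and all reference words per group is another, and the two can differ when utterance lengths vary.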

Harris found that, for each model, SAE transcriptions were significantly more accurate than those for each minority dialect. The models transcribed men who spoke SAE more accurately than women who spoke SAE. Speakers of Spanglish and Chicano English had the least accurate transcriptions of the test groups.

While the models transcribed SAE-speaking women less accurately than their male counterparts, that pattern did not hold across minority dialects. Minority men had the least accurate transcriptions of all demographics in the study.

“I think people would expect if women generally perform worse and minority dialects perform worse, then the combination of the two must also perform worse,” Harris said. “That’s not what we observed.

“Sometimes minority dialect women performed better than Standard American English. We found a consistent pattern that men of color, particularly Black and Latino men, could be at the highest risk for these performance errors.”

Addressing underrepresentation

Harris said the cause of that outcome starts with the training data used to build these models: model performance reflected the underrepresentation of minority dialects in the datasets.

AAVE performed best under the Whisper model, which Harris said had the most inclusive training data of minority dialects.

Harris also looked at whether her findings mirrored existing systems of oppression. Black men have high incarceration rates and are among the groups most targeted by police. Harris said there could be a correlation between that and the low rate of Black men enrolled in universities, which leads to less representation in technology spaces.

“Minority men performing worse than minority women doesn’t necessarily mean minority men are more oppressed,” she said. “They may be less represented than minority women in computing and the professional sector that develops these AI systems.”

Harris also had to account for a few sources of variation within AAVE, including code-switching and regional subdialects.

Harris noted cases in her study of speakers code-switching to SAE. Speakers who code-switched received more accurate transcriptions than speakers who did not.

Harris also tried to include speakers from different regions.

“It’s interesting from a linguistic and history perspective if you look at migration patterns of Black folks — perhaps people moving from a southern state to a northern state over time creates different linguistic variations,” she said. “There are also generational variations in that older Black Americans may speak differently from younger folks. I think the variation was well represented in our data. We wanted to be sure to include that for robustness.”

TikTok barriers

Harris said she built this study on an earlier paper she authored that examined user-design barriers and biases faced by Black content creators on TikTok. She presented that paper at the Association for Computing Machinery’s (ACM) 2023 Conference on Computer-Supported Cooperative Work and Social Computing (CSCW).

Those content creators depended on TikTok for a significant portion of their income. When captioning videos grew in popularity, the creators noticed that the app’s built-in ASR tool transcribed their speech inaccurately. That forced them to enter their captions manually, while SAE speakers could rely on the ASR feature.

“Minority users of these technologies will have to be more aware and keep in mind that they’ll probably have to do a lot more customization because things won’t be tailored to them,” Harris said.

Harris said there are ways that designers of ASR tools could work toward being more inclusive of minority dialects, but cultural challenges could arise.

“It could be difficult to collect more minority speech data, and you have to consider consent with that,” she said. “Developers need to be more community-engaged to think about the implications of their models and whether it’s something the community would find helpful.”

News Contact

Nathan Deen

Communications Officer

School of Interactive Computing

Nov. 13, 2024

Members of the recently victorious cybersecurity group known as Team Atlanta received recognition from one of the top technology companies in the world for discovering a zero-day vulnerability during the DARPA AI Cyber Challenge (AIxCC) earlier this year.

On November 1, a team of security researchers from Google’s Project Zero announced they had been inspired by the Georgia Tech students and alumni on Team Atlanta, who discovered a flaw in SQLite, a widely used open-source database that ran the competition’s scoring algorithm.

According to a post on the project’s blog, after Google researchers saw the success of Atlantis, the LLM-powered cyber reasoning system Team Atlanta used in AIxCC, they deployed their own large language model (LLM) based tool to look for vulnerabilities in SQLite.

Google’s Big Sleep tool discovered a security flaw in SQLite, an exploitable stack buffer underflow. Project Zero reported the vulnerability and it was patched almost immediately.

“We’re thrilled to see our work on LLM-based bug discovery and remediation inspiring further advancements in security research at Google,” said Hanqing Zhao, a Georgia Tech Ph.D. student. “It’s incredibly rewarding to witness the broader community recognizing and citing our contributions to AI and LLM-driven security efforts.”

Zhao led a group within Team Atlanta that tracked the project’s success during the competition, work that led to the bug’s discovery. He also wrote a technical breakdown of their findings in a blog post cited by Google’s Project Zero.

“This achievement was entirely autonomous, without any human intervention, and we hadn’t even anticipated targeting SQLite3,” he said. “The outcome highlighted the transformative potential of generative AI in security research. Our approach is rooted in a simple yet effective philosophy: mimic the expertise of seasoned security researchers using LLMs.”

The DARPA AI Cyber Challenge (AIxCC) semi-final competition was held at DEF CON 32 in Las Vegas. Team Atlanta, which included Georgia Tech experts, was among the contest’s winners.

Team Atlanta will now compete against six other teams in the final round, which will take place at DEF CON 33 in August 2025. Each finalist will use its $2 million semi-final prize to improve its AI system over the next 12 months. Team Atlanta consists of past and present Georgia Tech students and was put together with the help of SCP Professor Taesoo Kim.

The AI systems in the finals must be open-sourced and ready for immediate, real-world launch. The AIxCC final competition will award the champion a $4 million grand prize.

The team tested their cyber reasoning system (CRS), dubbed Atlantis, on software used for data management, website support, healthcare systems, supply chains, electrical grids, transportation, and other critical infrastructures.

Atlantis is a next-generation bug-finding and bug-fixing system that can hunt bugs in multiple programming languages. The system immediately issues accurate software patches without any human intervention.

AIxCC, a Pentagon-backed initiative announced in August 2023, will award up to $20 million in prize money throughout the competition. Team Atlanta was among the 42 teams that qualified for the semi-final competition earlier this year.

News Contact

John Popham

Communications Officer II | School of Cybersecurity and Privacy

Oct. 01, 2024

Even though artificial intelligence (AI) is not advanced enough to help the average person build weapons of mass destruction, federal agencies know it could become possible and are keeping pace with next-generation technologies through rigorous research and strategic partnerships.

It is a delicate balance, but as the leader of the Department of Homeland Security (DHS) Countering Weapons of Mass Destruction Office (CWMD) told a room full of Georgia Tech students, faculty, and staff, there is no room for error.

“You have to be right all the time, the bad guys only have to be right once,” said Mary Ellen Callahan, assistant secretary for CWMD.

As a guest of John Tien, former DHS deputy secretary and professor of practice in the School of Cybersecurity and Privacy as well as the Sam Nunn School of International Affairs, Callahan was at Georgia Tech for three separate speaking engagements in late September.

"Assistant Secretary Callahan's contributions were remarkable in so many ways,” said Tien. “Most importantly, I love how she demonstrated to our students that the work in the fields of cybersecurity, privacy, and homeland security is an honorable, interesting, and substantive way to serve the greater good of keeping the American people safe and secure. As her former colleague at the U.S. Department of Homeland Security, I was proud to see her represent her CWMD team, DHS, and the Biden-Harris Administration in the way she did, with humility, personality, and leadership."

While the prospect of AI-assisted WMDs is terrifying, it is just a glimpse into what Callahan’s office handles on a regular basis. The assistant secretary walked her listeners through how CWMD works with federal and local law enforcement to identify and detect the signs of potential chemical, biological, radiological, or nuclear (CBRN) weapons.

“There's a whole cadre of professionals who spend every day preparing for the worst day in U.S. history,” said Callahan. “They are doing everything in their power to make sure that that does not happen.”

CWMD is also researching ways to integrate AI technologies into current surveillance systems to help identify and respond to threats faster. For example, an AI-backed bio-hazard surveillance system would allow analysts to characterize and contextualize the risk of potential bio-hazard threats in a timely manner.

Callahan’s office spearheaded “Reducing the Risks at the Intersection of Artificial Intelligence and Chemical, Biological, Radiological, and Nuclear Threats,” a report exploring the advantages and risks of AI that was released to the public earlier this year.

The report was a multidisciplinary effort that was created in collaboration with the White House Office of Science and Technology Policy, Department of Energy, academic institutions, private industries, think tanks, and third-party evaluators.

During his introduction of the assistant secretary, SCP Chair Michael Bailey told those seated in the Coda Atrium that Callahan’s career is an excellent example of the interdisciplinary approach he hopes the school’s students and faculty can use as a roadmap.

“Important, impactful, and interdisciplinary research can be inspired by everyday problems,” he said. "We believe that building a secure future requires revolutionizing security education and being vigilant, and together, we can achieve this goal."

While on campus Tuesday, Callahan gave a special guest lecture to the students in “CS 3237: Human Dimension of Cybersecurity: People, Organizations, Societies” and “CS 4267: Critical Infrastructures.” Following the lecture, she gave a prepared speech to students, faculty, and staff.

Lastly, she participated in a panel discussion with SCP J.Z. Liang Chair Peter Swire and Jerry Perullo, SCP professor of practice and former CISO of Intercontinental Exchange and the New York Stock Exchange. Tien moderated the panel.

News Contact

John Popham, Communications Officer II

School of Cybersecurity and Privacy | Georgia Institute of Technology

scp.cc.gatech.edu | in/jp-popham on LinkedIn


Sep. 26, 2024

Is it a building or a street? How tall is the building? Are there powerlines nearby?

These are details autonomous flying vehicles would need to know to function safely. However, few aerial image datasets exist that can adequately train the computer vision algorithms that would pilot these vehicles.

That’s why Georgia Tech researchers created a new benchmark dataset of computer-generated aerial images.

Judy Hoffman, an assistant professor in Georgia Tech’s School of Interactive Computing, worked with students in her lab to create SKYSCENES. The dataset contains over 33,000 aerial images of cities curated from a computer simulation program.

Hoffman said sufficient training datasets could unlock the potential of autonomous flying vehicles. Constructing those datasets is a challenge the computer vision research community has been working for years to overcome.

“You can’t crowdsource it the same way you would standard internet images,” Hoffman said. “Trying to collect it manually would be very slow and expensive — akin to what the self-driving industry is doing driving around vehicles, but now you’re talking about drones flying around.

“We must fix those problems to have models that work reliably and safely for flying vehicles.”

Many existing datasets aren’t annotated well enough for algorithms to distinguish objects in the image. For example, the algorithms may not distinguish the surface of a building from the surface of a street.

Working with Hoffman, Ph.D. student Sahil Khose tried a new approach: constructing a synthetic image dataset from CARLA, an open-source, ground-view simulator.

CARLA was originally designed to provide ground-view simulation for self-driving vehicles. It creates an open-world virtual reality that allows users to drive around in computer-generated cities.

Khose and his collaborators adjusted CARLA’s interface to support aerial views that mimic views one might get from unmanned aerial vehicles (UAVs).

What's the Forecast?

The team also created new virtual scenarios to mimic the real world by accounting for changes in weather, times of day, various altitudes, and population per city. The algorithms will struggle to recognize the objects in the frame consistently unless those details are incorporated into the training data.

“CARLA’s flexibility offers a wide range of environmental configurations, and we take several important considerations into account while curating SKYSCENES images from CARLA,” Khose said. “Those include strategies for obtaining diverse synthetic data, embedding real-world irregularities, avoiding correlated images, addressing skewed class representations, and reproducing precise viewpoints.”

SKYSCENES is not the largest dataset of aerial images to be released, but a paper co-authored by Khose shows that models trained on it outperform existing models.

Khose said models trained on this dataset exhibit strong generalization to real-world scenarios, and integrating real-world data further improves their performance. The dataset also lets researchers control variability, such as weather, time of day, and altitude, which is essential for evaluating models across different tasks.

“This dataset drives advancements in multi-view learning, domain adaptation, and multimodal approaches, with major implications for applications like urban planning, disaster response, and autonomous drone navigation,” Khose said. “We hope to bridge the gap for synthetic-to-real adaptation and generalization for aerial images.”

Seeing the Whole Picture

For algorithms, generalization is the ability to perform tasks well on new data that extends beyond the specific examples on which they were trained.

“If you have 200 images, and you train a model on those images, they’ll do well at recognizing what you want them to recognize in that closed-world initial setting,” Hoffman said. “But if we were to take aerial vehicles and fly them around cities at various times of the day or in other weather conditions, they would start to fail.”

That’s why Khose designed algorithms to enhance the quality of the curated images.

“These images are captured from 100 meters above ground, which means the objects appear small and are challenging to recognize,” he said. “We focused on developing algorithms specifically designed to address this.”

Those algorithms improve the ability of machine learning (ML) models to recognize small objects, helping them perform better when navigating new environments.

“Our annotations help the models capture a more comprehensive understanding of the entire scene — where the roads are, where the buildings are, and know they are buildings and not just an obstacle in the way,” Hoffman said. “It gives a richer set of information when planning a flight.

“To work safely, many autonomous flight plans might require a map given to them beforehand. If you have successful vision systems that understand exactly what the obstacles in the real world are, you could navigate in previously unseen environments.”

For more information about Georgia Tech Research at ECCV 2024, click here.

News Contact

Nathan Deen

Communications Officer

School of Interactive Computing