Nov. 11, 2024

A first-of-its-kind algorithm developed at Georgia Tech is helping scientists study interactions between electrons. This innovation in modeling technology can lead to discoveries in physics, chemistry, materials science, and other fields.

The new algorithm is faster than existing methods while remaining highly accurate. The solver surpasses the limits of current models by demonstrating scalability across chemical system sizes ranging from small to large.

Computer scientists and engineers benefit from the algorithm’s ability to balance processor loads. This work allows researchers to tackle larger, more complex problems without the prohibitive costs associated with previous methods.

Its ability to solve block linear systems drives the algorithm’s ingenuity. According to the researchers, their approach is the first known use of a block linear system solver to calculate electronic correlation energy.

The Georgia Tech team won’t need to travel far to share their findings with the broader high-performance computing community. They will present their work in Atlanta at the 2024 International Conference for High Performance Computing, Networking, Storage and Analysis (SC24).


“The combination of solving large problems with high accuracy can enable density functional theory simulation to tackle new problems in science and engineering,” said Edmond Chow, professor and associate chair of Georgia Tech’s School of Computational Science and Engineering (CSE).

Density functional theory (DFT) is a modeling method for studying electronic structure in many-body systems, such as atoms and molecules.

An important concept that DFT models is electronic correlation, the interaction between electrons in a quantum system. Electron correlation energy is the measure of how much the movement of one electron is influenced by the presence of all other electrons.

Random phase approximation (RPA) is used to calculate electron correlation energy. While RPA is very accurate, it becomes computationally more expensive as the size of the system being calculated increases.

Georgia Tech’s algorithm enhances electronic correlation energy computations within the RPA framework. The approach circumvents inefficiencies and achieves faster solution times, even for small-scale chemical systems.

The group integrated the algorithm into existing work on SPARC, a real-space electronic structure software package for accurate, efficient, and scalable solutions of DFT equations. School of Civil and Environmental Engineering Professor Phanish Suryanarayana is SPARC’s lead researcher.

The group tested the algorithm on small chemical systems of silicon crystals numbering as few as eight atoms. The method achieved faster calculation times and scaled to larger system sizes than direct approaches.

“This algorithm will enable SPARC to perform electronic structure calculations for realistic systems with a level of accuracy that is the gold standard in chemical and materials science research,” said Suryanarayana.

RPA is expensive because its computational cost scales quartically with system size. When the size of a chemical system is doubled, the computational cost increases by a factor of 16.

Instead, Georgia Tech’s algorithm scales cubically by solving block linear systems. This capability makes it feasible to solve larger problems at less expense.
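The difference between quartic and cubic scaling can be checked with a few lines of arithmetic. The sketch below assumes only that cost is proportional to system size raised to a fixed power. The function name `cost_growth` is illustrative and does not come from SPARC or the researchers' code.

```python
def cost_growth(size_factor: float, exponent: int) -> float:
    """Return how much the cost grows when system size is multiplied by
    size_factor, assuming cost is proportional to size**exponent."""
    return size_factor ** exponent


# Doubling the system size under quartic versus cubic scaling.
quartic_growth = cost_growth(2, 4)
cubic_growth = cost_growth(2, 3)

# Under these assumptions, the gap between the two widens as systems grow.
for factor in (2, 4, 8):
    ratio = cost_growth(factor, 4) / cost_growth(factor, 3)
    print(f"size x{factor}: quartic cost is {ratio:.0f}x the cubic cost")
```

Under this simple model, the advantage of cubic scaling equals the size factor itself, which is why the savings matter most for large systems.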

Solving block linear systems involves a trade-off in choosing the block size. Larger blocks reduce the number of steps the solver takes, but each step then demands more computation on the processors.

Tech’s solution is a dynamic block size selection solver. The solver allows each processor to independently select block sizes to calculate. This solution further assists in scaling, and improves processor load balancing and parallel efficiency.
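The trade-off can be made concrete with a toy cost model. Everything in the sketch below is an assumption made for illustration: the formula in `estimated_cost`, its constants, and the candidate block sizes are hypothetical and are not taken from the researchers' solver. It shows only the general idea of each processor choosing the block size that minimizes its own estimated cost.

```python
import math


def estimated_cost(num_rhs, block_size, iters_single=100.0, alpha=0.5):
    """Toy cost estimate (hypothetical model, not the published algorithm).

    Assumes larger blocks need fewer solver steps, while the work per step
    grows faster than linearly with the block size.
    """
    num_blocks = math.ceil(num_rhs / block_size)
    steps = iters_single / (block_size ** alpha)
    work_per_step = block_size + 0.05 * block_size ** 2
    return num_blocks * steps * work_per_step


def pick_block_size(num_rhs, candidates=(1, 2, 4, 8, 16, 32)):
    """Pick the candidate block size with the lowest estimated cost."""
    usable = [b for b in candidates if b <= num_rhs]
    return min(usable, key=lambda b: estimated_cost(num_rhs, b))


# Each processor holds a different number of right-hand sides and
# chooses its block size independently of the others.
workloads = {"proc0": 64, "proc1": 10, "proc2": 3}
choices = {name: pick_block_size(n) for name, n in workloads.items()}
print(choices)
```

Because each choice depends only on local information, no coordination between processors is needed in this sketch. A real solver would base the estimate on measured performance rather than a fixed formula.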

“The new algorithm has many forms of parallelism, making it suitable for immense numbers of processors,” Chow said. “The algorithm works in a real-space, finite-difference DFT code. Such a code can scale efficiently on the largest supercomputers.”

Georgia Tech alumni Shikhar Shah (Ph.D. CSE 2024), Hua Huang (Ph.D. CSE 2024), and Ph.D. student Boqin Zhang led the algorithm’s development. The project was the culmination of work for Shah and Huang, who completed their degrees this summer. John E. Pask, a physicist at Lawrence Livermore National Laboratory, joined the Tech researchers on the work.

Shah, Huang, Zhang, Suryanarayana, and Chow are among more than 50 students, faculty, research scientists, and alumni affiliated with Georgia Tech who are scheduled to give more than 30 presentations at SC24. The experts will present their research through papers, posters, panels, and workshops.

SC24 takes place Nov. 17-22 at the Georgia World Congress Center in Atlanta.

“The project’s success came from combining expertise from people with diverse backgrounds ranging from numerical methods to chemistry and materials science to high-performance computing,” Chow said.

“We could not have achieved this as individual teams working alone.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Oct. 24, 2024

The U.S. Department of Energy (DOE) has awarded Georgia Tech researchers a $4.6 million grant to develop improved cybersecurity protection for renewable energy technologies.

Associate Professor Saman Zonouz will lead the project and leverage the latest artificial intelligence (AI) technology to create Phorensics. The new tool will anticipate cyberattacks on critical infrastructure and provide analysts with an accurate reading of what vulnerabilities were exploited.

“This grant enables us to tackle one of the crucial challenges facing national security today: our critical infrastructure resilience and post-incident diagnostics to restore normal operations in a timely manner,” said Zonouz.

“Together with our amazing team, we will focus on cyber-physical data recovery and post-mortem forensics analysis after cybersecurity incidents in emerging renewable energy systems.”

As the integration of renewable energy technology into national power grids increases, so does their vulnerability to cyberattacks. These threats put energy infrastructure at risk and pose a significant danger to public safety and economic stability. The AI behind Phorensics will allow analysts and technicians to scale security efforts to keep up with a growing power grid that is becoming more complex.

This effort is part of the Security of Engineering Systems (SES) initiative at Georgia Tech’s School of Cybersecurity and Privacy (SCP). SES has three pillars: research, education, and testbeds, with multiple ongoing large, sponsored efforts.

“We had a successful hiring season for SES last year and will continue filling several open tenure-track faculty positions this upcoming cycle,” said Zonouz.

“With top-notch cybersecurity and engineering schools at Georgia Tech, we have begun the SES journey with a dedicated passion to pursue building real-world solutions to protect our critical infrastructures, national security, and public safety.”

Zonouz is the director of the Cyber-Physical Systems Security Laboratory (CPSec) and is jointly appointed by Georgia Tech’s School of Cybersecurity and Privacy (SCP) and the School of Electrical and Computer Engineering (ECE).

The three Georgia Tech researchers joining him on this project are Brendan Saltaformaggio, associate professor in SCP and ECE; Taesoo Kim, jointly appointed professor in SCP and the School of Computer Science; and Animesh Chhotaray, research scientist in SCP.

Katherine Davis, associate professor at the Texas A&M University Department of Electrical and Computer Engineering, has partnered with the team to develop Phorensics. The team will also collaborate with the NREL National Lab and industry partners on technology transfer and commercialization initiatives.

The Energy Department defines renewable energy as energy from unlimited, naturally replenished resources, such as the sun, tides, and wind. Renewable energy can be used for electricity generation, space and water heating and cooling, and transportation.

News Contact

John Popham

Communications Officer II

College of Computing | School of Cybersecurity and Privacy

Oct. 24, 2024

Eight Georgia Tech researchers were honored with the ACM Distinguished Paper Award for their groundbreaking contributions to cybersecurity at the recent ACM Conference on Computer and Communications Security (CCS).

Three papers were recognized for addressing critical challenges in the field, spanning areas such as automotive cybersecurity, password security, and cryptographic testing.

“These three projects underscore Georgia Tech's leadership in advancing cybersecurity solutions that have real-world impact, from protecting critical infrastructure to ensuring the security of future computing systems and improving everyday digital practices,” said School of Cybersecurity and Privacy (SCP) Chair Michael Bailey.

One of the papers, ERACAN: Defending Against an Emerging CAN Threat Model, was co-authored by Ph.D. student Zhaozhou Tang, Associate Professor Saman Zonouz, and College of Engineering Dean and Professor Raheem Beyah. This research focuses on securing the controller area network (CAN), a vital system used in modern vehicles that is increasingly targeted by cyber threats.

"This project is led by our Ph.D. student Zhaozhou Tang with the Cyber-Physical Systems Security (CPSec) Lab," said Zonouz. "Impressively, this was Zhaozhou's first paper in his Ph.D., and he deserves special recognition for this groundbreaking work on automotive cybersecurity."

The work introduces a comprehensive defense system to counter advanced threats to vehicular CAN networks, and the team is collaborating with the Hyundai America Technical Center to implement the research. The CPSec Lab is a collaborative effort between SCP and the School of Electrical and Computer Engineering (ECE).

In another paper, Testing Side-Channel Security of Cryptographic Implementations Against Future Microarchitectures, Assistant Professor Daniel Genkin collaborated with international researchers to define security threats in new computing technology.

"We appreciate ACM for recognizing our work," said Genkin. “Tools for early-stage testing of CPUs for emerging side-channel threats are crucial to ensuring the security of the next generation of computing devices.”

The third paper, Unmasking the Security and Usability of Password Masking, was authored by graduate students Yuqi Hu, Suood Al Roomi, Sena Sahin, and Frank Li, SCP and ECE assistant professor. This study investigated the effectiveness of password masking, the practice of hiding characters as they are typed, and offered recommendations for implementing it.

"Password masking is a widely deployed security mechanism that hasn't been extensively investigated in prior works," said Li.

The assistant professor credited the collaborative efforts of his students, particularly Yuqi Hu, for leading the project.

The ACM Conference on Computer and Communications Security (CCS) is the flagship annual conference of the Special Interest Group on Security, Audit and Control (SIGSAC) of the Association for Computing Machinery (ACM). The conference was held from Oct. 14-18 in Salt Lake City.

News Contact

John Popham

Communications Officer II

College of Computing | School of Cybersecurity and Privacy

Oct. 11, 2024

A newly designed soil-powered fuel cell that could provide a sustainable alternative to batteries was recognized as an honorable mention in the annual Fast Company Innovation by Design Awards.

Terracell is roughly the size of a paperback book and uses microbes found in soil to generate energy for low-power applications.

Previous designs for soil microbial fuel cells required water submergence or saturated soil. Terracell can function in soil with a volumetric water content of 42%.

Terracell placed in Fast Company’s list of the best sustainability-focused designs of 2024.

Researchers at Northwestern University lead the multi-institution research team that designed Terracell.

Josiah Hester, an associate professor in Georgia Tech's School of Interactive Computing who previously worked at Northwestern, directs the Ka Moamoa Lab, where the project was conceived.

The team includes researchers from Northwestern, Georgia Tech, Stanford, the University of California-San Diego, and the University of California-Santa Cruz.

Their research was published in January in the Proceedings of the Association for Computing Machinery on Interactive, Mobile, Wearable, and Ubiquitous Technologies. The researchers will also present this work at the ACM international joint conference on Pervasive and Ubiquitous Computing (Ubicomp), Oct. 5-9.

According to the Fast Company website, the Innovation by Design Awards recognize “designers and businesses solving the most crucial problems of today and anticipating the pressing issues of tomorrow.” Winners are published in Fast Company Magazine and are honored at the Fast Company Innovation Festival in the fall.

“Terracell could reduce e-waste and extend the useful lifetime of electronics deployed for agriculture, environmental monitoring, and smart cities,” Hester said. “We were honored to be recognized for the design innovation award. It is a testament to the promise of sustainable computing and our hope for a more sustainable world.”

For more information about Terracell, see the story featured on Northwestern Now, or visit the project’s website.

News Contact

Nathan Deen, Communications Officer

Georgia Tech School of Interactive Computing

nathan.deen@cc.gatech.edu

Sep. 19, 2024

A new algorithm tested on NASA’s Perseverance Rover on Mars may lead to better forecasting of hurricanes, wildfires, and other extreme weather events that impact millions globally.

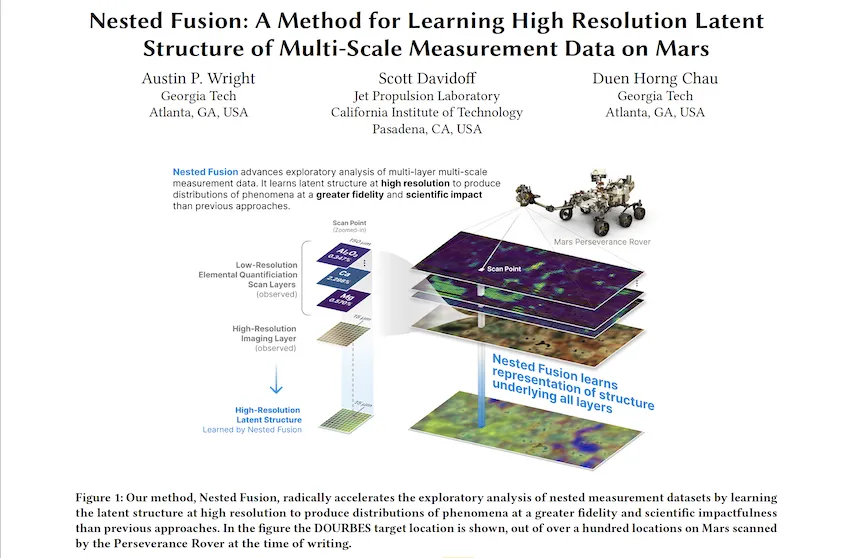

Georgia Tech Ph.D. student Austin P. Wright is first author of a paper that introduces Nested Fusion. The new algorithm improves scientists’ ability to search for past signs of life on the Martian surface.

In addition to supporting NASA’s Mars 2020 mission, scientists from other fields working with large, overlapping datasets can apply Nested Fusion’s methods to their own studies.

Wright presented Nested Fusion at the 2024 International Conference on Knowledge Discovery and Data Mining (KDD 2024) where it was a runner-up for the best paper award. KDD is widely considered the world's most prestigious conference for knowledge discovery and data mining research.

“Nested Fusion is really useful for researchers in many different domains, not just NASA scientists,” said Wright. “The method visualizes complex datasets that can be difficult to get an overall view of during the initial exploratory stages of analysis.”

Nested Fusion combines datasets with different resolutions to produce a single, high-resolution visual distribution. Using this method, NASA scientists can more easily analyze multiple datasets from various sources at the same time. This can lead to faster studies of Mars’ surface composition to find clues of previous life.

The algorithm demonstrates how data science impacts traditional scientific fields like chemistry, biology, and geology.

Even further, Wright is developing Nested Fusion applications to model shifting climate patterns, plant and animal life, and other concepts in the earth sciences. The same method can combine overlapping datasets from satellite imagery, biomarkers, and climate data.

“Users have extended Nested Fusion and similar algorithms toward earth science contexts, which we have received very positive feedback,” said Wright, who studies machine learning (ML) at Georgia Tech.

“Cross-correlational analysis takes a long time to do and is not done in the initial stages of research when patterns appear and form new hypotheses. Nested Fusion enables people to discover these patterns much earlier.”

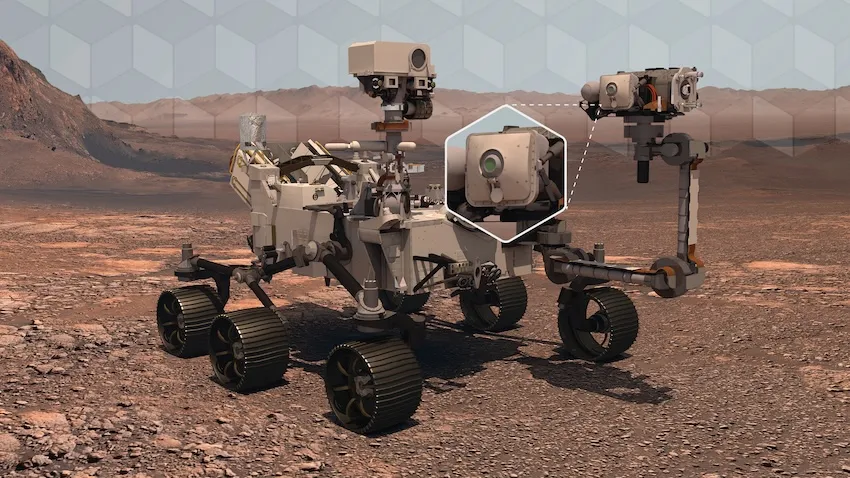

Wright is the data science and ML lead for PIXLISE, the software that NASA JPL scientists use to study data from the Mars Perseverance Rover.

Perseverance uses its Planetary Instrument for X-ray Lithochemistry (PIXL) to collect data on mineral composition of Mars’ surface. PIXL’s two main tools that accomplish this are its X-ray Fluorescence (XRF) Spectrometer and Multi-Context Camera (MCC).

When PIXL scans a target area, it creates two co-aligned datasets from the components. XRF collects a sample's fine-scale elemental composition. MCC produces images of a sample to gather visual and physical details like size and shape.

A single XRF spectrum corresponds to approximately 100 MCC imaging pixels for every scan point. Each tool’s unique resolution makes mapping between overlapping data layers challenging. However, Wright and his collaborators designed Nested Fusion to overcome this hurdle.
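The resolution mismatch can be pictured with a small sketch. This is not the Nested Fusion algorithm. It only illustrates the bookkeeping problem, assuming for simplicity that each XRF scan point covers an exact 10-by-10 block of image pixels. The function name `broadcast_to_pixels` and the sample values are hypothetical.

```python
def broadcast_to_pixels(xrf_grid, pixels_per_side=10):
    """Repeat each coarse XRF value over a square block of image pixels.

    Assumes an idealized layout in which one scan point maps to exactly
    pixels_per_side * pixels_per_side pixels.
    """
    fine = []
    for row in xrf_grid:
        # Stretch the row horizontally, then repeat it vertically.
        fine_row = [value for value in row for _ in range(pixels_per_side)]
        fine.extend(list(fine_row) for _ in range(pixels_per_side))
    return fine


# A 2x2 grid of made-up XRF readings becomes a 20x20 pixel-aligned grid.
coarse = [[1, 2], [3, 4]]
aligned = broadcast_to_pixels(coarse)
print(len(aligned), len(aligned[0]))
```

Real scans are not this regular, which is part of why mapping between the two data layers is difficult.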

In addition to advancing data science, Nested Fusion improves NASA scientists' workflow. Using the method, a single scientist can form an initial estimate of a sample’s mineral composition in a matter of hours. Before Nested Fusion, the same task required days of collaboration between teams of experts on each different instrument.

“I think one of the biggest lessons I have taken from this work is that it is valuable to always ground my ML and data science problems in actual, concrete use cases of our collaborators,” Wright said.

“I learn from collaborators what parts of data analysis are important to them and the challenges they face. By understanding these issues, we can discover new ways of formalizing and framing problems in data science.”

Wright presented Nested Fusion at KDD 2024, held Aug. 25-29 in Barcelona, Spain. KDD is an official special interest group of the Association for Computing Machinery. The conference is one of the world’s leading forums for knowledge discovery and data mining research.

Nested Fusion won runner-up for the best paper in the applied data science track, which comprised over 150 papers. Hundreds of other papers were presented at the conference’s research track, workshops, and tutorials.

Wright’s mentors, Scott Davidoff and Polo Chau, co-authored the Nested Fusion paper. Davidoff is a principal research scientist at the NASA Jet Propulsion Laboratory. Chau is a professor at the Georgia Tech School of Computational Science and Engineering (CSE).

“I was extremely happy that this work was recognized with the best paper runner-up award,” Wright said. “This kind of applied work can sometimes be hard to find the right academic home, so finding communities that appreciate this work is very encouraging.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Dec. 20, 2023

A new machine learning method could help engineers detect leaks in underground reservoirs earlier, mitigating risks associated with geological carbon storage (GCS). Further study could advance machine learning capabilities while improving the safety and efficiency of GCS.

The feasibility study by Georgia Tech researchers explores using conditional normalizing flows (CNFs) to convert seismic data points into usable information and observable images. This potential ability could make monitoring underground storage sites more practical and studying the behavior of carbon dioxide plumes easier.

The 2023 Conference on Neural Information Processing Systems (NeurIPS 2023) accepted the group’s paper for presentation. They presented their study on Dec. 16 at the conference’s workshop on Tackling Climate Change with Machine Learning.

“One area where our group excels is that we care about realism in our simulations,” said Professor Felix Herrmann. “We worked on a real-sized setting with the complexities one would experience when working in real-life scenarios to understand the dynamics of carbon dioxide plumes.”

CNFs are generative models that use data to produce images. They can also fill in the blanks by making predictions to complete an image despite missing or noisy data. This functionality is ideal for this application because data streaming from GCS reservoirs are often noisy, meaning they are incomplete, outdated, or unstructured.

The group found in 36 test samples that CNFs could infer scenarios with and without leakage using seismic data. In simulations with leakage, the models generated images that were 96% similar to ground truths. CNFs further supported this by producing images 97% comparable to ground truths in cases with no leakage.

This CNF-based method also improves on current techniques that struggle to provide accurate information on the spatial extent of leakage. Conditioning CNFs on samples that change over time allows them to describe and predict the behavior of carbon dioxide plumes.

This study is part of the group’s broader effort to produce digital twins for seismic monitoring of underground storage. A digital twin is a virtual model of a physical object. Digital twins are commonplace in manufacturing, healthcare, environmental monitoring, and other industries.

“There are very few digital twins in earth sciences, especially based on machine learning,” Herrmann explained. “This paper is just a prelude to building an uncertainty aware digital twin for geological carbon storage.”

Herrmann holds joint appointments in the Schools of Earth and Atmospheric Sciences (EAS), Electrical and Computer Engineering, and Computational Science and Engineering (CSE).

School of EAS Ph.D. student Abhinov Prakash Gahlot is the paper’s first author. Ting-Ying (Rosen) Yu (B.S. ECE 2023) started the research as an undergraduate group member. School of CSE Ph.D. students Huseyin Tuna Erdinc, Rafael Orozco, and Ziyi (Francis) Yin co-authored with Gahlot and Herrmann.

NeurIPS 2023 took place Dec. 10-16 in New Orleans. Occurring annually, it is one of the largest conferences in the world dedicated to machine learning.

Over 130 Georgia Tech researchers presented more than 60 papers and posters at NeurIPS 2023. One-third of CSE’s faculty represented the School at the conference. Along with Herrmann, these faculty included Ümit Çatalyürek, Polo Chau, Bo Dai, Srijan Kumar, Yunan Luo, Anqi Wu, and Chao Zhang.

“In the field of geophysics, inverse problems and statistical solutions of these problems are known, but no one has been able to characterize these statistics in a realistic way,” Herrmann said.

“That’s where these machine learning techniques come into play, and we can do things now that you could never do before.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Sep. 28, 2022

Advancement in technology brings about plenty of benefits for everyday life, but it also provides cyber criminals and other potential adversaries with new opportunities to cause chaos for their own benefit.

As researchers begin to shape the future of artificial intelligence in manufacturing, Georgia Tech recognizes the potential risks to this technology once it is implemented on an industrial scale. That’s why Associate Professor Saman Zonouz will begin researching ways to protect the nation’s newest investment in manufacturing.

The project is part of the $65 million grant from the U.S. Department of Commerce’s Economic Development Administration to develop the Georgia AI Manufacturing (GA-AIM) Technology Corridor. While the main purpose of the grant is to develop ways of integrating artificial intelligence into manufacturing, it will also help advance cybersecurity research, educational outreach, and workforce development in the subject.

“When introducing new capabilities, we don’t know about its cybersecurity weaknesses and landscape,” said Zonouz. “In the IT world, the potential cybersecurity vulnerabilities and corresponding mitigation are clear, but when it comes to artificial intelligence in manufacturing, the best practices are uncertain. We don’t know what all could go wrong.”

Zonouz will work alongside other Georgia Tech researchers in the new Advanced Manufacturing Pilot Facility (AMPF) to pinpoint where those inevitable attacks will come from and how they can be repelled. Along with a team of Ph.D. students, Zonouz will create a roadmap for future researchers, educators, and industry professionals to use when detecting and responding to cyberattacks.

“As we increasingly rely on computing and artificial intelligence systems to drive innovation and competitiveness, there is a growing recognition that the security of these systems is of paramount importance if we are to realize the anticipated gains,” said Michael Bailey, Inaugural Chair of the School of Cybersecurity and Privacy (SCP). “Professor Zonouz is an expert in the security of industrial control systems and will be a vital member of the new coalition as it seeks to provide leadership in manufacturing automation.”

Before coming to Georgia Tech, Zonouz worked with the School of Electrical and Computer Engineering (ECE) and the College of Engineering on protecting and studying the cyber-physical systems of manufacturing. He worked with Raheem Beyah, Dean of the College of Engineering and ECE professor, on several research papers, including two that were published at the 26th USENIX Security Symposium and the Network and Distributed System Security Symposium.

“As Georgia Tech continues to position itself as a leader in artificial intelligence manufacturing, interdisciplinarity collaboration is not only an added benefit, it is fundamental,” said Arijit Raychowdhury, Steve W. Chaddick School Chair and Professor of ECE. “Saman’s cybersecurity expertise will play a crucial role in the overall protection and success of GA-AIM and AMPF. ECE is proud to have him representing the school on this important project.”

The research is expected to take five years, which is typical for a project of this scale. Apart from research, there will be a workforce development and educational outreach portion of the GA-AIM program. The cyber testbed developed by Zonouz and his team will live in the 24,000-square-foot AMPF.

News Contact

JP Popham

Communications Officer | School of Cybersecurity and Privacy

Georgia Institute of Technology

jpopham3@gatech.edu | scp.cc.gatech.edu
