Apr. 25, 2025

A Georgia Tech doctoral student’s dissertation could help physicians diagnose neuropsychiatric disorders, including schizophrenia, autism, and Alzheimer’s disease. The new approach leverages data science and algorithms instead of relying on traditional methods like cognitive tests and image scans.

Ph.D. candidate Md Abdur Rahaman’s dissertation studies brain data to understand how changes in brain activity shape behavior.

Computational tools Rahaman developed for his dissertation look for informative patterns between the brain and behavior. Successful tests of his algorithms show promise to help doctors diagnose mental health disorders and design individualized treatment plans for patients.

“I've always been fascinated by the human brain and how it defines who we are,” Rahaman said.

“The fact that so many people silently suffer from neuropsychiatric disorders, while our understanding of the brain remains limited, inspired me to develop tools that bring greater clarity to this complexity and offer hope through more compassionate, data-driven care.”

Rahaman’s dissertation introduces a framework focusing on granular factoring. This computing technique stratifies brain data into smaller, localized subgroups, making it easier for computers and researchers to study data and find meaningful patterns.

Granular factoring addresses the size and heterogeneity of neurological data. Brain data comes from neuroimaging, genomics, behavioral datasets, and other sources. Each source is so large that it is challenging to study on its own, let alone to analyze alongside the others to find hidden patterns.

Rahaman’s research allows researchers and physicians to move past one-size-fits-all approaches. Instead of manually reviewing tests and scans, algorithms look for patterns and biomarkers in the subgroups that otherwise go undetected, especially ones that indicate neuropsychiatric disorders.
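The article does not say how granular factoring is implemented. As a hedged illustration of the underlying idea only, the Python sketch below (using NumPy) shows how a brain-behavior association that is strong inside each subgroup can disappear when all subjects are pooled. The function names, the toy data, and the assumption that subgroup labels are already known (rather than discovered by an algorithm) are hypothetical.

```python
import numpy as np


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length 1-D arrays."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))


def subgroup_correlations(brain, behavior, labels):
    """Correlate a brain feature with a behavioral score separately within each subgroup.

    Hypothetical helper: `labels` assigns each subject to a subgroup and is assumed given.
    """
    brain = np.asarray(brain, dtype=float)
    behavior = np.asarray(behavior, dtype=float)
    labels = np.asarray(labels)
    return {
        g.item(): pearson(brain[labels == g], behavior[labels == g])
        for g in np.unique(labels)
    }


# Toy data: activity tracks behavior positively in subgroup A and negatively in B.
brain = [1, 2, 3, 4, 1, 2, 3, 4]
behavior = [1, 2, 3, 4, 4, 3, 2, 1]
labels = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(pearson(brain, behavior))                         # pooled: the effects cancel
print(subgroup_correlations(brain, behavior, labels))  # per subgroup: strong, opposite
```

Stratifying first lets each subgroup's pattern surface instead of canceling out in a single pooled estimate.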

“My dissertation advances the frontiers of computational neuroscience by introducing scalable and interpretable models that navigate brain heterogeneity to reveal how neural dynamics shape behavior,” Rahaman said.

“By uncovering subgroup-specific patterns, this work opens new directions for understanding brain function and enables more precise, personalized approaches to mental health care.”

Rahaman defended his dissertation on April 14, the final step in completing his Ph.D. in computational science and engineering. He will graduate on May 1 at Georgia Tech’s Ph.D. Commencement.

After walking across the stage at McCamish Pavilion, Rahaman will take the next step in his career at Amazon, where he will work in the generative artificial intelligence (AI) field.

Graduating from Georgia Tech is the summit of an educational trek spanning more than a decade. Rahaman hails from Bangladesh, where he graduated from Chittagong University of Engineering and Technology in 2013. He earned his master’s degree from the University of New Mexico in 2019 before starting at Georgia Tech.

“Munna is an amazingly creative researcher,” said Vince Calhoun, Rahaman’s advisor. Calhoun is the founding director of the Translational Research in Neuroimaging and Data Science Center (TReNDS).

TReNDS is a tri-institutional center spanning Georgia Tech, Georgia State University, and Emory University that develops analytic approaches and neuroinformatic tools. The center aims to translate the approaches into biomarkers that address areas of brain health and disease.

“His work is moving the needle in our ability to leverage multiple sources of complex biological data to improve understanding of neuropsychiatric disorders that have a huge impact on an individual’s livelihood,” said Calhoun.

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Apr. 22, 2025

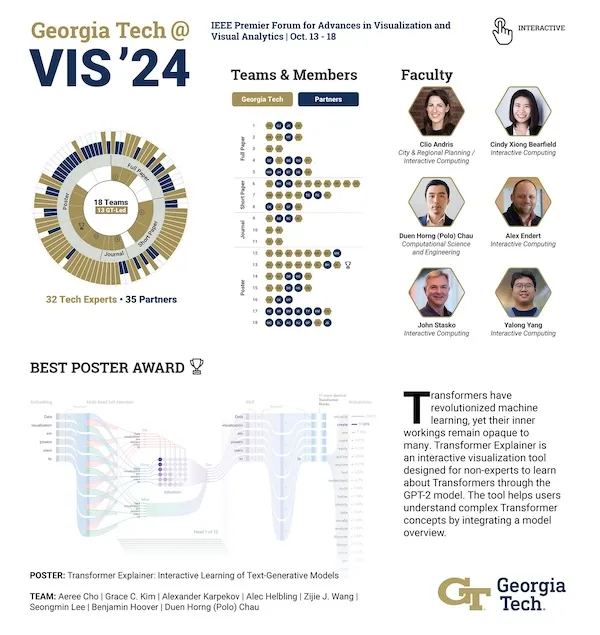

A Georgia Tech alum’s dissertation introduced ways to make artificial intelligence (AI) more accessible, interpretable, and accountable. Although it’s been a year since his doctoral defense, Zijie (Jay) Wang’s (Ph.D. ML-CSE 2024) work continues to resonate with researchers.

Wang is a recipient of the 2025 Outstanding Dissertation Award from the Association for Computing Machinery Special Interest Group on Computer-Human Interaction (ACM SIGCHI). The award recognizes Wang for his work on democratizing human-centered AI.

“Throughout my Ph.D. and industry internships, I observed a gap in existing research: there is a strong need for practical tools for applying human-centered approaches when designing AI systems,” said Wang, now a safety researcher at OpenAI.

“My work not only helps people understand AI and guide its behavior but also provides user-friendly tools that fit into existing workflows.”


Wang’s dissertation presented techniques in visual explanation and interactive guidance to align AI models with user knowledge and values. The work was the culmination of years of research, fellowship support, and internships.

Wang’s most influential projects formed the core of his dissertation. These included:

- CNN Explainer: an open-source tool developed for deep-learning beginners. Since its release in July 2020, more than 436,000 global visitors have used the tool.

- DiffusionDB: a first-of-its-kind large-scale dataset that lays a foundation to help people better understand generative AI. This work could lead to new research in detecting deepfakes and designing human-AI interaction tools to help people more easily use these models.

- GAM Changer: an interface that empowers users in healthcare, finance, or other domains to edit ML models to include knowledge and values specific to their domain, which improves reliability.

- GAM Coach: an interactive ML tool that could help loan applicants who have been rejected by automatically showing them what they would need to change to receive approval.

- Farsight: a tool that alerts developers when the prompts they write for large language models could lead to harmful uses or misuse.
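GAM Changer and GAM Coach both work with generalized additive models (GAMs), whose prediction is an intercept plus a sum of per-feature shape functions. That additive structure is what makes domain-knowledge edits and loan-approval guidance tractable. The Python sketch below is a toy illustration under stated assumptions, not code from either tool: shape functions are assumed to be step functions over hypothetical bins, the "edit" is a simple running-maximum monotonicity fix, and the recourse search only tries hypothetical candidate values, one feature at a time.

```python
import bisect
from dataclasses import dataclass


@dataclass
class BinnedGAM:
    """Toy GAM: score = intercept + sum of per-feature step-function contributions."""
    intercept: float
    edges: dict[str, list[float]]          # sorted bin boundaries for each feature
    contributions: dict[str, list[float]]  # len(edges[f]) + 1 values for feature f

    def bin_index(self, feature, value):
        # bisect_right maps a value to its bin; boundary values fall into the upper bin.
        return bisect.bisect_right(self.edges[feature], value)

    def score(self, x):
        return self.intercept + sum(
            self.contributions[f][self.bin_index(f, v)] for f, v in x.items()
        )


def make_monotone_increasing(gam, feature):
    """Domain-knowledge edit: force one shape function to be non-decreasing.

    A running maximum is the simplest such fix; a real tool might use isotonic regression.
    """
    fixed, running = [], float("-inf")
    for value in gam.contributions[feature]:
        running = max(running, value)
        fixed.append(running)
    gam.contributions[feature] = fixed


def single_feature_recourse(gam, x, threshold, candidates):
    """List single-feature changes that lift the score to `threshold` or above.

    Results are ordered by how far the feature value moves, a naive, unit-dependent cost.
    """
    options = []
    for feature, values in candidates.items():
        for value in values:
            changed = {**x, feature: value}
            new_score = gam.score(changed)
            if new_score >= threshold:
                options.append((abs(value - x[feature]), feature, value, new_score))
    options.sort()
    return [(feature, value, new_score) for _, feature, value, new_score in options]


# Hypothetical loan model whose income shape function dips at high income.
gam = BinnedGAM(
    intercept=-1.0,
    edges={"income": [30.0, 60.0], "debt_ratio": [0.3]},
    contributions={"income": [-1.0, 0.5, 0.2], "debt_ratio": [0.5, -0.5]},
)
applicant = {"income": 45.0, "debt_ratio": 0.4}
make_monotone_increasing(gam, "income")  # income contributions become [-1.0, 0.5, 0.5]
print(gam.score(applicant))
print(single_feature_recourse(gam, applicant, 0.0, {"income": [70.0], "debt_ratio": [0.2]}))
```

Because each feature's effect is a separate term, editing one shape function changes predictions only through that feature, which is what makes GAMs convenient for hands-on editing and for explaining what an applicant could change.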

“I feel extremely honored and lucky to receive this award, and I am deeply grateful to many who have supported me along the way, including Polo, mentors, collaborators, and friends,” said Wang, who was advised by School of Computational Science and Engineering (CSE) Professor Polo Chau.

“This recognition also inspired me to continue striving to design and develop easy-to-use tools that help everyone to easily interact with AI systems.”

Chau also advised Georgia Tech alumnus Fred Hohman (Ph.D. CSE 2020), who won the ACM SIGCHI Outstanding Dissertation Award in 2022.

Chau’s group synthesizes machine learning (ML) and visualization techniques into scalable, interactive, and trustworthy tools. These tools increase understanding and interaction with large-scale data and ML models.

Chau is the associate director of corporate relations for the Machine Learning Center at Georgia Tech. Wang called the School of CSE his home unit while a student in the ML program under Chau.

Wang is one of five recipients of this year’s award to be presented at the 2025 Conference on Human Factors in Computing Systems (CHI 2025). The conference occurs April 25-May 1 in Yokohama, Japan.

SIGCHI is the world’s largest association of human-computer interaction professionals and practitioners. The group sponsors or co-sponsors 26 conferences, including CHI.

Wang’s outstanding dissertation award is the latest recognition of a career decorated with achievement.

Months after Wang graduated from Georgia Tech, Forbes named him to its 2025 30 Under 30 in Science list for his dissertation work. Wang was one of 15 Yellow Jackets included on nine different 30 Under 30 lists and the only Georgia Tech-affiliated individual on the 30 Under 30 in Science list.

While a Georgia Tech student, Wang earned recognition from big names in business and technology. He received the Apple Scholars in AI/ML Ph.D. Fellowship in 2023 and was in the 2022 cohort of the J.P. Morgan AI Ph.D. Fellowships Program.

Along with the CHI award, Wang’s dissertation earned him awards this year at banquets across campus. The Georgia Tech chapter of Sigma Xi presented Wang with the Best Ph.D. Thesis Award. He also received the College of Computing’s Outstanding Dissertation Award.

“Georgia Tech attracts many great minds, and I’m glad that some, like Jay, chose to join our group,” Chau said. “It has been a joy to work alongside them and witness the many wonderful things they have accomplished, and with many more to come in their careers.”


Mar. 21, 2025

Many communities rely on insights from computer-based models and simulations. This week, a nest of Georgia Tech experts is swarming an international conference to present their latest advancements in these tools, which offer solutions to pressing challenges in science and engineering.

Students and faculty from the School of Computational Science and Engineering (CSE) are leading the Georgia Tech contingent at the SIAM Conference on Computational Science and Engineering (CSE25). The Society for Industrial and Applied Mathematics (SIAM) organizes CSE25, which takes place March 3-7 in Fort Worth, Texas.

At CSE25, School of CSE researchers are presenting papers that apply computing approaches to fields including:

- Experiment designs to accelerate the discovery of material properties

- Machine learning approaches to weather forecasting and coastal flood prediction

- Virtual models that replicate subsurface geological formations used to store captured carbon dioxide

- Optimization of coupled systems for imaging and optical chemistry

- Plasma physics during nuclear fusion reactions

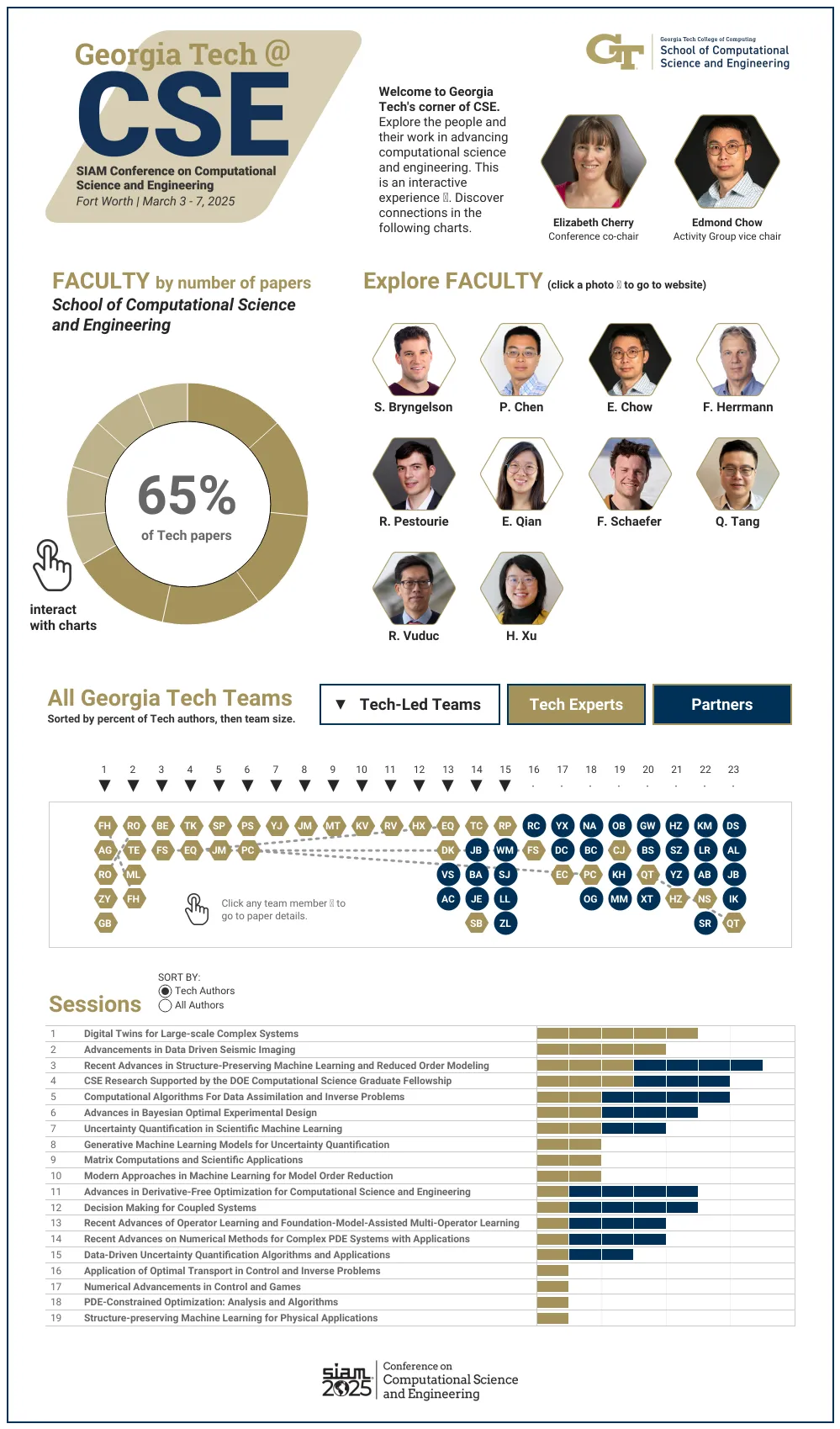


“In CSE, researchers from different disciplines work together to develop new computational methods that we could not have developed alone,” said School of CSE Professor Edmond Chow.

“These methods enable new science and engineering to be performed using computation.”

CSE is a discipline dedicated to advancing computational techniques to study and analyze scientific and engineering systems. CSE complements theory and experimentation as modes of scientific discovery.

Held every other year, CSE25 is the primary conference for the SIAM Activity Group on Computational Science and Engineering (SIAG CSE). School of CSE faculty serve in key roles in leading the group and preparing for the conference.

In December, SIAG CSE members elected Chow to a two-year term as the group’s vice chair. This election comes after Chow completed a term as the SIAG CSE program director.

School of CSE Associate Professor Elizabeth Cherry has co-chaired the CSE25 organizing committee since the last conference in 2023. Later that year, SIAM members reelected Cherry to a second, three-year term as a council member at large.

At Georgia Tech, Chow serves as the associate chair of the School of CSE. Cherry, who recently became the associate dean for graduate education of the College of Computing, continues as the director of CSE programs.

“With our strong emphasis on developing and applying computational tools and techniques to solve real-world problems, researchers in the School of CSE are well positioned to serve as leaders in computational science and engineering both within Georgia Tech and in the broader professional community,” Cherry said.

Georgia Tech’s School of CSE was first organized as a division in 2005, becoming one of the world’s first academic departments devoted to the discipline. The division reorganized as a school in 2010 after establishing the flagship CSE Ph.D. and M.S. programs, hiring nine faculty members, and attaining substantial research funding.

Ten School of CSE faculty members are presenting research at CSE25, representing one-third of the School’s faculty body. Of the 23 accepted papers written by Georgia Tech researchers, 15 originate from School of CSE authors.

The list of School of CSE researchers, paper titles, and abstracts includes:

Bayesian Optimal Design Accelerates Discovery of Material Properties from Bubble Dynamics

Postdoctoral Fellow Tianyi Chu, Joseph Beckett, Bachir Abeid, and Jonathan Estrada (University of Michigan), Assistant Professor Spencer Bryngelson

[Abstract]

Latent-EnSF: A Latent Ensemble Score Filter for High-Dimensional Data Assimilation with Sparse Observation Data

Ph.D. student Phillip Si, Assistant Professor Peng Chen

[Abstract]

A Goal-Oriented Quadratic Latent Dynamic Network Surrogate Model for Parameterized Systems

Yuhang Li, Stefan Henneking, Omar Ghattas (University of Texas at Austin), Assistant Professor Peng Chen

[Abstract]

Posterior Covariance Structures in Gaussian Processes

Yuanzhe Xi (Emory University), Difeng Cai (Southern Methodist University), Professor Edmond Chow

[Abstract]

Robust Digital Twin for Geological Carbon Storage

Professor Felix Herrmann, Ph.D. student Abhinav Gahlot, alumnus Rafael Orozco (Ph.D. CSE-CSE 2024), alumnus Ziyi (Francis) Yin (Ph.D. CSE-CSE 2024), and Ph.D. candidate Grant Bruer

[Abstract]

Industry-Scale Uncertainty-Aware Full Waveform Inference with Generative Models

Rafael Orozco, Ph.D. student Tuna Erdinc, alumnus Mathias Louboutin (Ph.D. CS-CSE 2020), and Professor Felix Herrmann

[Abstract]

Optimizing Coupled Systems: Insights from Co-Design Imaging and Optical Chemistry

Assistant Professor Raphaël Pestourie, Wenchao Ma and Steven Johnson (MIT), Lu Lu (Yale University), Zin Lin (Virginia Tech)

[Abstract]

Multifidelity Linear Regression for Scientific Machine Learning from Scarce Data

Assistant Professor Elizabeth Qian, Ph.D. student Dayoung Kang, Vignesh Sella and Anirban Chaudhuri (University of Texas at Austin)

[Abstract]

LyapInf: Data-Driven Estimation of Stability Guarantees for Nonlinear Dynamical Systems

Ph.D. candidate Tomoki Koike and Assistant Professor Elizabeth Qian

[Abstract]

The Information Geometric Regularization of the Euler Equation

Alumnus Ruijia Cao (B.S. CS 2024), Assistant Professor Florian Schäfer

[Abstract]

Maximum Likelihood Discretization of the Transport Equation

Ph.D. student Brook Eyob, Assistant Professor Florian Schäfer

[Abstract]

Intelligent Attractors for Singularly Perturbed Dynamical Systems

Daniel A. Serino (Los Alamos National Laboratory), Allen Alvarez Loya (University of Colorado Boulder), Joshua W. Burby, Ioannis G. Kevrekidis (Johns Hopkins University), Assistant Professor Qi Tang (Session Co-Organizer)

[Abstract]

Accurate Discretizations and Efficient AMG Solvers for Extremely Anisotropic Diffusion Via Hyperbolic Operators

Golo Wimmer, Ben Southworth, Xianzhu Tang (LANL), Assistant Professor Qi Tang

[Abstract]

Randomized Linear Algebra for Problems in Graph Analytics

Professor Rich Vuduc

[Abstract]

Improving SpGEMM Performance Through Reordering and Cluster-Wise Computation

Assistant Professor Helen Xu

[Abstract]



Dec. 04, 2024

Georgia Tech researchers have created a dataset for training computer models to understand nuances in human speech during financial earnings calls. The dataset provides a new resource to study how public correspondence affects businesses and markets.

SubjECTive-QA is the first human-curated dataset on question-answer pairs from earnings call transcripts (ECTs). The dataset teaches models to identify subjective features in ECTs, like clarity and cautiousness.

The dataset lays the foundation for a new approach to identifying disinformation and misinformation caused by nuances in speech. While ECT responses can be technically true, unclear or irrelevant information can misinform stakeholders and affect their decision-making.

Tests on White House press briefings showed that the dataset can apply beyond finance, suggesting uses in other sectors with frequent question-and-answer encounters, notably politics, journalism, and sports. This broader reach could help inform audiences more effectively and improve transparency across public spheres.

Bridging natural language processing and finance, the paper earned acceptance to NeurIPS 2024, the 38th Annual Conference on Neural Information Processing Systems. NeurIPS is one of the world’s most prestigious conferences on artificial intelligence (AI) and machine learning (ML) research.

“SubjECTive-QA has the potential to revolutionize nowcasting predictions with enhanced clarity and relevance,” said Agam Shah, the project’s lead researcher.

“Its nuanced analysis of qualities in executive responses, like optimism and cautiousness, deepens our understanding of economic forecasts and financial transparency.”


SubjECTive-QA offers a new means to evaluate financial discourse by characterizing language's subjective and multifaceted nature. This improves on traditional datasets that quantify sentiment or verify claims from financial statements.

The dataset consists of 2,747 Q&A pairs taken from 120 ECTs from companies listed on the New York Stock Exchange from 2007 to 2021. The Georgia Tech researchers annotated each response by hand based on six features for a total of 49,446 annotations.
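The counts above can be checked with quick arithmetic. One label per feature across 2,747 pairs gives 16,482 labels, so the reported 49,446 annotations correspond to three labels per pair and feature. Reading that as three annotators per response is an inference from the numbers, not something the article states.

```python
qa_pairs = 2_747
features = 6
total_annotations = 49_446

labels_per_pass = qa_pairs * features  # one label per feature for every Q&A pair
labels_per_pair_feature = total_annotations / labels_per_pass

print(labels_per_pass, labels_per_pair_feature)
```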

The group evaluated answers on:

- Relevance: the speaker answered the question with appropriate details.

- Clarity: the speaker was transparent in the answer and the message conveyed.

- Optimism: the speaker answered with a positive outlook regarding future outcomes.

- Specificity: the speaker included sufficient and technical details in their answer.

- Cautiousness: the speaker answered using a conservative, risk-averse approach.

- Assertiveness: the speaker answered with certainty about the company’s events and outcomes.
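A record for one annotator's judgment of one answer on the six features above can be sketched in Python as follows. The 0-2 rating scale, the class and function names, and the use of three annotators per pair are assumptions for illustration, not details from the article. With three annotators, the lower median of the ratings equals the majority label whenever two annotators agree.

```python
from dataclasses import dataclass
from statistics import median_low

FEATURES = ("relevance", "clarity", "optimism", "specificity", "cautiousness", "assertiveness")
SCALE = (0, 1, 2)  # assumed ordinal scale: 0 = low, 1 = neutral, 2 = high


@dataclass
class Annotation:
    """One annotator's ratings of one Q&A pair on all six features (hypothetical schema)."""
    pair_id: str
    ratings: dict[str, int]

    def __post_init__(self):
        if set(self.ratings) != set(FEATURES):
            raise ValueError(f"ratings must cover exactly {FEATURES}")
        if any(r not in SCALE for r in self.ratings.values()):
            raise ValueError(f"every rating must be one of {SCALE}")


def aggregate(annotations):
    """Combine several annotators' ratings of the same pair, feature by feature.

    With three annotators, median_low returns the majority rating whenever two agree
    and the middle rating when all three disagree.
    """
    if len({a.pair_id for a in annotations}) != 1:
        raise ValueError("all annotations must refer to the same Q&A pair")
    return {f: median_low([a.ratings[f] for a in annotations]) for f in FEATURES}


votes = [
    Annotation("q1", dict(relevance=2, clarity=2, optimism=1, specificity=0, cautiousness=1, assertiveness=2)),
    Annotation("q1", dict(relevance=2, clarity=1, optimism=1, specificity=1, cautiousness=0, assertiveness=2)),
    Annotation("q1", dict(relevance=1, clarity=2, optimism=0, specificity=2, cautiousness=2, assertiveness=2)),
]
print(aggregate(votes))
```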

The Georgia Tech group validated their dataset by training eight computer models to detect and score these six features. The test models comprised three BERT-based pre-trained language models (PLMs) and five popular large language models (LLMs), including Llama and ChatGPT.

All eight models scored highest on the relevance and clarity features. The researchers attribute this to domain-specific pretraining that enables the models to identify pertinent and understandable material.

The PLMs achieved higher scores on clarity, optimism, specificity, and cautiousness. The LLMs scored higher on assertiveness and relevance.

In another experiment to test transferability, a PLM trained with SubjECTive-QA evaluated 65 Q&A pairs from White House press briefings and gaggles. Scores across all six features indicated that models trained on the dataset could succeed in fields outside finance.

“Building on these promising results, the next step for SubjECTive-QA is to enhance customer service technologies, like chatbots,” said Shah, a Ph.D. candidate studying machine learning.

“We want to make these platforms more responsive and accurate by integrating our analysis techniques from SubjECTive-QA.”

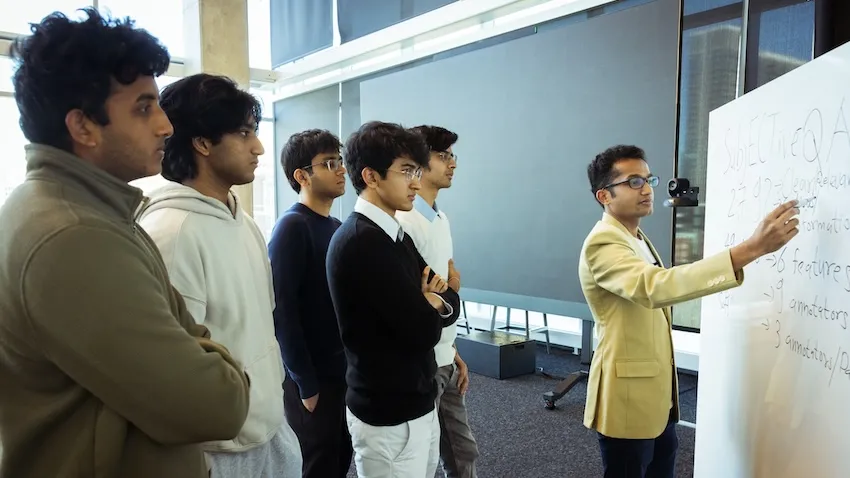

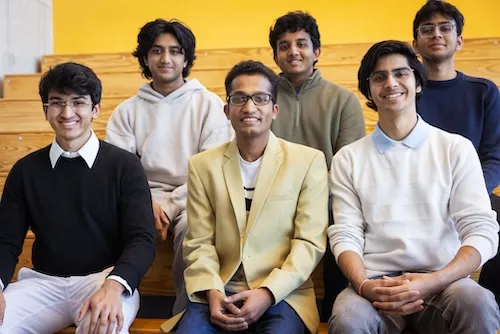

SubjECTive-QA is the product of two semesters of work through Georgia Tech’s Vertically Integrated Projects (VIP) Program. The VIP Program is an approach to higher education where undergraduate and graduate students work together on long-term project teams led by faculty.

Undergraduate students earn academic credit and receive hands-on experience through VIP projects. The extra help advances ongoing research and gives graduate students mentorship experience.

Computer science major Huzaifa Pardawala and mathematics major Siddhant Sukhani co-led the SubjECTive-QA project with Shah.

Fellow collaborators included Veer Kejriwal, Abhishek Pillai, Rohan Bhasin, Andrew DiBiasio, Tarun Mandapati, and Dhruv Adha. All six researchers are undergraduate students studying computer science.

Sudheer Chava co-advises Shah and is the faculty lead of SubjECTive-QA. Chava is a professor in the Scheller College of Business and director of the M.S. in Quantitative and Computational Finance (QCF) program.

Chava is also an adjunct faculty member in the College of Computing’s School of Computational Science and Engineering (CSE).

“Leading undergraduate students through the VIP Program taught me the powerful impact of balancing freedom with guidance,” Shah said.

“Allowing students to take the helm not only fosters their leadership skills but also enhances my own approach to mentoring, thus creating a mutually enriching educational experience.”

Presenting SubjECTive-QA at NeurIPS 2024 exposes the dataset for further use and refinement. NeurIPS is one of three primary international conferences on high-impact research in AI and ML. The conference occurs Dec. 10-15.

The SubjECTive-QA team is among the 162 Georgia Tech researchers presenting over 80 papers at NeurIPS 2024. The Georgia Tech contingent includes 46 faculty members, like Chava. These faculty represent Georgia Tech’s Colleges of Business, Computing, Engineering, and Sciences, underscoring the pertinence of AI research across domains.

“Presenting SubjECTive-QA at prestigious venues like NeurIPS propels our research into the spotlight, drawing the attention of key players in finance and tech,” Shah said.

“The feedback we receive from this community of experts validates our approach and opens new avenues for future innovation, setting the stage for transformative applications in industry and academia.”


Dec. 04, 2024

A new machine learning (ML) model from Georgia Tech could protect communities from diseases, better manage electricity consumption in cities, and promote business growth, all at the same time.

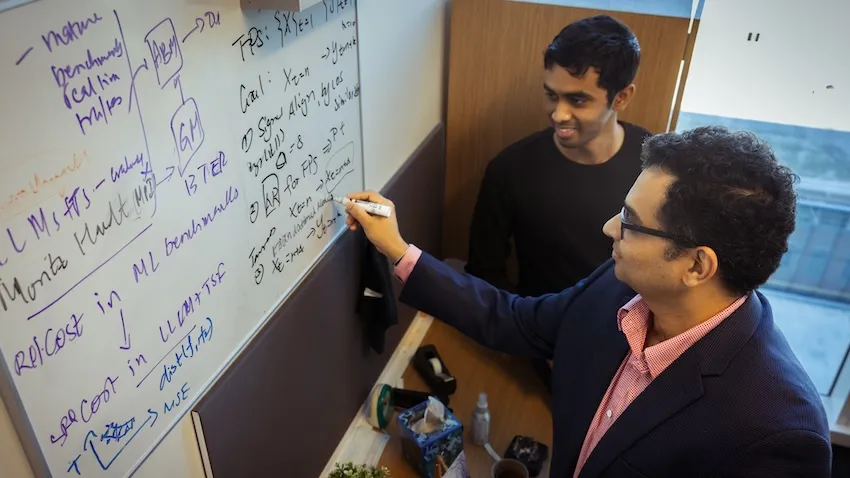

Researchers from the School of Computational Science and Engineering (CSE) created the Large Pre-Trained Time-Series Model (LPTM) framework. LPTM is a single foundational model that completes forecasting tasks across a broad range of domains.

Along with performing as well as or better than models purpose-built for their applications, LPTM requires 40% less data and 50% less training time than current baselines. In some cases, LPTM can be deployed without any training data.

The key to LPTM is that it is pre-trained on datasets from different industries like healthcare, transportation, and energy. The Georgia Tech group created an adaptive segmentation module to make effective use of these vastly different datasets.

The Georgia Tech researchers will present LPTM in Vancouver, British Columbia, Canada, at the 2024 Conference on Neural Information Processing Systems (NeurIPS 2024). NeurIPS is one of the world’s most prestigious conferences on artificial intelligence (AI) and ML research.

“The foundational model paradigm started with text and image, but people haven’t explored time-series tasks yet because those were considered too diverse across domains,” said B. Aditya Prakash, one of LPTM’s developers.

“Our work is a pioneer in this new area of exploration where only few attempts have been made so far.”


Foundational models are trained with data from different fields, which makes them adaptable to many tasks. Foundational models drive GPT, DALL-E, and other popular generative AI platforms used today. LPTM differs, however, because it is geared toward time-series data rather than text and image generation.

The Georgia Tech researchers trained LPTM on data spanning epidemics, macroeconomics, power consumption, traffic and transportation, stock markets, and human motion and behavior.

After training, the group pitted LPTM against 17 other models to see which could make the most accurate forecasts on nine real-world benchmarks. LPTM performed best on five datasets and placed second on the other four.

The nine benchmarks contained data from real-world collections. These included the spread of influenza in the U.S. and Japan, electricity consumption, traffic, taxi demand in New York, and financial markets.

The competitor models were purpose-built for their fields. While each model performed well on one or two benchmarks closest to its designed purpose, the models ranked in the middle or bottom on others.

In another experiment, the Georgia Tech group tested LPTM against seven baseline models on the same nine benchmarks in zero-shot forecasting tasks. Zero-shot means the model is used out of the box, without any training on the target dataset. LPTM outperformed every model across all benchmarks in this trial.

LPTM consistently ranked at or near the top on all nine benchmarks, demonstrating the model’s potential to achieve superior forecasting results across multiple applications with less data and fewer resources.

“Our model also goes beyond forecasting and helps accomplish other tasks,” said Prakash, an associate professor in the School of CSE.

“Classification is a useful time-series task that allows us to understand the nature of the time-series and label whether that time-series is something we understand or is new.”

One reason traditional models are custom-built to their purpose is that fields differ in reporting frequency and trends.

For example, epidemic data is often reported weekly and goes through seasonal peaks with occasional outbreaks. Economic data is captured quarterly and typically remains consistent and monotone over time.

LPTM’s adaptive segmentation module allows it to overcome these timing differences across datasets. When LPTM receives a dataset, the module breaks the data into segments of different sizes. It then scores the possible ways to segment the data and chooses the segmentation from which it can most easily learn useful patterns.
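The article does not detail how LPTM scores candidate segmentations. The Python sketch below (using NumPy) illustrates the general idea with a deliberately simple stand-in: it tries several fixed segment lengths, fits a straight line inside each segment, and picks the length with the best trade-off between fit error and number of segments. The fixed-length candidates, the linear-fit cost, and the `penalty` parameter are hypothetical simplifications, not LPTM's learned module.

```python
import numpy as np


def segment_cost(segment):
    """Sum of squared residuals after fitting a straight line to one segment."""
    if len(segment) < 2:
        return 0.0  # a single point is fit exactly
    t = np.arange(len(segment), dtype=float)
    slope, intercept = np.polyfit(t, segment, 1)
    residuals = segment - (slope * t + intercept)
    return float(residuals @ residuals)


def choose_segment_length(series, candidate_lengths, penalty):
    """Return (length, score) for the fixed segment length with the lowest total score.

    Score = total piecewise-linear fit error + penalty * number of segments.
    """
    series = np.asarray(series, dtype=float)
    best = None
    for length in candidate_lengths:
        segments = [series[i:i + length] for i in range(0, len(series), length)]
        score = sum(segment_cost(s) for s in segments) + penalty * len(segments)
        if best is None or score < best[1]:
            best = (length, score)
    return best


# Toy series that changes direction every four steps.
series = [0, 1, 2, 3, 3, 2, 1, 0, 0, 1, 2, 3]
print(choose_segment_length(series, [2, 4, 6, 12], penalty=1.0))
```

Under this cost, a series that turns often favors short segments, while a slowly changing series favors long ones, which loosely mirrors the weekly-versus-quarterly contrast described above.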

LPTM’s performance, enhanced through the innovation of adaptive segmentation, earned the work acceptance for presentation at NeurIPS 2024. NeurIPS is one of three primary international conferences on high-impact research in AI and ML. NeurIPS 2024 takes place Dec. 10-15.

Ph.D. student Harshavardhan Kamarthi partnered with Prakash, his advisor, on LPTM. The duo are among the 162 Georgia Tech researchers presenting over 80 papers at the conference.

Prakash is one of 46 Georgia Tech faculty with research accepted at NeurIPS 2024. Nine School of CSE faculty members, nearly one-third of the school’s faculty, are authors or co-authors of 17 papers accepted at the conference.

Along with sharing their research at NeurIPS 2024, Prakash and Kamarthi released an open-source library of foundational time-series modules that data scientists can use in their applications.

“Given the interest in AI from all walks of life, including business, social, and research and development sectors, a lot of work has been done and thousands of strong papers are submitted to the main AI conferences,” Prakash said.

“Acceptance of our paper speaks to the quality of the work and its potential to advance foundational methodology, and we hope to share that with a larger audience.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Nov. 11, 2024

A first-of-its-kind algorithm developed at Georgia Tech is helping scientists study interactions between electrons. This innovation in modeling technology can lead to discoveries in physics, chemistry, materials science, and other fields.

The new algorithm is faster than existing methods while remaining highly accurate. The solver surpasses the limits of current models by demonstrating scalability across chemical system sizes ranging from large to small.

Computer scientists and engineers benefit from the algorithm’s ability to balance processor loads. This work allows researchers to tackle larger, more complex problems without the prohibitive costs associated with previous methods.

The algorithm’s key innovation is its ability to solve block linear systems. According to the researchers, their approach is the first known use of a block linear system solver to calculate electronic correlation energy.

The Georgia Tech team won’t need to travel far to share their findings with the broader high-performance computing community. They will present their work in Atlanta at the 2024 International Conference for High Performance Computing, Networking, Storage and Analysis (SC24).


“The combination of solving large problems with high accuracy can enable density functional theory simulation to tackle new problems in science and engineering,” said Edmond Chow, professor and associate chair of Georgia Tech’s School of Computational Science and Engineering (CSE).

Density functional theory (DFT) is a modeling method for studying electronic structure in many-body systems, such as atoms and molecules.

An important concept DFT models is electronic correlation, the interaction between electrons in a quantum system. Electron correlation energy measures how much the movement of one electron is influenced by the presence of all other electrons.

Random phase approximation (RPA) is used to calculate electron correlation energy. While RPA is very accurate, it becomes computationally more expensive as the size of the system being calculated increases.

Georgia Tech’s algorithm enhances electronic correlation energy computations within the RPA framework. The approach circumvents inefficiencies and achieves faster solution times, even for small-scale chemical systems.

The group integrated the algorithm into existing work on SPARC, a real-space electronic structure software package for accurate, efficient, and scalable solutions of DFT equations. School of Civil and Environmental Engineering Professor Phanish Suryanarayana is SPARC’s lead researcher.

The group tested the algorithm on small silicon crystal systems with as few as eight atoms. The method achieved faster calculation times and scaled to larger system sizes than direct approaches.

“This algorithm will enable SPARC to perform electronic structure calculations for realistic systems with a level of accuracy that is the gold standard in chemical and materials science research,” said Suryanarayana.

RPA is expensive because its computational cost scales quartically with system size. When the size of a chemical system is doubled, the computational cost increases by a factor of 16.

Instead, Georgia Tech’s algorithm scales cubically by solving block linear systems. This capability makes it feasible to solve larger problems at less expense.
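The arithmetic behind the scaling comparison can be written out directly. Here cost is treated as a pure power of the system size $N$, ignoring constant factors (a simplification; real run times also depend on prefactors and hardware):

$$\frac{C_{\text{quartic}}(2N)}{C_{\text{quartic}}(N)} = \frac{(2N)^4}{N^4} = 2^4 = 16, \qquad \frac{C_{\text{cubic}}(2N)}{C_{\text{cubic}}(N)} = \frac{(2N)^3}{N^3} = 2^3 = 8.$$

Doubling the system therefore multiplies the cost by 16 under quartic scaling but by only 8 under cubic scaling, and the ratio between the two, $N^4 / N^3 = N$, grows as systems get larger.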

Solving block linear systems involves a challenging trade-off in choosing the block size. While larger blocks reduce the number of steps the solver needs, each step demands more computation on the processors.
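The article does not describe the team's solver in detail. As a generic illustration of what solving a block linear system means, the sketch below uses block conjugate gradient, a standard method for solving A X = B with many right-hand sides at once, under the assumption that A is symmetric positive definite. The names `block_cg` and `solve_in_blocks` are hypothetical; `block_size` sets how many right-hand sides share each iteration, which is the trade-off described above.

```python
import numpy as np


def block_cg(A: np.ndarray, B: np.ndarray, tol: float = 1e-8,
             max_iter: int = 500) -> np.ndarray:
    """Block conjugate gradient for A @ X = B, assuming A is symmetric positive definite."""
    X = np.zeros_like(B)
    R = B - A @ X                  # residual block, one column per right-hand side
    P = R.copy()                   # block of search directions
    RtR = R.T @ R
    b_norm = np.linalg.norm(B)
    for _ in range(max_iter):
        AP = A @ P                 # one matrix product serves every column in the block
        alpha = np.linalg.solve(P.T @ AP, RtR)  # small dense solve, size block x block
        X = X + P @ alpha
        R = R - AP @ alpha
        if np.linalg.norm(R) <= tol * b_norm:
            break
        RtR_new = R.T @ R
        beta = np.linalg.solve(RtR, RtR_new)
        P = R + P @ beta           # new directions, A-conjugate to the previous block
        RtR = RtR_new
    return X


def solve_in_blocks(A: np.ndarray, B: np.ndarray, block_size: int) -> np.ndarray:
    """Split the right-hand sides into column blocks of at most block_size and solve each."""
    X = np.empty_like(B)
    for start in range(0, B.shape[1], block_size):
        cols = slice(start, start + block_size)
        X[:, cols] = block_cg(A, B[:, cols])
    return X


rng = np.random.default_rng(0)
M = rng.standard_normal((200, 200))
A = M @ M.T / 200 + np.eye(200)    # well-conditioned SPD test matrix
B = rng.standard_normal((200, 6))  # six right-hand sides
X = solve_in_blocks(A, B, block_size=3)
```

With six right-hand sides, `block_size=3` runs two independent block solves, while `block_size=6` runs one; the larger block typically needs fewer iterations, but each iteration multiplies A by more columns and solves a larger dense system.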

Tech’s solution is a dynamic block size selection solver, which allows each processor to independently select the block sizes it calculates. This further assists scaling and improves processor load balancing and parallel efficiency.
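The article does not give the rule the processors use to pick a block size. The toy sketch below only illustrates the general shape of such a decision: each processor looks at its own workload and picks the candidate block size that minimizes an estimated total time. The cost model (iterations shrinking as 100 / sqrt(block size), per-iteration work growing linearly plus quadratically) and every name here, including `choose_block_size`, are hypothetical assumptions.

```python
import math


def hypothetical_iterations(block_size: int) -> float:
    """Assumed model: larger blocks cut the iteration count."""
    return 100.0 / math.sqrt(block_size)


def hypothetical_cost_per_iteration(block_size: int) -> float:
    """Assumed model: per-iteration work grows with block size (linear + quadratic term)."""
    return block_size + 0.05 * block_size ** 2


def choose_block_size(num_rhs, candidates,
                      iterations=hypothetical_iterations,
                      cost_per_iteration=hypothetical_cost_per_iteration):
    """Return the candidate block size with the lowest estimated time for num_rhs systems."""
    # Blocks wider than the local workload would be wasted, so skip them.
    usable = [s for s in candidates if s <= num_rhs] or [min(candidates)]

    def estimated_time(s):
        num_blocks = math.ceil(num_rhs / s)
        return num_blocks * iterations(s) * cost_per_iteration(s)

    return min(usable, key=estimated_time)


CANDIDATES = (1, 2, 4, 8, 16, 32)
# Hypothetical local workloads: number of right-hand sides owned by each processor rank.
local_workloads = {0: 64, 1: 10, 2: 3}
# Each rank decides independently, using only its own workload.
chosen = {rank: choose_block_size(n, CANDIDATES) for rank, n in local_workloads.items()}
print(chosen)  # {0: 16, 1: 4, 2: 2}
```

Because the choice depends only on local information, no processor has to wait on the others to decide, which is one plausible reason per-processor selection could help load balancing.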

“The new algorithm has many forms of parallelism, making it suitable for immense numbers of processors,” Chow said. “The algorithm works in a real-space, finite-difference DFT code. Such a code can scale efficiently on the largest supercomputers.”

Georgia Tech alumni Shikhar Shah (Ph.D. CSE 2024), Hua Huang (Ph.D. CSE 2024), and Ph.D. student Boqin Zhang led the algorithm’s development. The project was the culmination of work for Shah and Huang, who completed their degrees this summer. John E. Pask, a physicist at Lawrence Livermore National Laboratory, joined the Tech researchers on the work.

Shah, Huang, Zhang, Suryanarayana, and Chow are among more than 50 students, faculty, research scientists, and alumni affiliated with Georgia Tech who are scheduled to give more than 30 presentations at SC24. The experts will present their research through papers, posters, panels, and workshops.

SC24 takes place Nov. 17-22 at the Georgia World Congress Center in Atlanta.

“The project’s success came from combining expertise from people with diverse backgrounds ranging from numerical methods to chemistry and materials science to high-performance computing,” Chow said.

“We could not have achieved this as individual teams working alone.”


Oct. 21, 2024

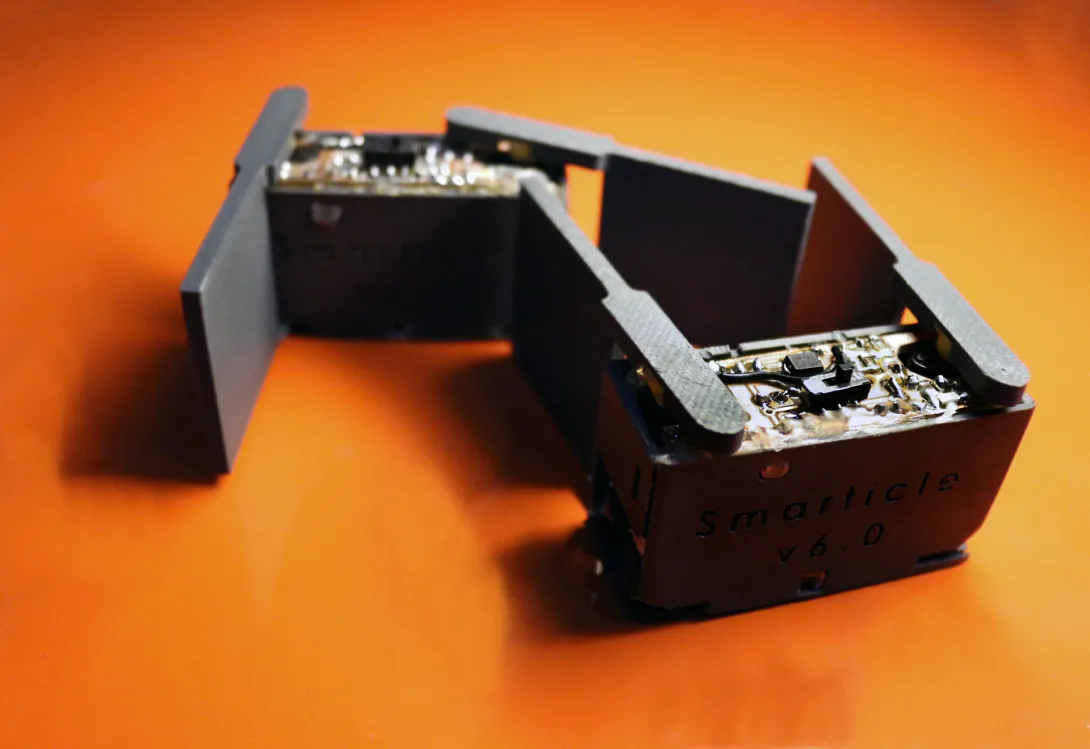

If you’ve ever watched a large flock of birds on the wing, moving across the sky like a cloud with various shapes and directional changes appearing from seeming chaos, or the maneuvers of an ant colony forming bridges and rafts to escape floods, you’ve been observing what scientists call self-organization. What may not be as obvious is that self-organization occurs throughout the natural world, including bacterial colonies, protein complexes, and hybrid materials. Understanding and predicting self-organization, especially in systems that are out of equilibrium, like living things, is an enduring goal of statistical physics.

This goal is the motivation behind a recently introduced principle of physics called rattling, which posits that systems with sufficiently “messy” dynamics organize into what researchers refer to as low rattling states. Although the principle has proved accurate for systems of robot swarms, it has been too vague to be more broadly tested, and it has been unclear exactly why it works and to what other systems it should apply.

Dana Randall, a professor in the School of Computer Science, and Jacob Calvert, a postdoctoral fellow at the Institute for Data Engineering and Science, have formulated a theory of rattling that answers these fundamental questions. Their paper, “A Local-Global Principle for Nonequilibrium Steady States,” published last week in Proceedings of the National Academy of Sciences, characterizes how rattling is related to the amount of time that a system spends in a state. Their theory further identifies the classes of systems for which rattling explains self-organization.
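The article does not state the paper's definitions, and the sketch below does not compute rattling. As a loose, textbook-level illustration of "the amount of time a system spends in a state" in a nonequilibrium steady state, it uses a toy three-state continuous-time Markov chain whose made-up rates break detailed balance. A standard identity says a state's steady-state probability is proportional to how often the chain visits it times its mean holding time per visit; the code checks that identity numerically.

```python
import numpy as np


def stationary(M: np.ndarray) -> np.ndarray:
    """Solve x @ M = 0 with sum(x) = 1, for a matrix M whose rows sum to zero."""
    n = M.shape[0]
    A = np.vstack([M.T, np.ones(n)])
    b = np.zeros(n + 1)
    b[-1] = 1.0
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x


# Toy generator (made-up rates): off-diagonals are jump rates, rows sum to zero.
# Cycle products 0->1->2->0 (0.8) and 0->2->1->0 (0.05) differ, so detailed
# balance fails and the steady state is a nonequilibrium one.
Q = np.array([[-3.0, 2.0, 1.0],
              [0.5, -2.5, 2.0],
              [0.2, 0.1, -0.3]])

pi = stationary(Q)                # long-run fraction of time spent in each state
exit_rates = -np.diag(Q)          # mean holding time in state i is 1 / exit_rates[i]

P = Q / exit_rates[:, None]       # embedded jump chain: where the system goes next
np.fill_diagonal(P, 0.0)
nu = stationary(P - np.eye(3))    # relative visit frequency of each state

time_weighted = nu / exit_rates   # visits x time per visit
time_weighted /= time_weighted.sum()
print(np.round(pi, 4), np.round(time_weighted, 4))
```

The slowest-exiting state (state 2) holds about 0.84 of the steady-state probability, and the two computations agree.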

“When we first heard about rattling from physicists, it was very hard to believe it could be true. Our work grew out of a desire to understand it ourselves. We found that the idea at its core is surprisingly simple and holds even more broadly than the physicists guessed,” said Dana Randall, professor in the School of Computer Science and adjunct professor in the School of Mathematics at the Georgia Institute of Technology.

Beyond its basic scientific importance, the work can be put to immediate use to analyze models of phenomena across scientific domains. Additionally, experimentalists seeking organization within a nonequilibrium system may be able to induce low rattling states to achieve their desired goal. The duo thinks the work will be valuable in designing microparticles, robotic swarms, and new materials. It may also provide new ways to analyze and predict collective behaviors in biological systems at the micro- and nanoscale.

The preceding material is based on work supported by the Army Research Office under ARO MURI Award W911NF-19-1-0233 and by the National Science Foundation under grant CCF-2106687. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the sponsoring agencies.

Jacob Calvert and Dana Randall. A local-global principle for nonequilibrium steady states. Proceedings of the National Academy of Sciences, 121(42):e2411731121, 2024.

Oct. 17, 2024

Two new assistant professors joined the School of Computational Science and Engineering (CSE) faculty this fall. Lu Mi comes to Georgia Tech from the Allen Institute for Brain Science in Seattle, where she was a Shanahan Foundation Fellow.

We sat down with Mi to learn more about her background and to introduce her to the Georgia Tech and College of Computing communities.

Faculty: Lu Mi, assistant professor, School of CSE

Research Interests: Computational Neuroscience, Machine Learning

Education: Ph.D. in Computer Science from the Massachusetts Institute of Technology; B.S. in Measurement, Control, and Instruments from Tsinghua University

Hometown: Sichuan, China (home of the giant pandas)

How have your first few months at Georgia Tech gone so far?

I’ve really enjoyed my time at Georgia Tech. Developing a new course has been both challenging and rewarding. I’ve learned a lot from the process and conversations with students. My colleagues have been incredibly welcoming, and I’ve had the opportunity to work with some very smart and motivated students here at Georgia Tech.

You hit the ground running this year by teaching your CSE 8803 course on brain-inspired machine intelligence. What important concepts do you teach in this class?

This course focuses on comparing biological neural networks with artificial neural networks. We explore questions like: How does the brain encode information, perform computations, and learn? What can neuroscience and artificial intelligence (AI) learn from each other? Key topics include spiking neural networks, neural coding, and biologically plausible learning rules. By the end of the course, I expect students to have a solid understanding of neural algorithms and the emerging NeuroAI field.

When and how did you become interested in computational neuroscience in the first place?

I’ve been fascinated by how the brain works since I was young. My formal engagement with the field began during my Ph.D. research, where we developed algorithms to help neuroscientists map large-scale synaptic wiring diagrams in the brain. Since then, I’ve had the opportunity to collaborate with researchers at institutions like Harvard, the Janelia Research Campus, the Allen Institute for Brain Science, and the University of Washington on various exciting projects in this field.

What about your experience and research are you currently most proud of?

I’m particularly proud of the framework we developed to integrate black-box machine learning models with biologically realistic mechanistic models. We use advanced deep-learning techniques to infer unobserved information and combine this with prior knowledge from mechanistic models. This allows us to test hypotheses by applying different model variants. I believe this framework holds great potential to address a wide range of scientific questions, leveraging the power of AI.

What about Georgia Tech convinced you to accept a faculty position?

Georgia Tech CSE felt like a perfect fit for my background and research interests, particularly within the AI4Science initiative and the development of computational tools for biology and neuroscience. My work overlaps with several colleagues here, and I’m excited to collaborate with them. Georgia Tech also has a vibrant and impactful Neuro Next Initiative community, which is another great attraction.

What are your hobbies and interests when not researching and teaching?

I enjoy photography and love spending time with my two corgi dogs, especially taking them for walks.

What have you enjoyed most so far about living in Atlanta?

I’ve really appreciated the peaceful, green environment with so many trees. I’m also looking forward to exploring more outdoor activities, like fishing and golfing.


Oct. 16, 2024

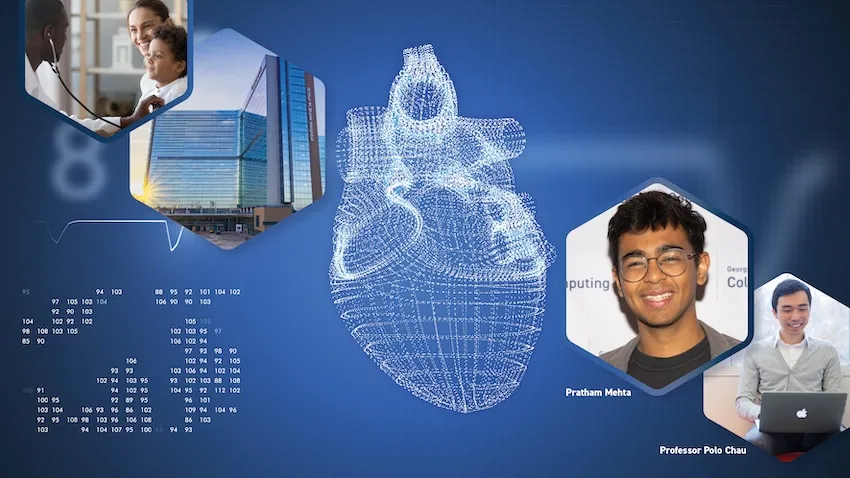

A new surgery planning tool powered by augmented reality (AR) is in development for doctors who need closer collaboration when planning heart operations. Promising results from a recent usability test have moved the platform one step closer to everyday use in hospitals worldwide.

Georgia Tech researchers partnered with medical experts from Children’s Healthcare of Atlanta (CHOA) to develop and test ARCollab. The iOS-based app leverages advanced AR technologies to let doctors collaborate and interact with a patient’s 3D heart model when planning surgeries.

The usability evaluation demonstrates the app’s effectiveness, finding that ARCollab is easy to use and understand, fosters collaboration, and improves surgical planning.

“This tool is a step toward easier collaborative surgical planning. ARCollab could reduce the reliance on physical heart models, saving hours and even days of time while maintaining the collaborative nature of surgical planning,” said M.S. student Pratham Mehta, the app’s lead researcher.

“Not only can it benefit doctors when planning for surgery, it may also serve as a teaching tool to explain heart deformities and problems to patients.”

Two cardiologists and three cardiothoracic surgeons from CHOA tested ARCollab. The two-day study ended with the doctors taking a 14-question survey assessing the app’s usability. The survey also solicited general feedback and top features.

The Georgia Tech group determined from the open-ended feedback that:

- ARCollab enables new collaboration capabilities that are easy to use and facilitate surgical planning.

- Anchoring the model to a physical space is important for better interaction.

- Portability and real-time interaction are crucial for collaborative surgical planning.

Users rated each of the 14 questions on a 7-point Likert scale, with one being “strongly disagree” and seven being “strongly agree.” The 14 questions were organized into five categories: overall, multi-user, model viewing, model slicing, and saving and loading models.

The multi-user category attained the highest rating with an average of 6.65. This included a unanimous 7.0 rating that it was easy to identify who was controlling the heart model in ARCollab. The scores also showed it was easy for users to connect with devices, switch between viewing and slicing, and view other users’ interactions.

The model slicing category received the lowest, but still strong, average of 5.5. These questions assessed how easy the finger gestures were to use and understand and how useful it was to toggle the slice direction.

Based on feedback, the researchers will explore adding support for remote collaboration. This would help doctors collaborate when they are not in a shared physical space. Another planned improvement is extending the save feature to support multiple saved states.

“The surgeons and cardiologists found it extremely beneficial for multiple people to be able to view the model and collaboratively interact with it in real-time,” Mehta said.

The user study took place in a CHOA classroom. CHOA also provided a 3D heart model for the test using anonymized medical imaging data. Georgia Tech’s Institutional Review Board (IRB) approved the study, and the group collected data in accordance with Institute policies.

The five test participants regularly perform cardiovascular surgical procedures and are employed by CHOA.

The Georgia Tech group provided each participant with an iPad Pro running the latest iOS version with the ARCollab app installed. Using commercial devices and software aligns with the group’s goal of making the tool universally available and deployable.

“We plan to continue iterating ARCollab based on the feedback from the users,” Mehta said.

“The participants suggested the addition of a ‘distance collaboration’ mode, enabling doctors to collaborate even if they are not in the same physical environment. This allows them to facilitate surgical planning sessions from home or otherwise.”

The Georgia Tech researchers are presenting ARCollab and the user study results at IEEE VIS 2024, the Institute of Electrical and Electronics Engineers (IEEE) visualization conference.

IEEE VIS is the world’s most prestigious conference for visualization research and the second-highest rated conference for computer graphics. It is taking place virtually Oct. 13-18 after being moved from its venue in St. Pete Beach, Florida, due to Hurricane Milton.

The ARCollab research group's presentation at IEEE VIS comes months after they shared their work at the Conference on Human Factors in Computing Systems (CHI 2024).

Undergraduate student Rahul Narayanan and alumni Harsha Karanth (M.S. CS 2024) and Haoyang (Alex) Yang (CS 2022, M.S. CS 2023) co-authored the paper with Mehta. They study under Polo Chau, a professor in the School of Computational Science and Engineering.

The Georgia Tech group partnered with Dr. Timothy Slesnick and Dr. Fawwaz Shaw from CHOA on ARCollab’s development and user testing.

"I'm grateful for these opportunities since I get to showcase the team's hard work," Mehta said.

“I can meet other like-minded researchers and students who share these interests in visualization and human-computer interaction. There is no better form of learning.”

