Jul. 19, 2024

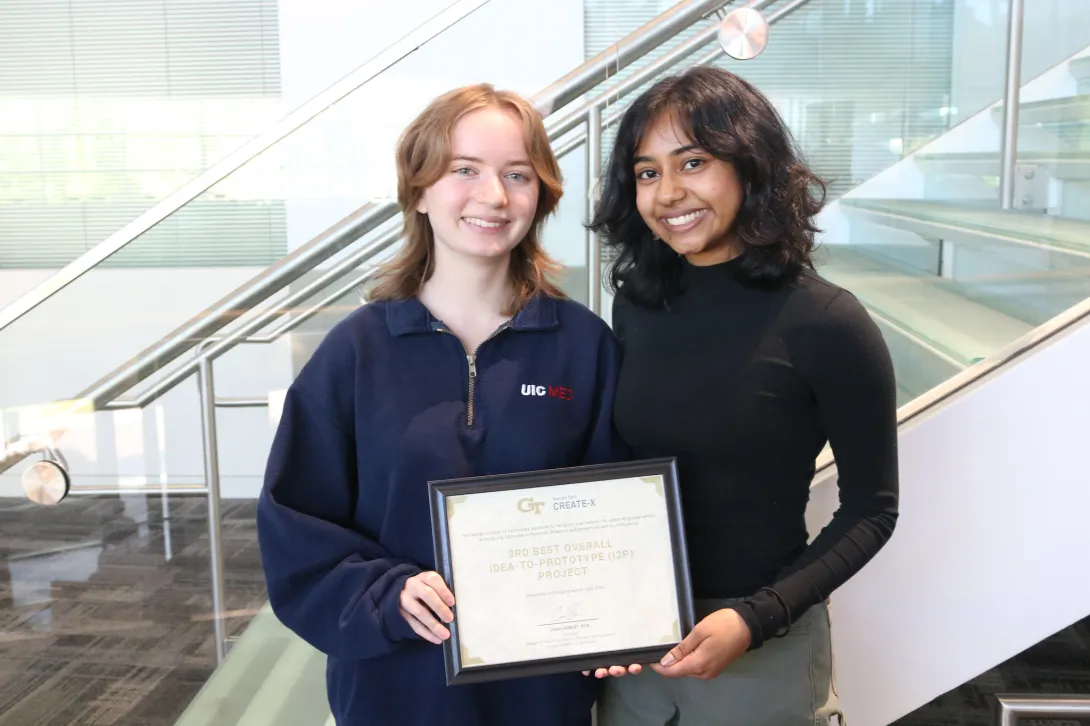

CREATE-X is built to help students integrate entrepreneurship into their academic journey through courses, workshops, and a startup accelerator. This spring, a new set of students displayed their solutions to real-world problems at the I2P Showcase. It’s our privilege to shine a light on and celebrate those journeys. Today’s spotlight focuses on the spring I2P Showcase third-place winners.

Electrosuit

Aubrey Hall, a first-year biomedical student, and Sherya Chakraborty, a first-year computer science major, founded a startup to produce a garment that eases the use of at-home, prescribed electrical stimulation for people with chronic pain, stroke, and motor impairments.

What made you interested in building this solution?

“I did research at Northwestern for a couple of years before this, and some of the patients I worked with had severe stroke and spasticity in their arms,” Chakraborty said. “I found out that when they tried using at-home prescribed electrical stimulation, they had trouble setting it off themselves. So, we created a garment to ease pressure on that.”

What part of the course was most helpful to you?

“One of our mentors, Sun Mi Park, was the first person to patent printable wires on fabric, and that gave us some inspiration to make our garment even more compact, easier to use, and integrate some interesting ideas that we wouldn’t have been able to without our mentors. So, our mentors are honestly the best part of the program,” Chakraborty said.

“For me, you don’t get a lot of chances to apply these engineering courses outside of the classroom,” said Hall. “This course is a really interesting way to get firsthand experience building a prototype and really understand the engineering process.”

What’s so special about CREATE-X?

“I think these student projects are the future, and a lot of these projects make it out of college and become actual companies. Giving students that possibility to make a change just from a simple idea and fueling that with funding so we don’t have to take risks out of our own pockets is a really big deal,” Chakraborty said.

“It’s helpful to have that safety net, knowing that you have your mentors to back you, and also the people of the program to back you. It brings a lot of security and opportunity to try different things out and not have to be so fearful of failure. Even if you fail a million times, you can get back up and try again,” Hall said.

What’s the best insight you’ve gained from doing this?

“I think one big misconception is that entrepreneurship has a lot to do with finance and business and just lucrative ideas, but it’s pretty important to understand that you can solve a seemingly everyday problem,” said Chakraborty. “If it affects you or your friends, it’s still worth trying to find a way to solve it, especially backed up with money and mentors from CREATE-X. What’s the harm in trying something out?”

“Don’t try to make it feel like it’s an all-or-nothing project,” Hall said. “You’re allowed to live your life as a college student but also pursue these interesting ideas and figure out if you enjoy entrepreneurship. It shouldn’t be this daunting task where if you don’t put everything in, you’re going to fail.”

“It’s also important to keep an open mind. We might come in with an idea and a very specific way of executing that idea, but we found out through talking with mentors, and with other students and people who gave us advice, that sometimes the idea you come in with is not going to be the same thing you end up with,” Chakraborty said.

Next Steps

“We’ve only done four or five prototypes so far,” she noted. “We want to do at least 12 of those prototypes and keep working with our mentors, keep making connections at Emory, and just constantly getting more and more feedback about our prototypes until we get to a state where we’re satisfied, and we can demo our product and work with physical therapists across Atlanta.”

If you’re a student interested in building your own product for college credit, apply for I2P. And join us for Demo Day, Aug. 29, at 5 p.m., in the Georgia Tech Exhibition Hall to see new CREATE-X founders launch products in a variety of industries. Tickets are free but limited. Register today to secure your spot.

News Contact

Breanna Durham

Marketing Strategist

Jul. 18, 2024

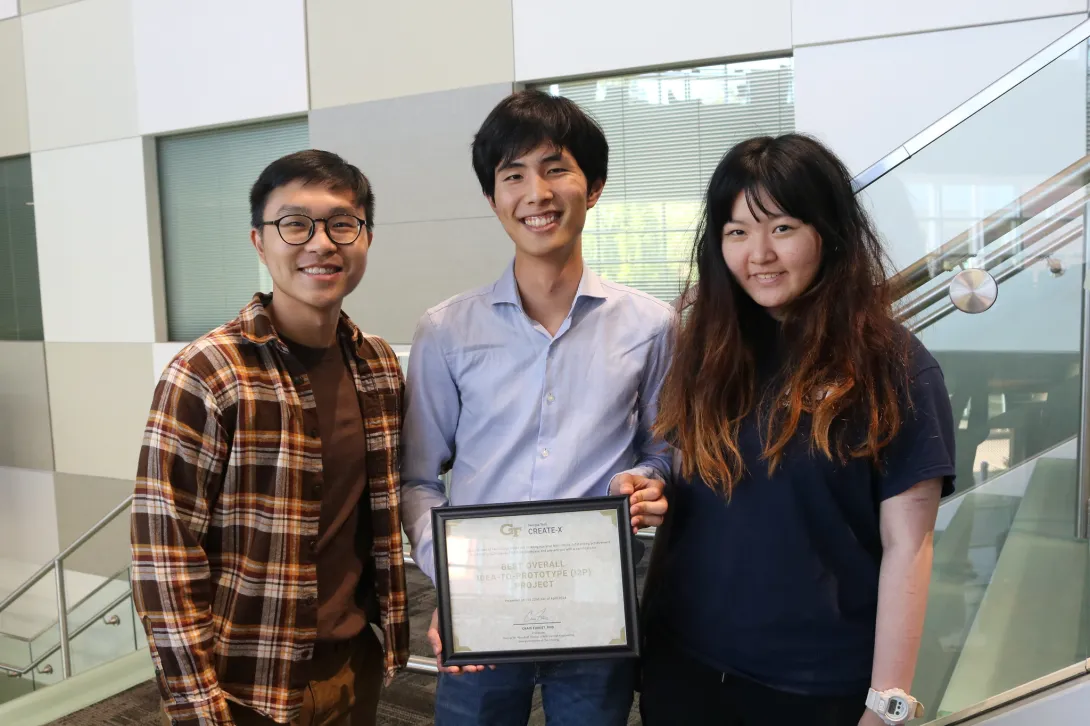

During the school year and the summer, Georgia Tech students can incorporate entrepreneurship into their college experience through courses, workshops, special events, and even a startup accelerator. CREATE-X invites you to delve into the journeys of our top achievers, this time focusing on the Spring 2024 I2P Showcase first-place winners:

Dolfin Solutions

Marianna Cao, James Gao, and Jaeheon Shim, first-year computer science majors, are the founders of Dolfin Solutions, a personal financial management platform that promises a unified solution to budgeting, transaction management, and expense tracking, among other personal finance tasks.

What challenges did you have in I2P, and how did you work through them?

“We were really lucky to get an excellent mentor, Aaron Hillegass. He has a lot of experience in the industry as a startup founder himself, and he gave us a lot of help, both technical as well as business, throughout the process. That helped us make better decisions,” Gao said.

“I think the biggest challenge was, I had done projects in the past by myself, writing the full stack, but working together, communicating the requirements, and integrating everyone's different code at the end was a little bit of a logistical struggle,” Shim said. “But we managed to figure it out.”

What advice do you have for students interested in I2P or entrepreneurship in general?

“Go for it. It's a three-credit course, so it counts toward your junior capstone as well. You get $500. Now is the perfect time to start because you don't have much to lose. If you're doing I2P and your company fails, you still have four years of college; you can still pursue a traditional path. It's a little risk but a lot to gain,” Shim said.

“Even if you pivot or change your idea, it's important to believe in what you started,” said Cao. “If you don't believe in your app, then nobody else does. Right now, you have all of the friends, mentors, professors, and the right resources, and money is not an issue. It's a good opportunity for you to work on it on the side, and maybe it could turn into something.”

What’s Next?

“We’re going to build for the iOS and Android platforms, and then we're going to deploy hopefully by the end of summer,” Shim said.


News Contact

Breanna Durham

Marketing Strategist

Mar. 04, 2024

Hyundai Motor Group Innovation Center Singapore hosted the Meta-Factory Conference Jan. 23-24. It brought together academic leaders, industry experts, and manufacturing companies to discuss technology and the next generation of integrated manufacturing facilities.

Seth Hutchinson, executive director of the Institute for Robotics and Intelligent Machines at Georgia Tech, delivered a keynote lecture on “The Impacts of Today’s Robotics Innovation on the Relationship Between Robots and Their Human Co-Workers in Manufacturing Applications” — an overview of current state-of-the-art robotic technologies and future research trends for developing robotics aimed at interactions with human workers in manufacturing.

In addition to delivering the keynote, Hutchinson participated in the Hyundai Motor Group's Smart Factory Executive Technology Advisory Committee (E-TAC) panel on comprehensive future manufacturing directions and toured the new Hyundai Meta-Factory to observe how digital-twin technology is being applied in its human-robot collaborative manufacturing environment.

Hutchinson is a professor in the School of Interactive Computing. He received his Ph.D. from Purdue University in 1988, and in 1990 joined the University of Illinois Urbana-Champaign, where he was professor of electrical and computer engineering until 2017 and is currently professor emeritus. He has served on the Hyundai Motor Group's Smart Factory E-TAC since 2022.

Hyundai Motor Group Innovation Center Singapore is Hyundai Motor Group’s open innovation hub to support research and development of human-centered smart manufacturing processes using advanced technologies such as artificial intelligence, the Internet of Things, and robotics.

- Christa M. Ernst

Related Links

- Hyundai Newsroom Article: Link

- Event Link: https://mfc2024.com/

- Keynote Speakers: https://mfc2024.com/keynotes/

News Contact

Christa M. Ernst - Research Communications Program Manager

christa.ernst@research.gatech.edu

Dec. 07, 2023

Students tackled climate change in the Fall 2023 Emory Global Health Institute (EGHI)/Georgia Institute of Technology (GT) Global Health Hackathon, Nov. 11, at Tech Square ATL Social. Teams competed for cash prizes and a spot in GT Startup Launch, and first place went to Team iManhole. The team created an integrated system that gathers real-time data from manholes and uses machine learning algorithms to predict flooding to manage traffic and evacuation routes.

“The effects of climate change are felt in every country, with the brunt and burden of an unmanaged climate crisis threatening to set back global health progress by eroding decades of poverty eradication and health equity efforts worldwide,” said Dr. Rebecca Martin, director of the Emory Global Health Institute. “Students are an important partner in our work as a global community to mitigate the impacts of climate change on health, safety, and security.”

The EGHI/GT Global Health Hackathon is a partner event between EGHI and CREATE-X. It provides multidisciplinary student teams from Emory University and the Georgia Institute of Technology an opportunity to create technology-based product solutions for global health problems. The target for this fall’s event was creating solutions that address urban flooding, urban heat, or global sea level rise in densely populated, low-resource urban settings. Prizes included $4,000 and a golden ticket into CREATE-X Startup Launch for first-place winners, $3,000 for second-place winners, $2,000 for third-place winners, and $500 each for two honorable mention winners.

“This hackathon continues to be a wonderful partnership between our two institutions that gives these talented students the platform and support to put forward solutions to the most pressing issues we face today,” Rahul Saxena, director of CREATE-X, said. “Each hackathon, I’m increasingly impressed with their ingenuity and their dedication to build something of impact.”

Check out the event program on the EGHI website and see photos from the event on the CREATE-X Flickr account. The full list of the winners of this year’s event includes:

1st Place: iManhole

An integrated system that gathers real-time data from manholes and uses machine learning algorithms to predict flooding to manage traffic and evacuation routes.

Team Members: Imran Shah, Leonardo Molinari, and Jiaqi Yang

2nd Place: Canopy

A climate-tech software platform for democratizing climate analytics using machine learning for urban development planning.

Team Members: Deesha Panchal, Kruthik Ravikanti, Vaibhav Mishra, Nicholas Swanson, Jennifer Samuel, and Vaishnavi Sanjeev

3rd Place: Floodwise

A package of effective simulations and an informed chatbot that help facilitate wise decisions during floods.

Team Members: Ansh Gupta, Dimi Deju, Mukund Chidambaram, and Sahit Mamidipaka

Honorable Mention

Conquering Heat Islands

Process and hardware that use excess solar power to mine crypto.

Team Members: Rida Akbar, DJ Louis, Edward Zheng, Dmitri Kalinin, and Jade Bondy

Real-Time Computational Modeling of Urban Flooding and Evacuation in Local Atlanta Communities

Integrated system to gather real-time data from manholes and use machine learning algorithms to predict flooding and optimize traffic/evacuation.

Team Members: Imran Shah, Leonardo Molinari, and Jiaqi Yang

News Contact

Breanna Durham

Marketing Strategist

breanna.durham@gatech.edu

Nov. 30, 2023

The Strategic Energy Institute (SEI) of Georgia Tech is excited to announce that Bettina Arkhurst is the 2023 recipient of the James G. Campbell Fellowship Award. Arkhurst’s commitment to academics, research, and community service has been recognized by the award committee. She is a Ph.D. candidate advised by Katherine Fu, professor in the George W. Woodruff School of Mechanical Engineering.

Arkhurst holds a bachelor’s degree in mechanical engineering from Massachusetts Institute of Technology and a master’s degree in mechanical engineering from Georgia Tech. Her research seeks to understand how concepts of energy justice can be applied to renewable energy technology design to better consider marginalized and vulnerable populations. She strives to create frameworks and tools for mechanical engineers to apply as they design energy technologies for all communities.

As an energy equity intern at the National Renewable Energy Laboratory, Arkhurst has worked with colleagues to better understand the role of researchers and engineers in the pursuit of a more just clean energy transition. She is also a leader in the Woodruff School’s graduate student mental health committee, which seeks to improve the culture around graduate student mental health and well-being. Additionally, Arkhurst is working with the Georgia Tech Center for Sustainable Communities Research and Education (SCoRE) to develop a course on community engagement and engineering that will launch in Spring 2024.

The Energy, Policy, and Innovation Center (EPICenter) and the Strategic Energy Institute are proud to announce the 2023 Spark Award recipients: Jake Churchill, Jordan R. Hale, Andrew G. Hill, Henry J. Kantrow, Emily Marshall, and Jacob W. Tjards. The award honors outstanding leadership in advancing student engagement in energy research.

Churchill is a master’s student in mechanical engineering advised by Akanksha Menon, assistant professor in the Woodruff School. Working with Menon in the Water-Energy Research Lab, his research focuses on coupling reverse osmosis desalination with renewable energy and storage technologies to provide clean, sustainable, and affordable water in the face of growing global water stress. Churchill has led the Georgia Tech Energy Club’s Solar District Cup team for three years, guiding students interested in solar energy careers. He has also been involved with several SEI initiatives, including EPICenter’s high school summer camp, Energy Unplugged. He is currently facilitating a student-led study to quantify the benefits of cleaning photovoltaic panels using the rooftop array at the Carbon Neutral Energy Solutions Lab.

Hale is pursuing a Ph.D. in chemistry, specializing in theoretical and computational chemistry under Joshua Kretchmer, assistant professor in the School of Chemistry and Biochemistry. His current research focus is utilizing various quantum dynamics formalisms and unique computational techniques to identify the microscopic mechanisms of electron transport in perovskite solar cells. Hale has mentored high school students, teaching them the fundamentals of computational chemistry and various programming skills. Additionally, he has been actively engaged with undergraduate students from other universities both in and out of Georgia through the Summer Theoretical and Computational Chemistry workshop.

Hill is a Ph.D. candidate in the Soper Lab in the School of Chemistry and Biochemistry. His research is focused on the activation of strong chemical bonds using Earth-abundant metals for energy conversion and storage. He has taken an active leadership role on campus, in part through service as the president of the Georgia Tech Chemistry Graduate Student Forum.

Marshall is a second-year graduate student working for Alan Doolittle, professor in the School of Electrical and Computer Engineering. She uses specialized molecular beam epitaxy techniques to grow high-quality III-nitride materials for next-generation power, radio frequency, and optoelectronic devices. Her current research focuses on improving the fundamental understanding of the scandium catalytic effect to optimize the growth of scandium aluminum nitride, a material that shows great promise for applications in future power grids. In addition to her research, Marshall is committed to teaching, having volunteered for five semesters serving her fellow students as a peer instructor at the Hive Makerspace and currently training junior members of her lab to grow semiconductors via molecular beam epitaxy. After earning her master’s and Ph.D., she hopes to continue teaching, mentoring, and connecting others across the world in an effort to bring about a brighter future.

Kantrow is a Ph.D. candidate in the School of Chemical and Biomolecular Engineering, co-advised by Natalie Stingelin and Carlos Silva. His research seeks to understand the photophysics of semiconducting polymers operating in dynamic dielectric environments and to provide material design guidelines for solar fuel technologies. He is an active student leader in the Center for Soft Photo-Electrochemical Systems, where he also serves on the energy justice committee. He served as the secretary of the Association for Chemical Engineering Graduate Students (AChEGS) in 2022 and continues to mentor first-year graduate students in AChEGS and through the Pride Peers Program at Georgia Tech.

Tjards is a graduate research assistant at Georgia Tech’s Sustainable Thermal Systems Laboratory. He graduated with a bachelor’s degree in mechanical engineering from Georgia Tech in 2021 before beginning his Ph.D. program, where he is studying energy systems. Tjards’ research is focused on modeling new manufacturing processes of drywall and aluminum to reduce water consumption during production. Additionally, he is working on a new technique for water purification. While in school, he has been a teaching assistant and instructor for the undergraduate mechanical engineering course on energy systems analysis and design (ME 4315). In his free time, Tjards enjoys Formula 1 racing, Georgia Tech baseball games, and woodworking.

News Contact

Priya Devarajan | Research Communications Program Manager, SEI

Oct. 19, 2023

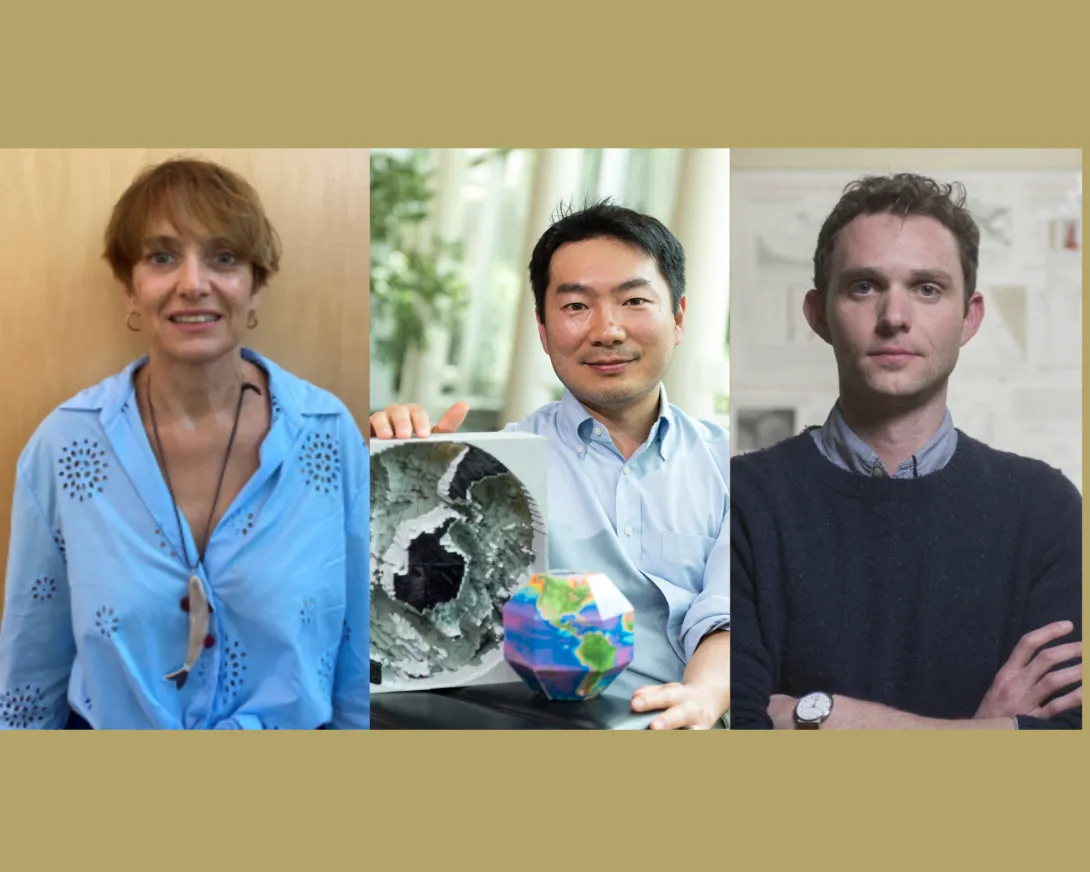

Three Georgia Tech School of Earth and Atmospheric Sciences researchers — Professor and Associate Chair Annalisa Bracco, Professor Taka Ito, and Georgia Power Chair and Associate Professor Chris Reinhard — will join colleagues from Princeton, Texas A&M, and Yale University for an $8 million Department of Energy (DOE) grant that will build an “end-to-end framework” for studying the impact of carbon dioxide removal efforts for land, rivers, and seas.

The proposal is one of 29 DOE Energy Earthshot Initiatives projects recently granted funding, and among several led by and involving Georgia Tech investigators across the Sciences and Engineering.

Overall, DOE is investing $264 million to develop solutions for the scientific challenges underlying the Energy Earthshot goals. The 29 projects also include establishing 11 Energy Earthshot Research Centers led by DOE National Laboratories.

The Energy Earthshots connect the Department of Energy's basic science and energy technology offices to accelerate breakthroughs towards more abundant, affordable, and reliable clean energy solutions — seeking to revolutionize many sectors across the U.S., and relying on fundamental science and innovative technology to be successful.

Carbon Dioxide Removal

The School of Earth and Atmospheric Sciences project, “Carbon Dioxide Removal and High-Performance Computing: Planetary Boundaries of Earth Shots,” is part of the agency’s Science Foundations for the Energy Earthshots program. Its goal is to create a publicly accessible computer modeling system that will track progress in two key carbon dioxide removal (CDR) processes: enhanced earth weathering and global ocean alkalinization.

In enhanced earth weathering, carbon dioxide is converted into bicarbonate by spreading minerals like basalt on land, which traps rainwater containing CO2. That gets washed out by rivers into oceans, where it is trapped on the ocean floor. If used at scale, these nature-based climate solutions could remove atmospheric carbon dioxide and alleviate ocean acidification.

The research team notes that there is currently “no end-to-end framework to assess the impacts of enhanced weathering or ocean alkalinity enhancement — which are likely to be pursued at the same time.”

“The proposal is for a three-year effort, but our hope is that the foundation we lay down in that time will represent a major step forward in our ability to track carbon from land to sea,” says Reinhard, the Georgia Power Chair who is a co-investigator on the grant.

“Like many folks interested in better understanding how climate interventions might impact the Earth system across scales, we are in some ways building the plane in midair,” he adds. “We need to develop and validate the individual pieces of the system — soils, rivers, the coastal ocean — but also wire them up and prove from observations on the ground how a fully integrated model works.”

That will involve the use of several existing computer models, along with Georgia Tech’s PACE supercomputers, Professor Ito explains. “We will use these models as a tool to better understand how the added alkalinity, carbon and weathering byproducts from the soils and rivers will eventually affect the cycling of nutrients, alkalinity, carbon and associated ecological processes in the ocean,” Ito adds. “After the model passes the quality check and we have confidence in our output, we can start to ask many questions about assessment of different carbon sequestration approaches or downstream impacts on ecosystem processes.”

Professor Bracco, whose recent research has focused on rising ocean heat levels, says CDR is needed just to keep ocean systems from warming about 2 degrees centigrade (Celsius).

“Ninety percent of the excess heat caused by greenhouse gas emissions is in the oceans,” Bracco shares, “and even if we stop emitting altogether tomorrow, that change we imprinted will continue to impact the climate system for many hundreds of years to come. So in terms of ocean heat, CDRs will help in not making the problem worse, but we will not see an immediate cooling effect on ocean temperatures. Stabilizing them, however, would be very important.”

Bracco and co-investigators will study the soil-river-ocean enhanced weathering pipeline “because it’s definitely cheaper and closer to scale-up.” Reverse weathering can also happen on the ocean floor, with new clays chemically formed from ocean and marine sediments, and CO2 is included in that process. “The cost, however, is higher at the moment. Anything that has to be done in the ocean requires ships and oil to begin,” she adds.

Reinhard hopes any tools developed for the DOE project would be used by farmers and other land managers to make informed decisions on how and when to manage their soil, while giving them data on the downstream impacts of those practices.

“One of our key goals will also be to combine our data from our model pipeline with historical observational data from the Mississippi watershed and the Gulf of Mexico,” Reinhard says. “This will give us some powerful new insights into the impacts large-scale agriculture in the U.S. has had over the last half-century, and will hopefully allow us to accurately predict how business-as-usual practices and modified approaches will play out across scales.”

News Contact

Writer: Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209

Editor: Jess Hunt-Ralston

Aug. 31, 2023

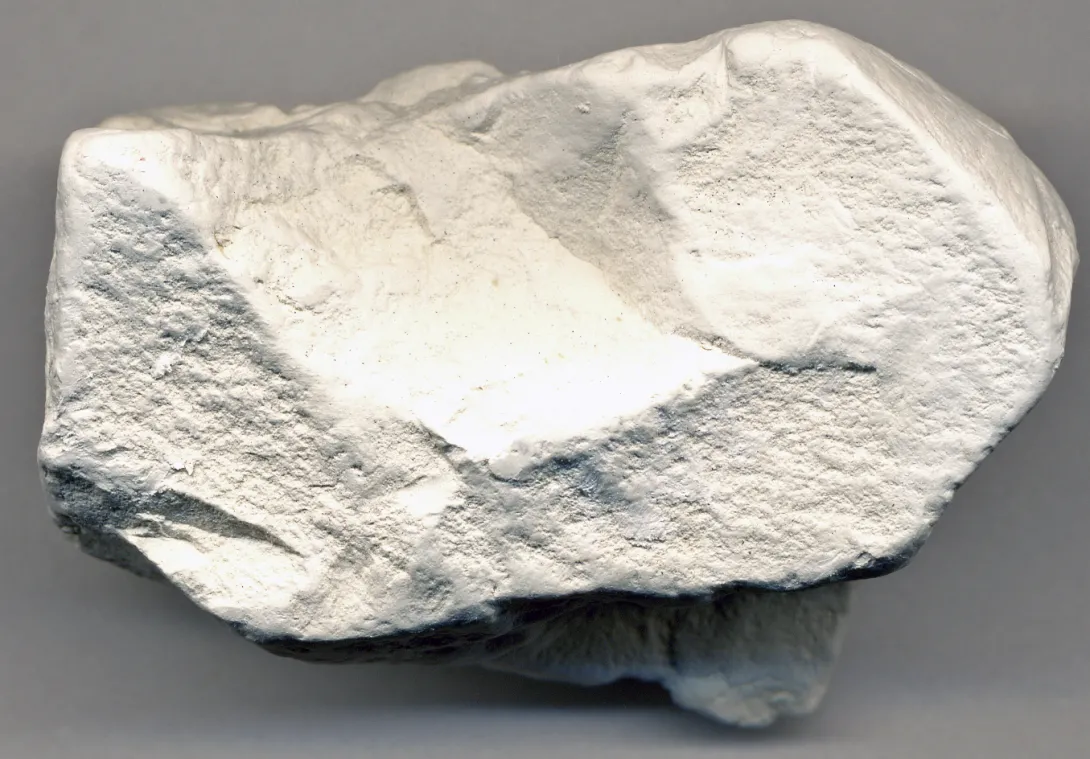

Yuanzhi Tang has received a National Science Foundation grant to see if areas along the middle and coastal plains of Georgia that produce a highly sought-after clay are also home to large amounts of rare earth elements (REEs) needed for a wide range of industries, including rapidly evolving clean energy efforts.

Tang is an associate professor in the School of Earth and Atmospheric Sciences at Georgia Tech. She is joined by Crawford Elliott, associate professor at Georgia State University, on their proposal, “The occurrences of the rare earth elements in highly weathered sedimentary rocks, Georgia kaolins,” funded by the NSF Division of Earth Sciences.

All about REEs

REEs such as cerium, terbium, neodymium, and yttrium are critical minerals used in many industrial technology components, such as semiconductors, permanent magnets, rechargeable batteries (smartphones, computers), phosphors (flat screen TVs, light-emitting diodes), and catalysts (fuel combustion, auto emissions controls, water purification). They impact a wide range of industries such as health care, transportation, power generation (including wind turbines), petroleum refining, and consumer electronics.

“With the increasing global demand for green and sustainable technologies, REE demand is projected to increase rapidly in the U.S. and globally,” Tang says. “Yet currently the domestic REE production is very low, and the U.S. relies heavily on imports. The combination of growing demand and high dependence on international supplies has prompted the U.S. to explore new resources and develop environmentally friendly extraction and processing technologies.”

Georgia geology

Kaolin is a white, aluminosilicate clay mineral used in making paper, plastics, rubber, paints, and many other products. More than $1 billion worth of kaolin is mined from Georgia’s kaolin deposits every year, more than in any other state.

Tang and Elliott say considerable amounts of the REEs have been found in the waste residues generated from Georgia kaolin mining.

“These occurrences have high REE contents and might add significantly to domestic resources,” Tang says. “By understanding the geological and geochemical processes controlling the occurrence and distribution of REEs in these weathered environments, we might be able to provide fundamental information for the identification of REE resources, and the design of efficient and green extraction technologies.”

“The new work with Dr. Tang has the potential to advance our fundamental understanding of the occurrences, mineralogical speciation, and distribution of the REEs in bauxite and kaolin ore,” Elliott says. “I am thrilled to be working with Dr. Tang on this project.”

Laterite thinking

The Department of Energy notes the 17 rare earth elements are found in highly weathered environments, such as the laterites, a type of soil and rock located in eastern and southeastern China, which currently comprises around 80 percent of the world’s REE reserves. To promote domestic production of REEs, the NSF sought proposals to explore natural unconventional element resources located in highly weathered sedimentary/regolith (loose rocky material covering bedrock) settings in the U.S. Georgia’s kaolin deposits and mines extend in the state from southwest to northeast, paralleling the state’s ‘fall line’ that separates the Piedmont Plateau from the coastal plains.

With the NSF grant, Tang and Elliott will find out more about the geochemical factors and processes controlling REE mobility, distribution, and fractionation (enrichment of light REE versus heavy REE) in these environments, which can provide the foundation for identifying domestic resources and for the rational design of extraction technologies.

Community connections

The proposed work will also integrate research with education, combining student training with undergraduate education and research, as well as K-12 and community outreach emphasizing the participation of underrepresented groups in geological sciences.

The grant relates to Tang’s work at two Georgia Tech interdisciplinary research institutes dedicated to sustainability, energy, and climate: the Strategic Energy Institute and the Brook Byers Institute for Sustainable Systems (BBISS), where she is a co-lead with Hailong Chen, an associate professor in the School of Materials Science and Engineering. Tang and Chen’s BBISS project is “Sustainable Resources for Clean Energy.” Tang also serves as an SEI/BBISS initiative lead on sustainable resources.

“The state of Georgia has already been experiencing rapid and exciting developments in the clean energy industry,” Tang says. “We hope to bridge an important link in this space. We hope to help identify and explore regional critical resources for clean energy development by both understanding the geological/geochemical fundamentals, and developing sustainable extraction technologies.”

Georgia Tech is also investing in the community outreach and social aspects of energy research, not just in science and engineering, Tang adds. “Collaboration with Georgia State University also gives exciting opportunities for the engagement with underrepresented student groups, especially in geological sciences, which will serve in the long term for workforce development.”

News Contact

Writer: Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209

Editor: Jess Hunt-Ralston

Jul. 26, 2023

Graduate students, under the guidance of SCL affiliated faculty member Jianjun Shi, have recently received well-deserved recognition for their accomplishments. The students' research interests revolve around the use of machine learning and data analytics in relation to advanced manufacturing.

Michael Biehler (advisor: Professor Jianjun Shi)

- Mary G. and Joseph Natrella Scholarship, American Statistical Association (ASA) (2023)

- Best Student Paper Award (Winner) Quality Control and Reliability Engineering (QCRE) Division, IISE (2023)

- For the paper: M. Biehler, D. Lin, J. Shi (2023): “DETONATE: Nonlinear Dynamic Evolution Modeling of Time-dependent 3-dimensional Point Cloud Profiles,” IISE Transactions

- Best Student Paper Award (Finalist) Data Analytics and Information Systems (DAIS) Division, IISE (2023)

- For the paper: M. Biehler, A. Kulkarni, J. Li, J. Shi (2023+): “MULTI-MODAL: MULTI-fidelity, multi-modality 3D shape modeler,” submitted to IEEE Transactions on Automation Science and Engineering

- Phillip J. and Delores A. Scott Graduate Student Health and Wellness Award, H. Milton Stewart School of Industrial and Systems Engineering, Georgia Tech (2023)

- IHE-LeaD Fellow, Burroughs Wellcome Fund, Interdisciplinary Health and Environment Leadership Development (2022-2023)

Alina Gorbunova (advisors: Professor Jianjun Shi and Professor Kamran Paynabar)

- NSF Graduate Research Fellowship (2023)

Shancong Mou (advisor: Professor Jianjun Shi)

- Best Track Paper Award (Winner), Quality Control and Reliability Engineering (QCRE) Division, IISE (2023)

- For the paper: Mou, S., Gu, X., Cao, M., Bai, H., Huang, P., Shan, J., Shi, J.*, 2023 “RGI: Robust GAN-Inversion for Generic Pixel-wise Anomaly Detection and Mask-free Image Inpainting”, The International Conference on Learning Representations (ICLR 2023).

- John S.W. Fargher Jr. Scholarship, IISE (2023)

- Angela P. and Reed J. Baker Research Excellence Award, School of ISyE, Georgia Institute of Technology (2023)

Zihan Zhang (advisors: Professor Jianjun Shi and Professor Kamran Paynabar)

- Aerospace and Test Measurement Division Scholarship, ISA (2023)

- ISA Scholarship, ISA (2023)

- Gilbreth Memorial Scholarship, IISE (2023)

- NCORE Student Scholar, National Conference on Race and Ethnicity in Higher Education (2023)

May. 30, 2023

When Nathanael Koh (CmpE ’22), Ivan Zou (CmpE ’23), and Bowen “Bruce” Tan (a second-year computer science major) were all students at Georgia Tech, they noticed a sustainability problem close to home – the vast amounts of food being discarded in Georgia Tech’s dining halls. They wondered what was at the heart of the waste. Were the portions too large? Were the vegetables overcooked? Was the meat dry? Nathanael, Ivan, and Bruce launched a startup, Raccoon Eyes, to discover why students throw food away and to help dining hall managers address the problem. On March 31, 2023, the team took second prize in the Sustainable-X Showcase. With the help of prize money and investment, Raccoon Eyes is pursuing its goal to reduce food waste by transforming each cafeteria and dining hall across the nation. The Ray C. Anderson Center for Sustainable Business, which leads Sustainable-X (a Sustainability Next Georgia Tech Strategic Plan initiative) in partnership with CREATE-X, asked Nathanael Koh to share Raccoon Eyes’ startup journey.

Where did you get the idea for Raccoon Eyes?

We first got the idea from our experiences in Georgia Tech’s dining halls, mainly West Village Dining Commons. One day as Ivan was leaving the cafeteria, he saw the kitchen staff throwing out a lot of breakfast food items. We continued to take notice of waste. We discovered that pre-consumer (kitchen) and post-consumer (student) waste is a big problem at Georgia Tech and other universities. We wanted to change how students and chefs alike look at waste.

What environmental challenge does Raccoon Eyes address?

Food waste is a big problem. College dining halls in the U.S. produce 7.9 billion pounds of food waste per year. That food waste produces 175 tons of carbon emissions. Overall, food waste has a larger carbon footprint than the airline industry. Greenhouse gases are emitted by garbage in landfills as well as by the processes used to get rid of waste, such as garbage trucks and burning trash.

So, what exactly is Raccoon Eyes?

Our business was developed around a trash can extension. The extension includes a camera that photographs food waste. We developed software to identify the type of food waste and its weight. A computer screen prompts students to explain why they are throwing away food. The paired data and feedback are shared with dining hall managers and chefs to help them modify portion sizes and food preparation.

What business problem does the company solve for dining hall managers?

Food waste is costly. Think of the ingredients, the labor to make the food, and the process to get rid of trash. Raccoon Eyes provides dining managers with crucial data on the type and quantity of food waste paired with student feedback. This information can help managers make data-driven decisions to reduce waste and improve the dining experience for students. Managers are eager to improve the student experience. If students enjoy the food in the dining halls, that has a positive impact on meal plan retention.

How did the three of you meet, and what steps did you take to develop this business?

We met in our Georgia Tech classes. We came together to build Raccoon Eyes for the Fall 2022 CREATE-X Capstone (a special section of the senior design course dedicated to entrepreneurial projects). Through our talks with chefs, managers, and directors in the dining halls, we discovered there was a real need for our product and the data it collects. We therefore decided to continue our development.

What has it been like to work with Georgia Tech Dining Services as a partner?

Working with Georgia Tech Dining Services has been an amazing experience. We continuously communicate with the dining staff, chefs, managers, sustainability team, and directors to understand the problem. They have helped us see what a perfect solution would look like to them. Maurice Gibson, the assistant director of dining operations at West Village Dining Commons, was especially helpful. He gave us permission to use our device in the cafeteria and offered feedback that helped us make improvements to the extension.

How have students responded to the extension?

Overall, the feedback is positive. Students realize that their feedback can lead to a better dining experience.

What is one of the biggest obstacles your team has had to overcome?

We overcame the obstacle of developing the technology to identify the food on the student’s plate and determine its weight from a video or image. While the rest of the food waste industry needs a scale to measure the food, we can do it with a camera.

What are some of Raccoon Eyes’ big achievements thus far?

Our team won first place at the Fall 2022 Senior Capstone Expo, was a finalist for the 2023 Georgia Tech InVenture Prize, and won second place at the 2023 Sustainable-X Showcase.

What Georgia Tech resources have helped you develop the startup?

CREATE-X mentorship, the Hive Makerspace, and courses in which we learned about the technologies used in our product have all been tremendously helpful. Winning second place in the Sustainable-X Showcase has opened up even more opportunities. For instance, Sustainable-X has been helping us develop a contract we can use for our work with Georgia Tech Dining Services. Also, we are working with a partner affiliated with CREATE-X and Sustainable-X to receive additional investments for our company.

What are the next steps for your company?

Our goal is to be at the forefront of the movement to reduce food waste by transforming each cafeteria and dining hall across the nation. By providing dining managers with data on the type and quantity of food waste, the company aims to reduce food waste, improve the dining experience for students, and reduce the environmental impact of wasted food. Thanks to our win in the Sustainable-X Showcase, we have been invited to continue our business journey this summer during the CREATE-X Startup Launch. We want to expand our work at Georgia Tech – to go from having one unit to six working in the dining halls. We also want to run pilot programs for colleges and universities near Atlanta.

Would you like to thank anyone in particular?

Abhipsa Ujwal (CmpE ’22) and Phuc Truong (EE ’22) contributed to the senior design project and InVenture Prize competition. We would like to thank our friends, family, and mentors who have allowed us to succeed and grow not only as a company but also as people.

As told to Jennifer Holley Lux.

Photo: Bruce Tan, Ivan Zou, and Nathanael Koh. (Photo credit for left image: Joya Chapman.)

May. 15, 2023

James X. Zhong Manis, who is pursuing his Ph.D. in Physical Chemistry at Georgia Tech, will get a chance to conduct his thesis research at a Department of Energy national laboratory at Stanford University, thanks to his selection to the DOE Office of Science Graduate Student Research (SCGSR) program.

The goal of the SCGSR program is to prepare graduate students for science, technology, engineering, or mathematics (STEM) careers critically important to the DOE Office of Science mission. The agency provides graduate thesis research opportunities through extended residency at DOE national laboratories.

“I am so excited and feel extremely lucky to have this opportunity to continue my research with DOE help,” Manis said. “I am thankful for everyone’s help to get me where I am, especially my principal investigator Thomas Orlando, our lab senior research scientist Brant Jones, my collaborating DOE scientist Thorsten Weber, and also everyone else in my research group. I am so thrilled to be working with world-class scientists on cutting-edge equipment.”

Manis is one of 87 awardees from 58 different universities who will conduct research at 16 DOE national laboratories. The research projects proposed by the new awardees are aligned with the priority mission areas of the DOE Office of Science that have a high need for workforce development.

“The SCGSR program provides a way for graduate students to enrich their scientific research by engaging with researchers at DOE National Labs, learning from world class scientists, and using state-of-the-art equipment and facilities,” notes Asmeret Asefaw Berhe, Director of the DOE Office of Science. “In addition, they get valuable opportunities to network and observe firsthand what it’s like to have a scientific career. I can’t wait to see what these young researchers do in the future. I know they will meet upcoming scientific challenges in new and innovative ways.”

Manis, who also earned a Bachelor of Science degree from the Wallace H. Coulter Department of Biomedical Engineering in 2018, will join the DOE’s Gas Phase Chemical Physics program at the Stanford Linear Accelerator Center (SLAC) at Stanford University. The Center supports research on fundamental gas-phase chemical processes important in energy applications.

SCGSR awardees work on research projects of significant importance to the Office of Science mission that address critical energy, environmental, and nuclear challenges at national and international scales. Projects in this new cohort span eight different DOE Office of Science research programs.

Manis’ project falls into the Basic Energy Sciences category. “I am interested in understanding the low energy electron interaction with biomolecules, which is a potential way of causing DNA damage,” he said. “The research I will conduct at the SLAC National Accelerator Laboratory is to first help in commissioning the DREAM (Dynamic REAction Microscope) end station in the TMO (time-resolved atomic, molecular and optical science) instrument hub.

“I have never visited SLAC before, but I am extremely excited to work there,” Manis added. “It’s going to be a change of pace collaborating with another group of scientists, and I can’t wait to start.”

News Contact

Writer: Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209
