Jul. 22, 2025

In June, the Strategic Energy Institute (SEI) hosted the Energy and National Security Summer Cohort Meeting that convened seed grant awardees from the Energy and National Security Initiative. A partnership between SEI and the Georgia Tech Research Institute (GTRI), the initiative provides research support through a seed grant program that launched last summer.

“As national security needs rapidly evolve, Georgia Tech is leveraging its research ecosystem and seed funding programs to accelerate the development of transformational technologies and strategies that strengthen national resilience,” said Christine Conwell, interim executive director of SEI. “We designed this seed grant program to tackle pressing national security priorities of today, such as threats to the grid, nuclear security, supply chain resilience, and renewable integration.”

The event began with an introduction from John Tien, SEI distinguished external fellow, professor of the practice, and former deputy secretary of the Department of Homeland Security, who addressed the evolving and multifaceted challenges facing energy, national security, and policy today. Tien’s talk emphasized the importance of early, strategic research investments in driving sustainable progress and long-term solutions.

The seed grant awardees then presented the initial progress of their research projects through lightning talks and a Q&A session. The research projects included:

- Energy Infrastructure Security and Risk Assessment Through Interactive Wargaming.

- Evaluating Energy Storage Materials, Supplies, and Systems in the Context of National Security Requirements.

- Nanostructured Sensors for Monitoring of Nuclear Fuel Cycle.

- Resilient Critical Infrastructures via Provable Secure Control Algorithms.

- Robust Energy Systems Planning by Way of Novel Systems Engineering (RESPoNSE).

- SPARC: Severe-Weather Predictive Analytics and Resilient Communication.

- The Strategic Mineral Economy: Challenges and Opportunities for Critical Resources.

“That critical intersection between energy and national security is where both risk and opportunity lie. To mitigate those risks and take advantage of the opportunities, our project teams have developed research topic areas that align with the U.S. Department of Energy's nine pillars for American energy dominance and security, as well as ongoing U.S. Department of Defense priorities,” said Tien.

The meeting showcased Georgia Tech’s collaborative and forward-looking research at the intersection of energy and national security, aimed at shaping a more secure and resilient energy future.

Written by: Katie Strickland & Priya Devarajan

News Contact

Priya Devarajan, SEI Communications Program Manager

Jul. 18, 2025

As more satellites launch into space, the satellite industry has sounded the alarm about the danger of collisions in low Earth orbit (LEO). What is less understood is what might happen as more missions head to a more targeted destination: the moon.

According to The Planetary Society, more than 30 missions are slated to launch to the moon between 2024 and 2030, backed by the U.S., China, Japan, India, and various private corporations. That compares to over 40 missions to the moon between 1959 and 1979 and a scant three missions between 1980 and 2000.

A multidisciplinary team at Georgia Tech has found that while collision probabilities in orbits around the moon are very low compared to Earth orbit, spacecraft in lunar orbit will likely need to conduct multiple costly collision avoidance maneuvers each year. The Journal of Spacecraft and Rockets published the Georgia Tech collision-avoidance study in March.

“The number of close approaches in lunar orbit is higher than some might expect, given that there are only tens of satellites, rather than the thousands in low Earth orbit,” says paper co-author Mariel Borowitz, associate professor in the Sam Nunn School of International Affairs in the Ivan Allen College of Liberal Arts.

Borowitz and other researchers attribute these risky approaches in part to spacecraft often choosing a limited number of favorable orbits and the difficulty of monitoring the exact location of spacecraft that are more than 200,000 miles away.

“There is significant uncertainty about the exact location of objects around the moon. This, combined with the high cost associated with lunar missions, means that operators often undertake maneuvers even when the probability is very low — up to one in 10 million,” Borowitz explains.

The Georgia Tech research is the first published study of short- and long-term collision risks in cislunar orbits. The researchers used a series of Monte Carlo simulations, which estimate the probability of different outcomes in processes that are hard to predict because they involve random variables.
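To make the Monte Carlo idea concrete, here is a minimal Python sketch that estimates the chance that two objects pass within a combined hard-body radius when their relative position is uncertain. It assumes a Gaussian position error with the same spread on every axis; the function name, parameters, and numbers are hypothetical, and this is not the model used in the study.

```python
import math
import random

def collision_probability(mean_miss_km, sigma_km, hard_body_radius_km,
                          n_samples=100_000, seed=0):
    """Monte Carlo estimate of the chance that two objects pass within
    `hard_body_radius_km` of each other at closest approach.

    Hypothetical model: the relative position at closest approach is
    Gaussian, centered on the predicted miss vector `mean_miss_km`
    (x, y, z in km), with independent standard deviation `sigma_km`
    on each axis.
    """
    rng = random.Random(seed)  # seeded so runs are repeatable
    hits = 0
    for _ in range(n_samples):
        # Draw one relative position consistent with the tracking uncertainty.
        dx, dy, dz = (rng.gauss(m, sigma_km) for m in mean_miss_km)
        if math.sqrt(dx * dx + dy * dy + dz * dz) < hard_body_radius_km:
            hits += 1  # this sample counts as a collision
    return hits / n_samples

# Hypothetical example: predicted miss of 500 m, 200 m uncertainty per axis,
# 100 m combined hard-body radius.
p = collision_probability((0.5, 0.0, 0.0), sigma_km=0.2, hard_body_radius_km=0.1)
print(f"estimated collision probability: {p:.1e}")
```

Because the expected number of hits is roughly n_samples times the probability, plain sampling like this needs tens of millions of draws before an event as rare as one in 10 million shows up even once, which is why analytic approximations or variance-reduction techniques are often preferred for very small probabilities.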

“Our analysis suggests that satellite operators must perform up to four maneuvers annually for each satellite for a fleet of 50 satellites in low lunar orbit (LLO),” said one of the study’s authors, Brian Gunter, associate professor in the Daniel Guggenheim School of Aerospace Engineering.

He noted that with only 10 satellites in LLO, a satellite might still need a yearly maneuver. This is consistent with what current cislunar operators have reported.

Favored Orbits

Most close encounters are expected to occur near the moon’s equator, where the orbital planes of the commonly used “frozen” and low lunar orbits, which are preferred by many operators, intersect. Congestion could also develop at the Lagrangian points, regions where the gravity of Earth and the moon and the orbital motion of the Earth-moon system balance out. Periodic orbits around these points include halo and Lyapunov orbits.

“Lagrangian points are an interesting place to put a satellite because it can maintain its orbit for long periods with very little maneuvering and thrusting. Frozen orbits, too. Anywhere outside these special areas, you have to spend a lot of fuel to maintain an orbit,” Gunter said.
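The “balance” at a Lagrangian point can be checked numerically. In the idealized circular restricted three-body model, the points on the Earth-moon line are where Earth’s gravity, the moon’s gravity, and the centrifugal effect of the frame rotating with the moon cancel. The Python sketch below finds L1 and L2 by bisection, assuming a mass ratio of about 0.01215 and a mean Earth-moon distance of about 384,400 km; it ignores the sun and the eccentricity of the moon’s orbit.

```python
MU = 0.01215             # assumed moon / (Earth + moon) mass ratio
EARTH_MOON_KM = 384_400  # assumed mean Earth-moon distance

def net_force_x(x, mu=MU):
    """Net acceleration along the Earth-moon line in the rotating frame
    (nondimensional units: separation 1, angular rate 1).
    Earth sits at x = -mu and the moon at x = 1 - mu."""
    d_earth = x + mu          # signed offset from Earth
    d_moon = x - (1 - mu)     # signed offset from the moon
    return (x                                         # centrifugal term
            - (1 - mu) * d_earth / abs(d_earth) ** 3  # Earth's pull
            - mu * d_moon / abs(d_moon) ** 3)         # moon's pull

def bisect(f, lo, hi, iterations=200):
    """Find a root of f in [lo, hi], assuming f(lo) < 0 < f(hi)."""
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

eps = 1e-9
l1 = bisect(net_force_x, -MU + eps, 1 - MU - eps)  # between Earth and moon
l2 = bisect(net_force_x, 1 - MU + eps, 2.0)        # beyond the moon

for name, x in (("L1", l1), ("L2", l2)):
    km_from_moon = abs(x - (1 - MU)) * EARTH_MOON_KM
    print(f"{name}: about {km_from_moon:,.0f} km from the moon")
```

With these assumptions, L1 lands roughly 58,000 km on the Earth side of the moon and L2 roughly 64,500 km beyond it; halo and Lyapunov orbits circle points like these.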

Gunter and other researchers worry that if operators do not coordinate how they plan lunar missions, the chances of collision in these popular orbits will increase.

“The close approaches were much more common than I would have intuitively anticipated,” says lead study author Stef Crum.

The 2024 graduate of Georgia Tech’s aerospace engineering doctoral program notes that, considering the small number of satellites in lunar orbit, the need for multiple maneuvers was “really surprising.”

Crum, who in 2024 co-founded Reditus Space, a startup that provides reusable orbital re-entry services, adds that the cislunar environment is so challenging because “it’s incredibly vast.”

His research also examines ways to improve object monitoring in cislunar space. Maintaining continuous custody of these objects is difficult because a target’s position must be monitored over the entire duration of its trajectory.

“That wasn’t feasible for translunar orbits, given the vast volume of cislunar orbit, which stretches multiple millions of kilometers in three dimensions,” he says.

Rather than tracking a satellite continuously (known as maintaining continuous custody), Crum estimated its orbit from observed data and constrained the satellite’s presumed location and direction of travel, which greatly simplified the monitoring problem.

“You no longer need thousands of satellites or a set of enormous satellites to cover all potential trajectories,” he explains. “Instead, one or a few satellites are required, and operators can lose custody for a time as long as the connection is reacquired later.”
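The general idea of estimating a track from a few observations, losing sight of the target, and then knowing where to look again can be shown with a deliberately simplified Python sketch. It assumes straight-line, constant-velocity motion and made-up measurements, whereas real cislunar orbit determination has to model the gravity of Earth and the moon; `fit_line`, `estimate_track`, and the search radius are hypothetical names for illustration, not Crum’s method.

```python
import math

def fit_line(ts, xs):
    """Least-squares fit of x(t) = x0 + v * t; returns (x0, v)."""
    n = len(ts)
    t_mean = sum(ts) / n
    x_mean = sum(xs) / n
    s_tt = sum((t - t_mean) ** 2 for t in ts)
    s_tx = sum((t - t_mean) * (x - x_mean) for t, x in zip(ts, xs))
    v = s_tx / s_tt
    return x_mean - v * t_mean, v

def estimate_track(times, positions):
    """Fit constant-velocity motion on each axis of 3-D positions (km)."""
    fits = [fit_line(times, axis) for axis in zip(*positions)]
    start = tuple(x0 for x0, _ in fits)
    velocity = tuple(v for _, v in fits)
    return start, velocity

def predict(start, velocity, t):
    """Propagate the fitted track to time t."""
    return tuple(p + v * t for p, v in zip(start, velocity))

def reacquired(predicted, observed, search_radius_km):
    """True if the target reappears inside the search region."""
    return math.dist(predicted, observed) <= search_radius_km

# Hypothetical target: true straight-line motion plus small, fixed errors.
def truth(t):
    return (1000 + 2.0 * t, 500 - 1.0 * t, 0.5 * t)

times = [0, 1, 2, 3, 4]                  # a short burst of observations
noise = [0.02, -0.01, 0.0, 0.01, -0.02]  # km, applied to every axis
observations = [tuple(c + e for c in truth(t)) for t, e in zip(times, noise)]

start, velocity = estimate_track(times, observations)
guess = predict(start, velocity, 50)     # custody lost until t = 50
print(guess, reacquired(guess, truth(50), search_radius_km=2.0))
```

The prediction error grows the longer the target goes unobserved, so in practice the search region would be derived from the estimate’s uncertainty rather than fixed in advance.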

Since the team started its study, there has been considerable interest in the moon and cislunar activity: both NASA and the China National Space Administration are planning to send humans to the moon. In the last two years, India, Japan, the U.S., China, Russia, and four private companies have attempted missions to the moon.

Why the Moon

Spacefaring nations’ intense interest in exploring the lunar surface comes as no surprise given that the moon offers a variety of resources, including solar power, water, oxygen, and metals like iron, titanium, and uranium. It also contains helium-3, a potential fuel for nuclear fusion, and rare earth metals vital for modern technology. With the recent discovery of water ice, the moon could also become a plentiful source of rocket fuel: the ice can be split into hydrogen and oxygen, which, once liquefied, could launch deep space missions to destinations like Mars. In February, Georgia Tech announced that researchers have developed new algorithms to help Intuitive Machines’ lunar lander find water ice on the moon.

Commercial space companies like Axiom Space and Redwire Space, as well as space agencies, are actively building lunar infrastructure, from satellite constellations to orbital platforms to support communication, navigation, scientific research, and eventually space tourism.

A key project involves the Lunar Gateway, a joint venture of NASA and international space agencies like ESA, JAXA, and CSA, as well as commercial partners. Humanity’s first space station around the moon will serve as a central hub for human exploration of the moon and is considered a stepping stone for future deep space missions.

Getting Ahead of a Gold Rush to the Moon

All this activity underscores the urgency of getting ahead of potential crowding issues, something that did not happen in LEO, where close approaches between objects, or conjunctions, are frequent. LEO, which extends from 100 to 1,200 miles above Earth’s surface, hosts more than 14,000 satellites and 120 million pieces of debris from launches, collisions, and wear and tear, Reuters reports.

“Using the near-Earth environment as an example, the space object population has gone from approximately 6,000 active satellites in the early 2020s to an anticipated 60,000 satellites in the coming decade if the projected number of large satellite constellations currently in the works gets deployed. That poses many challenges in terms of how we can manage that sustainably,” observed Gunter. “If something similar happens in the lunar environment, say if Artemis (NASA’s program to establish the first long-term presence on the moon) is successful and a lunar base is established, and there is discovery of volatiles or water deposits, it could initiate a kind of gold rush effect that might accelerate the number of actors in cislunar space.”

For this reason, Borowitz argues that coordination should begin now, either in planning the orbits of future missions or in sharing information about the location of objects operating in lunar orbit. She pointed out that spacecraft outfitted for moon missions are expensive, making any collision highly costly. Debris from such a collision would also spread unpredictably, which could endanger other objects.

Gunter agreed, noting, “If we’re not careful, we could be putting a lot of things in this same path. We must ensure we build out the cislunar orbital environment in a smart way, where we’re not intentionally putting spacecraft in the same orbital spaces. If we do that, everyone should be able to get what they want and not be in each other’s way.”

Borowitz says some coordination efforts are underway through the UN Committee on the Peaceful Uses of Outer Space, which has created an action team on lunar activities. However, international diplomacy is a time-consuming process, and it can be a challenge to keep pace with advancements in technology.

She contends that the Georgia Tech study could provide baseline data that “could be helpful for international coordination efforts, helping to ensure that countries better understand potential future risks.”

Gunter and Borowitz say that follow-on research could examine the Lunar Gateway orbit and other special orbits to see how crowded they are likely to get, and then use end-to-end simulations of these orbits to determine the most effective way to build them out while avoiding collision risks. Ultimately, they intend to develop guidelines to help ensure that future space actors headed to the moon can operate safely.

Jul. 16, 2025

The National Science Foundation (NSF) has awarded Georgia Tech and its partners $20 million to build a powerful new supercomputer that will use artificial intelligence (AI) to accelerate scientific breakthroughs.

Called Nexus, the system will be one of the most advanced AI-focused research tools in the U.S. Nexus will help scientists tackle urgent challenges such as developing new medicines, advancing clean energy, understanding how the brain works, and driving manufacturing innovations.

“Georgia Tech is proud to be one of the nation’s leading sources of the AI talent and technologies that are powering a revolution in our economy,” said Ángel Cabrera, president of Georgia Tech. “It’s fitting we’ve been selected to host this new supercomputer, which will support a new wave of AI-centered innovation across the nation. We’re grateful to the NSF, and we are excited to get to work.”

Designed from the ground up for AI, Nexus will give researchers across the country access to advanced computing tools through a simple, user-friendly interface. It will support work in many fields, including climate science, health, aerospace, and robotics.

“The Nexus system's novel approach combining support for persistent scientific services with more traditional high-performance computing will enable new science and AI workflows that will accelerate the time to scientific discovery,” said Katie Antypas, director of the National Science Foundation’s Office of Advanced Cyberinfrastructure. “We look forward to adding Nexus to NSF's portfolio of advanced computing capabilities for the research community.”

Nexus Supercomputer — In Simple Terms

- Built for the future of science: Nexus is designed to power the most demanding AI research — from curing diseases, to understanding how the brain works, to engineering quantum materials.

- Blazing fast: Nexus can crank out over 400 quadrillion operations per second — the equivalent of everyone in the world continuously performing 50 million calculations every second.

- Massive brain plus memory: Nexus combines the power of AI and high-performance computing with 330 trillion bytes of memory to handle complex problems and giant datasets.

- Storage: Nexus will feature 10 quadrillion bytes of flash storage, equivalent to about 10 billion reams of paper. Stacked, that’s a column reaching 500,000 km high — enough to stretch from Earth to the moon and a third of the way back.

- Supercharged connections: Nexus will have lightning-fast connections to move data almost instantaneously, so researchers do not waste time waiting.

- Open to U.S. researchers: Scientists from any U.S. institution can apply to use Nexus.
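The comparisons in the list above can be checked with a few lines of arithmetic. The Python sketch below uses the article’s figures plus three assumptions that are not stated in the source: a world population of about 8 billion, a ream of 500 sheets about 5 cm thick, and a mean Earth-moon distance of about 384,400 km.

```python
# Figures from the article.
OPS_PER_SECOND = 400e15   # "over 400 quadrillion operations per second"
STORAGE_BYTES = 10e15     # "10 quadrillion bytes of flash storage"
REAMS = 10e9              # "about 10 billion reams of paper"

# Assumptions (not from the article).
WORLD_POPULATION = 8e9
SHEETS_PER_REAM = 500
REAM_THICKNESS_M = 0.05
EARTH_MOON_KM = 384_400

per_person = OPS_PER_SECOND / WORLD_POPULATION             # calculations per person per second
bytes_per_sheet = STORAGE_BYTES / (REAMS * SHEETS_PER_REAM)  # implied data per printed sheet
stack_km = REAMS * REAM_THICKNESS_M / 1000                 # height of the stacked reams
fraction_back = (stack_km - EARTH_MOON_KM) / EARTH_MOON_KM  # share of the return trip

print(f"{per_person:,.0f} calculations per person per second")
print(f"{bytes_per_sheet:,.0f} bytes per sheet")
print(f"stack: {stack_km:,.0f} km, {fraction_back:.0%} of the way back")
```

Under these assumptions the numbers line up: about 50 million calculations per person per second, about 2,000 bytes per sheet, and a 500,000 km stack that reaches the moon and roughly 30% of the way back.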

Why Now?

AI is rapidly changing how science is done. Researchers use AI to analyze massive datasets, model complex systems, and test ideas faster than ever before. But these tools require powerful computing resources that — until now — have been inaccessible to many institutions.

This is where Nexus comes in. It will make state-of-the-art AI infrastructure available to scientists all across the country, not just those at top tech hubs.

“This supercomputer will help level the playing field,” said Suresh Marru, principal investigator of the Nexus project and director of Georgia Tech’s new Center for AI in Science and Engineering (ARTISAN). “It’s designed to make powerful AI tools easier to use and available to more researchers in more places.”

Srinivas Aluru, Regents’ Professor and senior associate dean in the College of Computing, said, “With Nexus, Georgia Tech joins the league of academic supercomputing centers. This is the culmination of years of planning, including building the state-of-the-art CODA data center and Nexus’ precursor supercomputer project, HIVE.”

Like Nexus, HIVE was supported by NSF funding. Both Nexus and HIVE are supported by a partnership between Georgia Tech’s research and information technology units.

A National Collaboration

Georgia Tech is building Nexus in partnership with the National Center for Supercomputing Applications at the University of Illinois Urbana-Champaign, which runs several of the country’s top academic supercomputers. The two institutions will link their systems through a new high-speed network, creating a national research infrastructure.

“Nexus is more than a supercomputer — it’s a symbol of what’s possible when leading institutions work together to advance science,” said Charles Isbell, chancellor of the University of Illinois and former dean of Georgia Tech’s College of Computing. “I'm proud that my two academic homes have partnered on this project that will move science, and society, forward.”

What’s Next

Georgia Tech will begin building Nexus this year, with its expected completion in spring 2026. Once Nexus is finished, researchers can apply for access through an NSF review process. Georgia Tech will manage the system, provide support, and reserve up to 10% of its capacity for its own campus research.

“This is a big step for Georgia Tech and for the scientific community,” said Vivek Sarkar, the John P. Imlay Dean of Computing. “Nexus will help researchers make faster progress on today’s toughest problems — and open the door to discoveries we haven’t even imagined yet.”

News Contact

Siobhan Rodriguez

Senior Media Relations Representative

Institute Communications

Jul. 15, 2025

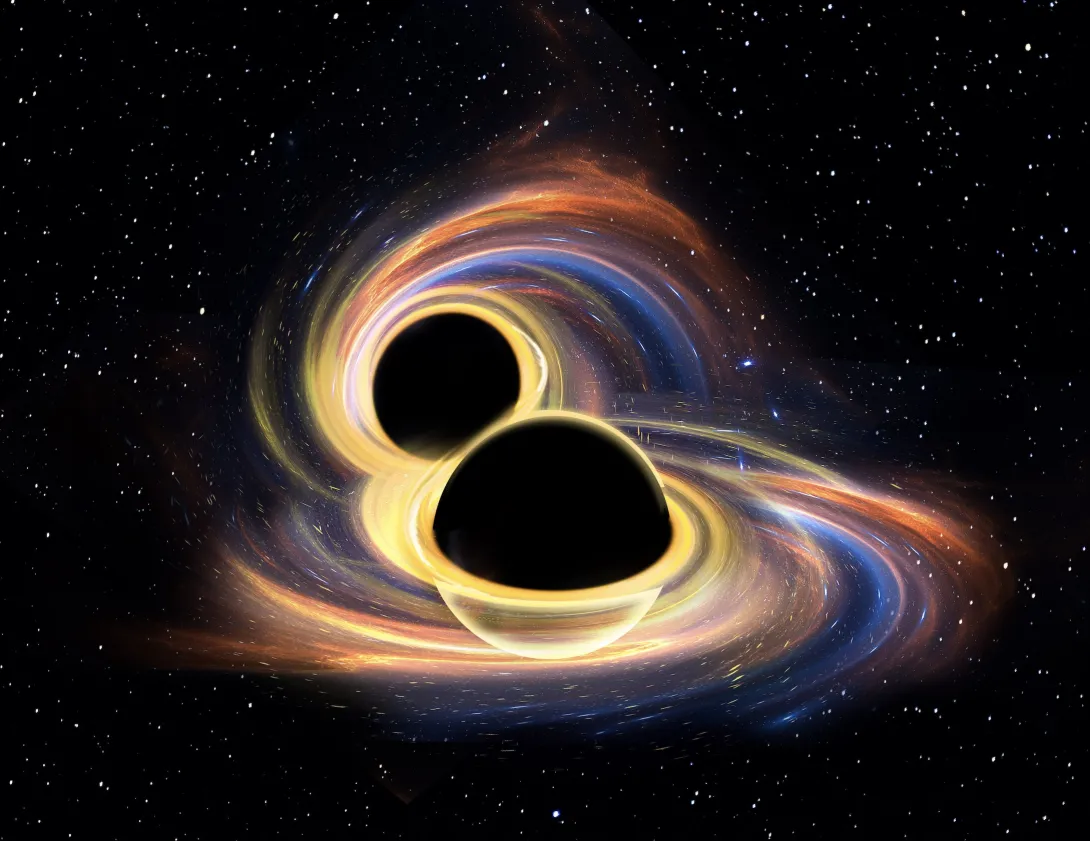

The LIGO-Virgo-KAGRA (LVK) collaboration, which includes the Laser Interferometer Gravitational-Wave Observatory (LIGO), has detected an extremely unusual binary black hole merger, a phenomenon that occurs when two black holes are pulled into each other's orbit and combine. Announced yesterday in a California Institute of Technology press release, the merger, GW231123, is the most massive binary black hole merger ever detected with gravitational waves.

Before merging, both black holes were spinning exceptionally fast, and their masses fell into a range that should be very rare — or impossible.

“Most models don't predict black holes this big can be made by supernovas, and our data indicates that they were spinning at a rate close to the limit of what’s theoretically possible,” says Margaret Millhouse, a research scientist in the School of Physics who played a key role in the research. “Where could they have come from? It raises interesting questions.”

The black hole formed by a merger inherits characteristics from both of the original black holes, she adds. “As a result, this is not only the most massive binary black hole ever seen but also the fastest-spinning binary black hole confidently detected with gravitational waves.”

“GW231123 is a record-breaking event,” says School of Physics Professor Laura Cadonati, who has been a member of the LIGO Scientific Collaboration since 2002. “LIGO has been observing the cosmos for 10 years now. This discovery underscores that there is still so much that this instrument can help us learn.”

A Cosmic View

The findings challenge current theories on how smaller black holes form, says School of Physics Assistant Professor and LIGO collaborator Surabhi Sachdev. Smaller black holes are the result of supernovae: dying and collapsing stars. During that collapse, explosions can tear apart or eject part of the star’s mass — limiting the size of the black hole that forms.

“Black holes from supernovae can weigh up to about 60 times the mass of our Sun,” she says. “The black holes in this merger were likely the mass of hundreds of suns.”

Because of its size, GW231123 also allowed the team to study the merger in unprecedented detail. “LIGO has observed scores of black hole mergers,” says Cadonati. “Of these, GW231123 has provided us with the clearest view of the ‘grand finale’ of a merger thus far. This adds a new clue to solve the puzzle that are black holes, including their origins and properties.”

“While we saw that our expectations matched the data, the extreme nature of this event pushed our models to their limits,” Millhouse adds. “A massive, highly spinning system like this will be of interest to researchers who study how binary black holes form.”

Decoding a Split-Second Signal

Millhouse and School of Physics Postdoctoral Fellow Prathamesh Joshi used Einstein’s equations of general relativity to confirm LIGO’s detection.

To find black holes, LIGO measures gravitational waves: ripples in spacetime created when massive objects such as black holes collide. A black hole collision leaves a characteristic signature in these waves, which researchers search for to identify mergers.

“In this case, the signal lasted for just one-tenth of a second, but it was very clear,” says Joshi. "Previously, we designed a special study to detect these interesting signals, which accounted for all the unusual properties of such massive systems — and it paid off!”

“To ensure it wasn’t noise, the Georgia Tech team first reconstructed the signal in a model-agnostic way,” Millhouse adds. “We then compared those reconstructions to a model that uses Einstein's equations of general relativity, and both reconstructions looked very similar, which helped confirm that this highly unusual phenomenon was a genuine detection.”
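Comparing a model-agnostic reconstruction with a general-relativity waveform comes down to asking how similar two signals are. A common measure is the normalized overlap, or match, where 1 means identical shape. The Python sketch below computes it for two toy sine-Gaussian bursts lasting about a tenth of a second; the waveforms, sample rate, and parameters are hypothetical, and real LIGO analyses also weight by the detectors’ noise spectrum and maximize over time and phase offsets.

```python
import math

def overlap(a, b):
    """Normalized inner product (match) of two equally sampled waveforms,
    assuming white noise: 1 means identical shape, 0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
    return dot / norm

def sine_gaussian(t, f0, tau):
    """Toy burst waveform: a sinusoid at f0 Hz under a Gaussian envelope."""
    return math.exp(-(t / tau) ** 2) * math.cos(2 * math.pi * f0 * t)

fs = 4096                                               # samples per second (assumed)
times = [(i - fs // 20) / fs for i in range(fs // 10)]  # ~0.1 s window centered on 0
template = [sine_gaussian(t, 60, 0.020) for t in times]        # stands in for the GR model
reconstruction = [sine_gaussian(t, 62, 0.021) for t in times]  # hypothetical reconstruction

print(f"match: {overlap(template, reconstruction):.3f}")
```

A match close to 1 means the two signals agree in shape, which is the kind of agreement between independent reconstructions that helps rule out a noise artifact.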

Sachdev says that seeing the signal at both LIGO observatories, located in Hanford, Washington, and Livingston, Louisiana, was also critical. “These short signals are very hard to detect, and this signal is so unlike any of the other binary black holes that we've seen before,” she says. “Without both detectors, we would have missed it.”

A Decade of Discovery

While the team has yet to determine how the original black holes formed, one theory is that they may have resulted from mergers themselves. “This could have been a chain of mergers,” Sachdev explains. “This tells us that they could have existed in a very dense environment like a nuclear star cluster or an active galactic nucleus.” Their spins provide another clue, since rapid spin is a characteristic usually seen in black holes that formed from earlier mergers.

The team adds that GW231123 could provide clues on how larger black holes are formed — including the mysterious supermassive black holes at the center of galaxies.

“Gravitational wave science is almost a decade old, and we're still making fundamental discoveries,” says Millhouse. “It’s exciting that LIGO is continuing to detect new phenomena, and this is at the edge of what we've seen thus far. There's still so much we can learn.”

The team expects to update their catalogue of black holes in August 2025, which will provide another window into how this exceptionally heavy black hole might fit into the universe, and what we can continue to learn from it.

Funding: The LIGO Laboratory is supported by the U.S. National Science Foundation and operated jointly by Caltech and MIT.

Jul. 11, 2025

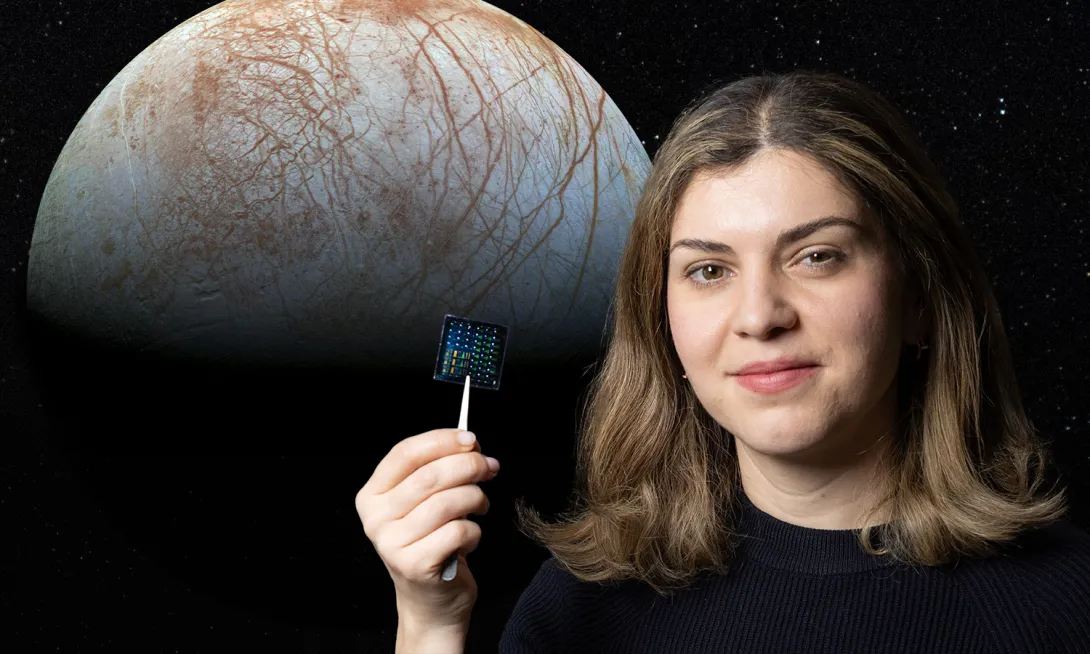

Right now, about 70 million miles away, a Ramblin’ Wreck from Georgia Tech streaks through the cosmos. It’s a briefcase-sized spacecraft called Lunar Flashlight that was assembled in a Georgia Tech Research Institute (GTRI) cleanroom in 2021, then launched aboard a SpaceX rocket in 2022.

The plan was to send Lunar Flashlight to the moon, where the spacecraft would shoot lasers at its south pole in a search for frozen water. Mission control for the flight was on Georgia Tech’s campus, where students in the Daniel Guggenheim School of Aerospace Engineering (AE) sat in the figurative driver’s seat. They worked for several months in 2023 to coax the craft toward its intended orbit in coordination with NASA’s Jet Propulsion Laboratory (JPL).

A faulty propulsion system kept the CubeSat from reaching its goal. Disappointing, to be sure, but it opened a new series of opportunities for the student controllers. When it became clear that Lunar Flashlight would not reach the moon and would instead settle into orbit around the sun, JPL turned over ownership to Georgia Tech. Georgia Tech is now the only higher education institution that has controlled an interplanetary spacecraft.

Lunar Flashlight’s initial orbit, planned destination, and current whereabouts mirror much of the College of Engineering’s research in space technology. Some faculty are focused on projects in low Earth orbit (LEO). Others have an eye on the moon. A third group is looking well beyond our small area of the solar system.

No matter the distance, though, each of these Georgia Tech engineers is working toward a new era of exploration and scientific discovery.

News Contact

Jason Maderer

College of Engineering

Jul. 09, 2025

The future of computing is lit, literally.

As microchips grow more complex and data demands intensify, traditional electrical connections are hitting their limits. Speed is king in today’s digital systems, but a major bottleneck remains in how quickly information can move between components like processors and memory.

This lag is one of the most pressing challenges in advanced hardware design. While processors continue to accelerate, the links that connect them can't keep pace.

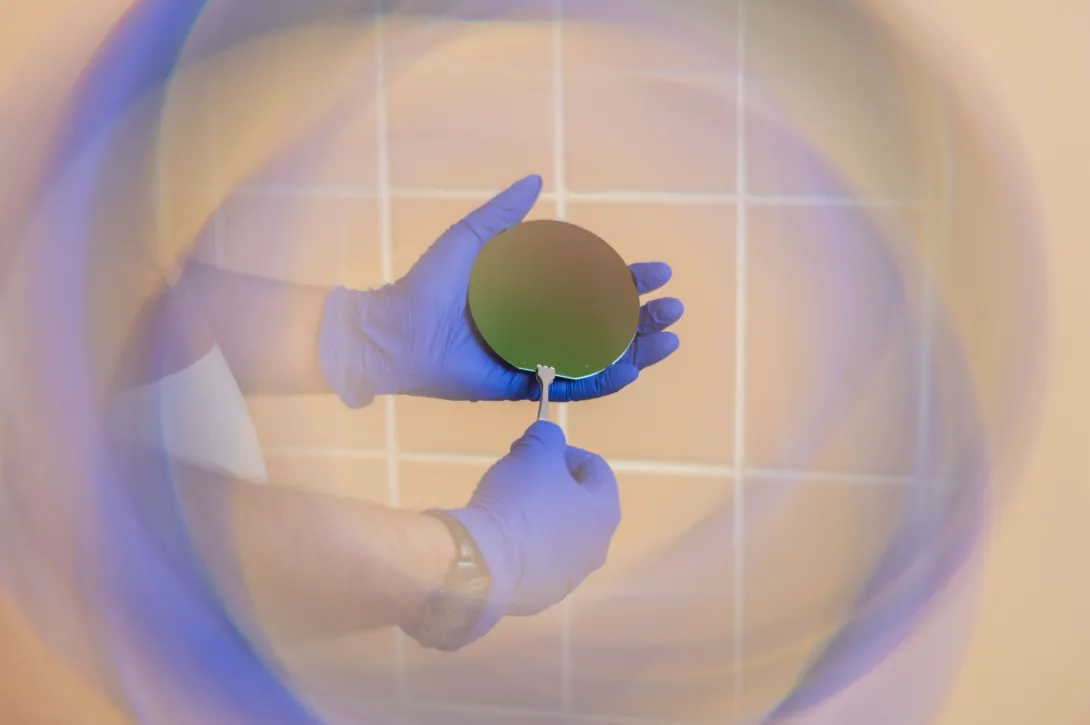

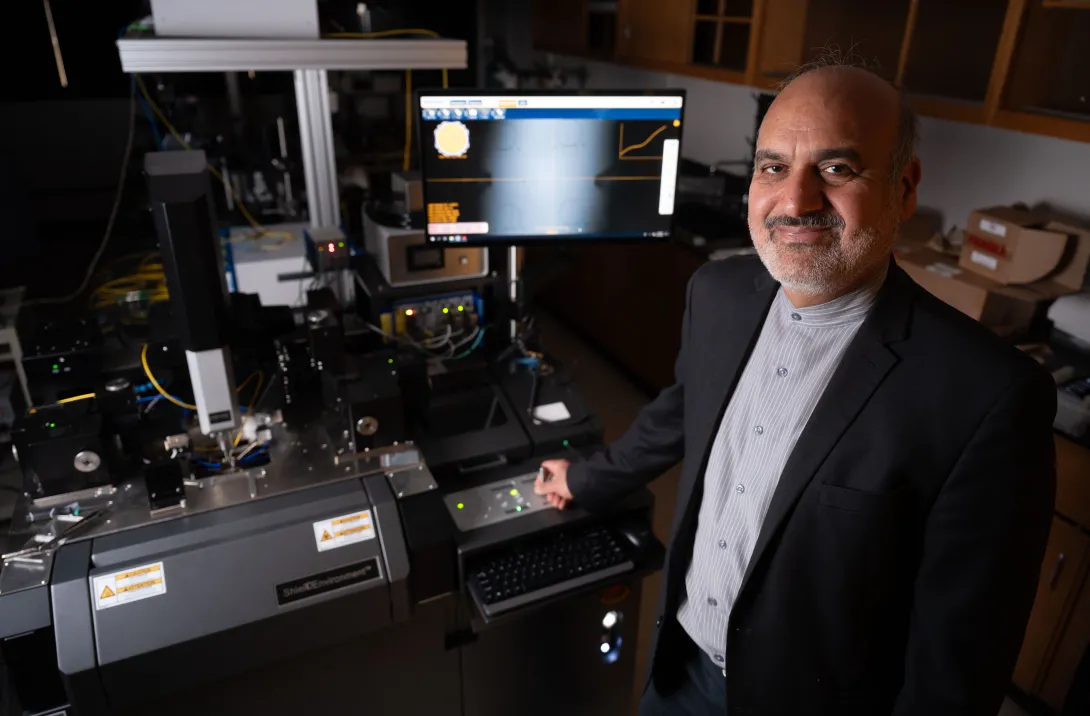

Georgia Tech researcher Ali Adibi is addressing this problem with $5.3 million in funding over three years from the Defense Advanced Research Projects Agency (DARPA). His project is part of DARPA’s Heterogeneous Adaptively Produced Photonic Interfaces (HAPPI) program, which aims to dramatically boost the speed and density of data transmission within microsystems by using light instead of electricity.

“Optical solutions are highly advantageous for providing the required data rates and power consumptions, and our project is formed to address the most important challenges for achieving the system-level performance,” said Adibi, a professor and Joseph M. Pettit Chair in the School of Electrical and Computer Engineering.

The project brings together a multidisciplinary team, including collaborators from the Massachusetts Institute of Technology, University of Florida, NY CREATES, and NHanced Semiconductors, Inc.

Going Vertical

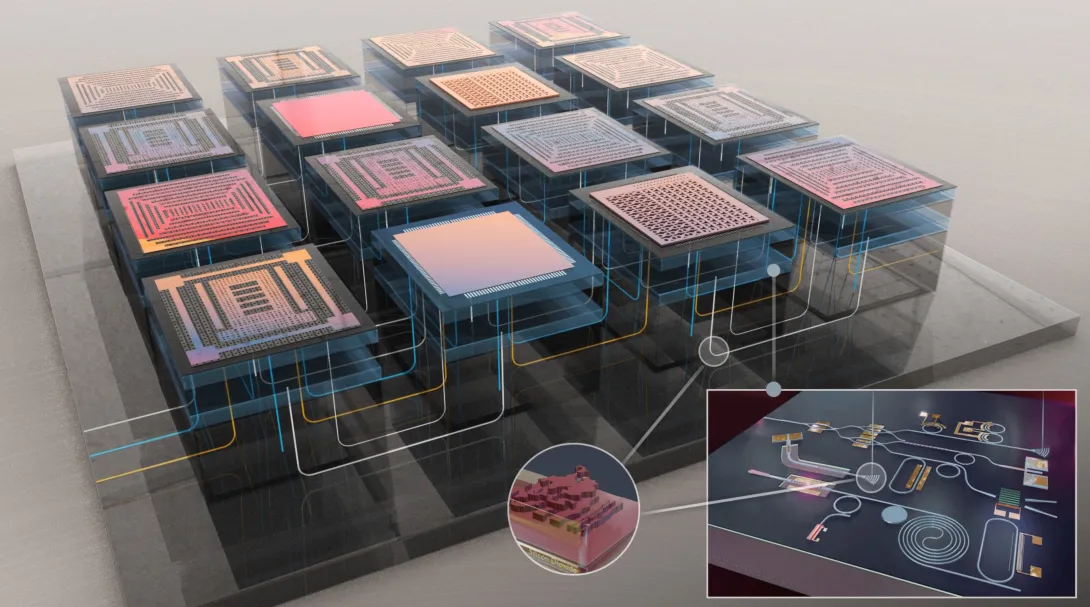

Unlike traditional optical communication, which connects systems across distances, this project focuses on enabling ultra-fast, low-loss communication within electronic systems.

The key innovation is vertically connecting electronic chips in a compact stack. This design helps overcome the limitations of planar optical routing geometries (layouts that guide light horizontally across a chip), which are often not compatible with the dense, 3D chip architectures needed for next-generation computing.

Adibi’s team is developing a novel 3D optical routing system that can transmit data with minimal loss, high bandwidth, and compact components. The system is designed to scale to large arrays of interconnected chips with minimal interference between data channels.

Smarter Design with Machine Learning

At the heart of the project is the use of machine learning (ML) to help design and optimize the light-based communication system.

ML is used to shape and fine-tune the tiny structures that guide light through and between chips. This includes finding the best sizes, shapes, and layouts for components like couplers and waveguides, so they can be made smaller, work more efficiently, and fit into dense chip layouts.

“Designing a complete, scalable 3D optical routing structure involves innumerable variables,” Adibi said. “Machine learning helps us navigate that complexity and find solutions that would be nearly impossible to identify manually.”

Tiny "Mirrors"

Another key innovation involves specialized optical structures, or what Adibi refers to as “artificial mirrors.”

The tiny, precisely shaped structures, called metagratings, are embedded in the chip material to redirect light vertically between layers with minimal loss. These components are designed to guide light efficiently in tight spaces, helping connect stacked chips without losing signal strength.

“Imagine light traveling through a chip and suddenly being redirected straight up. That’s the kind of precise control we’re achieving,” Adibi explained.

These innovations, along with advanced techniques for building vertical light paths through thick silicon layers and new packaging solutions that keep components precisely aligned, have shown promise on their own. But combining them is what enables dense, high-speed, low-loss communication between vertically stacked chips, something that no system has achieved before, according to Adibi.

“As with any complex system, success depends on how well everything is structured and optimized,” he said. “Once everything is in alignment, data can move faster, more efficiently, and with less energy consumption for communicating each bit of data.”

About the Research

This research is supported by the Defense Advanced Research Projects Agency (DARPA) Heterogeneous Adaptively Produced Photonic Interfaces (HAPPI) program. Notice ID DARPA-SN-24-105.

News Contact

Dan Watson

Jul. 03, 2025

As strange as it sounds, the key to understanding life’s origins might lie in artificial intelligence. That, at least, is the premise of a new approach being pursued by researchers at Georgia Tech.

School of Electrical and Computer Engineering (ECE) Assistant Professor Amirali Aghazadeh and Ph.D. student Daniel Saeedi have developed AstroAgents, an AI system that analyzes mass spectrometry data — detailed chemical compositions from meteorites and Earth soil samples — to generate novel hypotheses about the origins of life on the planet.

What sets AstroAgents apart is its use of agentic AI. Unlike traditional AI systems that perform fixed tasks, this agentic system is designed to pursue a scientific goal. It draws from astrobiology literature, interprets complex data, and proposes original ideas that researchers can investigate further.

Their paper, recently featured in the journal "Nature", is opening new possibilities for how scientists explore questions that have remained unanswered for decades.

In a special Q&A, Aghazadeh and Saeedi explain how AstroAgents analyzes space chemistry, what it’s revealing about the possible origins of life on Earth, and what they hope to explore next.

News Contact

Dan Watson

Jul. 02, 2025

The Partnership for Inclusive Innovation launched the sixth annual PIN Summer Intern (PSI) program in May with an event at Fort Valley State University’s location in Warner Robins, Georgia. The program is shaping up to be the biggest yet.

This summer, 103 students are working on 51 projects across 27 communities in Georgia, Alabama, Virginia, and Texas. Selected from nearly 700 applicants — a 73% increase over last year — these students are tackling real-world challenges ranging from AI applications in North Georgia to Native American initiatives in Whigham, Georgia, and Brackettville, Texas.

By pairing students from different years, majors, and institutions, the PSI program gives the next generation of innovators hands-on experience addressing complex challenges while delivering practical solutions to communities across the region.

A collaboration with the Southeast Crescent Regional Commission (SCRC) has funded 17 projects in several counties in Middle and South Georgia and is a large part of the program’s expansion this year. The opportunity to make an impact across a broad swath of Georgia is part of why the SCRC was interested in working with PIN, said SCRC Executive Director Christopher McKinney.

News Contact

Karen Kirkpatrick (karen.kirkpatrick@innovate.gatech.edu)

Jul. 01, 2025

Fan Zhang, assistant professor in the George W. Woodruff School of Mechanical Engineering, has received the 2025 Landis Young Member Engineering Achievement Award from the American Nuclear Society (ANS).

The award recognizes young members for outstanding achievements in which engineering knowledge is effectively applied to yield an engineering concept, design, safety improvement, method of analysis, or product utilized in nuclear power research and development or commercial application.

Zhang was selected by the ANS Honors and Awards Committee for her pioneering contributions to nuclear cybersecurity through innovative machine learning (ML) approaches, development of patent-pending technology, and efforts to establish Georgia Tech as a leader in the field. The award also recognizes her collaboration with the International Atomic Energy Agency and her groundbreaking research on robot-assisted nuclear power plant monitoring, which improves safety and efficiency and demonstrates exceptional impact on global nuclear security.

Zhang serves as the director of the Intelligence for Advanced Nuclear (iFAN) Lab at Georgia Tech. Her research primarily focuses on nuclear cybersecurity, online monitoring, fault detection, digital twins, AI/ML, and robotics. Her work on robot-assisted nuclear power plant monitoring, which combines these cross-cutting areas, could significantly reduce human worker presence in harsh and potentially hazardous environments and improve the efficiency of plant operation. The work was supported by the inaugural Department of Energy Office of Nuclear Energy Distinguished Early Career Award.

News Contact
