May. 01, 2025

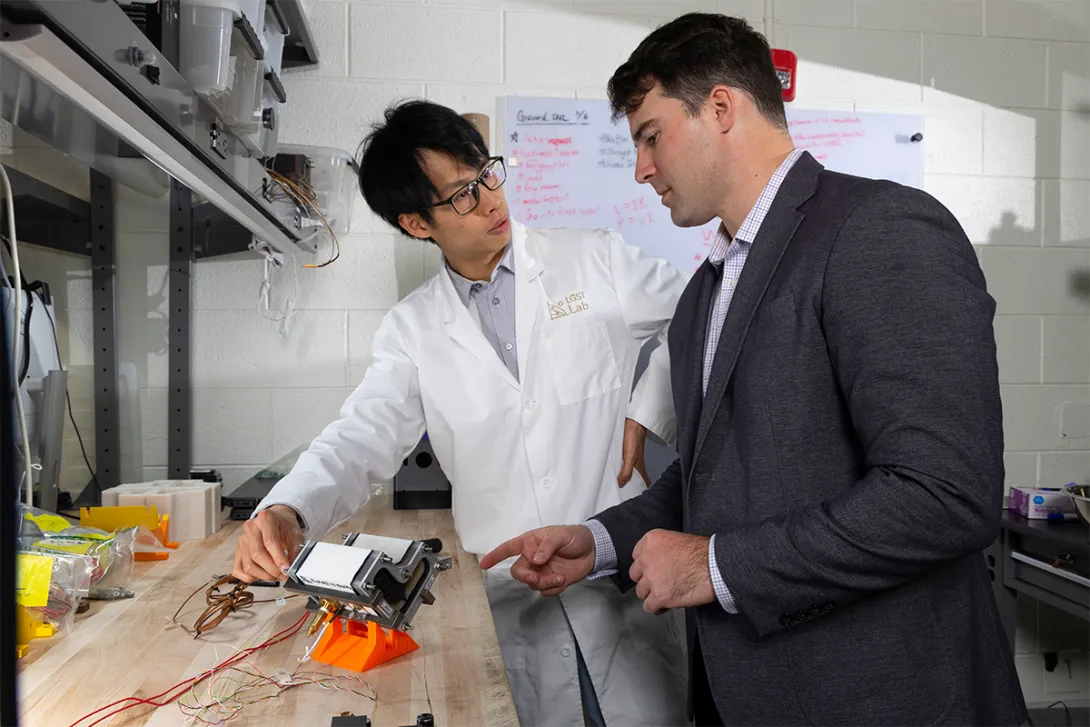

Early on, Georgia Tech graduate students William Trenton Gantt and Hugh (Ka Yui) Chen imagined working in the space industry.

“When I was 14, I dreamed about being in space one day,” recalls Chen, 22, a native of Hong Kong and a Ph.D. student in aerospace engineering. “I think the industry has been making space more accessible to everyone. Commercialization is a big part of enabling this.”

Gantt, an engineer and former U.S. Army veteran graduating with an MBA from the Scheller College of Business this spring, remembered seeing the space shuttle retire and companies begin privatizing space as he entered young adulthood.

“I’ve always been interested in space, and a lot of it comes from the challenge of going to space,” he observes. “Seeing how hard it is to get to space and seeing it become achievable — that to me was the most attractive thing about it.”

For Gantt, the feeling always brings to mind John F. Kennedy’s famous line that spelled out America’s space ambitions: “We choose to go to the moon in this decade and do the other things, not because they are easy, but because they are hard.”

Recognizing Georgia Tech’s aerospace strengths, Gantt didn’t waste time building bridges within Scheller and in other parts of Georgia Tech. He founded the Scheller MBA Space Club, a first at the College, to track the industry as it grows and develops.

“I came from a military background, so I had my eye on the defense industry going into the MBA program. Georgia Tech, being the No. 2 aerospace engineering undergraduate school in the nation, I knew they already had strong industry connections. Making connections was a big goal coming into this program.”

Assessing Early-Stage Space Tech

He took part in the Entrepreneurship Assistants Program (EAP), which pairs a Scheller MBA student with a faculty or student inventor to evaluate early-stage technology for potential commercialization. He evaluated two space-related technologies, one with Chen’s support.

“The EAs conduct technology commercialization assessments and develop a business model canvas. By applying an entrepreneurial strategy compass, they predict potential go-to-market strategies for new technology,” says Paul Joseph, principal in the Office of Commercialization’s Quadrant-i unit, who created the EAP.

(See sidebar to read more about the EAP and the specific technologies assessed.)

Tapping Into a Nearly $2T Industry

According to McKinsey & Co., the space technology market, fueled by advancements in satellite technology, commercial space travel, and 5G networks, is projected to reach $1.8 trillion by 2035.

“We're seeing an industry shifting from a multibillion-dollar market cap to a multitrillion-dollar market cap in less than a decade. If you look at this from a business perspective, this is a massive addressable market for entrepreneurs," says Gantt.

From its Center for Space Technology and Research to the new Center for Space Policy and International Relations and labs like the Space Systems Design Lab, which focuses on areas such as CubeSat propulsion, lunar research, and hypersonic flight, Georgia Tech excels in space research across disciplines. In July, Georgia Tech will launch the Space Research Institute (SRI), one of its newest Interdisciplinary Research Institutes (IRI), to foster additional collaboration in this growing field.

“At Georgia Tech, there are competencies across every single College that will help to augment our understanding of space,” says Alex Oettl, professor of strategy and innovation in Scheller College, whose interest in the new space economy spans the last 20 years. “When you look at the technologies coming from Georgia Tech, they can impact this future trillion-dollar industry.”

An economist by training, Oettl led Georgia Tech’s involvement in the Creative Destruction Lab-Atlanta, a multi-university program that helped commercialize early-stage scientific technologies.

Leveraging Affordable Launch

The emergence of affordable launch, spurred by SpaceX’s introduction of the Falcon 9 rocket using reusable rocket technology, has made space much more accessible, from biomedical companies to academic institutions.

“Because there has been a drop in the cost of accessing space, it allows experimentation to flourish,” says Oettl.

He recalls Mark Costello, former chair of the Daniel Guggenheim School of Aerospace Engineering, explaining how he could launch a CubeSat into Low Earth Orbit out of his research budget, whereas before it would have been cost-prohibitive.

Today, Georgia Tech students and researchers are poised to capitalize on the new space economy stack — from new launch capabilities to new development in propellants and in-space operations and maintenance to more powerful sensors on Earth-observation satellites.

“I’ve seen firsthand the traction occurring on the commercial side. There are a lot of social scientists waking up to the opportunity that exists and thinking about business dynamics that will emerge as a result of this great opportunity,” he says.

Georgia Tech, an interdisciplinary, tech-focused university, brings significant capabilities across its Colleges to drive new and emerging technologies that have implications for space.

“Space hits on all the strengths that exist at the various Colleges,” Oettl explains. “Faculty at Georgia Tech are pushing the boundary and showing our students innovations that will emerge in the space economy that are not immediately obvious — such as in adjacent industries.”

Oettl calls these first-order and spillover impacts of new technology. By first-order impacts, he means businesses can take advantage of these opportunities and create new products on top of the original innovation. By spillovers, he cites as an example an Earth-observation satellite enabling other industries to take advantage of data from the ground. For instance, insurance companies are one of the largest users of space technology by way of satellite imagery.

Bringing Capabilities Together Through New Space IRI

The SRI will bring together the best in engineering, computer science, policy, and business research across Georgia Tech. Along the way, it could help engineers and computer scientists think with a more business-minded approach to pitch their innovations to the commercial space sector.

“You don’t see a lot of engineers having that inherent ability,” notes Gantt. “The Space IRI can shine by fostering collaboration between business students and engineers, enabling them to develop innovative go-to-market strategies and clearly define the unique value propositions these technologies offer to end users. You can bring these people together and create some forward momentum in the space industry.”

May. 01, 2025

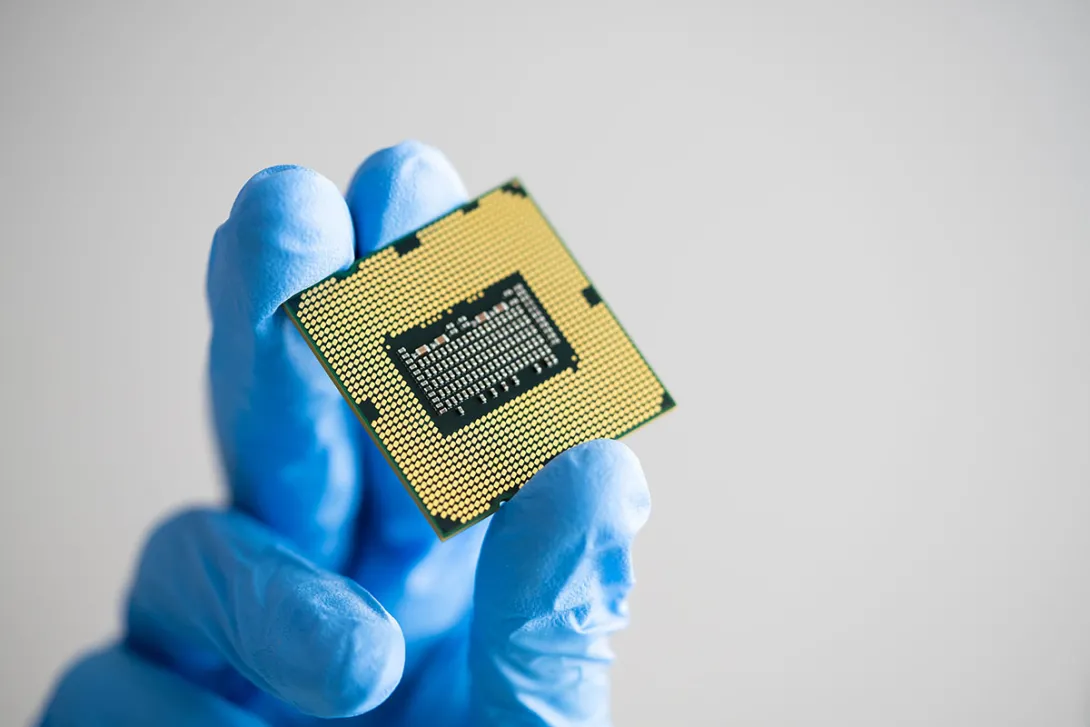

The Georgia Institute of Technology will receive up to $2 million to research advanced semiconductor packaging technologies. Georgia Tech was selected as a partner institution by the South Korean Ministry of Trade.

The Institute for Matter and Systems (IMS), George W. Woodruff School of Mechanical Engineering, and the 3D Systems Packaging Research Center (PRC) will work with Myongji University and industry partners in South Korea on a seven-year collaborative project that focuses on developing core evaluation technologies for advanced semiconductor packaging.

The project is led by Seung-Joon Paik, IMS research engineer; Yongwon Lee, research engineer in the George W. Woodruff School of Mechanical Engineering; and Kyoung-Sik “Jack” Moon, PRC research engineer. It is funded by the Korea Planning & Evaluation Institute of Industrial Technology of the Ministry of Trade, Industry and Energy in Korea.

The project aims to develop validation technologies for next-generation 3D packaging with strategic globally competitive capabilities. The developed platform will meet the high growing demand for advanced packaging technologies for artificial intelligence, high-performance computing, and chiplet-based semiconductor. As a designated partner, Georgia Tech will play a pivotal role in developing core evaluation technologies.

The project’s outcomes will contribute to the commercialization of dependable packaging technologies and the resilience of the global semiconductor supply chain.

News Contact

Amelia Neumeister | Research Communications Program Manager

Apr. 29, 2025

The most recent cohort of the Microelectronics and Nanomanufacturing Certificate Program (MNCP) have completed their training and are ready to dive into the workforce.

The MNCP is a National Science Foundation (NSF) funded collaboration between the Institute for Matter and Systems (IMS), Georgia Piedmont Technical College (GPTC) and Pennsylvania State University’s Center for Nanotechnology Education and Utilization.

The spring 2025 cohort was comprised of three individuals with non-technical backgrounds. For 12 weeks, they split time between online lectures and hands-on training in the Georgia Tech Fabrication Cleanroom where they immersed themselves in advanced microelectronic fabrication techniques. Their training included thin film deposition, photolithography, etching, metrology, laser micro-machining, and additive manufacturing. They gained hands-on experience with industry-standard equipment, even creating their own custom designs on 4-inch silicon wafers.

“The program really helps people get their head start, especially for those who don’t really have the educational background,” said Lauren Walker, one student from the cohort. Walker applied for the program after hearing about it from a colleague and was able to get a job as a laboratory technician with help from the program resources.

“[The program] gave me everything I needed to know for new skills and things like that for the industry,” said Walker. “It helped me eventually get another job. I say it helped because of the workshops they had.”

Under the direction of Seung-Joon Paik, IMS teaching lab coordinator, the cohort spent two days a week in the IMS cleanroom working on research projects with IMS staff. Michelle Wu, a research scientist in IMS, served as lab instructor throughout the program and oversaw the training on cleanroom tools.

“As their lab instructor, I’ve been thoroughly impressed with their passion, patience, and unwavering dedication to this program,” said Wu.

The program is supported by the Advanced Technological Education program at the National Science Foundation and is free for all participants.

Learn more about the Microelectronics and Nanomanufacturing Certificate Program

News Contact

Amelia Neumeister | Research Communications Program Manager

Apr. 28, 2025

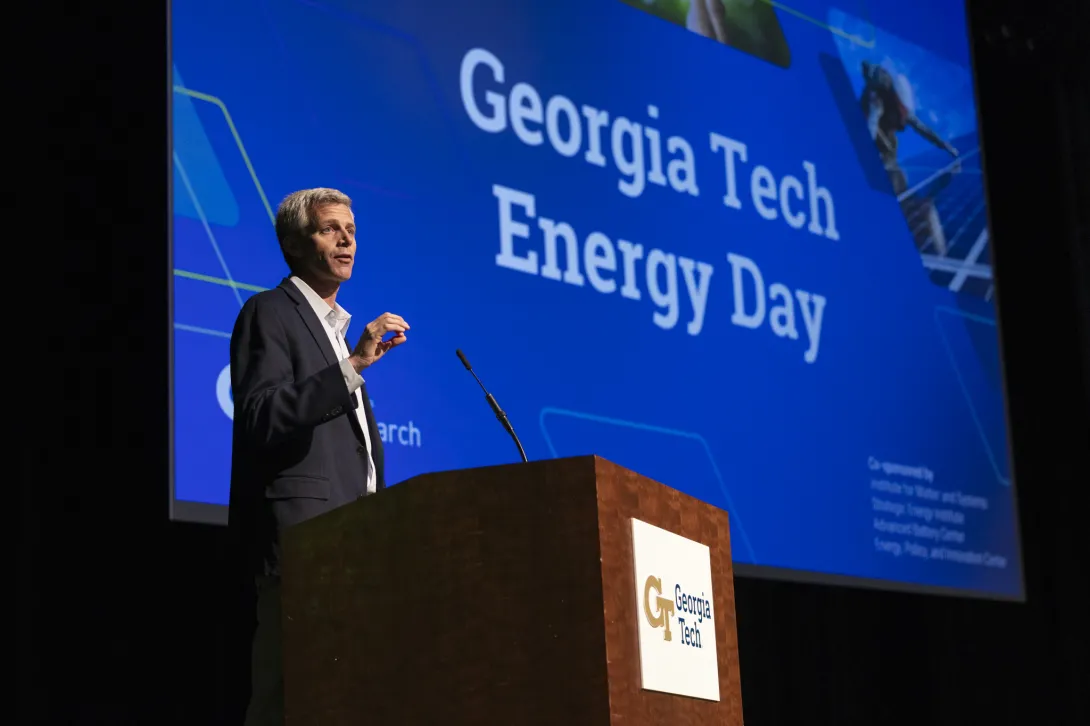

More than 300 people from industry, government, and academia converged on Georgia Tech’s campus for Energy Day. They gathered for discussion and collaboration on the topics of energy storage, solar energy conversion, and developments in carbon-neutral fuels.

Taking place on April 23, Energy Day was cohosted by Georgia Tech’s Institute for Matter and Systems (IMS), Strategic Energy Institute (SEI), the Georgia Tech Advanced Battery Center, and the Energy Policy and Innovation Center.

“The ideas coming out of Georgia Tech and other research universities can drive greater partnerships with our local and state officials. Whether you live in Georgia or elsewhere, we are changing how energy is viewed and consumed,” said Tim Lieuwen, Georgia Tech executive vice president for Research.

Energy Day 2025 is the latest evolution in a series of events that began as in 2023 Battery Day. As local and national energy research needs have evolved, the event has grown to highlight Georgia Tech, and the state of Georgia, as a go-to location for modern energy companies.

“At Georgia Tech, we approach energy holistically, leveraging innovative R&D, economic policy, community-building and strategic partnerships,” said Christine Conwell, SEI's interim executive director. “We are thrilled to convene this event for the third year. The keynote and sessions highlight our comprehensive strategy, showcasing cutting-edge advancements and collaborative efforts driving the next big energy innovations."

The day was divided into two parts: a morning session that included a keynote speaker and two panels, and an afternoon session with separate tracks addressing three different energy research areas. Speakers shared research being conducted at Georgia Tech, as well as updates from industry leaders, to create an open dialogue about current energy needs.

“We believe we can solve problems and build the economy when you bring various disciplines together and work from matter — the fundamental scientists and devices all the way out to final systems at large — economic systems, societal systems,” said Eric Vogel, executive director for IMS. “Not only did we share the latest research, but we discussed and debated how we can continue to transform the energy economy.”

Discussions ranged from adapting to rapid changes in battery storage to advancing photo-voltaic manufacturing in the U.S. to the environmental impacts and sustainable practices of e-fuels and renewable energy.

The day ended with a robust poster session that attracted more than 25 student posters presentations. Three were awarded best posters.

First place: Austin Shoemaker

Second Place: Roahan Zhang

Third Place: Connor Davel

Related Links:

Advancing Clean Energy: Georgia Tech Hosts Energy Materials Day

Georgia Tech Battery Day Reveals Opportunities in Energy Storage Research

News Contact

Amelia Neumeister | Research Communications Program Manager

Apr. 28, 2025

Origami — the Japanese art of folding paper — could be at the next frontier in innovative materials.

Practiced in Japan since the early 1600s, origami involves combining simple folding techniques to create intricate designs. Now, Georgia Tech researchers are leveraging the technique as the foundation for next-generation materials that can both act as a solid and predictably deform, “folding” under the right forces. The research could lead to innovations in everything from heart stents to airplane wings and running shoes.

Recently published in Nature Communications, the study, “Coarse-grained fundamental forms for characterizing isometries of trapezoid-based origami metamaterials,” was led by first author James McInerney, who is now a NRC Research Associate at the Air Force Research Laboratory. McInerney, who completed the research while a postdoctoral student at the University of Michigan, was previously a doctoral student at Georgia Tech in the group of study co-author Zeb Rocklin. The team also includes Glaucio Paulino (Princeton University), Xiaoming Mao (University of Michigan), and Diego Misseroni (University of Trento).

“Origami has received a lot of attention over the past decade due to its ability to deploy or transform structures,” McInerney says. “Our team wondered how different types of folds could be used to control how a material deforms when different forces and pressures are applied to it” — like a creased piece of cardboard folding more predictably than one that might crumple without any creases.

The applications of that type of control are vast. “There are a variety of scenarios ranging from the design of buildings, aircraft, and naval vessels to the packaging and shipping of goods where there tends to be a trade-off between enhancing the load-bearing capabilities and increasing the total weight,” McInerney explains. “Our end goal is to enhance load-bearing designs by adding origami-inspired creases — without adding weight.”

The challenge, Rocklin adds, is using physics to find a way to predictably model what creases to use and when to achieve the best results.

Deformable solids

Rocklin, a theoretical physicist and associate professor in the School of Physics at Georgia Tech, emphasizes the complex nature of these types of materials. “If I tug on either end of a sheet of paper, it's solid — it doesn’t separate,” he explains. “But it's also flexible — it can crumple and wave depending on how I move it. That’s a very different behavior than what we might see in a conventional solid, and a very useful one.”

But while flexible solids are uniquely useful, they are also very hard to characterize, he says. “With these materials, it is often difficult to predict what is going to happen — how the material will deform under pressure because they can deform in many different ways. Conventional physics techniques can't solve this type of problem, which is why we're still coming up with new ways to characterize structures in the 21st century.”

When considering origami-inspired materials, physicists start with a flat sheet that's carefully creased to create a specific three-dimensional shape; these folds determine how the material behaves. But the method is limited: only parallelogram-based origami folding, which uses shapes like squares and rectangles, had previously been modeled, allowing for limited types of deformation.

“Our goal was to expand on this research to include trapezoid faces,” McInerney says. Parallelograms have two sets of parallel sides, but trapezoids only need to have one set of parallel sides. Introducing these more variable shapes makes this type of creasing more difficult to model, but potentially more versatile.

Breathing and shearing

“From our models and physical tests, we found that trapezoid faces have an entirely different class of responses,” McInerney shares. In other words — using trapezoids leads to new behavior.

The designs had the ability to change their shape in two distinct ways: "breathing" by expanding and contracting evenly, and “shearing" by deforming in a twisting motion. “We learned that we can use trapezoid faces in origami to constrain the system from bending in certain directions, which provides different functionality than parallelogram faces,” McInerney adds.

Surprisingly, the team also found that some of the behavior in parallelogram-based origami carried over to their trapezoidal origami, hinting at some features that might be universal across designs.

“While our research is theoretical, these insights could give us more opportunities for how we might deploy these structures and use them,” Rocklin shares.

Future folding

“We still have a lot of work to do,” McInerney says, sharing that there are two separate avenues of research to pursue. “The first is moving from trapezoids to more general quadrilateral faces, and trying to develop an effective model of the material behavior — similar to the way this study moved from parallelograms to trapezoids.” Those new models could help predict how creased materials might deform under different circumstances, and help researchers compare those results to sheets without any creases at all. “This will essentially let us assess the improvement our designs provide,” he explains.

“The second avenue is to start thinking deeply about how our designs might integrate into a real system,” McInerney continues. “That requires understanding where our models start to break down, whether it is due to the loading conditions or the fabrication process, as well as establishing effective manufacturing and testing protocols.”

“It’s a very challenging problem, but biology and nature are full of smart solids — including our own bodies — that deform in specific, useful ways when needed,” Rocklin says. “That’s what we’re trying to replicate with origami.”

This research was funded by the Office of Naval Research, European Union, Army Research Office, and National Science Foundation.

Apr. 18, 2025

The Institute for Matter and Systems (IMS) supports a range of research activities, catering to users at all levels – from students to professional researchers. A pillar of that support lies in IMS’s facilities, offering tools and resources that are vital for both fundamental and applied research.

Recently, four graduate students using IMS core facilities in their research have been selected to receive NSF Graduate Research Fellowships. The prestigious fellowships including funding for three years of graduate study and tuition.

The core facility users are:

- Anna R. Burson – chemical engineering

- Connor M. Davel – photonic materials

- Ethan Daniel Ray – photonic materials

- Alessandro Zerbini-Flores – electrical and electronic engineering

IMS’s electronics and nanotechnology core facilities provide access to advanced instrumentation and technologies that are essential for research activities from basic discovery to prototype realization. This ensures that undergraduate and graduate students, as well as faculty and external collaborators, can access the necessary infrastructure to pursue their scientific inquiries.

News Contact

Amelia Neumeister | Research Communications Program Manager

Apr. 17, 2025

Baseball season is underway, and it’s not a surprise that the New York Yankees are among the league leaders in hits and home runs. But how they’re doing it has become the biggest storyline in the sport.

Several Yankee sluggers were swinging a new style of bat, dubbed the torpedo bat, on March 29, when the team hit nine home runs, and the trend quickly caught on around the league.

Materials and manufacturers may vary, but the design of a baseball bat has remained relatively unchanged, so how does the torpedo bat compare to other game-changing innovations in sports history? Jud Ready, associate director for external engagement at the Institute for Matter and Systems and the creator of the Materials Science and Engineering of Sports course at Georgia Tech, shares his thoughts on the bat and how the Institute is using technology to create change in sports.

News Contact

Steven Gagliano

Apr. 16, 2025

What’s the hottest thing in electronics and high-performance computing? In a word, it’s “cool.”

To be more precise, it’s a liquid cooling system developed at Georgia Tech for electronics aimed at solving a long-standing problem: overheating.

Developed by Daniel Lorenzini, a 2019 Tech graduate who earned his Ph.D. in mechanical engineering, the cooling system uses microfluidic channels — tiny, intricate pathways for liquids — that are embedded within the chip packaging.

He worked with VentureLab, a Tech program in the Office of Commercialization, to spin his research into a startup company, EMCOOL, headquartered in Norcross.

“Our solution directly addresses the heat at the source of the silicon chip and therefore makes it faster,” Lorenzini said. “Our design has our system sitting directly on the silicon chips that generate the most heat. Using the fluids in the micro-pin fins, it carries the heat that’s produced away from the chip.”

That cooling solution is directly integrated into the electronic components, making it significantly more efficient than conventional cooling methods, because it enhances the heat dissipation process.

The result is a much lower risk of overheating and reduced power consumption, he said.

Lorenzini, who researched and refined the technology in the lab of Yogendra Joshi at the George W. Woodruff School of Mechanical Engineering, was awarded a patent for the technology in September 2024.

Now, EMCOOL, which has five empoloyees, is actively pursuing venture capital funding to scale its technology and address the escalating thermal management challenges posed by AI processors in modern data centers.

The system uses a cooling block with tiny, pin-like fins on one side and a special thermal interface material on the other. There's also a junction attached to the block, with ports for the fluid to flow in and out. The cooling fluid moves through the micro-pin fins and helps to carry away the heat.

Since the ports are designed to match the shape of the fins, it ensures that the fluid flows efficiently and the heat is dissipated as effectively as possible at chip-scale.

As electronic devices — from high-performance personal computers to data centers used for artificial intelligence processing — become more powerful, they generate more heat. This excess heat can damage components or cause the device to underperform.

Traditional cooling methods, which include fans or heat sinks, often struggle to keep pace with the increasing demands of the newer model electronics. Lorenzini’s microfluidic system addresses the challenge of overheating with his patented, more effective, compact, and integrated cooling solution.

With the guidance of Jonathan Goldman, director of Quadrant-i in Tech’s Office of Commercialization, Lorenzini secured grant funding through the National Science Foundation and the Georgia Research Alliance to further the research and build design prototypes.

“We immediately had the sense there was commercial potential here,” Goldman said. “Thermal management, or getting rid of heat, is a ubiquitous problem in the computer industry, so when we saw what Daniel was doing, we immediately began to engage with him to understand what the commercial potential was.”

Indeed, the initial focus for the technology was the $159 billion global electronic gaming market. Gamers need a lot of computing power, which generates a lot of heat, causing lag.

But beyond gaming systems, the company, which manufactures custom cooling blocks and kits at its Norcross facility, is eyeing more sectors, which also suffer from overheating, Goldman said.

The technology addresses similar overheating electronics challenges in high-performance computing, telecommunications, and energy systems.

“This work propels us forward in pushing the boundaries of what traditional cooling technologies can achieve because by harnessing the power of microfluidics, EMCOOL's systems offer a compact and energy-efficient way to manage heat,” Goldman said. “This has the potential to revolutionize industries reliant on high-performance computing, where heat management is a constant challenge.”

News Contact

Péralte C. Paul

peralte@gatech.edu

404.316.1210

Apr. 11, 2025

For centuries, innovations in structural materials have prioritized strength and durability — often at a steep environmental price. Today, the construction industry accounts for approximately 10% of global greenhouse gas emissions, with cement, steel, and concrete responsible for more than two-thirds of that total. As the world presses for a sustainable future, scientists are racing to reinvent the very foundations of our built environment.

Paradigm Shift in Construction

Now, researchers at Georgia Tech have developed a novel class of modular, reconfigurable, and sustainable building blocks — a new construction paradigm as well-suited for terrestrial homes as it is for extraterrestrial habitats. Their study, published in Matter, demonstrates that these innovative units, dubbed eco-voxels, can reduce carbon footprints by up to 40% compared to traditional construction materials. These units also maintain the structural performance needed for applications ranging from load-bearing walls to aircraft wings.

“We created sustainable structures using these eco-friendly building blocks, combining our knowledge of structural mechanics and mechanical design with industry-relevant manufacturing practices and environmental assessments,” said Christos Athanasiou, assistant professor at the Daniel Guggenheim School of Aerospace Engineering.

Housing Affordability Solutions

Their work offers a potential solution to the growing housing affordability crisis. As climate-driven disasters such as hurricanes, wildfires, and floods increase, homes are damaged at higher rates, and insurance costs are skyrocketing. This crisis is fueled by rising land prices and restrictive development regulations. Meanwhile, the growing demand for housing places an increasing strain on global resources and the environment. The modularity and circularity of the developed approach can effectively address these issues.

The New Building Blocks

Eco-voxels — short for eco-friendly voxels, the 3D equivalent of pixels — are made from polytrimethylene terephthalate (PTT). PTT is a partially bio-based polymer derived from corn sugar and reinforced with recycled carbon fibers from aerospace waste (scrap material lost during the manufacturing of aerospace components). Eco-voxels can be easily assembled into large, load-bearing structures and then disassembled and reconfigured, all without generating waste. Consequently, they offer a highly adaptable, sustainable approach to construction.

The team tested eco-voxels and found they can handle the pressure that buildings usually face. They also used computer simulations to show that changing the shape of eco-voxels makes them suitable for many different building needs.

The researchers compared the eco-voxel approach to other emerging construction methods like 3D-printed concrete and cross-laminated timber (CLT), finding that eco-voxels offer significant environmental advantages. While traditional and alternative materials are often heavy and carbon-intensive, the eco-voxel wall had the lowest carbon footprint: 30% lower than concrete and 20% lower than CLT.

These results highlight eco-voxels as a promising low-carbon, high-performance solution for sustainable and affordable construction, opening new possibilities for faster, more sustainable building solutions. In addition to residential uses, emergency shelters built with eco-voxels could be used for disaster-relief scenarios, where quick assembly, modularity, and minimal environmental impact are crucial.

“This study exemplifies how advances in structural mechanics, sustainable composite development, and sustainability analysis can yield transformative solutions when coupled. Eco-voxels — our modular, reconfigurable building blocks — provide a scalable, low-carbon alternative that redefines our approach to building in both terrestrial and extraterrestrial environments," said Athanasiou.

Building in Space

Beyond their terrestrial potential, eco-voxels can also offer a promising solution for off-world construction where traditional building methods are unfeasible. Their lightweight, rapid assembly — structures can be erected in less than an hour — and reliance on sustainable or locally sourced materials make them ideal candidates for future Martian or lunar shelters.

“The ability to build these structures quickly is a significant advantage for space construction,” said Athanasiou. “In space, we need lightweight units made from locally sourced materials.”

Perhaps most importantly, the researchers envision a future where the built environment not only minimizes harm but actively contributes to the preservation of planetary health.

This research was led by Georgia Tech, in collaboration with teams from the Massachusetts Institute of Technology, the University of Guelph in Ontario, Canada, and the National University of Singapore.

News Contact

Monique Waddell

Apr. 10, 2025

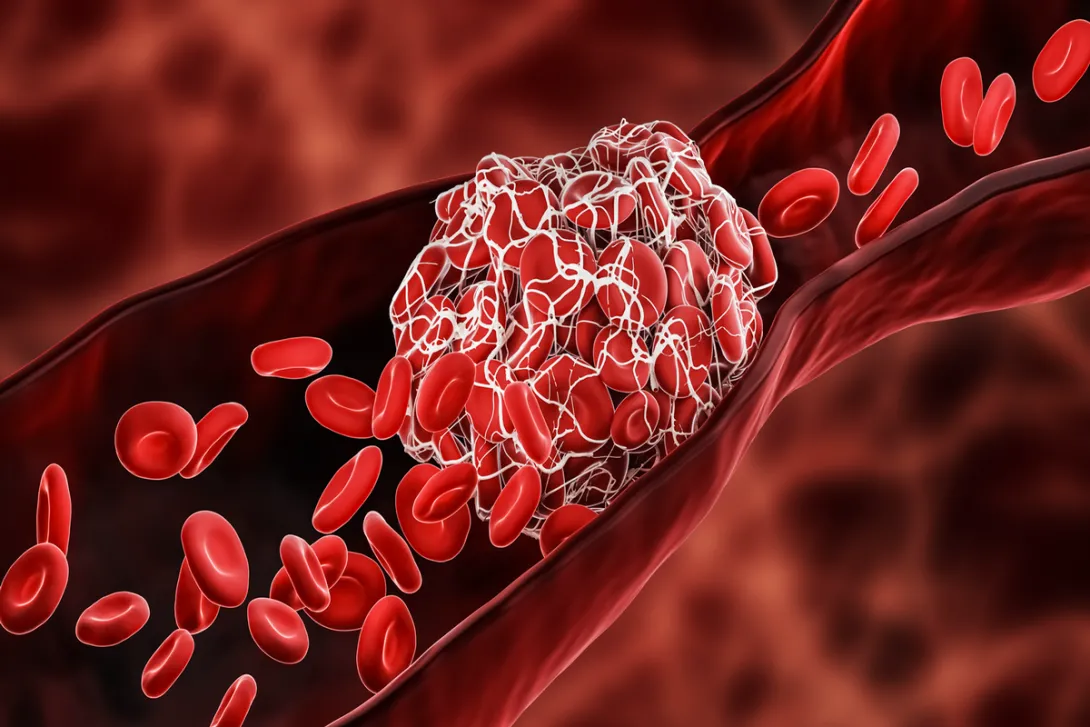

In a groundbreaking study published in Nature, researchers from Georgia Tech and Emory University have developed a new model that could enable precise, life-saving medication delivery for blood clot patients. The novel technique uses a 3D microchip

Wilbur Lam, professor at Georgia Tech and Emory University, and a clinician at Children’s Healthcare of Atlanta, led the study. He worked closely with Yongzhi Qiu, an assistant professor in the Department of Pediatrics at Emory University School of Medicine.

The significance of the thromboinflammation-on-a-chip model, is that it mimics clots in a human-like way, allowing them to last for months and resolve naturally. This model helps track blood clots and more effectively test treatments for conditions including sickle cell anemia, strokes, and heart attacks.

News Contact

Amelia Neumeister | Research Communications Program Manager

Pagination

- Previous page

- 5 Page 5

- Next page