May 14, 2025

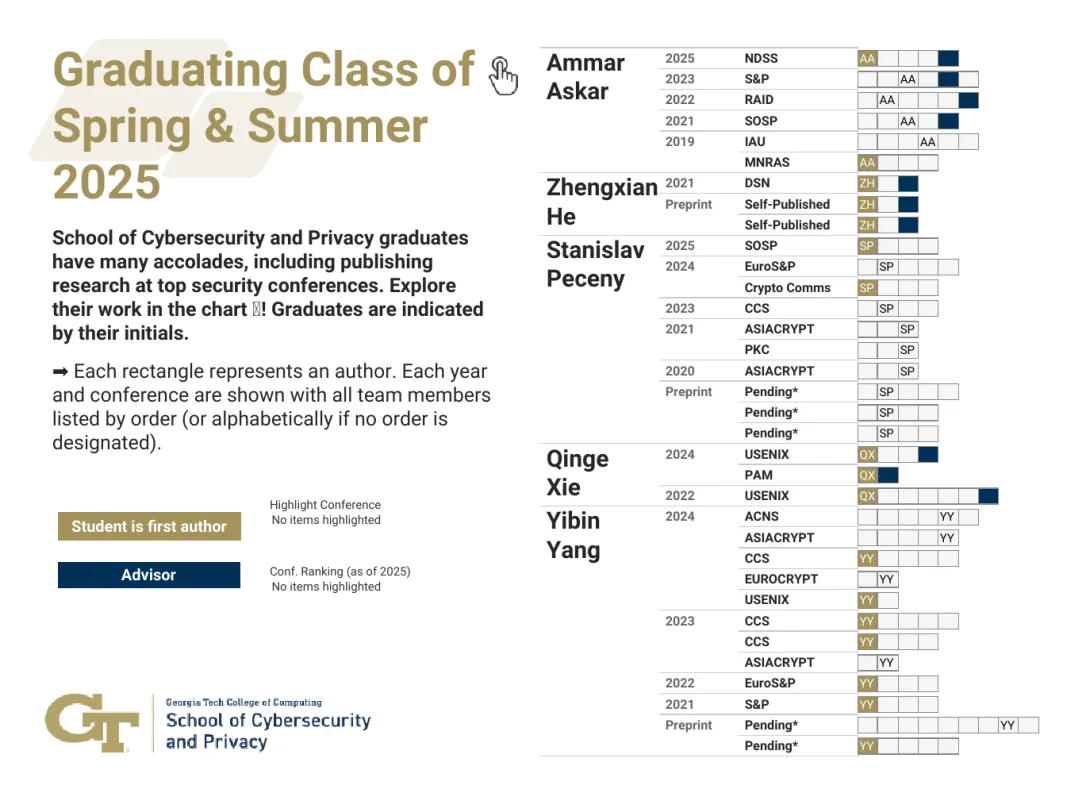

The School of Cybersecurity and Privacy at Georgia Tech is proud to recognize the accomplishments of five doctoral students who completed their programs in Spring 2025. These scholars advanced critical research in software security, cryptography, and privacy, collectively publishing 34 papers, most of them in top-tier venues.

Ammar Askar developed new tools for software security in multi-language systems, including a concolic execution engine powered by large language models. He named attending DEF CON 2021 with the Systems Software and Security Lab (SSLab) as a favorite memory.

Zhengxian He persevered through the pandemic to lead a major project with an industry partner, achieving strong research outcomes. He will be joining Amazon and fondly remembers watching sunsets from the CODA building.

Stanislav Peceny focused on secure multiparty computation (MPC), designing high-performance cryptographic protocols that improve efficiency by up to 1000x. He’s known for his creativity in both research and life, naming avocado trees after famous mathematicians and enjoying research discussions on the CODA rooftop.

Qinge Xie impressed faculty with her adaptability across multiple domains. Her advisor praised her independence and technical range, noting her ability to pivot seamlessly between complex research challenges.

Yibin Yang contributed to the advancement of zero-knowledge proofs and MPC, building toolchains that are faster and more usable than existing systems. His work earned a Distinguished Paper Award at ACM CCS 2023, and he also served as an RSAC Security Scholar. Yang enjoyed teaching and engaging with younger students, especially through events like Math Kangaroo.

Faculty mentors included Regents’ Entrepreneur Mustaque Ahamad, Professors Taesoo Kim and Vladimir Kolesnikov, and Assistant Professor Frank Li, who played vital roles in guiding the graduates’ research journeys.

Learn more about the graduates and their mentors on the 2025 Ph.D. graduate microsite.

News Contact

JP Popham, Communications Officer II

College of Computing | School of Cybersecurity and Privacy

May 02, 2025

Jason Azoulay is an associate professor of Chemistry and Biochemistry and Materials Science and Engineering at Georgia Tech. He is the Georgia Research Alliance Vasser-Woolley Distinguished Investigator in Optoelectronics and serves as co-director of the Center for Organic Photonics and Electronics.

This story by Janette Neuwahl Tannen is shared jointly with the University of Miami newsroom.

Today, most of us carry a fairly powerful computer in our hand — a smartphone.

But computers weren’t always so portable. Since the 1980s, they have become smaller, lighter, and better equipped to store and process vast troves of data.

Yet the silicon chips that power computers can only get so small.

“Over the past 50 years, the number of transistors we can put on a chip has doubled every two years,” says Kun Wang, assistant professor of physics at the University of Miami College of Arts and Sciences. “But we are rapidly reaching the physical limits for silicon-based electronics, and it’s more challenging to miniaturize electronic components using the technologies we have been using for half a century.”
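For a sense of scale, the doubling rate in Wang’s quote compounds quickly. The back-of-the-envelope calculation below assumes an exact doubling every two years over the full 50 years (real chips did not track the trend this precisely), with $N_0$ as an illustrative starting transistor count and $t$ in years:

$$
N(t) = N_0 \cdot 2^{t/2}, \qquad \frac{N(50)}{N_0} = 2^{25} = 33{,}554{,}432 \approx 3.4 \times 10^{7}
$$

In other words, 25 doublings correspond to roughly a 34-million-fold increase in transistor count.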

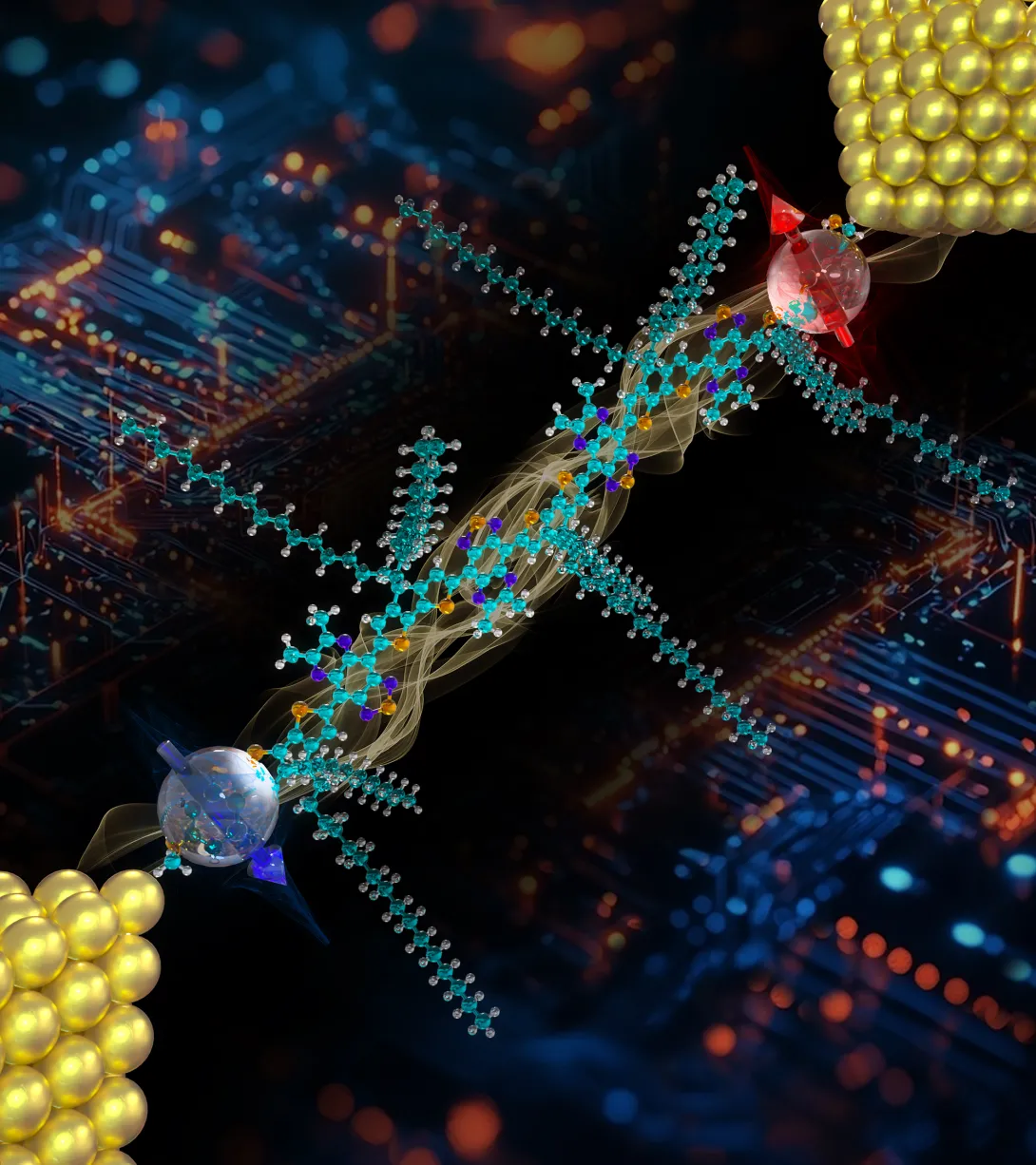

It’s a problem that Wang and many others in the field of molecular electronics hope to solve. Specifically, they are looking for a way to conduct electricity without the silicon or metal used to make computer chips today. Using tiny molecular materials for functional components such as transistors, sensors, and interconnects in electronic chips offers several advantages, especially as traditional silicon-based technologies approach their physical and performance limits.

But finding the ideal chemical makeup for such a molecule has stumped scientists. Recently, Wang and his graduate students Mehrdad Shiri and Shaocheng Shen, along with collaborators Jason Azoulay, associate professor at the Georgia Institute of Technology and Georgia Research Alliance Vasser-Woolley Distinguished Investigator, and Ignacio Franco, professor at the University of Rochester, uncovered a promising solution.

This week, the team shared what they believe is the world’s most electrically conductive organic molecule. Their discovery, published in the Journal of the American Chemical Society, opens up new possibilities for constructing smaller, more powerful computing devices at the molecular scale. Even better, the molecule is composed of chemical elements found in nature — mostly carbon, sulfur, and nitrogen.

“So far, there is no molecular material that allows electrons to go across it without significant loss of conductivity,” Wang says. “This work is the first demonstration that organic molecules can allow electrons to migrate across it without any energy loss over several tens of nanometers.”

The testing and validation of their unique new molecule took more than two years.

The team’s work shows that the molecules are stable under everyday ambient conditions and offer the highest possible electrical conductance at unparalleled lengths. That could pave the way for classical computing devices that are smaller, more energy-efficient, and more cost-efficient, Wang adds.

Currently, a molecule’s ability to conduct electrons decreases exponentially as its length increases. The newly developed molecular “wires” could serve as the highways needed to transfer, process, and store information in future computing, Wang says.
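The exponential length dependence described above is often summarized, for off-resonant tunneling through a molecular junction, with the textbook relation below. Here $G_c$ (contact conductance), $\beta$ (attenuation factor), and $L$ (molecular length) are generic notation introduced for illustration, not quantities reported in this study:

$$
G(L) = G_c \, e^{-\beta L}, \qquad \frac{G(L + \Delta L)}{G(L)} = e^{-\beta \, \Delta L}
$$

When $\beta > 0$, each added length $\Delta L$ multiplies the conductance by the same factor $e^{-\beta \Delta L} < 1$, which is why longer molecules usually conduct far worse; in this notation, a conductance that does not fall off with length corresponds to $\beta = 0$.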

“What’s unique in our molecular system is that electrons travel across the molecule like a bullet without energy loss, so it is theoretically the most efficient way of electron transport in any material system,” Wang notes. “Not only can it downsize future electronic devices, but its structure could also enable functions that were not even possible with silicon-based materials.”

By that, Wang means the molecule’s abilities could create new opportunities to revolutionize molecule-based quantum information science.

“The ultra-high electrical conductance observed in our molecules is a result of an intriguing interaction of electron spins at the two ends of the molecule,” he adds. “In the future, one could use this molecular system as a qubit, which is a fundamental unit for quantum computing.”

The team observed these properties by studying the new molecule under a scanning tunneling microscope (STM). Using a technique called STM break-junction, they captured a single molecule and measured its conductance.

Shiri, the graduate student, adds: “In terms of application, this molecule is a big leap toward real-world applications. Since it is chemically robust and air-stable, it could even be integrated with existing nanoelectronic components in a chip and work as an electronic wire or interconnects between chips.”

Beyond that, the materials needed to compose the molecule are inexpensive, and it can be created in a lab.

“This molecular system functions in a way that is not possible with current, conventional materials,” Wang says. “These are new properties that would not add to the cost but could make (computing devices) more powerful and energy efficient.”

DOI: https://doi.org/10.1021/jacs.4c18150

Funding: U.S. Department of Energy, Office of Science, Basic Energy Sciences; National Science Foundation (NSF); Air Force Office of Scientific Research (AFOSR) under support provided by the Organic Materials Chemistry Program; Georgia Tech Research Institute (GTRI) Graduate Student Researcher Fellowship Program (GSFP). Computational resources were provided by the Center for Integrated Research Computing (CIRC) at the University of Rochester.

Along with Jason Azoulay, Georgia Tech co-authors include Paramasivam Mahalingam, Tyler Bills, Alexander J. Bushnell, and Tanya A. Balandin.

News Contact

Jess Hunt-Ralston

Director of Communications

College of Sciences at Georgia Tech

May 01, 2025

The Georgia Institute of Technology will receive up to $2 million to research advanced semiconductor packaging technologies after being selected as a partner institution by South Korea’s Ministry of Trade, Industry and Energy.

The Institute for Matter and Systems (IMS), George W. Woodruff School of Mechanical Engineering, and the 3D Systems Packaging Research Center (PRC) will work with Myongji University and industry partners in South Korea on a seven-year collaborative project that focuses on developing core evaluation technologies for advanced semiconductor packaging.

The project is led by Seung-Joon Paik, IMS research engineer; Yongwon Lee, research engineer in the George W. Woodruff School of Mechanical Engineering; and Kyoung-Sik “Jack” Moon, PRC research engineer. It is funded by the Korea Planning & Evaluation Institute of Industrial Technology of the Ministry of Trade, Industry and Energy in Korea.

The project aims to develop validation technologies that give next-generation 3D packaging strategic, globally competitive capabilities. The resulting platform will help meet the rapidly growing demand for advanced packaging in artificial intelligence, high-performance computing, and chiplet-based semiconductors. As a designated partner, Georgia Tech will play a pivotal role in developing core evaluation technologies.

The project’s outcomes will contribute to the commercialization of dependable packaging technologies and the resilience of the global semiconductor supply chain.

News Contact

Amelia Neumeister | Research Communications Program Manager

Apr. 29, 2025

The most recent cohort of the Microelectronics and Nanomanufacturing Certificate Program (MNCP) has completed its training and is ready to enter the workforce.

The MNCP is a National Science Foundation (NSF)-funded collaboration among the Institute for Matter and Systems (IMS), Georgia Piedmont Technical College (GPTC), and Pennsylvania State University’s Center for Nanotechnology Education and Utilization.

The spring 2025 cohort comprised three individuals with non-technical backgrounds. For 12 weeks, they split their time between online lectures and hands-on training in the Georgia Tech Fabrication Cleanroom, where they immersed themselves in advanced microelectronic fabrication techniques, including thin film deposition, photolithography, etching, metrology, laser micro-machining, and additive manufacturing. They worked with industry-standard equipment, even creating their own custom designs on 4-inch silicon wafers.

“The program really helps people get their head start, especially for those who don’t really have the educational background,” said Lauren Walker, a member of the cohort. Walker applied for the program after hearing about it from a colleague and, with help from program resources, landed a job as a laboratory technician.

“[The program] gave me everything I needed to know for new skills and things like that for the industry,” said Walker. “It helped me eventually get another job. I say it helped because of the workshops they had.”

Under the direction of Seung-Joon Paik, IMS teaching lab coordinator, the cohort spent two days a week in the IMS cleanroom working on research projects with IMS staff. Michelle Wu, a research scientist in IMS, served as lab instructor throughout the program and oversaw the training on cleanroom tools.

“As their lab instructor, I’ve been thoroughly impressed with their passion, patience, and unwavering dedication to this program,” said Wu.

The program is supported by the Advanced Technological Education program at the National Science Foundation and is free for all participants.

Learn more about the Microelectronics and Nanomanufacturing Certificate Program

News Contact

Amelia Neumeister | Research Communications Program Manager

Apr. 28, 2025

More than 300 people from industry, government, and academia converged on Georgia Tech’s campus for Energy Day. They gathered for discussion and collaboration on the topics of energy storage, solar energy conversion, and developments in carbon-neutral fuels.

Taking place on April 23, Energy Day was cohosted by Georgia Tech’s Institute for Matter and Systems (IMS), Strategic Energy Institute (SEI), the Georgia Tech Advanced Battery Center, and the Energy Policy and Innovation Center.

“The ideas coming out of Georgia Tech and other research universities can drive greater partnerships with our local and state officials. Whether you live in Georgia or elsewhere, we are changing how energy is viewed and consumed,” said Tim Lieuwen, Georgia Tech executive vice president for Research.

Energy Day 2025 is the latest evolution of a series of events that began in 2023 as Battery Day. As local and national energy research needs have evolved, the event has grown to highlight Georgia Tech, and the state of Georgia, as a go-to location for modern energy companies.

“At Georgia Tech, we approach energy holistically, leveraging innovative R&D, economic policy, community-building and strategic partnerships,” said Christine Conwell, SEI's interim executive director. “We are thrilled to convene this event for the third year. The keynote and sessions highlight our comprehensive strategy, showcasing cutting-edge advancements and collaborative efforts driving the next big energy innovations."

The day was divided into two parts: a morning session that included a keynote speaker and two panels, and an afternoon session with separate tracks addressing three different energy research areas. Speakers shared research being conducted at Georgia Tech, as well as updates from industry leaders, to create an open dialogue about current energy needs.

“We believe we can solve problems and build the economy when you bring various disciplines together and work from matter — the fundamental scientists and devices all the way out to final systems at large — economic systems, societal systems,” said Eric Vogel, executive director for IMS. “Not only did we share the latest research, but we discussed and debated how we can continue to transform the energy economy.”

Discussions ranged from adapting to rapid changes in battery storage, to advancing photovoltaic manufacturing in the U.S., to the environmental impacts and sustainable practices of e-fuels and renewable energy.

The day ended with a robust poster session featuring more than 25 student poster presentations. Three received best poster awards:

- First place: Austin Shoemaker

- Second place: Roahan Zhang

- Third place: Connor Davel

Related Links:

Advancing Clean Energy: Georgia Tech Hosts Energy Materials Day

Georgia Tech Battery Day Reveals Opportunities in Energy Storage Research

News Contact

Amelia Neumeister | Research Communications Program Manager

Apr. 28, 2025

Origami, the Japanese art of folding paper, could be the next frontier in innovative materials.

Practiced in Japan since the early 1600s, origami involves combining simple folding techniques to create intricate designs. Now, Georgia Tech researchers are leveraging the technique as the foundation for next-generation materials that can both act as a solid and predictably deform, “folding” under the right forces. The research could lead to innovations in everything from heart stents to airplane wings and running shoes.

Recently published in Nature Communications, the study, “Coarse-grained fundamental forms for characterizing isometries of trapezoid-based origami metamaterials,” was led by first author James McInerney, now an NRC Research Associate at the Air Force Research Laboratory. McInerney, who completed the research as a postdoctoral researcher at the University of Michigan, was previously a doctoral student at Georgia Tech in the group of study co-author Zeb Rocklin. The team also includes Glaucio Paulino (Princeton University), Xiaoming Mao (University of Michigan), and Diego Misseroni (University of Trento).

“Origami has received a lot of attention over the past decade due to its ability to deploy or transform structures,” McInerney says. “Our team wondered how different types of folds could be used to control how a material deforms when different forces and pressures are applied to it” — like a creased piece of cardboard folding more predictably than one that might crumple without any creases.

The applications of that type of control are vast. “There are a variety of scenarios ranging from the design of buildings, aircraft, and naval vessels to the packaging and shipping of goods where there tends to be a trade-off between enhancing the load-bearing capabilities and increasing the total weight,” McInerney explains. “Our end goal is to enhance load-bearing designs by adding origami-inspired creases — without adding weight.”

The challenge, Rocklin adds, is using physics to predictably model which creases to use, and when, to achieve the best results.

Deformable solids

Rocklin, a theoretical physicist and associate professor in the School of Physics at Georgia Tech, emphasizes the complex nature of these types of materials. “If I tug on either end of a sheet of paper, it's solid — it doesn’t separate,” he explains. “But it's also flexible — it can crumple and wave depending on how I move it. That’s a very different behavior than what we might see in a conventional solid, and a very useful one.”

But while flexible solids are uniquely useful, they are also very hard to characterize, he says. “With these materials, it is often difficult to predict what is going to happen — how the material will deform under pressure because they can deform in many different ways. Conventional physics techniques can't solve this type of problem, which is why we're still coming up with new ways to characterize structures in the 21st century.”

When designing origami-inspired materials, physicists start with a flat sheet that's carefully creased into a specific three-dimensional shape; these folds determine how the material behaves. But previous work was limited: only parallelogram-based origami, which uses shapes like squares and rectangles, had been modeled, allowing for limited types of deformation.

“Our goal was to expand on this research to include trapezoid faces,” McInerney says. Parallelograms have two sets of parallel sides, but trapezoids only need to have one set of parallel sides. Introducing these more variable shapes makes this type of creasing more difficult to model, but potentially more versatile.
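The geometric distinction between the two face types can be made concrete with a short check. The Python sketch below is a hypothetical illustration, not code from the study: it counts how many pairs of opposite sides of a flat quadrilateral face are parallel, labeling two pairs a parallelogram and exactly one pair a trapezoid (under the inclusive definition implied above, parallelograms are a special case of trapezoids). It says nothing about how a creased sheet actually folds.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def _edge(a: Point, b: Point) -> Point:
    # Vector pointing from vertex a to vertex b
    return (b[0] - a[0], b[1] - a[1])


def _cross(u: Point, v: Point) -> float:
    # z-component of the 2D cross product; zero when u and v are parallel
    return u[0] * v[1] - u[1] * v[0]


def parallel_side_pairs(quad: Sequence[Point], tol: float = 1e-9) -> int:
    """Count pairs of opposite sides that are parallel in a quadrilateral
    given as four vertices listed in order around the face."""
    a, b, c, d = quad
    pairs = 0
    if abs(_cross(_edge(a, b), _edge(d, c))) <= tol:  # sides AB and DC
        pairs += 1
    if abs(_cross(_edge(b, c), _edge(a, d))) <= tol:  # sides BC and AD
        pairs += 1
    return pairs


def classify_face(quad: Sequence[Point]) -> str:
    # Two parallel pairs: parallelogram; exactly one: trapezoid
    pairs = parallel_side_pairs(quad)
    if pairs == 2:
        return "parallelogram"
    if pairs == 1:
        return "trapezoid"
    return "general quadrilateral"
```

For example, `classify_face([(0, 0), (4, 0), (3, 1), (1, 1)])` returns `"trapezoid"`, since only the top and bottom edges are parallel.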

Breathing and shearing

“From our models and physical tests, we found that trapezoid faces have an entirely different class of responses,” McInerney shares. In other words — using trapezoids leads to new behavior.

The designs could change their shape in two distinct ways: “breathing,” by expanding and contracting evenly, and “shearing,” by deforming in a twisting motion. “We learned that we can use trapezoid faces in origami to constrain the system from bending in certain directions, which provides different functionality than parallelogram faces,” McInerney adds.

Surprisingly, the team also found that some of the behavior in parallelogram-based origami carried over to their trapezoidal origami, hinting at some features that might be universal across designs.

“While our research is theoretical, these insights could give us more opportunities for how we might deploy these structures and use them,” Rocklin shares.

Future folding

“We still have a lot of work to do,” McInerney says, sharing that there are two separate avenues of research to pursue. “The first is moving from trapezoids to more general quadrilateral faces, and trying to develop an effective model of the material behavior — similar to the way this study moved from parallelograms to trapezoids.” Those new models could help predict how creased materials might deform under different circumstances, and help researchers compare those results to sheets without any creases at all. “This will essentially let us assess the improvement our designs provide,” he explains.

“The second avenue is to start thinking deeply about how our designs might integrate into a real system,” McInerney continues. “That requires understanding where our models start to break down, whether it is due to the loading conditions or the fabrication process, as well as establishing effective manufacturing and testing protocols.”

“It’s a very challenging problem, but biology and nature are full of smart solids — including our own bodies — that deform in specific, useful ways when needed,” Rocklin says. “That’s what we’re trying to replicate with origami.”

This research was funded by the Office of Naval Research, European Union, Army Research Office, and National Science Foundation.

Apr. 25, 2025

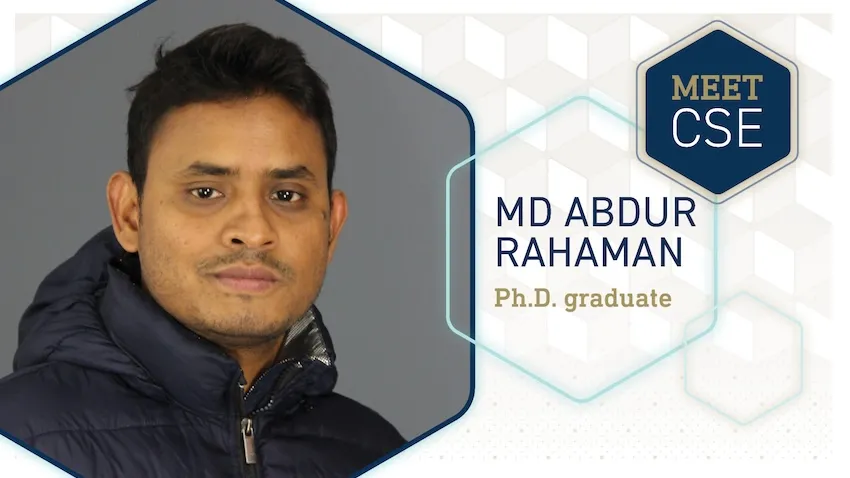

A Georgia Tech doctoral student’s dissertation could help physicians diagnose neuropsychiatric disorders, including schizophrenia, autism, and Alzheimer’s disease. The new approach leverages data science and algorithms instead of relying on traditional methods like cognitive tests and image scans.

Ph.D. candidate Md Abdur Rahaman’s dissertation studies brain data to understand how changes in brain activity shape behavior.

Computational tools Rahaman developed for his dissertation look for informative patterns between the brain and behavior. Successful tests of his algorithms show promise to help doctors diagnose mental health disorders and design individualized treatment plans for patients.

“I've always been fascinated by the human brain and how it defines who we are,” Rahaman said.

“The fact that so many people silently suffer from neuropsychiatric disorders, while our understanding of the brain remains limited, inspired me to develop tools that bring greater clarity to this complexity and offer hope through more compassionate, data-driven care.”

Rahaman’s dissertation introduces a framework focusing on granular factoring. This computing technique stratifies brain data into smaller, localized subgroups, making it easier for computers and researchers to study data and find meaningful patterns.

Granular factoring overcomes the challenges of size and heterogeneity in neurological data science. Brain data comes from neuroimaging, genomics, behavioral datasets, and other sources. Each source is so large that studying them individually is a challenge, let alone analyzing them simultaneously to uncover hidden patterns.

Rahaman’s research allows researchers and physicians to move past one-size-fits-all approaches. Instead of manually reviewing tests and scans, algorithms look for patterns and biomarkers in the subgroups that otherwise go undetected, especially ones that indicate neuropsychiatric disorders.

“My dissertation advances the frontiers of computational neuroscience by introducing scalable and interpretable models that navigate brain heterogeneity to reveal how neural dynamics shape behavior,” Rahaman said.

“By uncovering subgroup-specific patterns, this work opens new directions for understanding brain function and enables more precise, personalized approaches to mental health care.”

Rahaman defended his dissertation on April 14, the final step in completing his Ph.D. in computational science and engineering. He will graduate on May 1 at Georgia Tech’s Ph.D. Commencement.

After walking across the stage at McCamish Pavilion, Rahaman will take the next step in his career at Amazon, where he will work in the generative artificial intelligence (AI) field.

Graduating from Georgia Tech is the summit of an educational trek spanning over a decade. Rahaman hails from Bangladesh where he graduated from Chittagong University of Engineering and Technology in 2013. He attained his master’s from the University of New Mexico in 2019 before starting at Georgia Tech.

“Munna is an amazingly creative researcher,” said Vince Calhoun, Rahaman’s advisor. Calhoun is the founding director of the Translational Research in Neuroimaging and Data Science Center (TReNDS).

TReNDS is a tri-institutional center spanning Georgia Tech, Georgia State University, and Emory University that develops analytic approaches and neuroinformatic tools. The center aims to translate the approaches into biomarkers that address areas of brain health and disease.

“His work is moving the needle in our ability to leverage multiple sources of complex biological data to improve understanding of neuropsychiatric disorders that have a huge impact on an individual’s livelihood,” said Calhoun.

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Apr. 22, 2025

A Georgia Tech alum’s dissertation introduced ways to make artificial intelligence (AI) more accessible, interpretable, and accountable. Although it’s been a year since his doctoral defense, Zijie (Jay) Wang’s (Ph.D. ML-CSE 2024) work continues to resonate with researchers.

Wang is a recipient of the 2025 Outstanding Dissertation Award from the Association for Computing Machinery Special Interest Group on Computer-Human Interaction (ACM SIGCHI). The award recognizes Wang for his lifelong work on democratizing human-centered AI.

“Throughout my Ph.D. and industry internships, I observed a gap in existing research: there is a strong need for practical tools for applying human-centered approaches when designing AI systems,” said Wang, now a safety researcher at OpenAI.

“My work not only helps people understand AI and guide its behavior but also provides user-friendly tools that fit into existing workflows.”

[Related: Georgia Tech College of Computing Swarms to Yokohama, Japan, for CHI 2025]

Wang’s dissertation presented techniques in visual explanation and interactive guidance to align AI models with user knowledge and values. The work was the culmination of years of research, fellowship support, and internships.

Wang’s most influential projects formed the core of his dissertation. These included:

- CNN Explainer: an open-source tool developed for deep-learning beginners. Since its release in July 2020, more than 436,000 global visitors have used the tool.

- DiffusionDB: a first-of-its-kind large-scale dataset that lays a foundation to help people better understand generative AI. This work could lead to new research in detecting deepfakes and designing human-AI interaction tools to help people more easily use these models.

- GAM Changer: an interface that empowers users in healthcare, finance, or other domains to edit ML models to include knowledge and values specific to their domain, which improves reliability.

- GAM Coach: an interactive ML tool that could help loan applicants who have been rejected by automatically showing them what is needed to receive loan approval.

- Farsight: a tool that alerts developers when the prompts they write for large language models could lead to harm or misuse.

“I feel extremely honored and lucky to receive this award, and I am deeply grateful to many who have supported me along the way, including Polo, mentors, collaborators, and friends,” said Wang, who was advised by School of Computational Science and Engineering (CSE) Professor Polo Chau.

“This recognition also inspired me to continue striving to design and develop easy-to-use tools that help everyone to easily interact with AI systems.”

Chau also advised Georgia Tech alumnus Fred Hohman (Ph.D. CSE 2020), who won the ACM SIGCHI Outstanding Dissertation Award in 2022.

Chau’s group synthesizes machine learning (ML) and visualization techniques into scalable, interactive, and trustworthy tools. These tools increase understanding and interaction with large-scale data and ML models.

Chau is the associate director of corporate relations for the Machine Learning Center at Georgia Tech. Wang called the School of CSE his home unit while a student in the ML program under Chau.

Wang is one of five recipients of this year’s award to be presented at the 2025 Conference on Human Factors in Computing Systems (CHI 2025). The conference occurs April 25-May 1 in Yokohama, Japan.

SIGCHI is the world’s largest association of human-computer interaction professionals and practitioners. The group sponsors or co-sponsors 26 conferences, including CHI.

Wang’s outstanding dissertation award is the latest recognition of a career decorated with achievement.

Months after Wang graduated from Georgia Tech, Forbes named him to its 30 Under 30 in Science list for 2025 for his dissertation. Wang was one of 15 Yellow Jackets included in nine different 30 Under 30 lists and the only Georgia Tech-affiliated individual on the 30 Under 30 in Science list.

While a Georgia Tech student, Wang earned recognition from big names in business and technology. He received the Apple Scholars in AI/ML Ph.D. Fellowship in 2023 and was in the 2022 cohort of the J.P. Morgan AI Ph.D. Fellowships Program.

Along with the CHI award, Wang’s dissertation earned him awards this year at banquets across campus. The Georgia Tech chapter of Sigma Xi presented Wang with the Best Ph.D. Thesis Award. He also received the College of Computing’s Outstanding Dissertation Award.

“Georgia Tech attracts many great minds, and I’m glad that some, like Jay, chose to join our group,” Chau said. “It has been a joy to work alongside them and witness the many wonderful things they have accomplished, and with many more to come in their careers.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu

Apr. 18, 2025

The Institute for Matter and Systems (IMS) supports a range of research activities, catering to users at all levels – from students to professional researchers. A pillar of that support lies in IMS’s facilities, offering tools and resources that are vital for both fundamental and applied research.

Recently, four graduate students using IMS core facilities in their research were selected to receive NSF Graduate Research Fellowships. The prestigious fellowships include funding for three years of graduate study and tuition.

The core facility users are:

- Anna R. Burson – chemical engineering

- Connor M. Davel – photonic materials

- Ethan Daniel Ray – photonic materials

- Alessandro Zerbini-Flores – electrical and electronic engineering

IMS’s electronics and nanotechnology core facilities provide access to advanced instrumentation and technologies that are essential for research activities from basic discovery to prototype realization. This ensures that undergraduate and graduate students, as well as faculty and external collaborators, can access the necessary infrastructure to pursue their scientific inquiries.

News Contact

Amelia Neumeister | Research Communications Program Manager

Apr. 17, 2025

Baseball season is underway, and it’s not a surprise that the New York Yankees are among the league leaders in hits and home runs. But how they’re doing it has become the biggest storyline in the sport.

Several Yankee sluggers were swinging a new style of bat, dubbed the torpedo bat, on March 29, when the team hit nine home runs, and the trend quickly caught on around the league.

Bat materials and manufacturers may vary, but the design of a baseball bat has remained relatively unchanged. So how does the torpedo bat compare to other game-changing innovations in sports history? Jud Ready, associate director for external engagement at the Institute for Matter and Systems and the creator of the Materials Science and Engineering of Sports course at Georgia Tech, shares his thoughts on the bat and how the Institute is using technology to create change in sports.

News Contact

Steven Gagliano
