Apr. 17, 2024

Computing research at Georgia Tech is getting faster thanks to a new state-of-the-art processing chip named after a female computer programming pioneer.

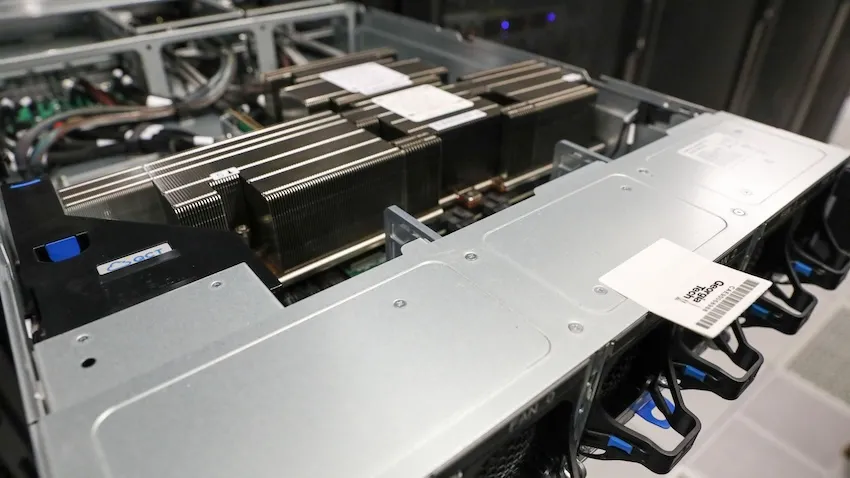

Tech is one of the first research universities in the country to receive the GH200 Grace Hopper Superchip from NVIDIA for testing, study, and research.

Built for large-scale artificial intelligence (AI) and high-performance computing applications, the GH200 targets large language model (LLM) training, recommender systems, graph neural networks, and other tasks.

Alexey Tumanov and Tushar Krishna procured Georgia Tech’s first pair of Grace Hopper chips. Spencer Bryngelson obtained four more GH200s, which will arrive later this month.

“We are excited about this new design that puts everything onto one chip and accessible to both processors,” said Will Powell, a College of Computing research technologist.

“The Superchip’s design increases computation efficiency where data doesn’t have to move as much and all the memory is on the chip.”

A key feature of the new processing chip is that the central processing unit (CPU) and graphics processing unit (GPU) are on the same board.

NVIDIA’s NVLink Chip-2-Chip (C2C) interconnect joins the two units. C2C delivers up to 900 gigabytes per second of total bandwidth, about seven times the bandwidth of the PCIe Gen5 connections used in newer accelerated systems.
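
The “seven times” comparison can be sanity-checked with a little arithmetic. The sketch below is illustrative only and rests on assumptions the article does not state: that the PCIe Gen5 link in question is a 16-lane (x16) slot, that the 900 GB/s figure counts both directions, and that the PCIe number should therefore also be counted in both directions. The function names are made up for this example.

```python
# Rough check of the "seven times PCIe Gen5" comparison.
# Assumptions (not from the article): a x16 PCIe Gen5 link, with both
# interconnects measured as total bandwidth across both directions.

GT_PER_S_PER_LANE = 32             # PCIe Gen5 signaling rate per lane
LANES = 16                         # assumed x16 accelerator slot
ENCODING_EFFICIENCY = 128 / 130    # PCIe Gen5 uses 128b/130b encoding

NVLINK_C2C_GB_PER_S = 900          # total bandwidth quoted in the article


def pcie_gen5_x16_gb_per_s(bidirectional: bool = True) -> float:
    """Approximate usable PCIe Gen5 x16 bandwidth in gigabytes per second."""
    gigabits_per_direction = GT_PER_S_PER_LANE * LANES * ENCODING_EFFICIENCY
    gigabytes_per_direction = gigabits_per_direction / 8  # bits to bytes
    return gigabytes_per_direction * (2 if bidirectional else 1)


def c2c_to_pcie_ratio() -> float:
    """Ratio of the quoted C2C bandwidth to the assumed PCIe Gen5 x16 link."""
    return NVLINK_C2C_GB_PER_S / pcie_gen5_x16_gb_per_s()


if __name__ == "__main__":
    print(f"PCIe Gen5 x16, both directions: {pcie_gen5_x16_gb_per_s():.0f} GB/s")
    print(f"C2C to PCIe ratio: {c2c_to_pcie_ratio():.1f}x")
```

Under these assumptions the PCIe link comes out near 126 GB/s, so the ratio lands close to the article’s figure of seven. Protocol overhead, which this sketch ignores, would lower the usable PCIe bandwidth somewhat.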

As a result, the two components share memory and process data with more speed and better power efficiency. This is the feature Georgia Tech researchers most want to explore.

Tumanov, an assistant professor in the School of Computer Science, and his Ph.D. student Amey Agrawal are testing machine learning (ML) and LLM workloads on the chip. Their work with the GH200 could lead to more sustainable computing methods that keep up with the exponential growth of LLMs.

The advent of household-name LLMs, like ChatGPT and Gemini, pushes the limits of current GPU-based architectures. The chip’s design is meant to overcome known CPU-GPU bandwidth limitations. Tumanov’s group will put that design to the test through their studies.

Krishna is an associate professor in the School of Electrical and Computer Engineering and associate director of the Center for Research into Novel Computing Hierarchies (CRNCH).

His research focuses on optimizing data movement in modern computing platforms, including AI/ML accelerator systems. Ph.D. student Hao Kang uses the GH200 to analyze LLMs exceeding 30 billion parameters. This study will enable labs to explore deep learning optimizations with the new chip.

Bryngelson, an assistant professor in the School of Computational Science and Engineering, will use the chip to compute and simulate fluid and solid mechanics phenomena. His lab can use the CPU to reorder memory and perform disk writes while the GPU does parallel work. This capability is expected to significantly reduce the computational burden for some applications.

“Traditional CPU to GPU communication is slower and introduces latency issues because data passes back and forth over a PCIe bus,” Powell said. “Since they can access each other’s memory and share in one hop, the Superchip’s architecture boosts speed and efficiency.”

Grace Hopper is the inspirational namesake for the chip. She pioneered many developments in computer science that formed the foundation of the field today.

Hopper is credited with creating the first compiler, a program that translates computer source code into a target language. She also helped develop some of the earliest programming languages. Her FLOW-MATIC language strongly influenced COBOL, which is still used today in data processing.

Hopper joined the U.S. Navy Reserve during World War II, where she was assigned to program the Mark I computer. She retired as a rear admiral in August 1986 after 42 years of military service.

Georgia Tech researchers hope to honor Hopper’s legacy by using the technology that bears her name, and her spirit of innovation, to make new discoveries.

“NVIDIA and other vendors show no sign of slowing down refinement of this kind of design, so it is important that our students understand how to get the most out of this architecture,” said Powell.

“Just having all these technologies isn’t enough. People must know how to build applications in their coding that actually benefit from these new architectures. That is the skill.”

News Contact

Bryant Wine, Communications Officer

bryant.wine@cc.gatech.edu